本篇文章将带给各位读者关于 Scrapy 与 MongoDB 的结合,打磨出完美的指纹存储机制,同时也解决了 Redis 内存压力的问题。我们将深入探讨Scrapy-Redis 源码的改造,使其可以根据不同场景进行灵活配置和使用。欢迎各位读者阅读并参与讨论!

特别声明:本公众号文章只作为学术研究,不作为其他不法用途;如有侵权请联系作者删除。

这是「进击的Coder」的第 937 篇技术分享

作者:TheWeiJun

来源:逆向与爬虫的故事

“

阅读本文大概需要 17 分钟。

”立即加星标

每月看好文

目录

一、前言介绍

二、架构梳理

三、源码分析

四、源码重写

五、文章总结

一、前言介绍

在使用 Scrapy-Redis 进行数据采集时,经常会面临着 Redis 内存不足的困扰,特别是当 Redis 中存储的指纹数量过多时,可能导致 Redis 崩溃、指纹丢失,进而影响整个爬虫的稳定性。那么,面对这类问题,我们应该如何应对呢?我将在本文中分享解决方案:通过改造 Scrapy-Redis 源码,引入 MongoDB 持久化存储,从根本上解决了上述问题。敬请关注我的文章,一起探讨这个解决方案的实现过程,以及带来的收益和挑战。

二、架构梳理

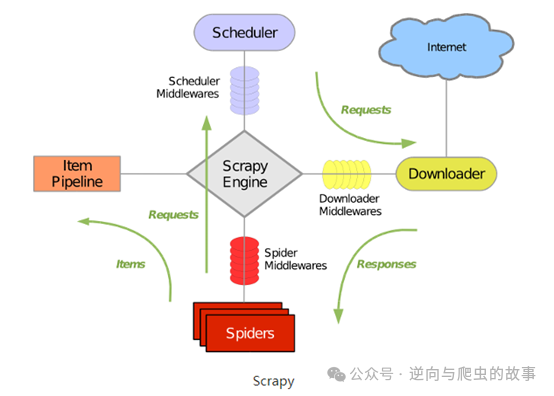

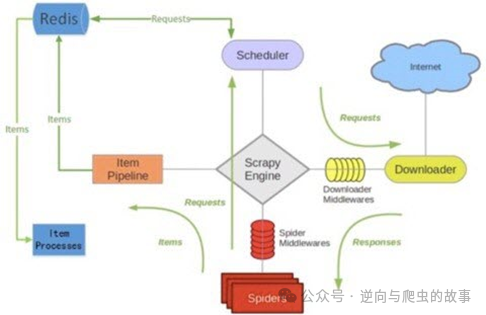

1、进行源码分析之前,我们需要先了解下 scrapy 及 scrapy-redis 的架构图,两者相比,是哪些地方进行了改造?带着这样的疑问,我们来看下两个框架的架构图:

图1(scrapy架构图)

图2(scrapy-redis架构图)

2、拿 图2 同 图1 对比,我们可以看到 scrapy-redis 在 scrapy 的架构上增加了 redis,基于 redis 的特性拓展了如下四种组件:Scheduler,Dupfilter,ItemPipeline,BaseSpider,这也是为什么在 redis 中会生成spider:requests、spider:items、spider:dupfilter 三个 key 的原因。接下来我们进入源码分析环节,来看看 scrapy-redis 如何进行指纹改造吧。

三、源码分析

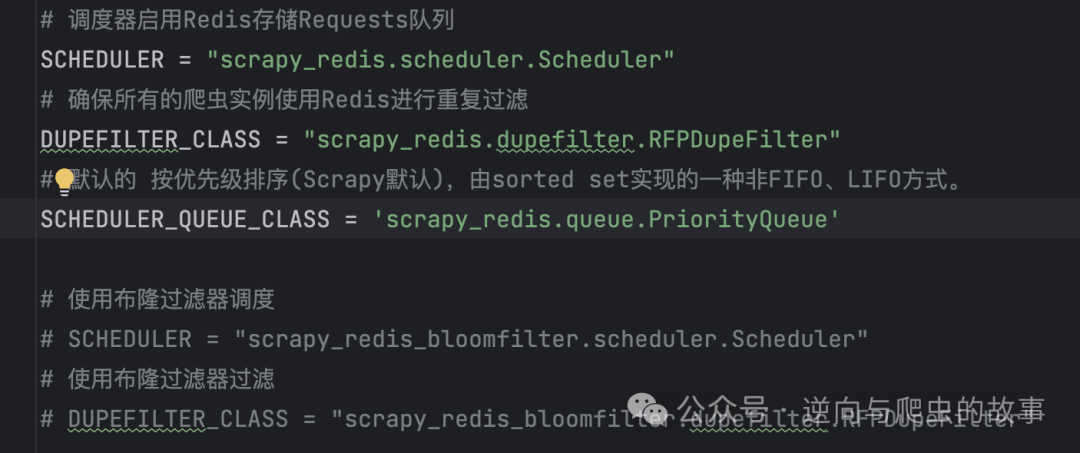

1、分析 scrapy-redis 源码,我们在使用 scrapy-redis 时,在 settings 模块都会进行如下配置:

总结:这里面的三个参数,分别同 redis 进行请求出入、请求指纹、请求优先级交互,如果我们想要修改 redis 指纹模块,那么我们需要对 RFPDupeFilter 模块进行重写,从而结合 mongodb 进行大量指纹存储,接下来进入源码分析环节。

2、阅读分析 RFPDupeFilter 源码,我们先来附上 RFPDupeFilter 完整源码如下:

import logging

import time

from scrapy.dupefilters import BaseDupeFilter

from scrapy.utils.request import request_fingerprint

from . import defaults

from .connection import get_redis_from_settings

logger = logging.getLogger(__name__)

# TODO: Rename class to RedisDupeFilter.

class RFPDupeFilter(BaseDupeFilter):

"""Redis-based request duplicates filter.

This class can also be used with default Scrapy's scheduler.

"""

logger = logger

def __init__(self, server, key, debug=False):

"""Initialize the duplicates filter.

Parameters

----------

server : redis.StrictRedis

The redis server instance.

key : str

Redis key Where to store fingerprints.

debug : bool, optional

Whether to log filtered requests.

"""

self.server = server

self.key = key

self.debug = debug

self.logdupes = True

@classmethod

def from_settings(cls, settings):

"""Returns an instance from given settings.

This uses by default the key ``dupefilter:<timestamp>``. When using the

``scrapy_redis.scheduler.Scheduler`` class, this method is not used as

it needs to pass the spider name in the key.

Parameters

----------

settings : scrapy.settings.Settings

Returns

-------

RFPDupeFilter

A RFPDupeFilter instance.

"""

server = get_redis_from_settings(settings)

# XXX: This creates one-time key. needed to support to use this

# class as standalone dupefilter with scrapy's default scheduler

# if scrapy passes spider on open() method this wouldn't be needed

# TODO: Use SCRAPY_JOB env as default and fallback to timestamp.

key = defaults.DUPEFILTER_KEY % {'timestamp': int(time.time())}

debug = settings.getbool('DUPEFILTER_DEBUG')

return cls(server, key=key, debug=debug)

@classmethod

def from_crawler(cls, crawler):

"""Returns instance from crawler.

Parameters

----------

crawler : scrapy.crawler.Crawler

Returns

-------

RFPDupeFilter

Instance of RFPDupeFilter.

"""

return cls.from_settings(crawler.settings)

def request_seen(self, request):

"""Returns True if request was already seen.

Parameters

----------

request : scrapy.http.Request

Returns

-------

bool

"""

fp = self.request_fingerprint(request)

# This returns the number of values added, zero if already exists.

added = self.server.sadd(self.key, fp)

return added == 0

def request_fingerprint(self, request):

"""Returns a fingerprint for a given request.

Parameters

----------

request : scrapy.http.Request

Returns

-------

str

"""

return request_fingerprint(request)

@classmethod

def from_spider(cls, spider):

settings = spider.settings

server = get_redis_from_settings(settings)

dupefilter_key = settings.get("SCHEDULER_DUPEFILTER_KEY", defaults.SCHEDULER_DUPEFILTER_KEY)

key = dupefilter_key % {'spider': spider.name}

debug = settings.getbool('DUPEFILTER_DEBUG')

return cls(server, key=key, debug=debug)

def close(self, reason=''):

"""Delete data on close. Called by Scrapy's scheduler.

Parameters

----------

reason : str, optional

"""

self.clear()

def clear(self):

"""Clears fingerprints data."""

self.server.delete(self.key)

def log(self, request, spider):

"""Logs given request.

Parameters

----------

request : scrapy.http.Request

spider : scrapy.spiders.Spider

"""

if self.debug:

msg = "Filtered duplicate request: %(request)s"

self.logger.debug(msg, {'request': request}, extra={'spider': spider})

elif self.logdupes:

msg = ("Filtered duplicate request %(request)s"

" - no more duplicates will be shown"

" (see DUPEFILTER_DEBUG to show all duplicates)")

self.logger.debug(msg, {'request': request}, extra={'spider': spider})

self.logdupes = False3、我们对 scrapy-redis dupfilter.py 源码进行分析如下:

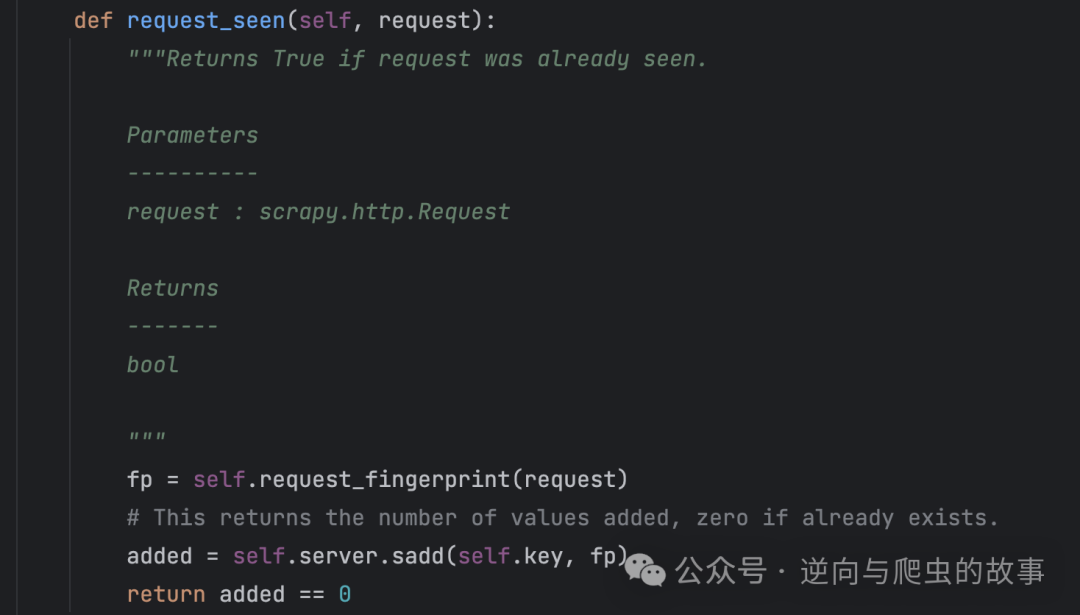

解读:request_seen 方法中的 self.request_fingerprint 方法会对请求指纹进行 sha1 加密运算得到一个 40 位长度的 fp 参数,然后 redis set 会对该指纹进行 add 添加,如果指纹不存在则返回 True,return True==0 则最后结果返回 False,如果指纹存在则返回 True,return False==0 则最后结果返回 True。接下来分析下调度器是如何进行最终指纹判重的!

4、我们分析 Schedulter 源码,查看 Scheduler 对请求进行入队列处理逻辑如下:

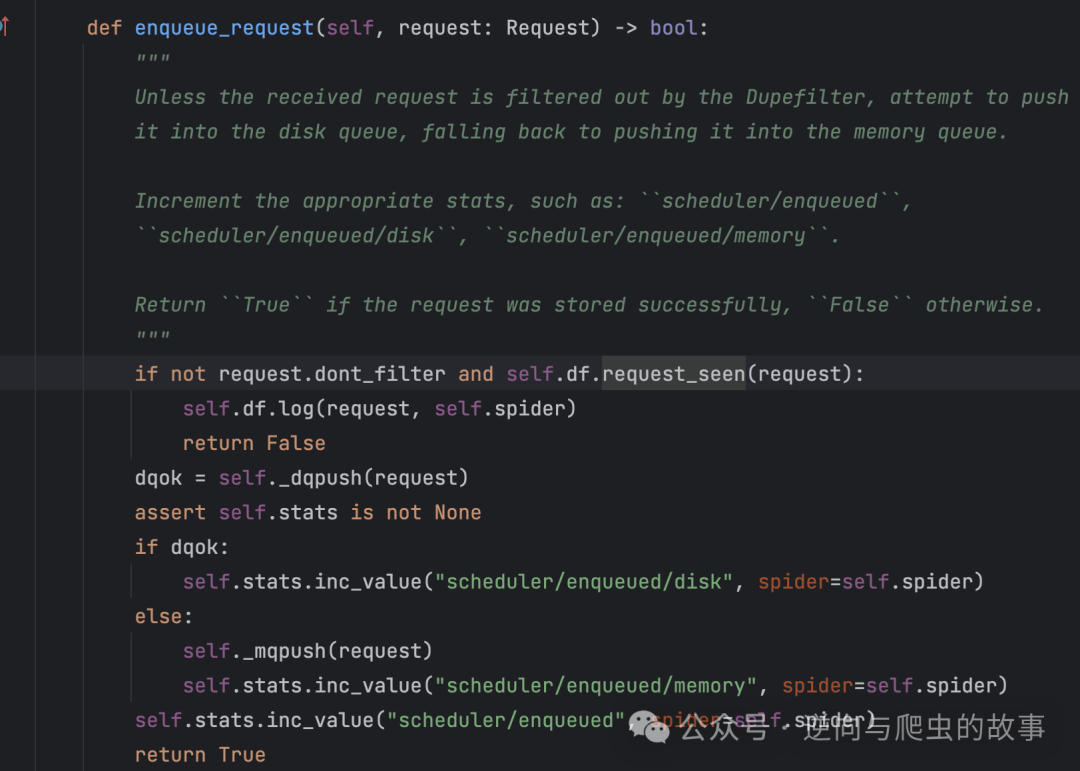

解读:通过分析 enqueue_request 方法,我们可以看到相关逻辑,如果该请求设置为去重并且 request_seen 方法返回为 True,则该请求不入队列;相反该请求需要入队列,并进行相关数据自增统计。

总结:其实分析到这里,我们只需要修改 request_seen 方法,即可完成 scrapy-redis fp 源码改造,通过结合 mongodb,实现各种爬虫 fp 指纹持久化存储;长话短说,接下来进入源码重写环节。

四、源码重写

1、首先我们需要在 settings 里配置 mongodb 相关参数,代码如下:

MONGO_DB = "crawler"

MONGO_URL = "mongodb://localhost:27017"2、紧接着笔者通过继承重写BaseDupeFilter源码,自定义去重模块 MongoRFPDupeFilter 源码如下:

import logging

import time

from pymongo import MongoClient

from scrapy.dupefilters import BaseDupeFilter

from scrapy.utils.request import request_fingerprint

from scrapy_redis import defaults

logger = logging.getLogger(__name__)

class MongoRFPDupeFilter(BaseDupeFilter):

"""Redis-based request duplicates filter.

This class can also be used with default Scrapy's scheduler.

"""

logger = logger

def __init__(self, key, debug=False, settings=None):

self.key = key

self.debug = debug

self.logdupes: bool = True

self.mongo_uri = settings.get('MONGO_URI')

self.mongo_db = settings.get('MONGO_DB')

self.client = MongoClient(self.mongo_uri)

self.db = self.client[self.mongo_db]

self.collection = self.db[self.key]

self.collection.create_index([("_id", 1)])

@classmethod

def from_settings(cls, settings):

key = defaults.DUPEFILTER_KEY % {'timestamp': int(time.time())}

debug = settings.getbool('DUPEFILTER_DEBUG')

return cls(key=key, debug=debug, settings=settings)

@classmethod

def from_crawler(cls, crawler):

"""Returns instance from crawler.

Parameters

----------

crawler : scrapy.crawler.Crawler

Returns

-------

RFPDupeFilter

Instance of RFPDupeFilter.

"""

return cls.from_settings(crawler.settings)

def request_seen(self, request):

"""Returns True if request was already seen.

"""

fp = self.request_fingerprint(request)

# This returns the number of values added, zero if already exists.

if self.collection.find_one({'_id': fp}):

return True

self.collection.insert_one(

{'_id': fp, "crawl_time": time.strftime("%Y-%m-%d")})

return False

def request_fingerprint(self, request):

return request_fingerprint(request)

@classmethod

def from_spider(cls, spider):

settings = spider.settings

dupefilter_key = settings.get("SCHEDULER_DUPEFILTER_KEY", defaults.SCHEDULER_DUPEFILTER_KEY)

key = dupefilter_key % {'spider': spider.name}

debug = settings.getbool('DUPEFILTER_DEBUG')

return cls(key=key, debug=debug, settings=settings)

def close(self, reason=''):

"""Delete data on close. Called by Scrapy's scheduler.

Parameters

----------

reason : str, optional

"""

self.clear()

def clear(self):

"""Clears fingerprints data."""

self.collection.delete(self.key)

def log(self, request, spider):

"""Logs given request.

Parameters

----------

request : scrapy.http.Request

spider : scrapy.spiders.Spider

"""

if self.debug:

msg = "Filtered duplicate request: %(request)s"

self.logger.debug(msg, {'request': request}, extra={'spider': spider})

elif self.logdupes:

msg = ("Filtered duplicate request %(request)s"

" - no more duplicates will be shown"

" (see DUPEFILTER_DEBUG to show all duplicates)")

self.logger.debug(msg, {'request': request}, extra={'spider': spider})

self.logdupes = False3、第三步,我们需要将继承重写的MongoRFPDupeFilter模块配置到settings文件中,代码如下:

# 确保所有的爬虫实例使用Mongodb进行重复过滤

DUPEFILTER_CLASS = "test_scrapy.dupfilter.MongoRFPDupeFilter"4、编写测试爬虫(编写代码环节跳过),直接查看mongdb collection中fp结果,截图如下:

总结:到这里整个流程就结束了,接下来不管我们开发多少个爬虫,都默认使用mongodb对request fp指纹进行存储。最后我们来总结下scrapy-redis同scrapy-mongodb的指纹方式优缺点吧!

scrapy-redis 速度快,但由于指纹过大,内存不足会导致redis宕机,内存昂贵

scrapy+mongo 速度同redis相比,不是很优,优点是能存储大批量指纹,磁盘廉价

五、文章总结

亲爱的读者们,感谢你们与我一同在这个公众号里探索、学习。为了让我们能够更紧密地交流、共同进步,我特意开通了留言功能。这里不仅是一个分享知识的平台,更是一个携手成长的角落。欢迎你们在评论区留下你的学习心得、疑惑或者建议,让我们一起探讨、学习,共同成长。期待在这里,我们能够一起分享智慧的火花,点亮前行的道路。再次感谢你们的陪伴,让我们一起学习,一起成长!

本篇文章分享到这里就结束了,欢迎大家关注下期文章,我们不见不散

点分享

点收藏

点点赞

点在看

2196

2196

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?