备注:

Hive 版本 2.1.1

一.Hive压缩概述

一般在hadoop集群上运行一个MapReduce会有以下步骤:

input-> Map-> shuffle -> reduce -> output

如果我们采用了数据压缩,在map阶段产生的数据大小就会减少,会减少磁盘的IO,同时还能够减少网络的IO。

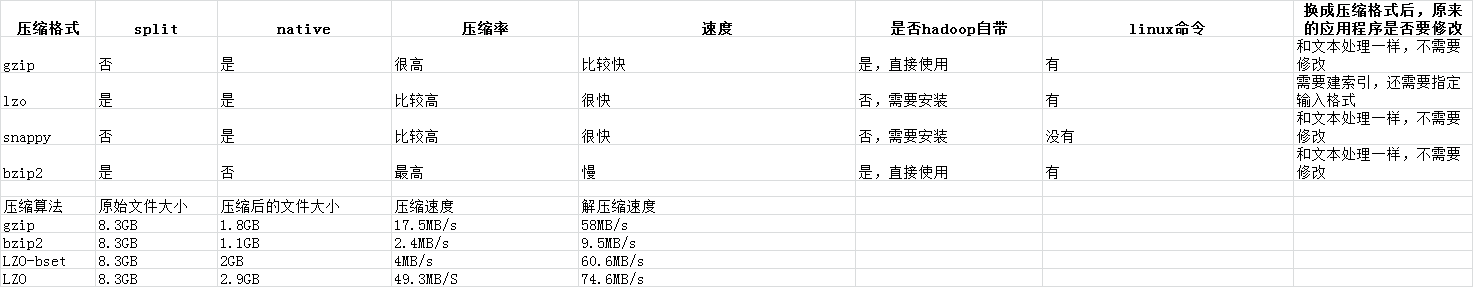

1.1 常见压缩算法比较

1.2 开启压缩

调整参数:

我们可以通过Job history查看每个job运行的时候参数配置,与压缩有关的参数如下:

mapreduce.map.output.compress和mapreduce.output.fileoutputformat.compress

这两个参数可以设置为true或false来控制是否使用压缩算法。

可以通过下面两个参数来配置压缩算法:

mapreduce.map.output.compress.codec和mapreduce.output.fileoutputformat.compress.codec

hadoop支持多种压缩算法,常用的算法对应的类有下面两个:

org.apache.hadoop.io.compress.SnappyCodec和org.apache.hadoop.compress.lzo.LzoCodec

分别对应的是snappy和lzo压缩算法,其中在2013年之前常用lzo压缩算法,目前这经常使用snappy算法。

在Map阶段启用snappy压缩的参数设置为:

mapreduce.map.output.compress=true

mapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.SnappyCodec

在Reduce阶段启用lzo压缩的参数设置为:

mapreduce.output.fileoutputformat.compress=true

mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.compress.lzo.LzoCodec

set hive.exec.compress.intermediate=true; --启用中间数据压缩

SET hive.exec.compress.output=true; -- 启用最终数据输出压缩

修改配置文件:

<property>

<name>hive.exec.compress.intermediate</name>

<value>true</value>

</property>

<property>

<name>hive.exec.compress.output</name>

<value>true</value>

</property>

<!-- map输出压缩 -->

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

<!-- reduce输出压缩 -->

<property>

<name>mapreduce.output.fileoutputformat.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.output.fileoutputformat.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

二.压缩测试

查看Hive的默认参数,可以看到map端默认已经启用了压缩,为snappy

hive>

> set mapreduce.map.output.compress;

mapreduce.map.output.compress=true

hive> set mapreduce.map.output.compress.codec;

mapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.SnappyCodec

hive> set mapreduce.output.fileoutputformat.compress;

mapreduce.output.fileoutputformat.compress=false

hive> set mapreduce.output.fileoutputformat.compress.codec;

mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

hive>

2.1 测试Orc文件压缩格式

代码:

CREATE TABLE compress_test1(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS orc tblproperties ("orc.compress"="SNAPPY");

;

insert into compress_test1 select sale_date,prod_name,total_nums from dm_sale;

CREATE TABLE compress_test2(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS orc;

;

insert into compress_test2 select sale_date,prod_name,total_nums from dm_sale;

测试记录:

可以看到两个测试表执行时间差不多,用snappy存储空间居然增加了20%左右,因为因为原本默认使用的是 ZLIB (接近bzip2)压缩

hive>

> CREATE TABLE compress_test1(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS orc tblproperties ("orc.compress"="SNAPPY");

OK

Time taken: 0.093 seconds

hive>

> insert into compress_test1 select * from dm_sale;

FAILED: SemanticException [Error 10044]: Line 1:12 Cannot insert into target table because column number/types are different 'compress_test1': Table insclause-0 has 3 columns, but query has 4 columns.

hive>

>

> insert into compress_test1 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107113125_6471a05e-04aa-4e65-9979-84604d6accb1

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:31:25 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0052, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0052/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0052

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:31:32,837 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:31:40,033 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 4.76 sec

2021-01-07 11:31:41,060 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 10.07 sec

MapReduce Total cumulative CPU time: 10 seconds 70 msec

Ended Job = job_1609141291605_0052

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

21/01/07 11:31:42 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0053, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0053/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0053

Hadoop job information for Stage-3: number of mappers: 2; number of reducers: 0

2021-01-07 11:31:52,731 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:31:58,908 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.67 sec

MapReduce Total cumulative CPU time: 4 seconds 180 msec

Ended Job = job_1609141291605_0053

Loading data to table test.compress_test1

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 10.07 sec HDFS Read: 388259 HDFS Write: 50078 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 2 Cumulative CPU: 4.18 sec HDFS Read: 119587 HDFS Write: 49915 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 14 seconds 250 msec

OK

Time taken: 36.078 seconds

hive> CREATE TABLE compress_test2(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS orc;

OK

Time taken: 0.086 seconds

hive> ;

hive> insert into compress_test2 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107113231_3b12bc61-3ff7-4182-ba14-08e1b5152894

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:32:32 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0054, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0054/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0054

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:32:38,999 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:32:46,196 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 9.79 sec

MapReduce Total cumulative CPU time: 9 seconds 790 msec

Ended Job = job_1609141291605_0054

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

21/01/07 11:32:47 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0055, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0055/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0055

Hadoop job information for Stage-3: number of mappers: 2; number of reducers: 0

2021-01-07 11:32:58,918 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:33:05,090 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 3.42 sec

MapReduce Total cumulative CPU time: 3 seconds 420 msec

Ended Job = job_1609141291605_0055

Loading data to table test.compress_test2

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 9.79 sec HDFS Read: 388417 HDFS Write: 40242 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 2 Cumulative CPU: 3.42 sec HDFS Read: 106505 HDFS Write: 40079 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 13 seconds 210 msec

OK

Time taken: 35.818 seconds

hive>

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test1 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

49915

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test2 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

40079

[root@hp1 ~]#

下面我们测试下不带压缩的orc文件格式

代码:

CREATE TABLE compress_test3(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS orc tblproperties ("orc.compress"="NONE");

;

insert into compress_test3 select sale_date,prod_name,total_nums from dm_sale;

测试记录:

可以看到未压缩存储空间差不多是bzip2压缩格式的2倍

hive>

>

> CREATE TABLE compress_test3(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS orc tblproperties ("orc.compress"="NONE");

OK

Time taken: 0.087 seconds

hive> ;

hive> insert into compress_test3 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107115133_3d87f50b-6d9a-4057-9e2d-a955545c77d8

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:51:34 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0056, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0056/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0056

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:51:41,090 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:51:48,291 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 4.86 sec

2021-01-07 11:51:49,320 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 9.0 sec

MapReduce Total cumulative CPU time: 9 seconds 0 msec

Ended Job = job_1609141291605_0056

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

21/01/07 11:51:50 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0057, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0057/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0057

Hadoop job information for Stage-3: number of mappers: 2; number of reducers: 0

2021-01-07 11:52:01,020 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:52:07,184 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 3.3 sec

MapReduce Total cumulative CPU time: 3 seconds 300 msec

Ended Job = job_1609141291605_0057

Loading data to table test.compress_test3

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 9.0 sec HDFS Read: 388457 HDFS Write: 83344 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 2 Cumulative CPU: 3.3 sec HDFS Read: 152813 HDFS Write: 83181 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 12 seconds 300 msec

OK

Time taken: 34.835 seconds

hive>

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test1 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

49915

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test2 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

40079

[root@hp1 ~]#

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test3 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

83181

[root@hp1 ~]#

2.2 textfile文件格式测试

代码:

CREATE TABLE compress_test1(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS textfile tblproperties ("orc.compress"="SNAPPY");

insert into compress_test1 select sale_date,prod_name,total_nums from dm_sale;

CREATE TABLE compress_test2(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS textfile;

insert into compress_test2 select sale_date,prod_name,total_nums from dm_sale;

CREATE TABLE compress_test3(

sale_date string,

prod_name string,

total_nums bigint)

STORED AS textfile tblproperties ("orc.compress"="NONE");

insert into compress_test3 select sale_date,prod_name,total_nums from dm_sale;

测试记录:

textfile默认是不做压缩的

hive>

> CREATE TABLE compress_test1(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS textfile tblproperties ("orc.compress"="SNAPPY");

OK

Time taken: 0.085 seconds

hive> ;

hive> insert into compress_test1 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107115646_b3ccf723-8def-42be-a13c-7c171e173213

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:56:46 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0058, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0058/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0058

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:56:53,501 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:57:00,700 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 7.98 sec

MapReduce Total cumulative CPU time: 7 seconds 980 msec

Ended Job = job_1609141291605_0058

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

Launching Job 3 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:57:01 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0059, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0059/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0059

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 0

2021-01-07 11:57:12,761 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:57:18,948 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.83 sec

MapReduce Total cumulative CPU time: 1 seconds 830 msec

Ended Job = job_1609141291605_0059

Loading data to table test.compress_test1

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 7.98 sec HDFS Read: 387993 HDFS Write: 292661 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 1 Cumulative CPU: 1.83 sec HDFS Read: 295269 HDFS Write: 292500 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 810 msec

OK

Time taken: 34.22 seconds

hive>

> CREATE TABLE compress_test2(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS textfile;

OK

Time taken: 0.062 seconds

hive> insert into compress_test2 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107115729_adfdcf1d-d4aa-47a7-9f2f-0db35e753505

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:57:29 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0060, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0060/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0060

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:57:36,513 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:57:42,693 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 3.55 sec

2021-01-07 11:57:43,720 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 8.09 sec

MapReduce Total cumulative CPU time: 8 seconds 90 msec

Ended Job = job_1609141291605_0060

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

Launching Job 3 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:57:44 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0061, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0061/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0061

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 0

2021-01-07 11:57:55,724 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:58:01,900 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.8 sec

MapReduce Total cumulative CPU time: 1 seconds 800 msec

Ended Job = job_1609141291605_0061

Loading data to table test.compress_test2

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 8.09 sec HDFS Read: 387941 HDFS Write: 292661 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 1 Cumulative CPU: 1.8 sec HDFS Read: 295238 HDFS Write: 292500 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 890 msec

OK

Time taken: 34.102 seconds

hive>

> CREATE TABLE compress_test3(

> sale_date string,

> prod_name string,

> total_nums bigint)

> STORED AS textfile tblproperties ("orc.compress"="NONE");

OK

Time taken: 0.082 seconds

hive> insert into compress_test3 select sale_date,prod_name,total_nums from dm_sale;

Query ID = root_20210107115814_bda3951d-f323-44e9-a5b0-60f8a852dc19

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:58:14 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0062, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0062/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0062

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 0

2021-01-07 11:58:21,413 Stage-1 map = 0%, reduce = 0%

2021-01-07 11:58:27,591 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 3.49 sec

2021-01-07 11:58:28,620 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 8.11 sec

MapReduce Total cumulative CPU time: 8 seconds 110 msec

Ended Job = job_1609141291605_0062

Stage-4 is filtered out by condition resolver.

Stage-3 is selected by condition resolver.

Stage-5 is filtered out by condition resolver.

Launching Job 3 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

21/01/07 11:58:29 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm69

Starting Job = job_1609141291605_0063, Tracking URL = http://hp3:8088/proxy/application_1609141291605_0063/

Kill Command = /opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/lib/hadoop/bin/hadoop job -kill job_1609141291605_0063

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 0

2021-01-07 11:58:40,583 Stage-3 map = 0%, reduce = 0%

2021-01-07 11:58:46,772 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.85 sec

MapReduce Total cumulative CPU time: 1 seconds 850 msec

Ended Job = job_1609141291605_0063

Loading data to table test.compress_test3

MapReduce Jobs Launched:

Stage-Stage-1: Map: 2 Cumulative CPU: 8.11 sec HDFS Read: 387989 HDFS Write: 292661 HDFS EC Read: 0 SUCCESS

Stage-Stage-3: Map: 1 Cumulative CPU: 1.85 sec HDFS Read: 295267 HDFS Write: 292500 HDFS EC Read: 0 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 960 msec

OK

Time taken: 34.061 seconds

hive>

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test1 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

292500

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test2 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

292500

[root@hp1 ~]# hadoop fs -ls /user/hive/warehouse/test.db/compress_test3 |awk -F ' ' '{print $5}'|awk '{a+=$1}END{print a}'

292500

[root@hp1 ~]#

参考:

1.https://zhuanlan.zhihu.com/p/59767485

2.https://blog.csdn.net/caizhengwu/article/details/89218799

2685

2685

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?