Configuration reference: https://banzaicloud.com/docs/one-eye/logging-operator/configuration/fluentd/

GitHub: https://github.com/banzaicloud/logging-operator/

Official demo: https://banzaicloud.com/docs/one-eye/logging-operator/quickstarts/es-nginx/

logging-operator plugins: https://banzaicloud.com/docs/one-eye/logging-operator/configuration/plugins/

Further reading 1: https://jishuin.proginn.com/p/763bfbd572ae

Further reading 2: https://blog.csdn.net/tao12345666333/article/details/116178235

Further reading 3: https://www.jianshu.com/p/9c18c4a2b7ed

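Prerequisites

k8s 1.23

helm 3.8

Persistent volumes

- Fluent Operator vs. logging-operator

Both can deploy Fluent Bit and Fluentd automatically. logging-operator requires Fluent Bit and Fluentd to be deployed together, whereas Fluent Operator treats them as pluggable, loosely coupled components: you can deploy only Fluentd or only Fluent Bit as needed, which is more flexible.

In logging-operator, every log collected by Fluent Bit must pass through Fluentd before it reaches its final destination, so under heavy log volume Fluentd can become a single point of failure. In Fluent Operator, Fluent Bit can send logs directly to the destination, which avoids that risk.

logging-operator defines five CRDs (loggings, outputs, flows, clusteroutputs, and clusterflows), while Fluent Operator defines 13. Fluent Operator's CRDs are therefore more fine-grained, and users can configure Fluentd and Fluent Bit more flexibly. Its CRD names also follow the naming used in Fluentd and Fluent Bit configuration, so they stay close to the native component definitions.

Both borrow from Fluentd's label router plugin to isolate logs between tenants.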

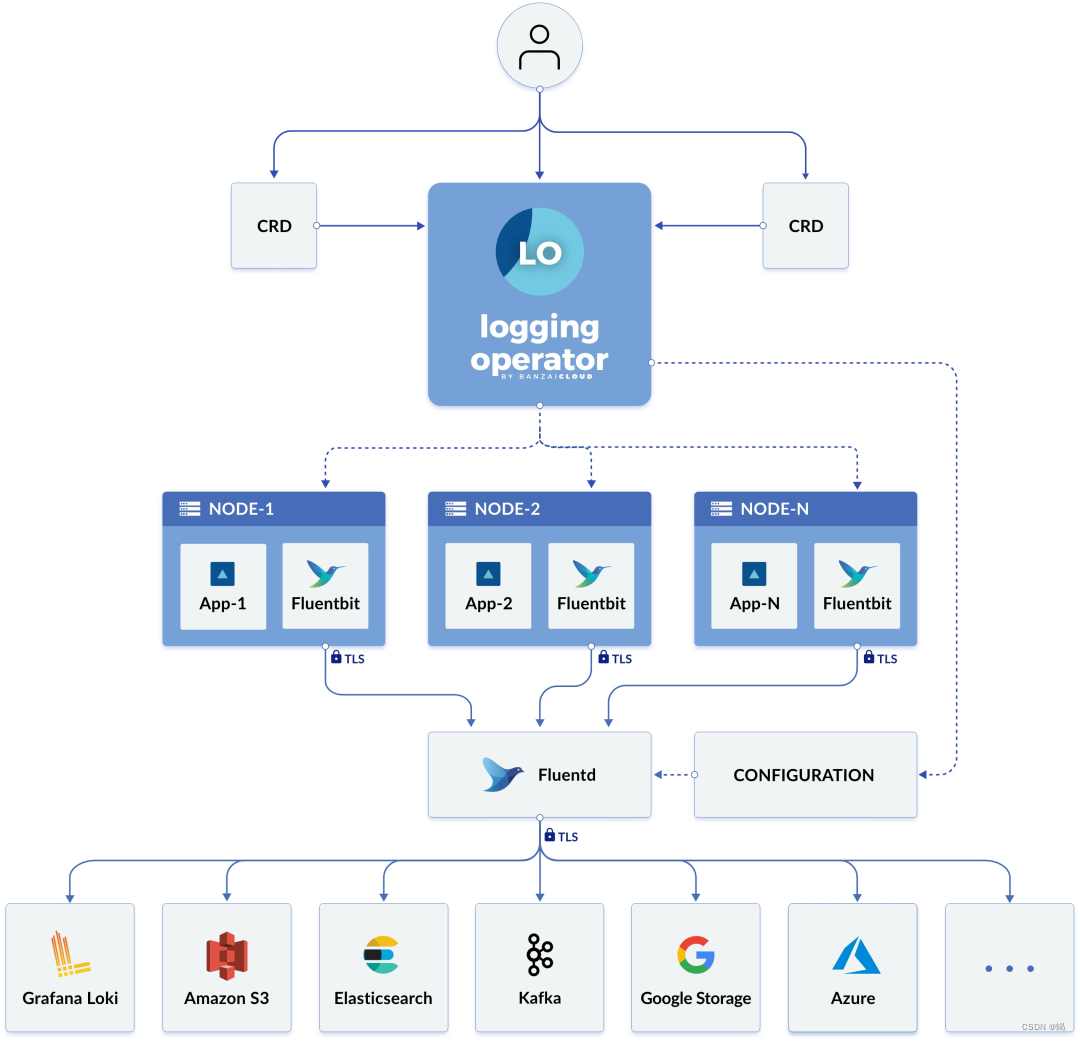

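- logging-operator architecture diagram

- logging-operator has only five core CRDs:

logging: defines the base configuration of the log collector (Fluent Bit) and the log forwarder (Fluentd);

flow: defines namespace-level rules for filtering, parsing, and routing logs;

clusterflow: defines cluster-level rules for filtering, parsing, and routing logs;

output: defines a namespace-level log destination and its parameters;

clusteroutput: defines a cluster-level log destination and its parameters; flows in other namespaces can reference it.

- Install with Helm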

helm repo add banzaicloud-stable https://kubernetes-charts.banzaicloud.com

# Refresh the repository index
helm repo update

# Set variables
pro=logging-operator
chart_version=3.17.5
mkdir -p /data/$pro
cd /data/$pro

# Download the chart
helm pull banzaicloud-stable/$pro --version=$chart_version

# Extract values.yaml
tar zxvf $pro-$chart_version.tgz --strip-components 1 $pro/values.yaml

# Write the install script ($pro and $chart_version are expanded now because EOF is unquoted)
cat > /data/$pro/start.sh << EOF
helm upgrade --install --wait $pro $pro-$chart_version.tgz \
  --create-namespace \
  -f values.yaml \
  -n logging
EOF

bash /data/logging-operator/start.sh
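The commands in this post use both `<< EOF` and `<< 'EOF'`, and the difference matters: an unquoted delimiter lets the shell expand `$pro` and `$chart_version` while the file is written, whereas a quoted delimiter keeps text such as `${namespace_name}` literal. A minimal sketch of the two behaviours (the function names are hypothetical and exist only for illustration):

```shell
pro=logging-operator
chart_version=3.17.5

# Unquoted delimiter: variables are expanded when the heredoc is read.
render_unquoted() {
  cat << EOF
helm upgrade --install $pro $pro-$chart_version.tgz
EOF
}

# Quoted delimiter: the body is copied verbatim, nothing is expanded.
render_quoted() {
  cat << 'EOF'
format: ${namespace_name}.${pod_name}
EOF
}

render_unquoted
render_quoted
```

This is why start.sh is written with `<< EOF`, while the YAML manifests, which contain `${...}` placeholders meant for Fluentd, are written with `<< 'EOF'`.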

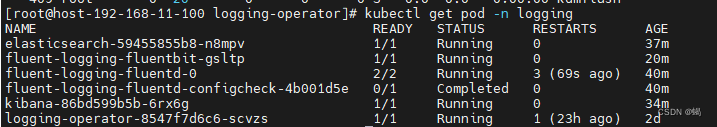

# kubectl get pod -n logging

NAMESPACE NAME READY STATUS RESTARTS AGE

logging logging-operator-8547f7d6c6-2sk8w 1/1 Running 0 13m

# kubectl get crds|grep logging

clusterflows.logging.banzaicloud.io 2022-05-08T12:57:37Z

clusteroutputs.logging.banzaicloud.io 2022-05-08T12:57:37Z

eventtailers.logging-extensions.banzaicloud.io 2022-05-08T12:57:37Z

flows.logging.banzaicloud.io 2022-05-08T12:57:37Z

hosttailers.logging-extensions.banzaicloud.io 2022-05-08T12:57:37Z

loggings.logging.banzaicloud.io 2022-05-08T12:57:37Z

outputs.logging.banzaicloud.io 2022-05-08T12:57:37Z

- Configure a flow or clusterflow: a flow applies to a single namespace, while a clusterflow applies cluster-wide

cat > /data/logging-operator/clusterflow.yaml << 'EOF'
apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterFlow
metadata:
  name: clusterflow
spec:
  filters:
    - parser:
        remove_key_name_field: true
        parse:
          type: nginx
    - tag_normaliser:
        format: ${namespace_name}.${pod_name}.${container_name}
  match:
    - exclude:
        labels:
          component: reloader
        namespaces:
          - stage
    - select:
        labels:
          app: nginx
        namespaces:
          - default
          - prod
          - dev
          - test

EOF
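The `match` section reads as an ordered rule list. The sketch below assumes that entries are evaluated top to bottom, that the first entry whose labels and namespaces both fit decides the outcome, and that a record fitting no entry is not routed. It also assumes each record carries a single `key=value` label and that the exclude rule keys on a `component: reloader` label. `in_list` and `route` are hypothetical helpers, not part of logging-operator:

```shell
# Succeed if $1 equals one of the remaining arguments.
in_list() {
  needle=$1; shift
  for item in "$@"; do
    [ "$item" = "$needle" ] && return 0
  done
  return 1
}

# Print "keep" or "drop" for a record from namespace $1 with label $2.
route() {
  ns=$1; label=$2
  # Entry 1 (exclude): reloader pods in the stage namespace.
  if [ "$label" = "component=reloader" ] && in_list "$ns" stage; then
    echo drop; return
  fi
  # Entry 2 (select): nginx pods in the listed namespaces.
  if [ "$label" = "app=nginx" ] && in_list "$ns" default prod dev test; then
    echo keep; return
  fi
  # No entry matched.
  echo drop
}

route default app=nginx
route stage app=nginx
```

Under these assumptions, nginx pods in `stage` are dropped simply because no select entry lists that namespace, not because of the exclude entry.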

Hands-on

1. Prepare nginx

2. Prepare elasticsearch

3. Install logging-operator with Helm

4. Configure logging, flow, output, clusteroutput, clusterflow, HostTailer, EventTailer, and so on.

5. Verify

- Deploy nginx

cat > /data/logging-operator/nginx.yaml << 'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: banzaicloud/log-generator:0.3.2
          ports:
            - containerPort: 80
---
kind: Service
apiVersion: v1
metadata:
  name: nginx-service
  namespace: default
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
EOF

kubectl apply -f /data/logging-operator/nginx.yaml

- Deploy elasticsearch

mkdir -p /var/lib/container/elasticsearch/data \
  && chmod 777 /var/lib/container/elasticsearch/data

cat > /data/logging-operator/elasticsearch.yaml << 'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: elasticsearch-password
  namespace: logging
data:
  ES_PASSWORD: RWxhc3RpY3NlYXJjaDJPMjE=
type: Opaque
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      volumes:
        - name: elasticsearch-data
          hostPath:
            path: /var/lib/container/elasticsearch/data
        - name: localtime
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
      containers:
        - env:
            - name: TZ
              value: Asia/Shanghai
            - name: xpack.security.enabled
              value: "true"
            - name: discovery.type
              value: single-node
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
            - name: ELASTIC_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-password
                  key: ES_PASSWORD
          name: elasticsearch
          image: elasticsearch:7.13.1
          imagePullPolicy: Always
          ports:
            - containerPort: 9200
            - containerPort: 9300
          resources:
            requests:
              memory: 1000Mi
              cpu: 200m
            limits:
              memory: 1000Mi
              cpu: 500m
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
            - name: localtime
              mountPath: /etc/localtime
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch
  namespace: logging
spec:
  ports:
    - port: 9200
      protocol: TCP
      targetPort: 9200
  selector:
    app: elasticsearch
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      # nodeSelector:
      #   es: log
      containers:
        - name: kibana
          image: kibana:7.13.1
          #image: docker.elastic.co/kibana/kibana:7.13.1
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 1000m
          env:
            - name: TZ
              value: Asia/Shanghai
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch:9200
            - name: ELASTICSEARCH_USERNAME
              value: elastic
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-password
                  key: ES_PASSWORD
          ports:
            - containerPort: 5601
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  ports:
    - port: 5601
      nodePort: 5601 # outside the default NodePort range (30000-32767); the API server's --service-node-port-range must include it
  type: NodePort
  selector:
    app: kibana
EOF

kubectl apply -f /data/logging-operator/elasticsearch.yaml
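The `ES_PASSWORD` value in the Secret is only base64-encoded, not encrypted. A small sketch for producing and checking such values (`encode_secret` and `decode_secret` are hypothetical helper names; `printf '%s'` is used so that no trailing newline ends up in the password):

```shell
# Encode a plain-text value for the data: section of a Secret.
encode_secret() { printf '%s' "$1" | base64; }

# Decode a value copied from the data: section of a Secret.
decode_secret() { printf '%s' "$1" | base64 --decode; }

decode_secret 'RWxhc3RpY3NlYXJjaDJPMjE='; echo
```

Encoding with a plain `echo` instead of `printf '%s'` would append a newline to the stored password, which is an easy way to end up with authentication failures against Elasticsearch.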

- Configure logging, flow, output, and so on.

cat > /data/logging-operator/es-output.yaml << 'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: elasticsearch-password
  namespace: default
data:
  ES_PASSWORD: RWxhc3RpY3NlYXJjaDJPMjE=
type: Opaque
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: Output
metadata:
  name: es-output
  namespace: default
spec:
  elasticsearch:
    host: elasticsearch.logging.svc.cluster.local
    port: 9200
    scheme: http
    #ssl_verify: false
    #ssl_version: TLSv1_2
    buffer:
      timekey: 1m
      timekey_wait: 30s
      timekey_use_utc: true
    logstash_format: true
    logstash_prefix: nginx # index name prefix
    user: elastic
    password:
      valueFrom:
        secretKeyRef:
          name: elasticsearch-password
          key: ES_PASSWORD
EOF

kubectl apply -f /data/logging-operator/es-output.yaml
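With `timekey: 1m` and `timekey_wait: 30s`, Fluentd groups records into one-minute chunks and flushes each chunk 30 seconds after its time window closes. A minimal sketch of that arithmetic in epoch seconds (`flush_epoch` is a hypothetical helper used only for illustration):

```shell
# Print the earliest flush time (epoch seconds) for a record with
# timestamp $1, given timekey $2 and timekey_wait $3, both in seconds.
flush_epoch() {
  ts=$1; timekey=$2; wait_s=$3
  echo $(( (ts / timekey + 1) * timekey + wait_s ))
}

# A record at t=125s falls into the window [120,180), so it is flushed at 210.
flush_epoch 125 60 30
```

In practice this means a log line can take up to about 90 seconds to show up in Elasticsearch with these settings.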

cat > /data/logging-operator/es-flow.yaml << 'EOF'
apiVersion: logging.banzaicloud.io/v1beta1
kind: Flow
metadata:
  name: flow
  namespace: default
spec:
  filters:
    - tag_normaliser: {}
    - parser:
        remove_key_name_field: true
        reserve_data: true
        parse:
          type: nginx
  localOutputRefs:
    - es-output
  match:
    - select:
        labels:
          app: nginx
EOF

kubectl apply -f /data/logging-operator/es-flow.yaml

cat > /data/logging-operator/config.yaml << 'EOF'
apiVersion: logging.banzaicloud.io/v1beta1
kind: Logging
metadata:
  name: fluent-logging
spec:
  fluentd:
    disablePvc: true
    # bufferStorageVolume:
    #   hostPath:
    #     path: ""
    #   pvc:
    #     spec:
    #       accessModes:
    #         - ReadWriteOnce
    #       resources:
    #         requests:
    #           storage: 50Gi
    #       storageClassName: csi-rbd
    #       volumeMode: Filesystem
    scaling:
      # replicas: 3
      drain:
        enabled: true
        image:
          repository: ghcr.io/banzaicloud/fluentd-drain-watch
          tag: latest
    livenessProbe:
      periodSeconds: 60
      initialDelaySeconds: 600
      exec:
        command:
          - "/bin/sh"
          - "-c"
          - >
            LIVENESS_THRESHOLD_SECONDS=${LIVENESS_THRESHOLD_SECONDS:-300};
            if [ ! -e /buffers ];
            then
            exit 1;
            fi;
            touch -d "${LIVENESS_THRESHOLD_SECONDS} seconds ago" /tmp/marker-liveness;
            if [ -z "$(find /buffers -type d -newer /tmp/marker-liveness -print -quit)" ];
            then
            exit 1;
            fi;
    fluentOutLogrotate:
      enabled: true
      path: /fluentd/log/out
      age: "10"
      size: "10485760"
    image:
      repository: banzaicloud/fluentd
      tag: v1.10.4-alpine-1
      pullPolicy: IfNotPresent
    configReloaderImage:
      repository: jimmidyson/configmap-reload
      tag: v0.4.0
      pullPolicy: IfNotPresent
    bufferVolumeImage:
      repository: quay.io/prometheus/node-exporter
      tag: v1.1.2
      pullPolicy: IfNotPresent
    # logLevel: debug
    security:
      roleBasedAccessControlCreate: true
    readinessDefaultCheck:
      bufferFileNumber: true
      bufferFileNumberMax: 5000
      bufferFreeSpace: true
      bufferFreeSpaceThreshold: 90
      failureThreshold: 1
      initialDelaySeconds: 5
      periodSeconds: 30
      successThreshold: 3
      timeoutSeconds: 3
  fluentbit:
    filterKubernetes:
      Kube_URL: "https://kubernetes.default.svc:443"
      Use_Kubelet: "false"
      tls.verify: "false"
      Kube_CA_File: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      Kube_Token_File: /var/run/secrets/kubernetes.io/serviceaccount/token
      Match: kubernetes.*
      Kube_Tag_Prefix: kubernetes.var.log.containers
      Merge_Log: "false"
      Merge_Log_Trim: "true"
      Kubelet_Port: "10250"
    inputTail:
      Skip_Long_Lines: "true"
      Parser: cri
      Refresh_Interval: "60"
      Rotate_Wait: "5"
      Mem_Buf_Limit: "128M"
      Docker_Mode: "false"
      Tag: "kubernetes.*"
    bufferStorage:
      storage.backlog.mem_limit: 10M
      storage.path: /var/log/log-buffer
    bufferStorageVolume:
      hostPath:
        path: "/var/log/log-buffer"
    positiondb:
      hostPath:
        path: "/var/log/positiondb"
    image:
      repository: fluent/fluent-bit
      tag: 1.8.15-debug
      pullPolicy: IfNotPresent
    enableUpstream: false
    logLevel: debug
    network:
      dnsPreferIpv4: true
      connectTimeout: 30
      keepaliveIdleTimeout: 60
      keepalive: true
    tls:
      enabled: false
    tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    security:
      podSecurityPolicyCreate: true
      roleBasedAccessControlCreate: true
      securityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
      podSecurityContext:
        fsGroup: 101
    forwardOptions:
      Require_ack_response: true
    metrics:
      interval: 60s
      path: /api/v1/metrics/prometheus
      port: 2020
      serviceMonitor: false
  controlNamespace: logging
  watchNamespaces: ["default","kube-system","logging"]
EOF

kubectl apply -f /data/logging-operator/config.yaml

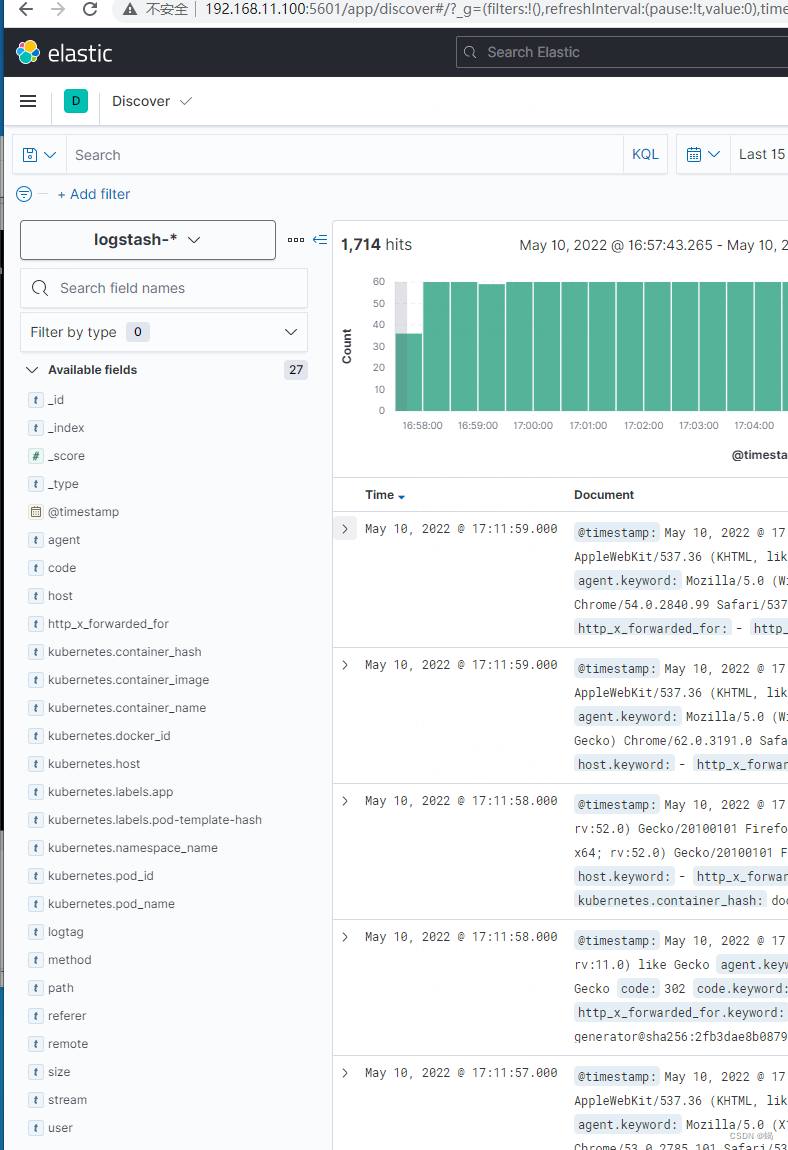

- Verify

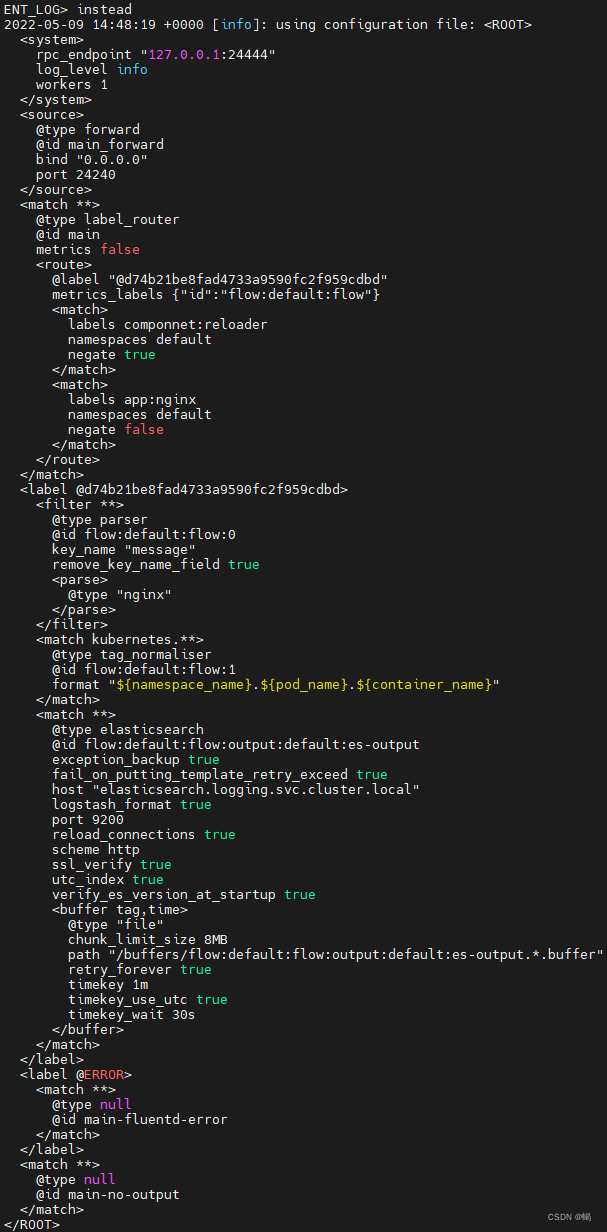

kubectl logs -f -n logging fluent-logging-fluentd-configcheck-31c22f37

为logging-operator的CRDS生成的fluentd配置

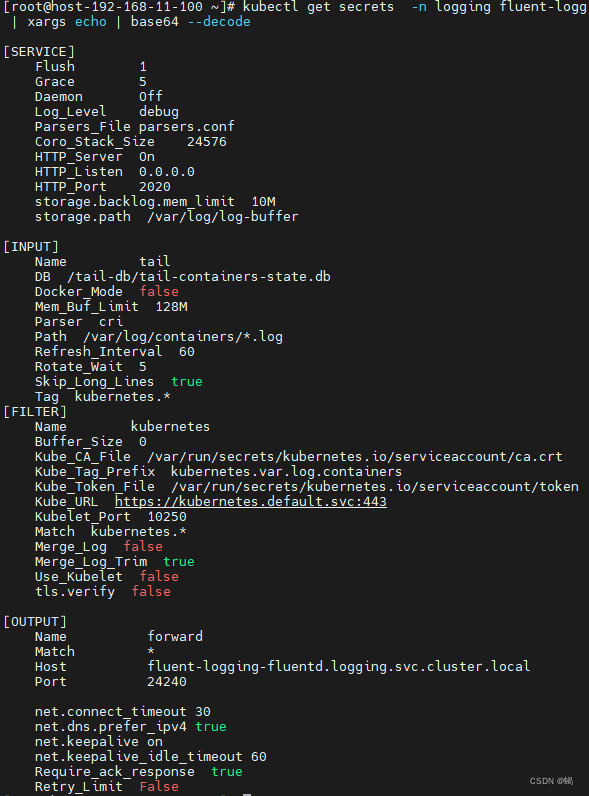

为logging-operator的CRDS生成的fluent-bit配置

#安装jq

rpm -ivh http://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

yum install jq -y

kubectl get secrets -n logging fluent-logging-fluentbit -o json | jq '.data."fluent-bit.conf"' | xargs echo | base64 --decode

把secrets通过base64decode后

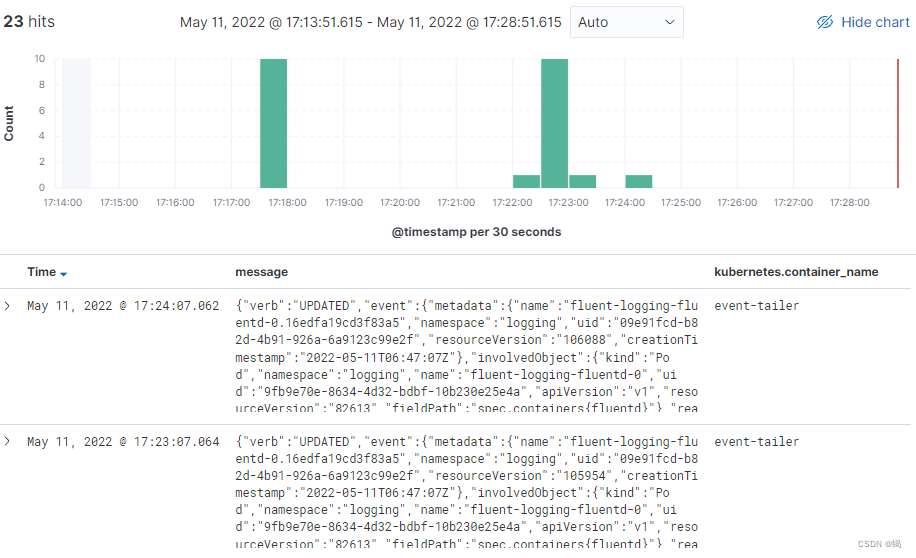

- Event Tailer

https://banzaicloud.com/docs/one-eye/logging-operator/configuration/extensions/kubernetes-event-tailer/

apiVersion: logging-extensions.banzaicloud.io/v1alpha1
kind: EventTailer
metadata:
  name: event
  namespace: logging
spec:
  controlNamespace: logging
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: Flow
metadata:
  name: event-flow
  namespace: logging
spec:
  filters:
    - tag_normaliser: {}
    - parser:
        parse:
          type: json
  match:
    - select:
        labels:
          app.kubernetes.io/name: event-tailer
  globalOutputRefs:
    - global-event-output
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterOutput
metadata:
  name: global-event-output
  namespace: logging
spec:
  enabledNamespaces: ["default","logging"]
  elasticsearch:
    host: elasticsearch.logging.svc.cluster.local
    port: 9200
    scheme: http
    #ssl_verify: false
    #ssl_version: TLSv1_2
    user: elastic
    logstash_format: true
    logstash_prefix: event # index name prefix
    password:
      valueFrom:
        secretKeyRef:
          name: elasticsearch-password
          key: ES_PASSWORD
    buffer:
      timekey: 1m
      timekey_wait: 30s
      timekey_use_utc: true

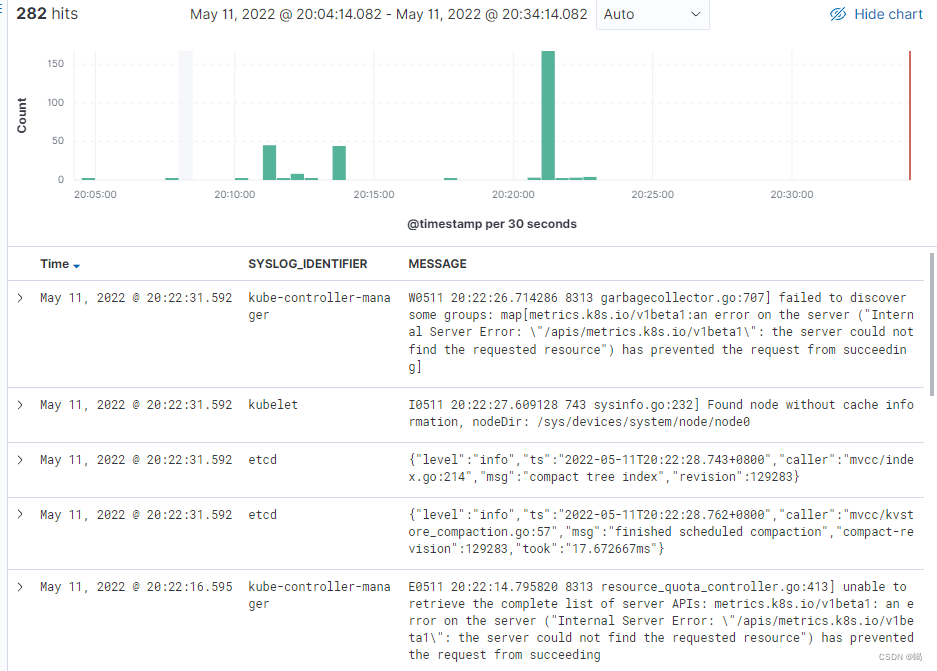

- Host Tailer

https://banzaicloud.com/docs/one-eye/logging-operator/configuration/extensions/kubernetes-host-tailer/

apiVersion: logging-extensions.banzaicloud.io/v1alpha1
kind: HostTailer
metadata:
  name: systemd-hosttailer
  namespace: logging
spec:
  systemdTailers:
    - name: systemd-tailer
      disabled: false
      maxEntries: 100
      path: "/run/log/journal" # must be set explicitly or the tailer fails to start; use /var/log/journal or /run/log/journal
      #systemdFilter: kubelet.service # to ingest a single unit only, name its systemd service here; by default all units are collected
      containerOverrides:
        image: fluent/fluent-bit:1.8.15-debug
  fileTailers:
    - name: message-tail
      path: /var/log/messages
      buffer_max_size: 64k # must be changed from the default or the tailer fails to start
      disabled: false
      skip_long_lines: "true"
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: Flow
metadata:
  name: hosttailer-flow
  namespace: logging
spec:
  filters:
    - tag_normaliser: {}
    - parser:
        parse:
          type: json
  match:
    - select:
        labels:
          app.kubernetes.io/name: host-tailer
  globalOutputRefs:
    - global-host-output
---
apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterOutput
metadata:
  name: global-host-output
  namespace: logging
spec:
  enabledNamespaces: ["default","logging"]
  elasticsearch:
    host: elasticsearch.logging.svc.cluster.local
    port: 9200
    scheme: http
    #ssl_verify: false
    #ssl_version: TLSv1_2
    user: elastic
    logstash_format: true
    logstash_prefix: hosttailer
    password:
      valueFrom:
        secretKeyRef:
          name: elasticsearch-password
          key: ES_PASSWORD
    buffer:
      timekey: 1m
      timekey_wait: 30s
      timekey_use_utc: true
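The `path` of the systemd tailer has to match where journald actually writes on the node: `/var/log/journal` when the journal is persistent, `/run/log/journal` when it is volatile. A small sketch for checking this on a node before editing the HostTailer manifest (`journal_dir` is a hypothetical helper):

```shell
# Print the first argument that is an existing directory; fail if none is.
journal_dir() {
  for d in "$@"; do
    if [ -d "$d" ]; then
      printf '%s\n' "$d"
      return 0
    fi
  done
  return 1
}

journal_dir /var/log/journal /run/log/journal || echo "no journal directory found"
```

Whichever directory it prints is the value to put into `path`.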

- Configure a clusteroutput

apiVersion: logging.banzaicloud.io/v1beta1
kind: ClusterOutput
metadata:
  name: global-es-output
  namespace: logging # place the ClusterOutput in the logging namespace
spec:
  enabledNamespaces: ["default","logging"] # only the listed namespaces may use this output
  elasticsearch:
    host: elasticsearch.logging.svc.cluster.local
    port: 9200
    scheme: http
    #ssl_verify: false
    #ssl_version: TLSv1_2
    user: elastic
    logstash_format: true
    password:
      valueFrom:
        secretKeyRef:
          name: elasticsearch-password
          key: ES_PASSWORD
    buffer:
      timekey: 1m
      timekey_wait: 30s
      timekey_use_utc: true

- Reference a clusteroutput from a flow or clusterflow

apiVersion: logging.banzaicloud.io/v1beta1
kind: Flow
metadata:
  name: flow
  namespace: default
spec:
  filters:
    - tag_normaliser: {}
    - parser:
        remove_key_name_field: true
        reserve_data: true
        parse:
          type: nginx
  localOutputRefs: # reference an Output
    - es-output
  globalOutputRefs: # reference a ClusterOutput
    - global-es-output
  match:
    - select:
        labels:
          app: nginx

Troubleshooting:

1. Check whether the pod has permission to access the Kubernetes API

kubectl exec -it -n logging fluent-logging-fluentd-0 -- sh

/$

# Read the service account token
TOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token)

# Call the API server
curl --cacert /var/run/secrets/kubernetes.io/serviceaccount/ca.crt -H "Authorization: Bearer $TOKEN" -s https://kubernetes.default.svc:443/api/v1/namespaces/default/pods/ -k

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "pods is forbidden: User \"system:serviceaccount:logging:fluent-logging-fluentd\" cannot list resource \"pods\" in API group \"\" in the namespace \"default\"",

"reason": "Forbidden",

"details": {

"kind": "pods"

},

"code": 403

}

The 403 response shows that the service account is not allowed to list pods.
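The same permission can also be checked from outside the pod with `kubectl auth can-i` and impersonation. A sketch, assuming your own account is allowed to impersonate service accounts (`sa_user` and `sa_can_list_pods` are hypothetical helpers; the service account name is the one that appears in the error message):

```shell
# Build the user name Kubernetes assigns to a service account.
sa_user() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

# Ask the API server whether service account $2 in namespace $1
# may list pods in namespace $3. Prints "yes" or "no".
sa_can_list_pods() {
  kubectl auth can-i list pods --as="$(sa_user "$1" "$2")" -n "$3"
}

# Example:
#   sa_can_list_pods logging fluent-logging-fluentd default
```

A "no" here points at missing RBAC rules for the Fluentd service account rather than at a network or TLS problem.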

2. Verify that the output and flow are working

[root@host-192-168-11-100 logging-operator]# kubectl get outputs.logging.banzaicloud.io

NAME ACTIVE PROBLEMS

es-output true

[root@host-192-168-11-100 logging-operator]# kubectl get flows.logging.banzaicloud.io

NAME ACTIVE PROBLEMS

event-flow true

flow true
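With many flows and outputs it can help to list only the ones that are not active. A minimal sketch that filters the table printed by `kubectl get` (`inactive_resources` is a hypothetical helper and assumes the NAME / ACTIVE / PROBLEMS column layout shown above, that is, a single namespace without `-A`):

```shell
# Read a "kubectl get" table on stdin and print the names of rows
# whose ACTIVE column is anything other than "true".
inactive_resources() {
  awk 'NR > 1 && $2 != "true" { print $1 }'
}

# Example:
#   kubectl get flows.logging.banzaicloud.io | inactive_resources
#   kubectl get outputs.logging.banzaicloud.io | inactive_resources
```

Empty output means every listed resource reports ACTIVE as true.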
