Pig overview:

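Pig can be seen as client software for Hadoop: it connects to a Hadoop cluster to carry out data analysis, and it provides a scripting language for exploring large datasets.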

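Pig is a dataflow processing language built on top of HDFS and MapReduce. It translates a dataflow into a series of map and reduce functions, providing a higher level of abstraction that frees programmers from writing low-level MapReduce code. Users who are not familiar with Java can process data in Pig Latin, a relatively simple, SQL-like, dataflow-oriented language.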

Pig consists of two parts: the language used to describe dataflows, called Pig Latin, and the execution environment that runs Pig Latin programs.

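A Pig Latin program consists of a series of operations and transformations. It supports common operations such as sorting, filtering, summing, grouping, and joining, and it also allows user-defined functions; it is a lightweight scripting language aimed at data analysis. Each operation or transformation processes its input and produces output. Taken together, these operations describe a dataflow. Internally, Pig converts these transformations into a series of MapReduce jobs.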

Pig can be thought of as a translator from Pig Latin to MapReduce.
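To make this translation more concrete, here is a minimal Python sketch of how a "group by key, then count" dataflow could be expressed as a map function, a shuffle step, and a reduce function. It only illustrates the idea and is not Pig's actual implementation; the names `map_fn`, `shuffle`, `reduce_fn`, and `run_job` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_fn(record: str) -> Iterator[Tuple[str, int]]:
    """Map phase: emit a (key, 1) pair for each input record."""
    yield record, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle phase: collect all values that share the same key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_fn(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce phase: sum the values collected for one key."""
    return key, sum(values)


def run_job(records: Iterable[str]) -> List[Tuple[str, int]]:
    """Run map, shuffle, and reduce in sequence on in-memory records."""
    mapped = (pair for record in records for pair in map_fn(record))
    grouped = shuffle(mapped)
    return sorted(reduce_fn(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(run_job(["a", "b", "a", "c", "a"]))  # [('a', 3), ('b', 1), ('c', 1)]
```

In Pig Latin the same result takes a `group` followed by a `foreach ... generate group, COUNT(...)`, and Pig decides how to split the work into map and reduce phases.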

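Pig is not suitable for every data processing task. Like MapReduce, it is designed for batch processing. If you only want to query a small portion of a large dataset, Pig will not perform well, because it has to scan the entire dataset, or most of it.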
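The cost of this access pattern can be illustrated with a small Python sketch. It models only the scan itself, not Pig internals: a batch-style filter reads every record even when a single one matches. The function `batch_filter` and the generated sample records are hypothetical.

```python
from typing import Iterable, List, Tuple


def batch_filter(records: Iterable[Tuple[str, str]], wanted_ip: str) -> Tuple[List[str], int]:
    """Scan every record, as a batch job would, and return (matches, records_read)."""
    matches: List[str] = []
    records_read = 0
    for ip, payload in records:
        records_read += 1  # every record is read, whether it matches or not
        if ip == wanted_ip:
            matches.append(payload)
    return matches, records_read


if __name__ == "__main__":
    # Made-up sample data: 1000 records, only one of which is wanted.
    records = [(f"10.0.0.{i}", f"row-{i}") for i in range(1000)]
    matches, records_read = batch_filter(records, "10.0.0.7")
    print(matches, records_read)  # ['row-7'] 1000
```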

Installing Pig:

1. Download and extract

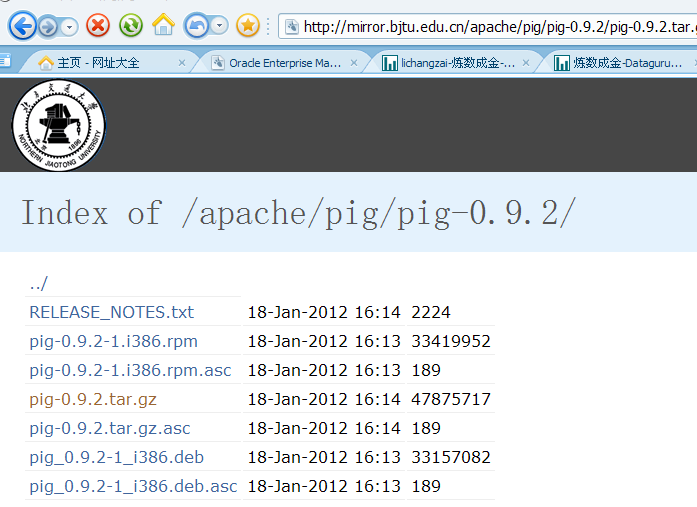

Download URL: http://mirror.bjtu.edu.cn/apache/pig/pig-0.9.2/

[grid@gc ~]$ tar xzvf pig-0.9.2.tar.gz

[grid@gc ~]$ pwd

/home/grid

[grid@gc ~]$ ls

abcd Desktop eclipse hadoop hadoop-0.20.2 hadoop-code hbase-0.90.5 input javaer.log javaer.log~ pig-0.9.2 workspace

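2. Configuring the environment for Pig local mode

In local mode all files and all execution stay on the local machine; it is generally used for testing programs.

--Edit the environment variables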

[grid@gc ~]$ vi .bash_profile

PATH=$PATH:$HOME/bin:/usr/java/jdk1.6.0_18/bin:/home/grid/pig-0.9.2/bin

JAVA_HOME=/usr #note: this is the parent directory of the java directory

export PATH

export LANG=zh_CN

[grid@gc ~]$ source .bash_profile

--Enter the Grunt shell

[grid@gc ~]$ pig -x local

2013-01-09 13:29:10,959 [main] INFO org.apache.pig.Main - Logging error messages to: /home/grid/pig_1357709350959.log

2013-01-09 13:29:13,080 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: file:///

grunt>

3. Configuring the environment for Pig MapReduce mode

This is the mode used for real work.

--Edit the environment variables

PATH=$PATH:$HOME/bin:/usr/java/jdk1.6.0_18/bin:/home/grid/pig-0.9.2/bin:/home/grid/hadoop-0.20.2/bin

export JAVA_HOME=/usr

export PIG_CLASSPATH=/home/grid/pig-0.9.2/conf

export PATH

export LANG=zh_CN

--Enter the Grunt shell

[grid@gc ~]$ pig

2013-01-09 13:55:42,303 [main] INFO org.apache.pig.Main - Logging error messages to: /home/grid/pig_1357710942292.log

2013-01-09 13:55:45,432 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to hadoop file system at: hdfs://gc:9000

2013-01-09 13:55:47,409 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - Connecting to map-reduce job tracker at: gc:9001

grunt>

Note: because Pig operates on HDFS, make sure Hadoop is already running before you start the Grunt shell.
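One way to check this before launching Pig is to test whether the Hadoop service ports accept connections. The Python sketch below is a minimal example and makes an assumption: an open TCP port is treated as "the service is up", which is only a rough proxy. The helper name `is_port_open` is hypothetical.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False
```

For the cluster in this post, `is_port_open("gc", 9000)` would probe the HDFS address and `is_port_open("gc", 9001)` the job tracker address that appear in the Grunt startup log above.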

4. Ways to run Pig

- Pig script: write the program into a .pig file

- Grunt: an interactive shell for running Pig commands

- Embedded mode

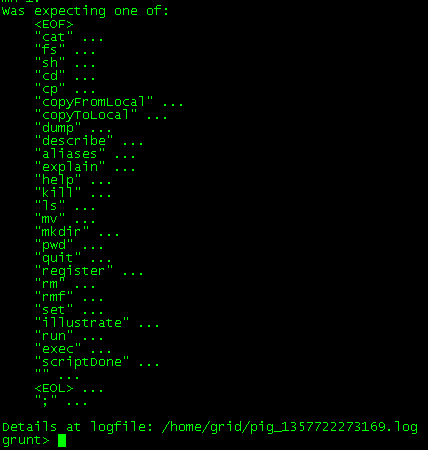
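As an illustration of the script method, the Python sketch below builds the command line for running a .pig file with the `pig` launcher, optionally with `-x local` as used earlier in this post. It is not the embedded API; it only shells out to the launcher. The helper names and the script name `count_ip.pig` are hypothetical, and `run_pig_script` assumes `pig` is on the PATH.

```python
import subprocess
from typing import List


def build_pig_command(script_path: str, local: bool = False) -> List[str]:
    """Build the argument list for running a Pig script file."""
    cmd = ["pig"]
    if local:
        # Same switch as the interactive `pig -x local` shown earlier.
        cmd += ["-x", "local"]
    cmd.append(script_path)
    return cmd


def run_pig_script(script_path: str, local: bool = False) -> int:
    """Run the script and return the exit code of the pig process."""
    completed = subprocess.run(build_pig_command(script_path, local), check=False)
    return completed.returncode


if __name__ == "__main__":
    print(build_pig_command("count_ip.pig", local=True))
    # ['pig', '-x', 'local', 'count_ip.pig']
```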

Using Grunt:

--Auto-completion

grunt> s --press the Tab key

set split store

grunt> l --press the Tab key

load long ls

--The autocomplete file

--PigPen, an Eclipse plugin

5. Common Pig commands

grunt> ls

hdfs://gc:9000/user/grid/input/Test_1<r 3> 328

hdfs://gc:9000/user/grid/input/Test_2<r 3> 134

hdfs://gc:9000/user/grid/input/abcd<r 2> 11

grunt> copyToLocal Test_1 ttt

grunt> quit

[grid@gc ~]$ ll ttt

-rwxrwxrwx 1 grid hadoop 328 01-11 05:53 ttt

A worked Pig example:

--First create the access directory in HDFS and upload access_log.txt to it

grunt> ls

hdfs://gc:9000/user/grid/.Trash <dir>

hdfs://gc:9000/user/grid/input <dir>

hdfs://gc:9000/user/grid/out <dir>

hdfs://gc:9000/user/grid/output <dir>

hdfs://gc:9000/user/grid/output2 <dir>

grunt> pwd

hdfs://gc:9000/user/grid

grunt> mkdir access

grunt> cd access

grunt> copyFromLocal /home/grid/access_log.txt access.log

grunt> ls

hdfs://gc:9000/user/grid/access/access.log<r 2> 7118627

--Load the log file into relation a

grunt> a = load '/user/grid/access/access.log'

>> using PigStorage(' ')

>> as (ip,a1,a3,a4,a5,a6,a7,a8);

--Project a so that only the ip field is kept

grunt> b = foreach a generate ip;

--Group by ip

grunt> c = group b by ip;

--Count the records for each ip in c

grunt> d = foreach c generate group,COUNT($1);
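For readers who want to check the logic of these four statements on a small sample without a cluster, the following Python sketch mirrors them step by step. It is an approximation under stated assumptions: the `load` helper splits each line on a single space and keeps the first eight fields, padding short lines with `None`, which is my reading of how the `PigStorage(' ')` load with an eight-field schema behaves. The sample log lines are made up and are not taken from the author's access.log.

```python
from collections import Counter
from typing import Iterable, Iterator, List, Optional, Tuple


def load(lines: Iterable[str], delimiter: str = " ",
         num_fields: int = 8) -> Iterator[Tuple[Optional[str], ...]]:
    """Split each line on the delimiter and keep exactly num_fields columns."""
    for line in lines:
        fields: List[Optional[str]] = list(line.rstrip("\n").split(delimiter))
        fields = fields[:num_fields] + [None] * (num_fields - len(fields))
        yield tuple(fields)


def count_by_ip(lines: Iterable[str]) -> List[Tuple[Optional[str], int]]:
    a = load(lines)               # a = load ... using PigStorage(' ') as (ip, ...)
    b = (row[0] for row in a)     # b = foreach a generate ip;
    d = Counter(b)                # c = group b by ip; d = foreach c generate group, COUNT($1);
    return sorted(d.items())


if __name__ == "__main__":
    # Made-up sample lines in a typical access-log layout.
    sample = [
        '127.0.0.1 - - [12/Jan/2013:12:00:00 +0800] "GET / HTTP/1.1" 200 1024',
        '112.4.2.19 - - [12/Jan/2013:12:00:01 +0800] "GET /a HTTP/1.1" 200 512',
        '127.0.0.1 - - [12/Jan/2013:12:00:02 +0800] "GET /b HTTP/1.1" 404 0',
    ]
    print(count_by_ip(sample))  # [('112.4.2.19', 1), ('127.0.0.1', 2)]
```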

--Display the result:

grunt> dump d;

2013-01-12 12:07:51,482 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig features used in the script: GROUP_BY

2013-01-12 12:07:51,827 [main] INFO org.apache.pig.newplan.logical.rules.ColumnPruneVisitor - Columns pruned for a: $1, $2, $3, $4, $5, $6, $7

2013-01-12 12:07:54,727 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MRCompiler - File concatenation threshold: 100 optimistic? false

2013-01-12 12:07:54,775 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.CombinerOptimizer - Choosing to move algebraic foreach to combiner

2013-01-12 12:07:55,003 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size before optimization: 1

2013-01-12 12:07:55,007 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MultiQueryOptimizer - MR plan size after optimization: 1

2013-01-12 12:07:56,316 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig script settings are added to the job

2013-01-12 12:07:56,683 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - mapred.job.reduce.markreset.buffer.percent is not set, set to default 0.3

2013-01-12 12:07:56,701 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - creating jar file Job9027177661900375605.jar

2013-01-12 12:08:12,923 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - jar file Job9027177661900375605.jar created

2013-01-12 12:08:13,040 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Setting up single store job

2013-01-12 12:08:13,359 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - BytesPerReducer=1000000000 maxReducers=999 totalInputFileSize=7118627

2013-01-12 12:08:13,360 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.JobControlCompiler - Neither PARALLEL nor default parallelism is set for this job. Setting number of reducers to 1

2013-01-12 12:08:13,616 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 1 map-reduce job(s) waiting for submission.

2013-01-12 12:08:14,164 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 0% complete

2013-01-12 12:08:19,125 [Thread-21] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2013-01-12 12:08:19,154 [Thread-21] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

2013-01-12 12:08:19,231 [Thread-21] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths (combined) to process : 1

2013-01-12 12:08:30,207 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - HadoopJobId: job_201301091247_0001

2013-01-12 12:08:30,208 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - More information at: http://gc:50030/jobdetails.jsp?jobid=job_201301091247_0001

2013-01-12 12:10:28,459 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 6% complete

2013-01-12 12:10:34,050 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 29% complete

2013-01-12 12:10:38,567 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 50% complete

2013-01-12 12:11:28,357 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 100% complete

2013-01-12 12:11:28,367 [main] INFO org.apache.pig.tools.pigstats.SimplePigStats - Script Statistics:

HadoopVersion PigVersion UserId StartedAt FinishedAt Features

0.20.2 0.9.2 grid 2013-01-12 12:07:56 2013-01-12 12:11:28 GROUP_BY

Success!

Job Stats (time in seconds):

JobId Maps Reduces MaxMapTime MinMapTIme AvgMapTime MaxReduceTime MinReduceTime AvgReduceTime Alias Feature Outputs

job_201301091247_0001 1 1 58 58 58 25 25 25 a,b,c,d GROUP_BY,COMBINER hdfs://gc:9000/tmp/temp-1148213696/tmp-241551689,

Input(s):

Successfully read 28134 records (7118627 bytes) from: "/user/grid/access/access.log"

Output(s):

Successfully stored 476 records (14039 bytes) in: "hdfs://gc:9000/tmp/temp-1148213696/tmp-241551689"

Counters:

Total records written : 476

Total bytes written : 14039

Spillable Memory Manager spill count : 0

Total bags proactively spilled: 0

Total records proactively spilled: 0

Job DAG:

job_201301091247_0001

2013-01-12 12:11:28,419 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Success!

2013-01-12 12:11:28,760 [main] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2013-01-12 12:11:28,761 [main] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

(127.0.0.1,2)

(1.59.65.67,2)

(112.4.2.19,9)
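The log above reports `BytesPerReducer=1000000000 maxReducers=999 totalInputFileSize=7118627` and then sets the number of reducers to 1. The Python sketch below shows one formula that is consistent with those values: the input size divided by the bytes-per-reducer setting, rounded up, and clamped between 1 and the maximum. This formula is an assumption on my part and is not stated in the log itself; the function name `estimate_reducers` is hypothetical.

```python
import math


def estimate_reducers(total_input_bytes: int,
                      bytes_per_reducer: int = 1_000_000_000,
                      max_reducers: int = 999) -> int:
    """Estimate a reducer count from input size (assumed formula, see lead-in)."""
    reducers = math.ceil(total_input_bytes / bytes_per_reducer)
    # Use at least one reducer and never more than the configured maximum.
    return max(1, min(max_reducers, reducers))


if __name__ == "__main__":
    print(estimate_reducers(7118627))  # 1
```

With a 7 MB input a single reducer is enough, which matches the single reduce task listed in the job statistics.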
