18/09/11 11:41:58 INFO cluster.YarnClusterScheduler: Created YarnClusterScheduler

18/09/11 11:41:58 INFO cluster.SchedulerExtensionServices: Starting Yarn extension services with app application_1524727076954_10810 and attemptId Some(appattempt_1524727076954_10810_000001)

18/09/11 11:41:58 INFO util.Utils: Using initial executors = 5, max of spark.dynamicAllocation.initialExecutors, spark.dynamicAllocation.minExecutors and spark.executor.instances

18/09/11 11:41:58 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 41398.

18/09/11 11:41:58 INFO netty.NettyBlockTransferService: Server created on 10.0.115.2:41398

18/09/11 11:41:58 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

18/09/11 11:41:58 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 10.0.115.2, 41398, None)

18/09/11 11:41:58 INFO storage.BlockManagerMasterEndpoint: Registering block manager 10.0.115.2:41398 with 368.7 MB RAM, BlockManagerId(driver, 10.0.115.2, 41398, None)

18/09/11 11:41:58 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 10.0.115.2, 41398, None)

18/09/11 11:41:58 INFO storage.BlockManager: external shuffle service port = 7337

18/09/11 11:41:58 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 10.0.115.2, 41398, None)

18/09/11 11:41:58 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3ce402ed{/metrics/json,null,AVAILABLE,@Spark}

18/09/11 11:41:58 INFO scheduler.EventLoggingListener: Logging events to hdfs://nameserviceHA/user/spark/spark2ApplicationHistory/application_1524727076954_10810_1

18/09/11 11:41:58 INFO util.Utils: Using initial executors = 5, max of spark.dynamicAllocation.initialExecutors, spark.dynamicAllocation.minExecutors and spark.executor.instances

18/09/11 11:41:58 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

18/09/11 11:41:58 ERROR spark.SparkContext: Error initializing SparkContext.

java.lang.IllegalStateException: Promise already completed.

at scala.concurrent.Promise$class.complete(Promise.scala:55)

at scala.concurrent.impl.Promise$DefaultPromise.complete(Promise.scala:153)

at scala.concurrent.Promise$class.success(Promise.scala:86)

at scala.concurrent.impl.Promise$DefaultPromise.success(Promise.scala:153)

at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$sparkContextInitialized(ApplicationMaster.scala:367)

at org.apache.spark.deploy.yarn.ApplicationMaster$.sparkContextInitialized(ApplicationMaster.scala:824)

at org.apache.spark.scheduler.cluster.YarnClusterScheduler.postStartHook(YarnClusterScheduler.scala:32)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:570)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2511)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:909)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:901)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:901)

at com.jlpay.action.AbstractStatAction.executeDays(AbstractStatAction.java:125)

at com.jlpay.action.check.account.file.ReadFileAbstractHbaseAction.execute(ReadFileAbstractHbaseAction.java:285)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.handle(UnionWetchatJob.java:21)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.main(UnionWetchatJob.java:15)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:686)

18/09/11 11:41:58 INFO server.AbstractConnector: Stopped Spark@1b3242f9{HTTP/1.1,[http/1.1]}{0.0.0.0:0}

18/09/11 11:41:58 INFO ui.SparkUI: Stopped Spark web UI at http://10.0.115.2:35538

18/09/11 11:41:58 ERROR util.Utils: Uncaught exception in thread Driver

java.io.IOException: Target log file already exists (hdfs://nameserviceHA/user/spark/spark2ApplicationHistory/application_1524727076954_10810_1)

at org.apache.spark.scheduler.EventLoggingListener.stop(EventLoggingListener.scala:240)

at org.apache.spark.SparkContext$$anonfun$stop$7$$anonfun$apply$mcV$sp$5.apply(SparkContext.scala:1919)

at org.apache.spark.SparkContext$$anonfun$stop$7$$anonfun$apply$mcV$sp$5.apply(SparkContext.scala:1919)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.SparkContext$$anonfun$stop$7.apply$mcV$sp(SparkContext.scala:1919)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1317)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1918)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:590)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2511)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:909)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:901)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:901)

at com.jlpay.action.AbstractStatAction.executeDays(AbstractStatAction.java:125)

at com.jlpay.action.check.account.file.ReadFileAbstractHbaseAction.execute(ReadFileAbstractHbaseAction.java:285)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.handle(UnionWetchatJob.java:21)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.main(UnionWetchatJob.java:15)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:686)

18/09/11 11:41:58 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

18/09/11 11:41:58 INFO cluster.YarnClusterSchedulerBackend: Shutting down all executors

18/09/11 11:41:58 INFO cluster.YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

18/09/11 11:41:58 INFO cluster.SchedulerExtensionServices: Stopping SchedulerExtensionServices

(serviceOption=None,

services=List(),

started=false)

18/09/11 11:41:58 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

18/09/11 11:41:58 INFO memory.MemoryStore: MemoryStore cleared

18/09/11 11:41:58 INFO storage.BlockManager: BlockManager stopped

18/09/11 11:41:58 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

18/09/11 11:41:58 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

18/09/11 11:41:58 INFO spark.SparkContext: Successfully stopped SparkContext

18/09/11 11:41:58 ERROR yarn.ApplicationMaster: User class threw exception: java.lang.IllegalStateException: Promise already completed.

java.lang.IllegalStateException: Promise already completed.

at scala.concurrent.Promise$class.complete(Promise.scala:55)

at scala.concurrent.impl.Promise$DefaultPromise.complete(Promise.scala:153)

at scala.concurrent.Promise$class.success(Promise.scala:86)

at scala.concurrent.impl.Promise$DefaultPromise.success(Promise.scala:153)

at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$sparkContextInitialized(ApplicationMaster.scala:367)

at org.apache.spark.deploy.yarn.ApplicationMaster$.sparkContextInitialized(ApplicationMaster.scala:824)

at org.apache.spark.scheduler.cluster.YarnClusterScheduler.postStartHook(YarnClusterScheduler.scala:32)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:570)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2511)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:909)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:901)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:901)

at com.jlpay.action.AbstractStatAction.executeDays(AbstractStatAction.java:125)

at com.jlpay.action.check.account.file.ReadFileAbstractHbaseAction.execute(ReadFileAbstractHbaseAction.java:285)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.handle(UnionWetchatJob.java:21)

at com.jlpay.job.read.file.qrcode.UnionWetchatJob.main(UnionWetchatJob.java:15)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$3.run(ApplicationMaster.scala:686)

18/09/11 11:41:58 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 15, (reason: User class threw exception: java.lang.IllegalStateException: Promise already completed.)

18/09/11 11:41:58 INFO util.ShutdownHookManager: Shutdown hook called

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data4/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-9f792b92-5d81-41a8-9fb3-d6213707f80a

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data2/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-8e45b230-73f6-4e42-9ccd-c8b2b709ed76

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data12/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-3f36d7c1-4f75-4d23-8843-a155c0fe59a4

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data11/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-482cf51f-f698-478a-852f-01b8daedf391

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data5/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-dfa07bb2-5906-43fb-9d67-a3b245325e69

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data3/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-610f745e-ec74-4d5a-b648-e130d46885ae

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data7/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-676db708-cf44-412b-9d11-e1cf856e7e58

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data9/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-ca3c3a8f-8ca8-4267-8b20-865167f6da2d

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data1/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-f3482814-31db-465d-a227-abd0e027a1d3

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data10/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-52eb7350-092e-49dc-9514-298022db0ff5

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data8/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-cbf1ba03-ea05-41f8-aa23-62cea3818441

18/09/11 11:41:58 INFO util.ShutdownHookManager: Deleting directory /data6/yarn/nm/usercache/hdfs/appcache/application_1524727076954_10810/spark-25c8cdc7-c572-490f-9ca1-526fa366c2c4

The root cause is that Spark was being initialized repeatedly inside a loop; each action may initialize Spark only once.

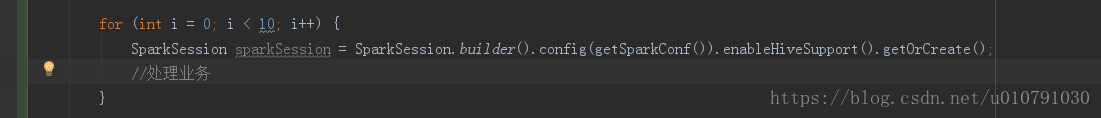

Before the change

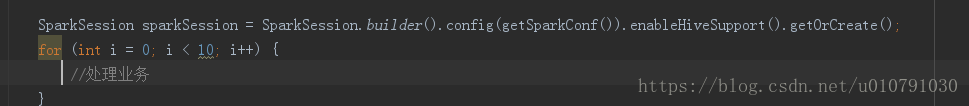

After the change
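Since the before/after code is only available as screenshots, here is a minimal, self-contained sketch of the same pattern. It does not use Spark: `OneShotContext` is a hypothetical stand-in for whatever the job obtains via `SparkSession.builder().getOrCreate()` (the call at `AbstractStatAction.executeDays` in the stack trace), and it throws on a second initialization to imitate the "Promise already completed" failure. The method names `initInsideLoop` and `initOnce` are likewise made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical stand-in for a context that may be initialized only once per application. Not a Spark API. */
final class OneShotContext {
    private static final AtomicBoolean INITIALIZED = new AtomicBoolean(false);

    private OneShotContext() {}

    /** Succeeds on the first call; throws on any later call, imitating the error in the log. */
    static OneShotContext create() {
        if (!INITIALIZED.compareAndSet(false, true)) {
            throw new IllegalStateException("Promise already completed.");
        }
        return new OneShotContext();
    }

    /** Demo-only helper so both variants can be run in one JVM. */
    static void resetForDemo() {
        INITIALIZED.set(false);
    }

    String process(String day) {
        return "processed " + day;
    }
}

final class SingleInitDemo {
    /** "Before": the context is created inside the loop, so the second iteration fails. */
    static List<String> initInsideLoop(List<String> days) {
        List<String> results = new ArrayList<>();
        for (String day : days) {
            OneShotContext ctx = OneShotContext.create(); // re-initialized on every pass
            results.add(ctx.process(day));
        }
        return results;
    }

    /** "After": the context is created once, before the loop, and reused for every day. */
    static List<String> initOnce(List<String> days) {
        OneShotContext ctx = OneShotContext.create(); // initialized exactly once
        List<String> results = new ArrayList<>();
        for (String day : days) {
            results.add(ctx.process(day));
        }
        return results;
    }

    public static void main(String[] args) {
        List<String> days = Arrays.asList("2018-09-10", "2018-09-11");

        try {
            initInsideLoop(days);
        } catch (IllegalStateException e) {
            System.out.println("init inside loop failed: " + e.getMessage());
        }

        OneShotContext.resetForDemo();
        System.out.println(initOnce(days));
    }
}
```

In the real job, the equivalent change would be to obtain the `SparkSession` once, outside the per-day loop, and pass it into the loop body rather than building it again on each iteration.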
