1 介绍

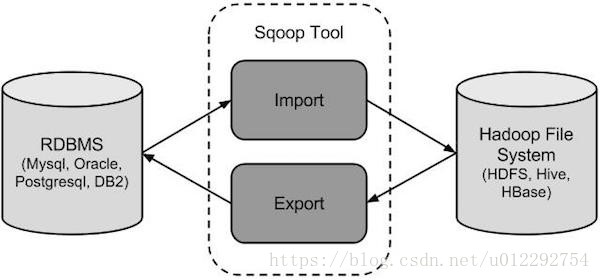

sqoop是apache旗下一款“Hadoop和关系数据库服务器之间传送数据”的工具。

导入数据:MySQL,Oracle导入数据到Hadoop的HDFS、HIVE、HBASE等数据存储系统;

导出数据:从Hadoop的文件系统中导出数据到关系数据库

1.1 工作机制

将导入或导出命令翻译成mapreduce程序来实现。在翻译出的mapreduce中主要是对inputformat和outputformat进行定制

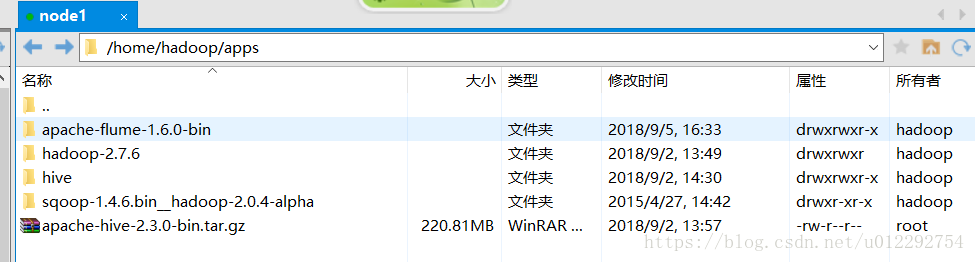

2 sqoop 安装

安装sqoop的前提是已经具备java和hadoop的环境

解压

把文件夹名字改为 sqoop。

2.1 配置 sqoop-env.sh

[hadoop@node1 ~]$ cd apps/sqoop/conf

[hadoop@node1 conf]$ ll

total 28

-rw-rw-r--. 1 hadoop hadoop 3895 Apr 27 2015 oraoop-site-template.xml

-rw-rw-r--. 1 hadoop hadoop 1404 Apr 27 2015 sqoop-env-template.cmd

-rwxr-xr-x. 1 hadoop hadoop 1345 Apr 27 2015 sqoop-env-template.sh

-rw-rw-r--. 1 hadoop hadoop 5531 Apr 27 2015 sqoop-site-template.xml

-rw-rw-r--. 1 hadoop hadoop 5531 Apr 27 2015 sqoop-site.xml

[hadoop@node1 conf]$ mv sqoop-env-template.sh sqoop-env.sh

[hadoop@node1 conf]$

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop/apps/hadoop-2.7.6

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop/apps/hadoop-2.7.6

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

export HIVE_HOME=/home/hadoop/apps/hive

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=2.2 把jdbc驱动包放到 sqoop目录下的 lib

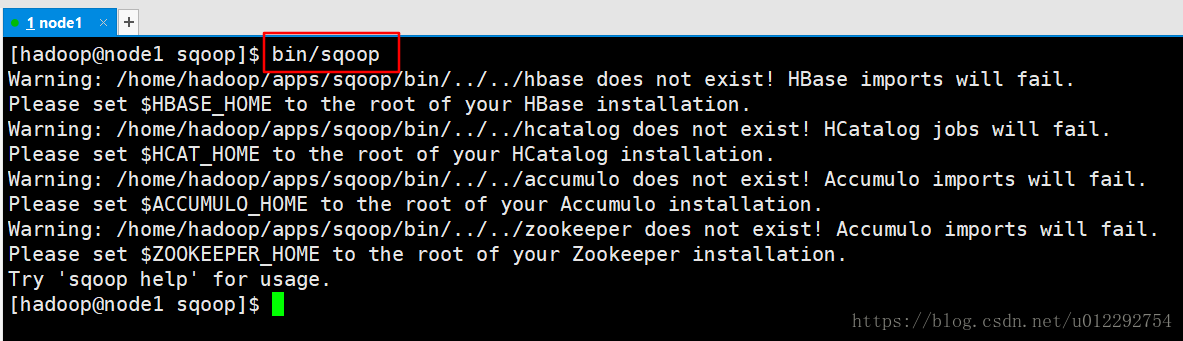

2.3 启动 sqoop

[hadoop@node1 sqoop]$ bin/sqoop help

Warning: /home/hadoop/apps/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/apps/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/apps/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/09/06 11:04:29 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

[hadoop@node1 sqoop]$

3 Sqoop的数据导入

“导入工具”导入单个表从RDBMS到HDFS。表中的每一行被视为HDFS的记录。所有记录都存储为文本文件的文本数据(或者Avro、sequence文件等二进制数据

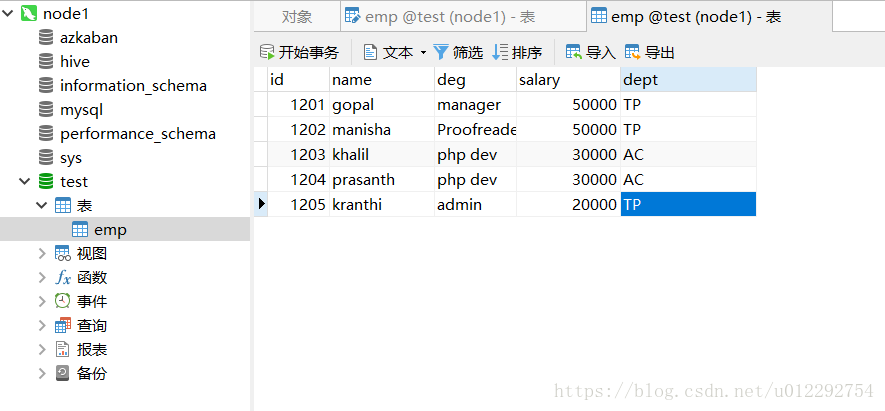

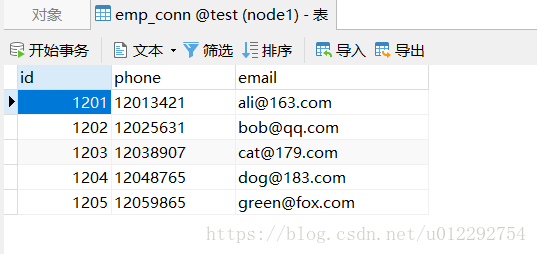

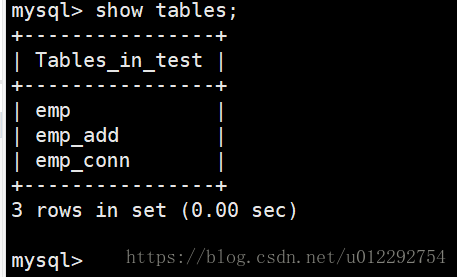

3.1 mysql 创建数据表

[hadoop@node1 ~]$ mysql -uroot -proot

mysql> show databases;

mysql> show tables;3.2 导入数据

bin/sqoop import \

--connect jdbc:mysql://node1:3306/test?useSSL=false \

--username root \

--password root \

--table emp \

--m 1[hadoop@node1 sqoop]$ bin/sqoop import \

> --connect jdbc:mysql://node1:3306/test?useSSL=false \

> --username root \

> --password root \

> --table emp \

> --m 1

Warning: /home/hadoop/apps/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/apps/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/apps/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/09/06 12:23:12 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/09/06 12:23:12 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/09/06 12:23:13 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/09/06 12:23:13 INFO tool.CodeGenTool: Beginning code generation

18/09/06 12:23:13 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

18/09/06 12:23:13 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

18/09/06 12:23:13 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.6

Note: /tmp/sqoop-hadoop/compile/d83f1e1222d1472245af814e24d81bad/emp.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/09/06 12:23:15 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/d83f1e1222d1472245af814e24d81bad/emp.jar

18/09/06 12:23:15 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/09/06 12:23:15 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/09/06 12:23:15 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/09/06 12:23:15 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/09/06 12:23:15 INFO mapreduce.ImportJobBase: Beginning import of emp

18/09/06 12:23:15 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/09/06 12:23:16 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/09/06 12:23:16 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.73.101:8032

18/09/06 12:23:18 INFO db.DBInputFormat: Using read commited transaction isolation

18/09/06 12:23:18 INFO mapreduce.JobSubmitter: number of splits:1

18/09/06 12:23:18 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536195991830_0006

18/09/06 12:23:19 INFO impl.YarnClientImpl: Submitted application application_1536195991830_0006

18/09/06 12:23:19 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1536195991830_0006/

18/09/06 12:23:19 INFO mapreduce.Job: Running job: job_1536195991830_0006

18/09/06 12:23:27 INFO mapreduce.Job: Job job_1536195991830_0006 running in uber mode : false

18/09/06 12:23:27 INFO mapreduce.Job: map 0% reduce 0%

18/09/06 12:23:33 INFO mapreduce.Job: map 100% reduce 0%

18/09/06 12:23:34 INFO mapreduce.Job: Job job_1536195991830_0006 completed successfully

18/09/06 12:23:34 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=140792

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=150

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4320

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4320

Total vcore-milliseconds taken by all map tasks=4320

Total megabyte-milliseconds taken by all map tasks=4423680

Map-Reduce Framework

Map input records=5

Map output records=5

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=70

CPU time spent (ms)=700

Physical memory (bytes) snapshot=107872256

Virtual memory (bytes) snapshot=2082209792

Total committed heap usage (bytes)=18038784

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=150

18/09/06 12:23:34 INFO mapreduce.ImportJobBase: Transferred 150 bytes in 18.9089 seconds (7.9328 bytes/sec)

18/09/06 12:23:34 INFO mapreduce.ImportJobBase: Retrieved 5 records.

[hadoop@node1 sqoop]$

3.3 查看导入的数据

hadoop fs -cat /user/hadoop/emp/part-m-00000

[hadoop@node1 ~]$ hadoop fs -cat /user/hadoop/emp/part-m-00000

1201,gopal,manager,50000,TP

1202,manisha,Proofreader,50000,TP

1203,khalil,php dev,30000,AC

1204,prasanth,php dev,30000,AC

1205,kranthi,admin,20000,TP

[hadoop@node1 ~]$

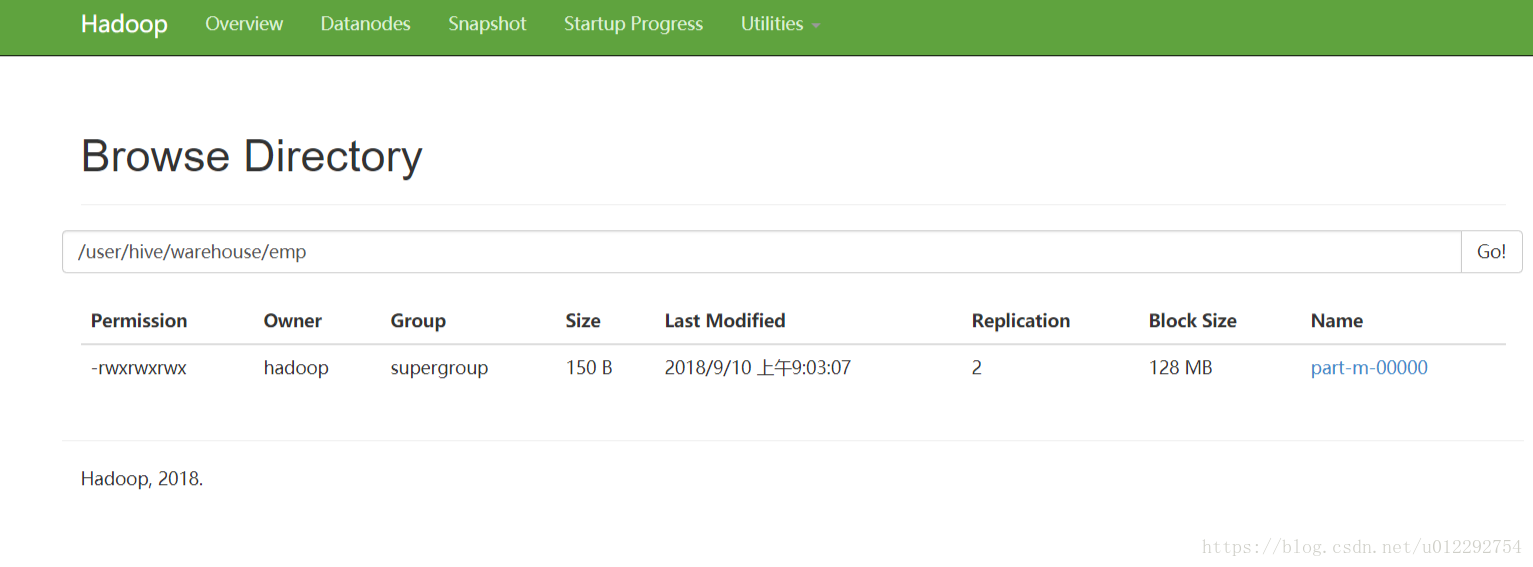

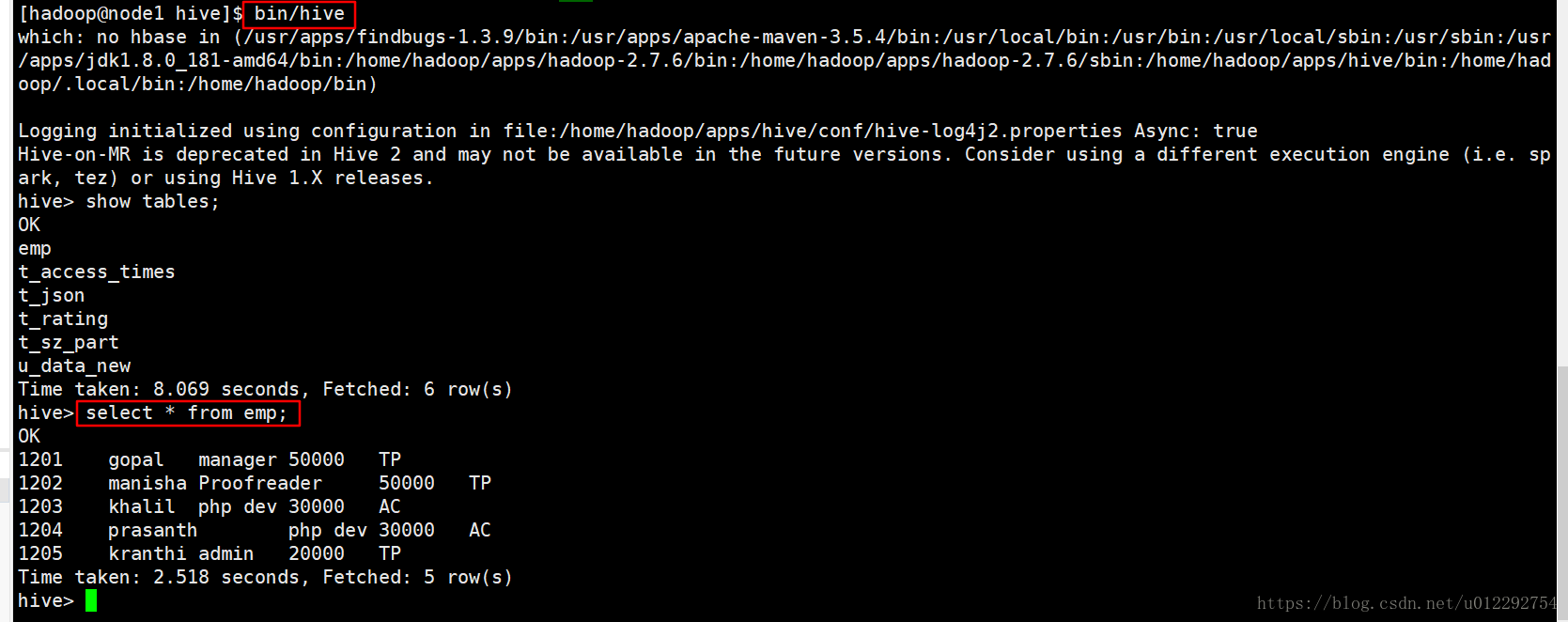

4 导入数据到 hive

[hadoop@node1 sqoop]$ hadoop fs -rm -r /user/hadoop/emp

18/09/10 09:01:05 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /user/hadoop/emp

bin/sqoop import --connect jdbc:mysql://node1:3306/test?useSSL=false --username root --password root --table emp --hive-import --m 1

[hadoop@node1 sqoop]$ bin/sqoop import --connect jdbc:mysql://node1:3306/test?useSSL=false --username root --password root --table emp --hive-import --m 1

Warning: /home/hadoop/apps/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/apps/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/apps/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/09/10 09:02:23 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/09/10 09:02:23 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/09/10 09:02:23 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override

18/09/10 09:02:23 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.

18/09/10 09:02:23 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/09/10 09:02:23 INFO tool.CodeGenTool: Beginning code generation

18/09/10 09:02:24 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

18/09/10 09:02:24 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

18/09/10 09:02:24 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.6

Note: /tmp/sqoop-hadoop/compile/2b5d23d7e11a7e8a49832360a7b0b34b/emp.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/09/10 09:02:28 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/2b5d23d7e11a7e8a49832360a7b0b34b/emp.jar

18/09/10 09:02:28 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/09/10 09:02:28 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/09/10 09:02:28 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/09/10 09:02:28 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/09/10 09:02:28 INFO mapreduce.ImportJobBase: Beginning import of emp

18/09/10 09:02:28 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/09/10 09:02:30 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/09/10 09:02:30 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.73.101:8032

18/09/10 09:02:38 INFO db.DBInputFormat: Using read commited transaction isolation

18/09/10 09:02:38 INFO mapreduce.JobSubmitter: number of splits:1

18/09/10 09:02:38 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536540385626_0001

18/09/10 09:02:39 INFO impl.YarnClientImpl: Submitted application application_1536540385626_0001

18/09/10 09:02:39 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1536540385626_0001/

18/09/10 09:02:39 INFO mapreduce.Job: Running job: job_1536540385626_0001

18/09/10 09:02:56 INFO mapreduce.Job: Job job_1536540385626_0001 running in uber mode : false

18/09/10 09:02:56 INFO mapreduce.Job: map 0% reduce 0%

18/09/10 09:03:09 INFO mapreduce.Job: map 100% reduce 0%

18/09/10 09:03:09 INFO mapreduce.Job: Job job_1536540385626_0001 completed successfully

18/09/10 09:03:09 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=140792

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=150

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=9957

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=9957

Total vcore-milliseconds taken by all map tasks=9957

Total megabyte-milliseconds taken by all map tasks=10195968

Map-Reduce Framework

Map input records=5

Map output records=5

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=110

CPU time spent (ms)=1200

Physical memory (bytes) snapshot=107380736

Virtual memory (bytes) snapshot=2082209792

Total committed heap usage (bytes)=18423808

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=150

18/09/10 09:03:09 INFO mapreduce.ImportJobBase: Transferred 150 bytes in 39.1466 seconds (3.8317 bytes/sec)

18/09/10 09:03:09 INFO mapreduce.ImportJobBase: Retrieved 5 records.

18/09/10 09:03:09 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1

18/09/10 09:03:09 INFO hive.HiveImport: Loading uploaded data into Hive

18/09/10 09:03:13 INFO hive.HiveImport: which: no hbase in (/usr/apps/findbugs-1.3.9/bin:/usr/apps/apache-maven-3.5.4/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/usr/apps/jdk1.8.0_181-amd64/bin:/home/hadoop/apps/hadoop-2.7.6/bin:/home/hadoop/apps/hadoop-2.7.6/sbin:/home/hadoop/apps/hive/bin:/home/hadoop/.local/bin:/home/hadoop/bin)

18/09/10 09:03:22 INFO hive.HiveImport:

18/09/10 09:03:22 INFO hive.HiveImport: Logging initialized using configuration in file:/home/hadoop/apps/hive/conf/hive-log4j2.properties Async: true

18/09/10 09:03:37 INFO hive.HiveImport: OK

18/09/10 09:03:37 INFO hive.HiveImport: Time taken: 12.29 seconds

18/09/10 09:03:37 INFO hive.HiveImport: Loading data to table default.emp

18/09/10 09:03:39 INFO hive.HiveImport: OK

18/09/10 09:03:39 INFO hive.HiveImport: Time taken: 2.009 seconds

18/09/10 09:03:39 INFO hive.HiveImport: Hive import complete.

18/09/10 09:03:39 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

[hadoop@node1 sqoop]$

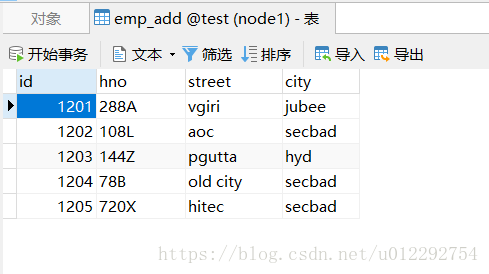

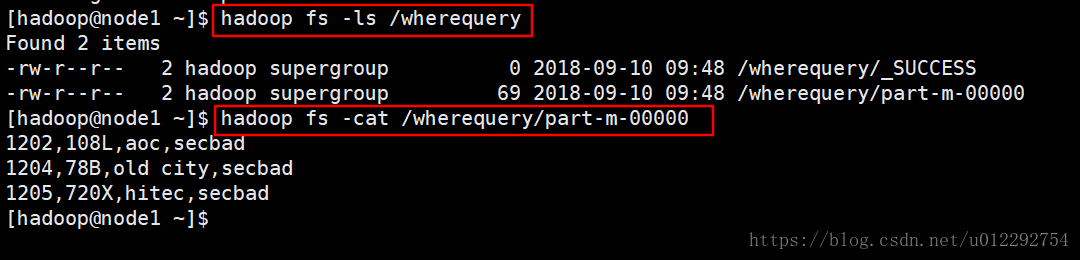

5 导入表数据子集

我们可以导入表的使用Sqoop导入工具,”where”子句的一个子集。它执行在各自的数据库服务器相应的SQL查询,并将结果存储在HDFS的目标目录。

bin/sqoop import \

--connect jdbc:mysql://node1:3306/test?useSSL=false \

--username root \

--password root \

--where "city='secbad'" \

--target-dir /wherequery \

--table emp_add --m 1[hadoop@node1 sqoop]$ bin/sqoop import \

> --connect jdbc:mysql://node1:3306/test?useSSL=false \

> --username root \

> --password root \

> --where "city='secbad'" \

> --target-dir /wherequery \

> --table emp_add --m 1

Warning: /home/hadoop/apps/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/apps/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/apps/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/09/10 09:47:27 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/09/10 09:47:27 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/09/10 09:47:27 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/09/10 09:47:27 INFO tool.CodeGenTool: Beginning code generation

18/09/10 09:47:28 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp_add` AS t LIMIT 1

18/09/10 09:47:28 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp_add` AS t LIMIT 1

18/09/10 09:47:28 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.6

Note: /tmp/sqoop-hadoop/compile/a31034634ffe972d5a520b2e55c69a4d/emp_add.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/09/10 09:47:33 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/a31034634ffe972d5a520b2e55c69a4d/emp_add.jar

18/09/10 09:47:33 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/09/10 09:47:33 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/09/10 09:47:33 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/09/10 09:47:33 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/09/10 09:47:33 INFO mapreduce.ImportJobBase: Beginning import of emp_add

18/09/10 09:47:34 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/09/10 09:47:35 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/09/10 09:47:36 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.73.101:8032

18/09/10 09:47:42 INFO db.DBInputFormat: Using read commited transaction isolation

18/09/10 09:47:42 INFO mapreduce.JobSubmitter: number of splits:1

18/09/10 09:47:43 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536540385626_0002

18/09/10 09:47:44 INFO impl.YarnClientImpl: Submitted application application_1536540385626_0002

18/09/10 09:47:44 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1536540385626_0002/

18/09/10 09:47:44 INFO mapreduce.Job: Running job: job_1536540385626_0002

18/09/10 09:47:58 INFO mapreduce.Job: Job job_1536540385626_0002 running in uber mode : false

18/09/10 09:47:58 INFO mapreduce.Job: map 0% reduce 0%

18/09/10 09:48:08 INFO mapreduce.Job: map 100% reduce 0%

18/09/10 09:48:08 INFO mapreduce.Job: Job job_1536540385626_0002 completed successfully

18/09/10 09:48:09 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=140946

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=69

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=7592

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=7592

Total vcore-milliseconds taken by all map tasks=7592

Total megabyte-milliseconds taken by all map tasks=7774208

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=97

CPU time spent (ms)=1230

Physical memory (bytes) snapshot=107671552

Virtual memory (bytes) snapshot=2082213888

Total committed heap usage (bytes)=17866752

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=69

18/09/10 09:48:09 INFO mapreduce.ImportJobBase: Transferred 69 bytes in 33.3618 seconds (2.0682 bytes/sec)

18/09/10 09:48:09 INFO mapreduce.ImportJobBase: Retrieved 3 records.

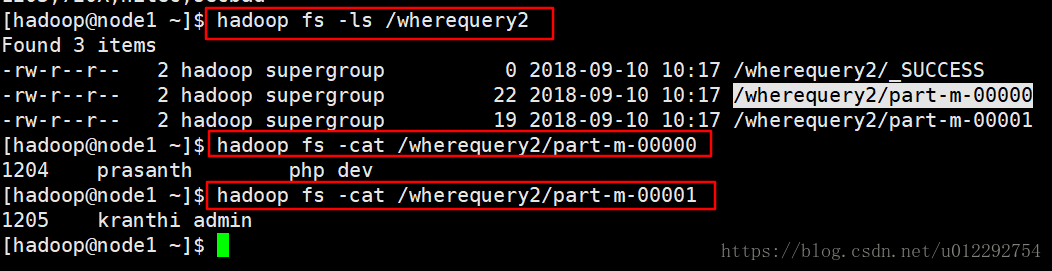

[hadoop@node1 sqoop]$5.1 按需导入

bin/sqoop import \

--connect jdbc:mysql://node1:3306/test?useSSL=false \

--username root \

--password root \

--target-dir /wherequery2 \

--query 'select id,name,deg from emp WHERE id>1203 and $CONDITIONS' \

--split-by id \

--fields-terminated-by '\t' \

--m 2Warning: /home/hadoop/apps/sqoop/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /home/hadoop/apps/sqoop/bin/../../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/apps/sqoop/bin/../../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/apps/sqoop/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/09/10 10:16:51 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

18/09/10 10:16:51 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/09/10 10:16:51 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/09/10 10:16:51 INFO tool.CodeGenTool: Beginning code generation

18/09/10 10:16:52 INFO manager.SqlManager: Executing SQL statement: select id,name,deg from emp WHERE id>1203 and (1 = 0)

18/09/10 10:16:52 INFO manager.SqlManager: Executing SQL statement: select id,name,deg from emp WHERE id>1203 and (1 = 0)

18/09/10 10:16:52 INFO manager.SqlManager: Executing SQL statement: select id,name,deg from emp WHERE id>1203 and (1 = 0)

18/09/10 10:16:52 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/apps/hadoop-2.7.6

Note: /tmp/sqoop-hadoop/compile/d9100767fbcb8201eccbbd78778f3f65/QueryResult.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/09/10 10:16:55 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/d9100767fbcb8201eccbbd78778f3f65/QueryResult.jar

18/09/10 10:16:55 INFO mapreduce.ImportJobBase: Beginning query import.

18/09/10 10:16:55 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/09/10 10:16:57 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/09/10 10:16:57 INFO client.RMProxy: Connecting to ResourceManager at node1/192.168.73.101:8032

18/09/10 10:17:03 INFO db.DBInputFormat: Using read commited transaction isolation

18/09/10 10:17:03 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(id), MAX(id) FROM (select id,name,deg from emp WHERE id>1203 and (1 = 1) ) AS t1

18/09/10 10:17:03 INFO mapreduce.JobSubmitter: number of splits:2

18/09/10 10:17:03 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1536540385626_0003

18/09/10 10:17:03 INFO impl.YarnClientImpl: Submitted application application_1536540385626_0003

18/09/10 10:17:04 INFO mapreduce.Job: The url to track the job: http://node1:8088/proxy/application_1536540385626_0003/

18/09/10 10:17:04 INFO mapreduce.Job: Running job: job_1536540385626_0003

18/09/10 10:17:17 INFO mapreduce.Job: Job job_1536540385626_0003 running in uber mode : false

18/09/10 10:17:17 INFO mapreduce.Job: map 0% reduce 0%

18/09/10 10:17:26 INFO mapreduce.Job: map 50% reduce 0%

18/09/10 10:17:29 INFO mapreduce.Job: map 100% reduce 0%

18/09/10 10:17:29 INFO mapreduce.Job: Job job_1536540385626_0003 completed successfully

18/09/10 10:17:30 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=281766

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=201

HDFS: Number of bytes written=41

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=4

Job Counters

Launched map tasks=2

Other local map tasks=2

Total time spent by all maps in occupied slots (ms)=16165

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=16165

Total vcore-milliseconds taken by all map tasks=16165

Total megabyte-milliseconds taken by all map tasks=16552960

Map-Reduce Framework

Map input records=2

Map output records=2

Input split bytes=201

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=205

CPU time spent (ms)=2500

Physical memory (bytes) snapshot=220712960

Virtual memory (bytes) snapshot=4164530176

Total committed heap usage (bytes)=36175872

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=41

18/09/10 10:17:30 INFO mapreduce.ImportJobBase: Transferred 41 bytes in 32.6628 seconds (1.2553 bytes/sec)

18/09/10 10:17:30 INFO mapreduce.ImportJobBase: Retrieved 2 records.

[hadoop@node1 sqoop]$

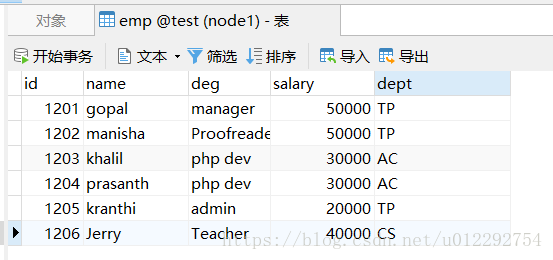

6 增量导入

增量导入是仅导入新添加的表中的行的技术。(也就是把表中新添加的行导入到hive,hdfs等存储中)

它需要添加‘incremental’, ‘check-column’, 和 ‘last-value’选项来执行增量导入。

bin/sqoop import \

--connect jdbc:mysql://node1:3306/test?useSSL=false \

--username root \

--password root \

--table emp \

--m 1 \

--incremental append \

--check-column id \

--last-value 1206

1389

1389

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?