1 Python Package Introduction

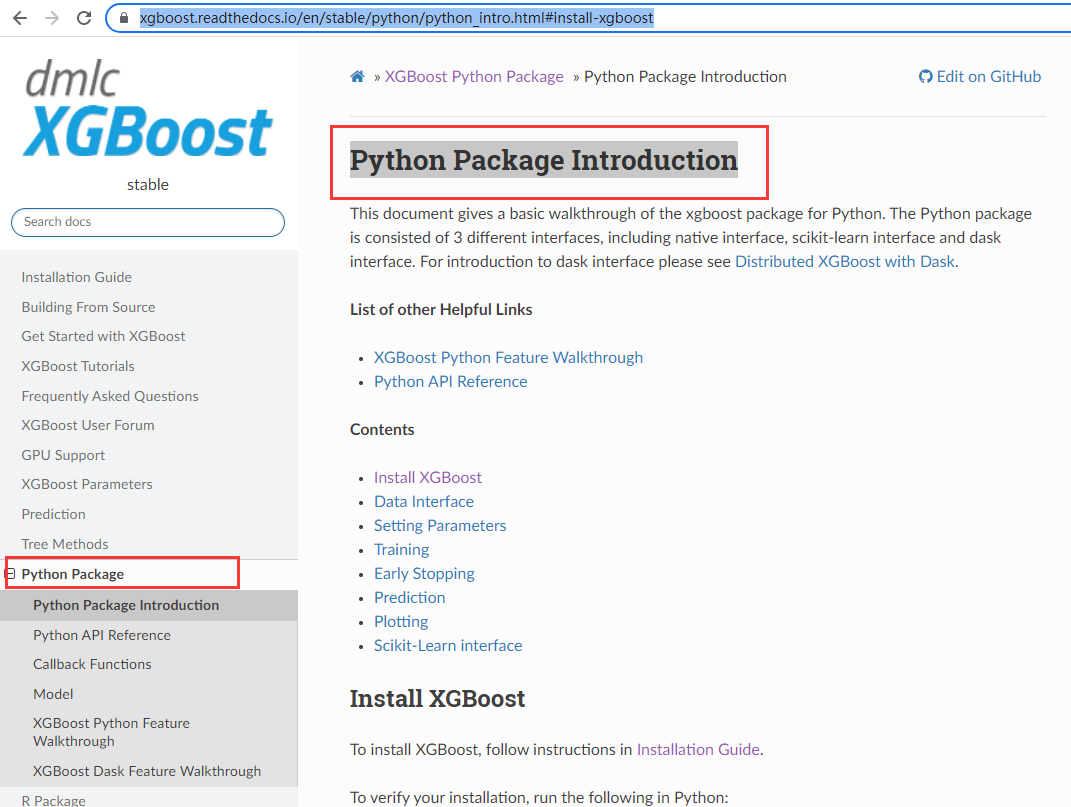

Because the XGBoost documentation is extensive and time is limited, this post presents only the Python Package Introduction section of the documentation. Source: https://xgboost.readthedocs.io/en/stable/python/python_intro.html#install-xgboost


This document gives a basic walkthrough of the xgboost package for Python. The Python package consists of three interfaces: the native interface, the scikit-learn interface, and the Dask interface. For an introduction to the Dask interface, see Distributed XGBoost with Dask.

List of other helpful links:

XGBoost Python Feature Walkthrough

Python API Reference

1.1 Install XGBoost

To install XGBoost, follow the instructions in the Installation Guide.

To verify your installation, run the following in Python:

import xgboost as xgb

1.2 Data Interface

The XGBoost Python module can load data from many different data formats, including:

NumPy 2D array

SciPy 2D sparse array

Pandas data frame

cuDF DataFrame

cupy 2D array

dlpack

datatable

XGBoost binary buffer file

LIBSVM text format file

Comma-separated values (CSV) file

(See Text Input Format of DMatrix for a detailed description of the text input format.)

The data is stored in a DMatrix object.

import numpy as np

import pandas

import scipy.sparse

To load a NumPy array into DMatrix:

data = np.random.rand(5, 10) # 5 entities, each contains 10 features

label = np.random.randint(2, size=5) # binary target

dtrain = xgb.DMatrix(data, label=label)

To load a scipy.sparse array into DMatrix:

# dat holds the non-zero values; row and col hold their row and column indices

csr = scipy.sparse.csr_matrix((dat, (row, col)))

dtrain = xgb.DMatrix(csr)

To load a Pandas data frame into DMatrix:

data = pandas.DataFrame(np.arange(12).reshape((4, 3)), columns=['a', 'b', 'c'])

label = pandas.DataFrame(np.random.randint(2, size=4))

dtrain = xgb.DMatrix(data, label=label)

Saving a DMatrix to an XGBoost binary file makes later loading faster:

dtrain = xgb.DMatrix('train.svm.txt?format=libsvm')

dtrain.save_binary('train.buffer')

Missing values can be replaced by a default value in the DMatrix constructor:

dtrain = xgb.DMatrix(data, label=label, missing=np.nan)

Weights can be set when needed:

w = np.random.rand(5, 1)

dtrain = xgb.DMatrix(data, label=label, missing=np.nan, weight=w)

When performing ranking tasks, the number of weights should equal the number of groups.

To load a LIBSVM text file or an XGBoost binary file into DMatrix:

dtrain = xgb.DMatrix('train.svm.txt?format=libsvm')

dtest = xgb.DMatrix('test.svm.buffer')

The parser in XGBoost has limited functionality. When using the Python interface, it is recommended to use sklearn's load_svmlight_file or other similar utilities rather than XGBoost's built-in parser.

To load a CSV file into DMatrix:

# label_column specifies the index of the column containing the true label

dtrain = xgb.DMatrix('train.csv?format=csv&label_column=0')

dtest = xgb.DMatrix('test.csv?format=csv&label_column=0')

The parser in XGBoost has limited functionality. When using the Python interface, it is recommended to use pandas' read_csv or other similar utilities rather than XGBoost's built-in parser.

1.3 Setting Parameters

XGBoost can use either a list of pairs or a dictionary to set parameters. For instance:

Booster parameters:

param = {'max_depth': 2, 'eta': 1, 'objective': 'binary:logistic'}

param['nthread'] = 4

param['eval_metric'] = 'auc'

You can also specify multiple eval metrics:

param['eval_metric'] = ['auc', 'ams@0']

# alternatively:

# plst = list(param.items())

# plst += [('eval_metric', 'ams@0')]

Specify validation sets to watch performance:

evallist = [(dtest, 'eval'), (dtrain, 'train')]

1.4 Training

Training a model requires a parameter list and a data set:

num_round = 10

bst = xgb.train(param, dtrain, num_round, evals=evallist)

After training, the model can be saved:

bst.save_model('model.json')

The model and its feature map can also be dumped to a text file:

# dump model

bst.dump_model('dump.raw.txt')

# dump model with feature map

bst.dump_model('dump.raw.txt', 'featmap.txt')

A saved model can be loaded as follows:

bst = xgb.Booster({'nthread': 4})  # init model

bst.load_model('model.json')  # load the saved model

Methods including update and boost from xgboost.Booster are designed for internal usage only. The wrapper function xgboost.train does some pre-configuration, including setting up caches and some other parameters.

1.5 Early Stopping

If you have a validation set, you can use early stopping to find the optimal number of boosting rounds. Early stopping requires at least one set in evals. If there is more than one, it will use the last.

The model will train until the validation score stops improving. The validation error must decrease at least once every early_stopping_rounds rounds for training to continue.

If early stopping occurs, the model will have two additional fields: bst.best_score and bst.best_iteration. Note that xgboost.train() will return a model from the last iteration, not the best one.

This works both with metrics to minimize (RMSE, log loss, etc.) and with metrics to maximize (MAP, NDCG, AUC). Note that if you specify more than one evaluation metric, the last one in param['eval_metric'] is used for early stopping.

1.6 Prediction

A model that has been trained or loaded can perform predictions on data sets.

# 7 entities, each contains 10 features

data = np.random.rand(7, 10)

dtest = xgb.DMatrix(data)

ypred = bst.predict(dtest)

If early stopping is enabled during training, you can get predictions from the best iteration with bst.best_iteration:

ypred = bst.predict(dtest, iteration_range=(0, bst.best_iteration + 1))

1.7 Plotting

You can use the plotting module to plot feature importance and the output tree.

To plot importance, use xgboost.plot_importance(). This function requires matplotlib to be installed.

xgb.plot_importance(bst)

To plot the output tree via matplotlib, use xgboost.plot_tree(), specifying the ordinal number of the target tree. This function requires graphviz and matplotlib.

When you use IPython, you can use the xgboost.to_graphviz() function, which converts the target tree to a graphviz instance. The graphviz instance is automatically rendered in IPython.

xgb.to_graphviz(bst, num_trees=2)

1.8 Scikit-Learn interface

XGBoost provides an easy-to-use scikit-learn interface for some pre-defined models, including regression, classification, and ranking.

# Use "gpu_hist" for training the model.

reg = xgb.XGBRegressor(tree_method="gpu_hist")

# Fit the model using predictor X and response y.

reg.fit(X, y)

# Save model into JSON format.

reg.save_model("regressor.json")

Users can still access the underlying booster model when needed:

booster: xgb.Booster = reg.get_booster()

References

XGBoost Documentation: https://xgboost.readthedocs.io/en/stable/
