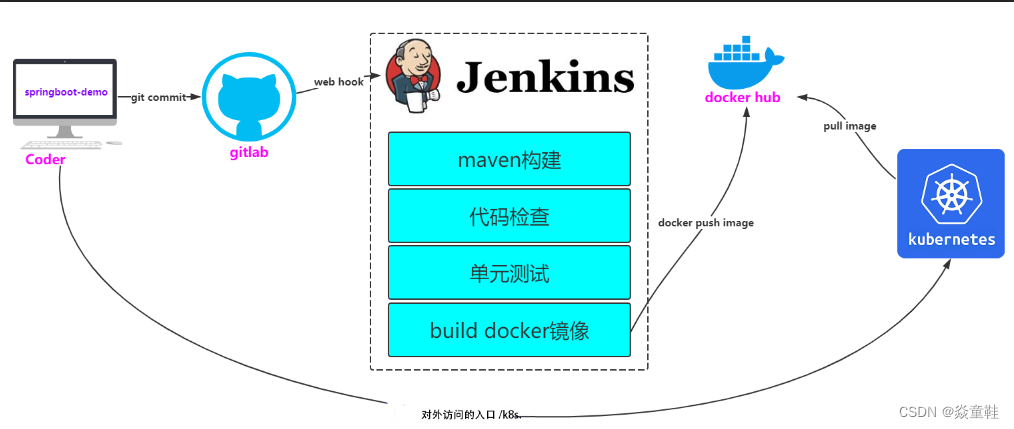

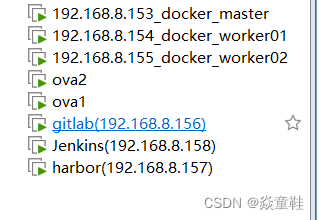

书接上回k8s集群搭建完毕,来使用它强大的扩缩容能力帮我们进行应用的持续集成和持续部署,整体的机器规划如下:

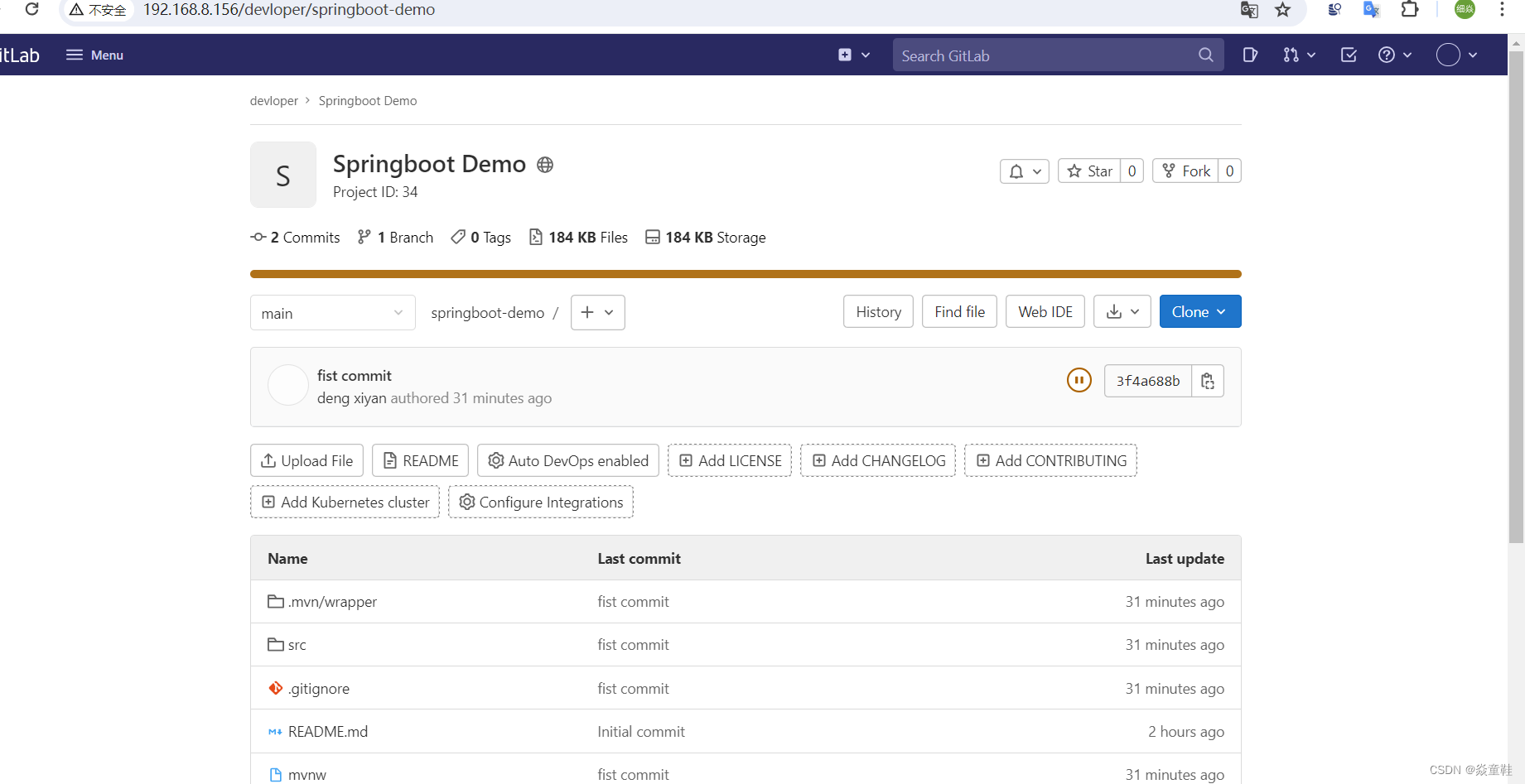

1.192.168.8.156 搭建gitlab私服

docker pull gitlab/gitlab-ce:latest

docker run --detach --hostname 192.168.8.156 --publish 443:443 --publish 80:80 --publish 2022:22 --name gitlab --restart always --volume /srv/gitlab/config:/etc/gitlab --volume /srv/gitlab/logs:/var/log/gitlab --volume /srv/gitlab/data:/var/opt/gitlab gitlab/gitlab-ce:latestroot密码查看:

docker exec -it gitlab grep "Password": /etc/gitlab/initial_root_password

新建用户dxy并用管理员root账号进行审批

结果如图并创建项目git,一个最简单的springboot项目

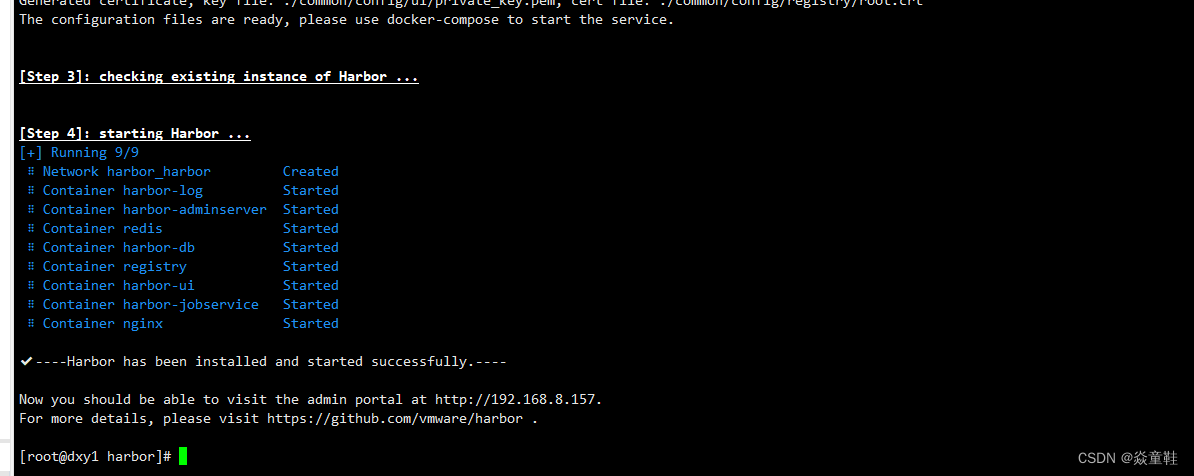

2.192.168.8.157 搭建docker私服 habor

首先,需要在服务器上安装 docker 和 docker-compose,执行如下命令。

yum install docker docker-compose接着下载 harbor 软件包

wget https://storage.googleapis.com/harbor-releases/harbor-offline-installer-v1.5.3.tgz解压并安装

tar -zxvf harbor-offline-installer-v1.5.3.tgz -C /opt/software/habor/

进入/opt/software/habor/harbor目录下,修改文件 harbor.cfg,修改如下字段

hostname = 192.168.8.157 # 修改 IP 地址为本机 IP执行如下命令,安装 harbor

./prepare

./install.sh

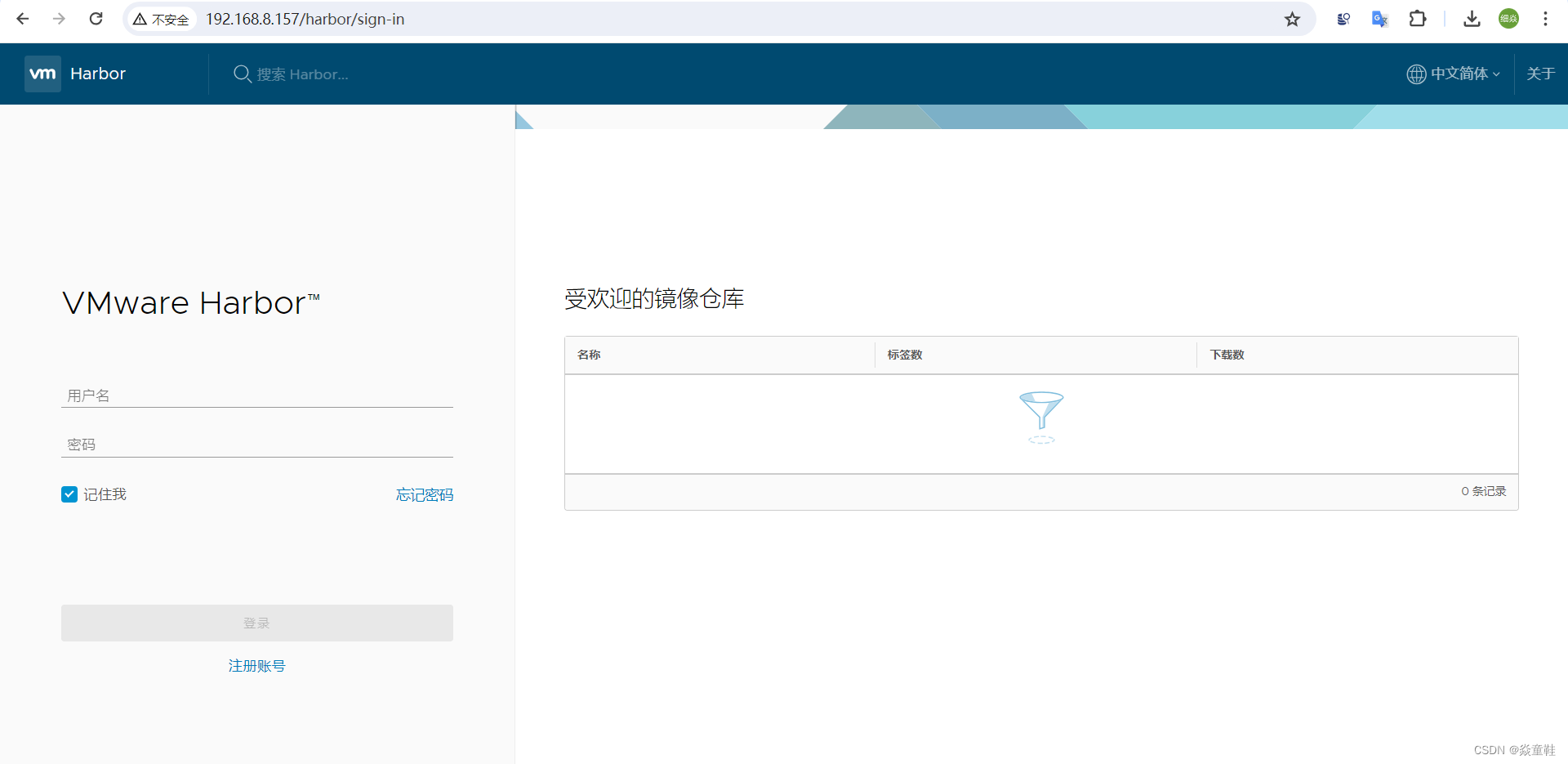

在浏览器输入 http://192.168.8.157/harbor/sign-in,进入 harbor UI 界面,如下所示。

默认用户名和密码如下

用户名:admin

密码:Harbor12345

密码在 /opt/harbor/harbor.cfg 中配置,默认是 Harbor12345修改docker配置文件/etc/docker/daemon.json内容如下

{ "insecure-registries": ["192.168.8.157"],

"registry-mirrors": ["https://z0o173bp.mirror.aliyuncs.com"]

}重启docker

systemctl daemon-reload

systemctl docker restart

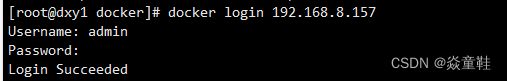

依据提示进行镜像的推送

docker tag docker.io/mysql:5.7 192.168.8.157/library/mysql:5.0

docker push 192.168.8.157/library/mysql:5.0

3.192.168.8.158 搭建Jenkins部署服务器

下载Jenkins并配置好java环境

wget http://mirrors.jenkins.io/war-stable/latest/jenkins.war启动jenkis

nohup java -jar jenkins.war --httpPort=8080 & tail -f nohup.out

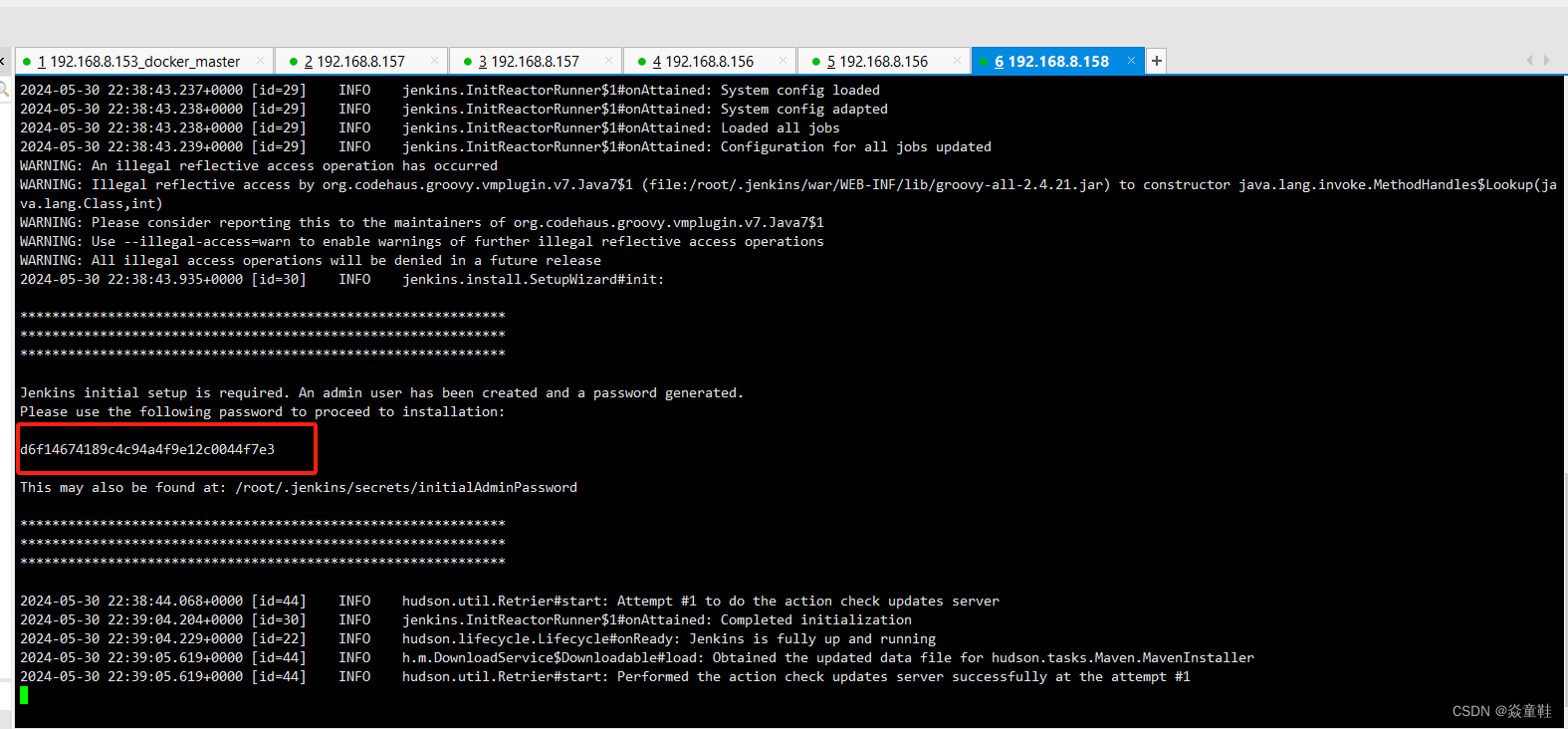

启动后的解锁密码如图忘记可以用如下命令查看

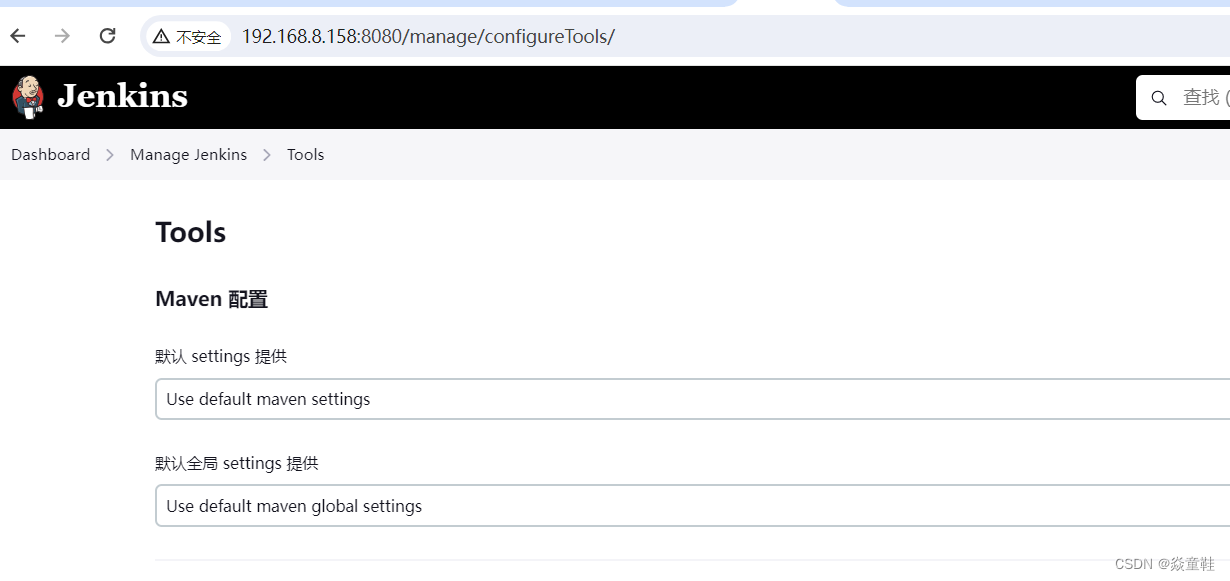

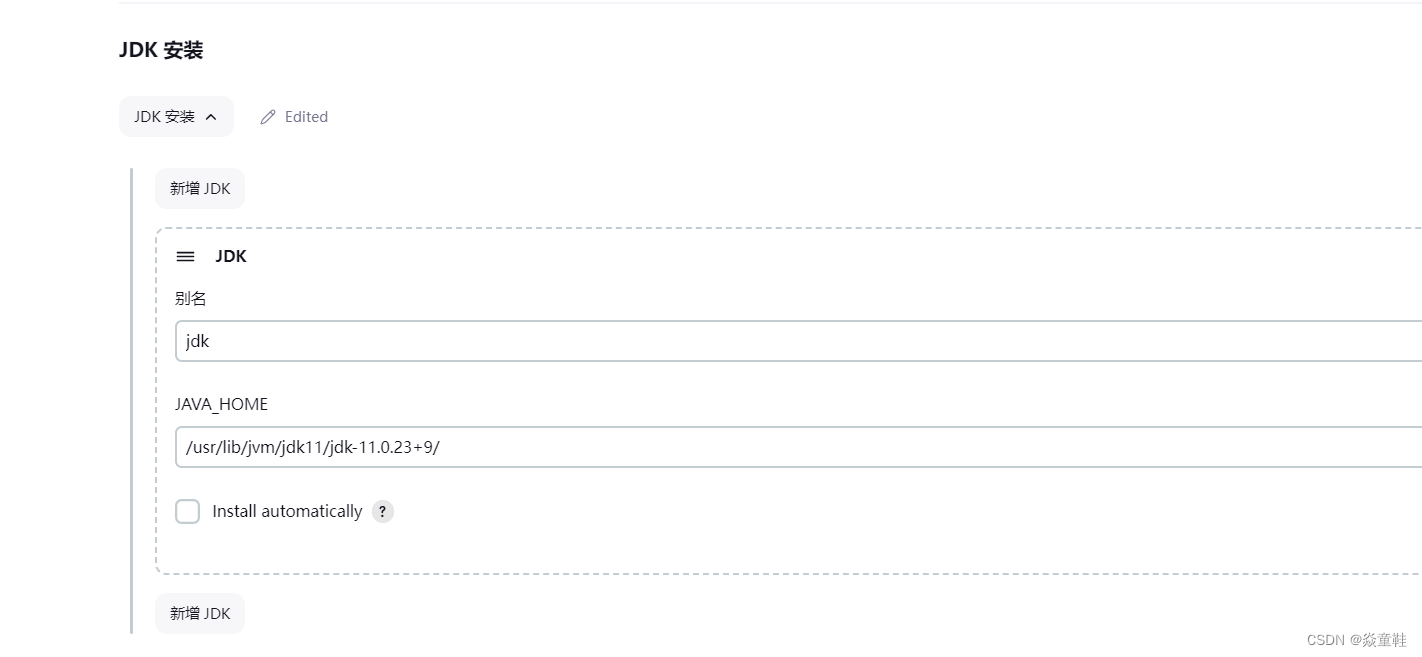

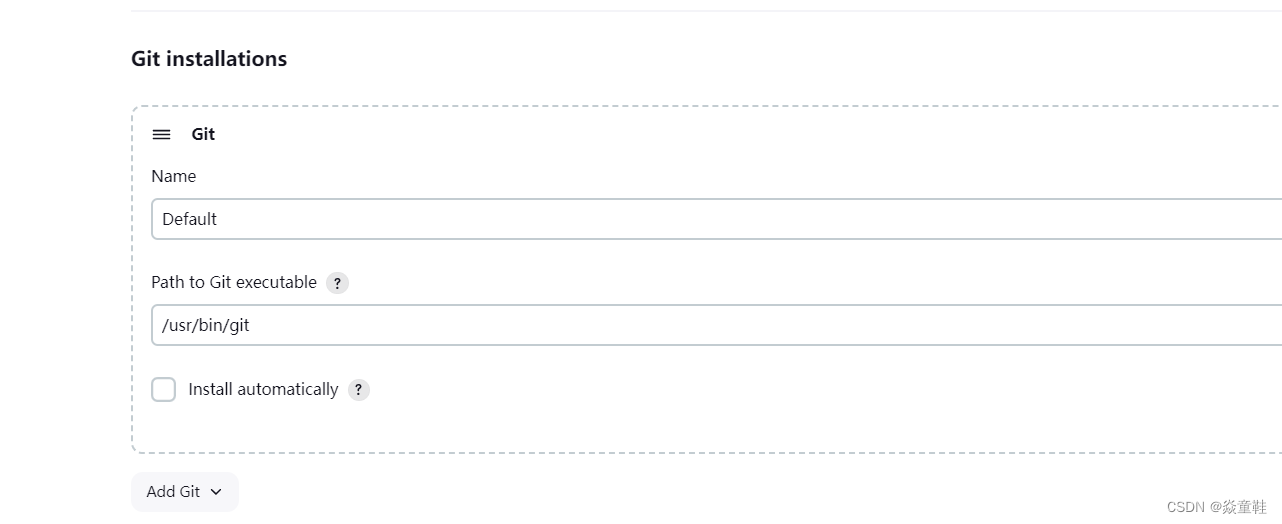

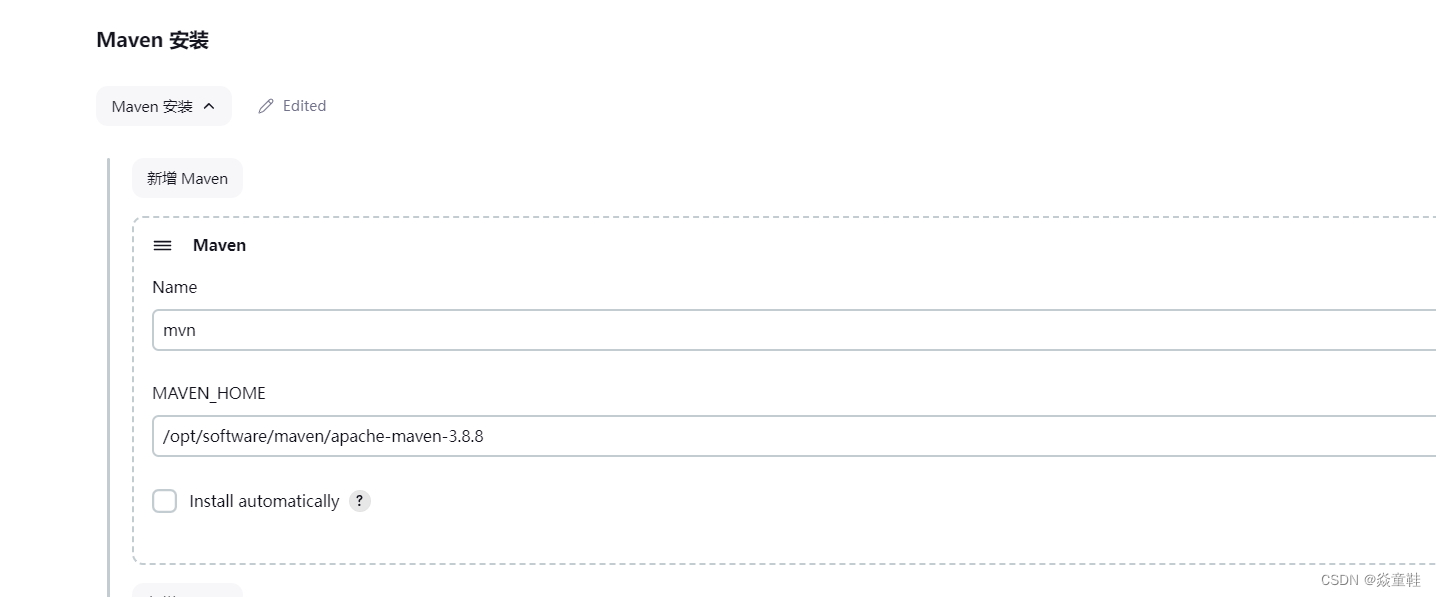

cat /root/.jenkins/secrets/initialAdminPassword安装推荐的插件并配置好maven、git和jdk如下:

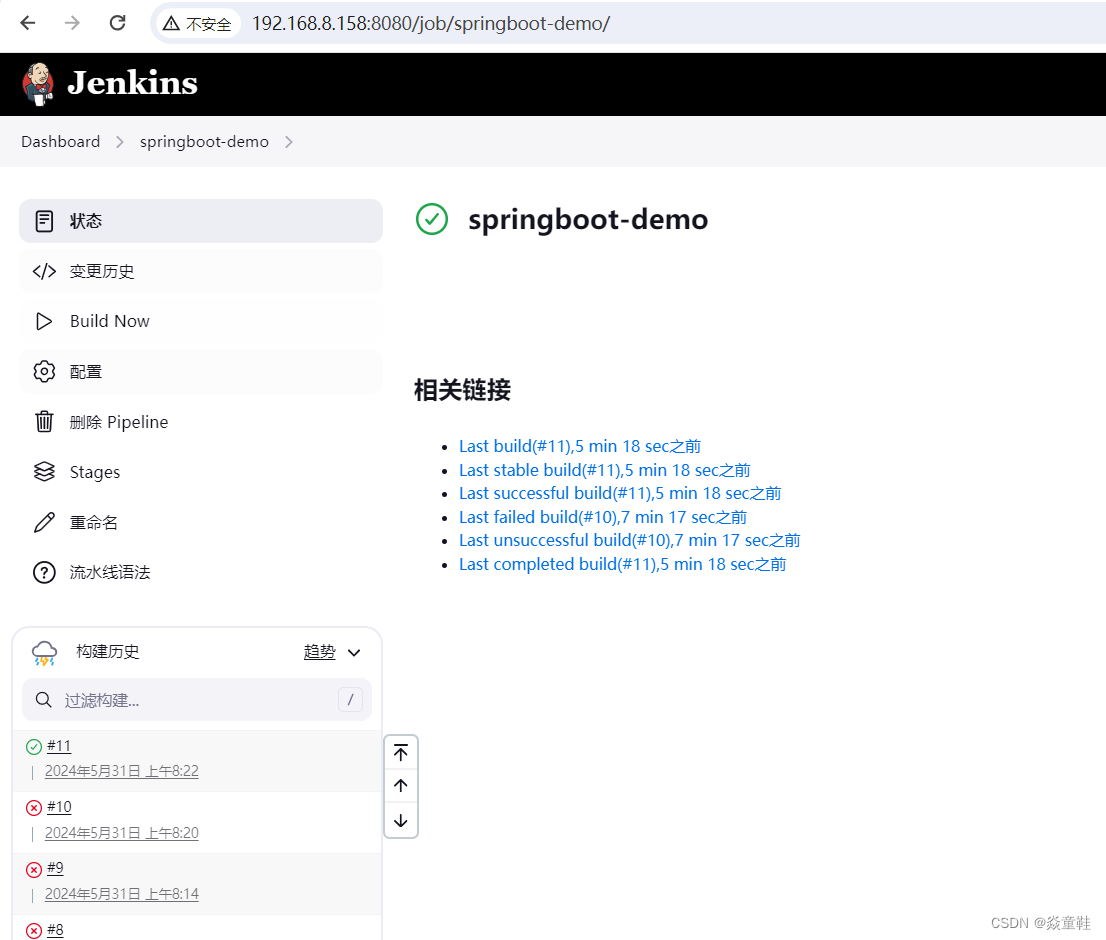

按照完成后可以新建一个流水线测试一下是否可以正常拉取代码和打包构建(Jenkins上需要配置公钥并在gitlab上添加ssh授权key)

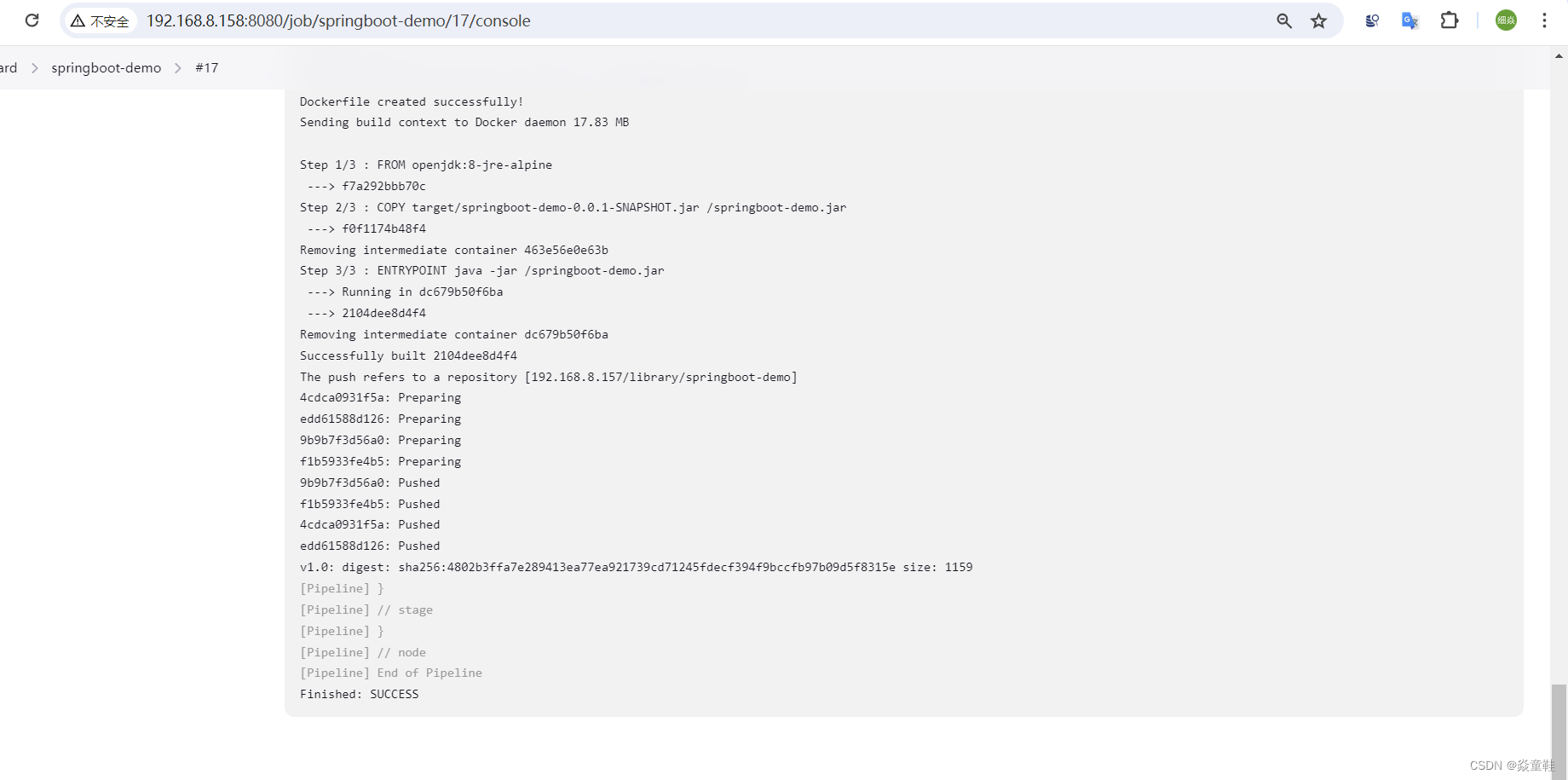

4.将Jenkins构建后的包打成镜像并上传到镜像仓库

在Jenkins的工作目录中新建scripte目录,并加一个把构建后的jar包打包成镜像的脚本文件命名为springboot-demo-build-image.sh如下:

mkdir /root/.jenkins/workspace/scripts/

vi /root/.jenkins/workspace/scripts/springboot-demo-build-image.sh

chmod +x /root/.jenkins/workspace/scripts/*.sh# 进入到springboot-demo目录

cd ../springboot-demo

# 编写Dockerfile文件

cat <<EOF > Dockerfile

FROM openjdk:8-jre-alpine

COPY target/springboot-demo-0.0.1-SNAPSHOT.jar /springboot-demo.jar

ENTRYPOINT ["java","-jar","/springboot-demo.jar"]

EOF

echo "Dockerfile created successfully!"

# 基于指定目录下的Dockerfile构建镜像

docker build -t 192.168.8.157/library/springboot-demo:v1.0 .

# push镜像,这边需要habor镜像仓库登录,在jenkins服务器上登录

docker push 192.168.8.157/library/springboot-demo:v1.0增加流水线的步骤如下:

stage('Build Image') {

sh "/root/.jenkins/workspace/scripts/springboot-demo-build-image.sh"

}

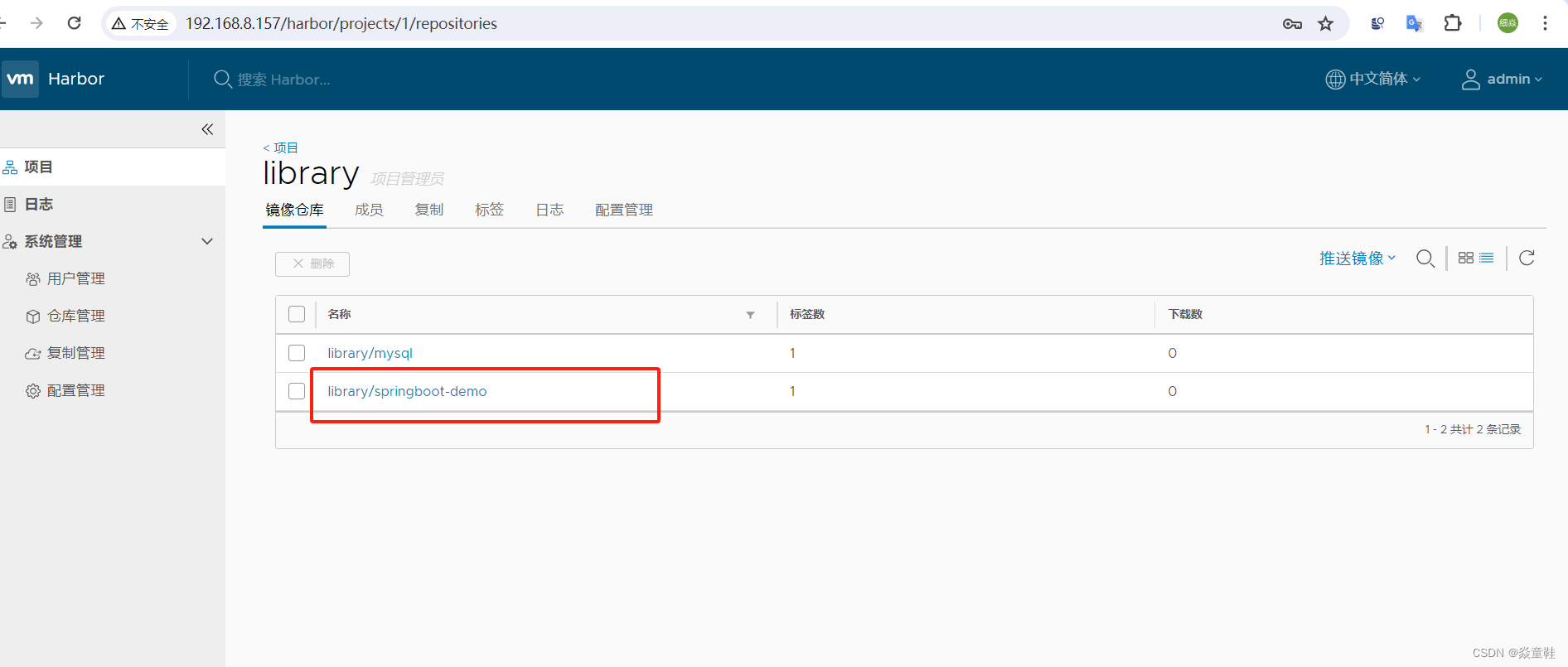

重新构建可以看的流水线上已经把镜像推送到harbor镜像仓库里了

5.k8s从镜像仓库中拉取镜像并部署

5.k8s从镜像仓库中拉取镜像并部署

修改Jenkins步骤增加如下在master节点中操作的步骤

def remote = [:]

remote.name = 'k8s master'

remote.host = '192.168.8.153'

remote.user = 'root'

remote.password = 'dxy666,,'

remote.allowAnyHosts = true

stage('Remote SSH') {

writeFile file: '/opt/project/k8s-deploy-springboot-demo.sh', text: 'ls -lrt'

sshScript remote: remote, script: "/opt/project/k8s-deploy-springboot-demo.sh"

}

在master节点的工作目录/opt/project下创建springboot-demo.yaml用于将镜像拉取并在指定机器上执行

# 以Deployment部署Pod

apiVersion: apps/v1

kind: Deployment

metadata:

name: springboot-demo

spec:

selector:

matchLabels:

app: springboot-demo

replicas: 1

template:

metadata:

labels:

app: springboot-demo

spec:

containers:

- name: springboot-demo

image: 192.168.8.157/library/springboot-demo:v1.0

ports:

- containerPort: 8080

---

# 创建Pod的Service

apiVersion: v1

kind: Service

metadata:

name: springboot-demo

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: springboot-demo

---

# 创建Ingress,定义访问规则

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: springboot-demo

spec:

rules:

- host: springboot.k8s.com

http:

paths:

- path: /

backend:

serviceName: springboot-demo

servicePort: 80创建k8s的inress网络yaml文件指定在w1上

# 确保nginx-controller运行到w1节点上

kubectl label node w1 name=ingress apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: nginx-configuration

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: tcp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap

apiVersion: v1

metadata:

name: udp-services

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRole

metadata:

name: nginx-ingress-clusterrole

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses/status

verbs:

- update

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: Role

metadata:

name: nginx-ingress-role

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

resourceNames:

# Defaults to "<election-id>-<ingress-class>"

# Here: "<ingress-controller-leader>-<nginx>"

# This has to be adapted if you change either parameter

# when launching the nginx-ingress-controller.

- "ingress-controller-leader-nginx"

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- ""

resources:

- endpoints

verbs:

- get

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: RoleBinding

metadata:

name: nginx-ingress-role-nisa-binding

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: nginx-ingress-role

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: nginx-ingress-clusterrole-nisa-binding

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: nginx-ingress-clusterrole

subjects:

- kind: ServiceAccount

name: nginx-ingress-serviceaccount

namespace: ingress-nginx

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-ingress-controller

namespace: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

annotations:

prometheus.io/port: "10254"

prometheus.io/scrape: "true"

spec:

# wait up to five minutes for the drain of connections

terminationGracePeriodSeconds: 300

serviceAccountName: nginx-ingress-serviceaccount

hostNetwork: true

nodeSelector:

name: ingress

kubernetes.io/os: linux

containers:

- name: nginx-ingress-controller

image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.26.1

args:

- /nginx-ingress-controller

- --configmap=$(POD_NAMESPACE)/nginx-configuration

- --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services

- --udp-services-configmap=$(POD_NAMESPACE)/udp-services

- --publish-service=$(POD_NAMESPACE)/ingress-nginx

- --annotations-prefix=nginx.ingress.kubernetes.io

securityContext:

allowPrivilegeEscalation: true

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

# www-data -> 33

runAsUser: 33

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

ports:

- name: http

containerPort: 80

- name: https

containerPort: 443

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 10

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

---

kubectl apply -f mandatory.yaml

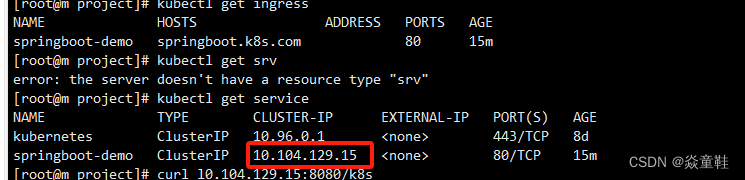

查看对应ingress是否ok

kubectl get ingress

修改host文件中对应域名未指定service对应ip即可

10.104.129.15 springboot.k8s.com

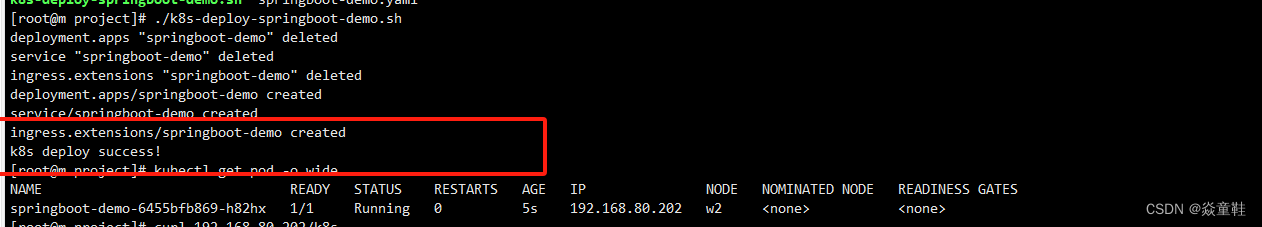

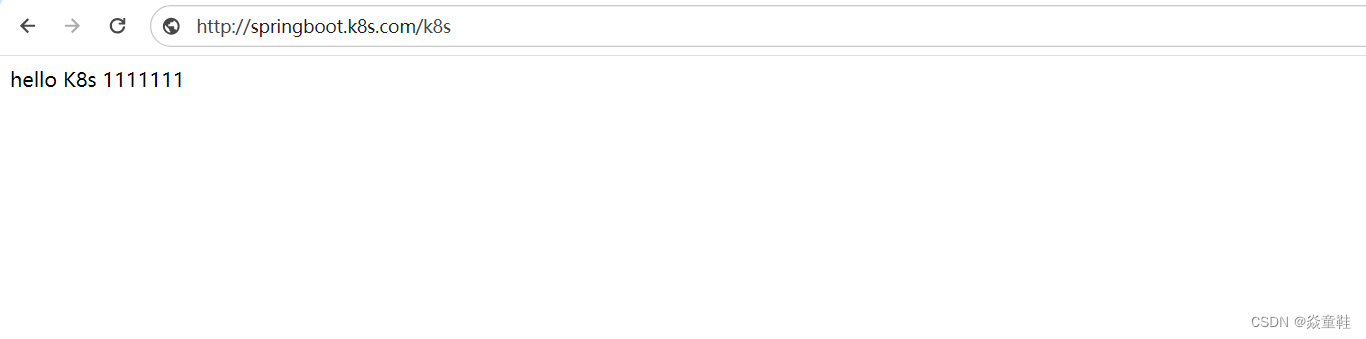

6.访问对应地址查看是否使用了镜像构建

先手动执行一下部署yaml看下是否有格式的问题如下:

再次提交从0000->改成1111

7.遗留问题

gitlab hook无法触发Jenkins自动构建报错如下:

尝试修改-Djava.awt.headless=true -Dhudson.security.csrf.GlobalCrumbIssuerConfiguration.DISABLE_CSRF_PROTECTION=true 后仍旧失败可能是这个版本的Jenkins没有关闭跨站点防护功能,主题流程基本走通,后续有时间在换个版本看看。

1112

1112

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?