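In the previous lesson we covered Flume, a tool for automated data collection. In this lesson we will combine everything we have learned so far and build a small project.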

Part 1: Project Analysis

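The project analyzes the access logs of the Heima training camp (黑马训练营) forum. You can download the log file from http://download.csdn.net/detail/u012453843/9680664. Two sample lines are shown below. Each record carries five fields of interest:

1. the client IP
2. the access time
3. the requested resource path
4. the HTTP status code (200 means success)
5. the traffic (bytes) generated by the request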

27.19.74.143 - - [30/May/2013:17:38:20 +0800] "GET /static/image/common/faq.gif HTTP/1.1" 200 1127

110.52.250.126 - - [30/May/2013:17:38:20 +0800] "GET /data/cache/style_1_widthauto.css?y7a HTTP/1.1" 200 1292

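The most important field is the requested resource path. It tells us which resources users like to visit, so we can promote related products to them in a targeted way.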

Next, let's go over several key website metrics.

Metric 1: Page Views (PV)

Definition: Page views (PV) is the total number of pages viewed by all users. Every time a user opens a page, one view is recorded.

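Analysis: Total page views measure how interested users are in the site, much as ratings do for a TV series. For site operators, however, the page views of each individual section matter more. PV is important, but it can be heavily inflated. For example, if one user writes a program that hits the site ten million times a day, that does not mean the site is successful.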
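To make the definition concrete, here is a minimal Java sketch. It assumes the input is one day of cleaned records in the ip<TAB>logtime<TAB>url layout this project produces later. PV is just the record count. The hypothetical helper topIpShare adds one rough way to spot the inflation described above: it measures how much of the PV comes from the single busiest IP. The sample records are made up for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch only: records are assumed to be cleaned lines "ip\tlogtime\turl" for one day.
class PvStats {
    // PV: every record is one page view.
    static long pageViews(List<String> records) {
        return records.size();
    }

    // Fraction of all page views produced by the single busiest IP.
    // A value close to 1.0 suggests PV is inflated by one client, such as a crawler script.
    static double topIpShare(List<String> records) {
        if (records.isEmpty()) {
            return 0.0;
        }
        Map<String, Long> perIp = new HashMap<>();
        for (String r : records) {
            String ip = r.split("\t", -1)[0];
            perIp.merge(ip, 1L, Long::sum);
        }
        long max = 0;
        for (long c : perIp.values()) {
            max = Math.max(max, c);
        }
        return (double) max / records.size();
    }

    public static void main(String[] args) {
        // Made-up sample records for illustration.
        List<String> day = List.of(
                "27.19.74.143\t20130530173820\tforum.php",
                "27.19.74.143\t20130530173821\tthread-24727-1-1.html",
                "110.52.250.126\t20130530173820\tforum.php",
                "27.19.74.143\t20130530173825\tforum.php");
        System.out.println("PV = " + pageViews(day));            // PV = 4
        System.out.println("top IP share = " + topIpShare(day)); // top IP share = 0.75
    }
}
```

In the project itself, PV is computed in Hive with count(*). The Java version only illustrates the arithmetic.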

Metric 2: Unique Visitors (UV), including new visitors and the new-visitor ratio

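Definition: UV is the number of distinct visitors to the site within one day, identified by cookie. A visitor who comes back several times in one day is counted only once.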

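Analysis: Statistics tools usually report a different number of unique visitors than of IPs, and the visitor count is typically higher. This is because many computers commonly share a single IP address.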

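A second case involves a single computer. If the user clears the browser cache or deletes cookies with a tool such as 360, UV is incremented again the next time that user visits the site from the same computer.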

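For site statistics, another visitor metric worth watching is the number of new visitors, which measures how many users a promotional campaign brings in. The ratio of new visitors to total visitors shows how well the site attracts fresh users and how well it retains existing ones.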
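Here is a small Java sketch of UV, new visitors, and the new-visitor ratio. The access log in this project has no cookie field, so two inputs are hypothetical:

- the visitor IDs stand in for cookie values;
- seenBefore stands for the IDs recorded on earlier days.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Sketch only: visitor IDs are assumed to be cookie values; the log in this project has none.
class VisitorStats {
    // UV: distinct visitor IDs seen during one day.
    static int uniqueVisitors(List<String> visitorIds) {
        return new HashSet<>(visitorIds).size();
    }

    // New visitors: today's distinct IDs that never appeared on an earlier day.
    static int newVisitors(List<String> todayIds, Set<String> seenBefore) {
        Set<String> fresh = new HashSet<>(todayIds);
        fresh.removeAll(seenBefore);
        return fresh.size();
    }

    // New-visitor ratio: new visitors divided by UV.
    static double newVisitorRatio(List<String> todayIds, Set<String> seenBefore) {
        int uv = uniqueVisitors(todayIds);
        return uv == 0 ? 0.0 : (double) newVisitors(todayIds, seenBefore) / uv;
    }

    public static void main(String[] args) {
        List<String> today = List.of("c1", "c2", "c1", "c3"); // c1 visits twice, counted once
        Set<String> history = Set.of("c1");                    // c1 was already seen earlier
        System.out.println(uniqueVisitors(today));             // 3
        System.out.println(newVisitors(today, history));       // 2
        System.out.println(newVisitorRatio(today, history));
    }
}
```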

Metric 3: Number of IPs

Definition: The number of distinct IPs that visited the site in one day. An IP counts as 1 no matter how many pages it visited.

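Analysis: IP counts are imperfect. In practice one public IP is often shared by many computers, so the IP count does not tell us exactly how many people visited. The metric is still useful:

- It is the most familiar concept.
- Regardless of how many computers or users sit behind one IP, the number of distinct IPs is, to some extent, the most direct measure of how well a promotional campaign worked.
- IPs also show which regions send more visits and which send fewer, so we can increase advertising in regions with few IPs.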

Formula: count the distinct IPs.
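In Java, the formula amounts to putting the IP field of each cleaned record into a set and taking its size. This is a minimal sketch that assumes the same ip<TAB>logtime<TAB>url records as before. In Hive, the equivalent would be roughly `select count(distinct ip) from hmbbs where logdate=<day>`.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Sketch only: records are assumed to be cleaned lines "ip\tlogtime\turl" for one day.
class IpCounter {
    // Each IP counts once, no matter how many pages it requested.
    static int distinctIps(List<String> records) {
        Set<String> ips = new HashSet<>();
        for (String r : records) {
            ips.add(r.split("\t", -1)[0]);
        }
        return ips.size();
    }

    public static void main(String[] args) {
        List<String> day = List.of(
                "27.19.74.143\t20130530173820\tforum.php",
                "27.19.74.143\t20130530173821\tthread-24727-1-1.html",
                "110.52.250.126\t20130530173820\tforum.php");
        System.out.println(distinctIps(day)); // 2
    }
}
```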

Metric 4: Bounce Rate

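Definition: The percentage of visits that left the site after viewing only one page, i.e., the number of single-page visits divided by the total number of visits.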

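Analysis: Bounce rate is a key measure of visitor stickiness and shows how interested visitors are in the site. The lower the bounce rate, the better the traffic quality: visitors are more interested in the content and more likely to become active, loyal users.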

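The metric also measures the effect of online marketing. It shows how many visitors were drawn to a product page or to the site by marketing and were then lost again, a sure thing that slipped away. For example, suppose the site advertises on some media outlet. The bounce rate of visitors arriving from that source reveals three things:

- whether the outlet was a good choice;
- whether the ad copy was well written;
- whether the landing page offers a good user experience.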

Formula: (1) Count the IPs that appear in exactly one record during the day; this is the bounce count.

(2) Bounce rate = bounce count / PV. Here PV serves as an approximation of the total number of visits.
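The two steps of the formula can be sketched in Java as follows. The sketch again assumes cleaned ip<TAB>logtime<TAB>url records for a single day. It follows the article's simplification: IPs stand in for visits, and PV stands in for the total number of visits.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch only: records are assumed to be cleaned lines "ip\tlogtime\turl" for one day.
class BounceRate {
    // Step (1): the number of IPs that appear in exactly one record.
    static long bounceCount(List<String> records) {
        Map<String, Integer> perIp = new HashMap<>();
        for (String r : records) {
            perIp.merge(r.split("\t", -1)[0], 1, Integer::sum);
        }
        return perIp.values().stream().filter(c -> c == 1).count();
    }

    // Step (2): bounce count divided by PV (the number of records).
    static double bounceRate(List<String> records) {
        return records.isEmpty() ? 0.0 : (double) bounceCount(records) / records.size();
    }

    public static void main(String[] args) {
        List<String> day = List.of(
                "27.19.74.143\t20130530173820\tforum.php",
                "27.19.74.143\t20130530173821\tthread-24727-1-1.html",
                "110.52.250.126\t20130530173820\tforum.php",
                "8.35.201.165\t20130530173822\tforum.php");
        System.out.println(bounceCount(day)); // 2
        System.out.println(bounceRate(day));  // 0.5
    }
}
```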

Metric 5: Section Popularity Ranking

Definition: A ranking of forum sections by how much they are visited.

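Analysis: Consolidate the lead of popular sections and strengthen the quieter ones. The ranking also informs which course subjects to invest in.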

Formula: Count the visits and the time spent per section, then sort.
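Below is a hedged Java sketch of the visit-count half of the ranking. How a URL maps to a section is an assumption here: the hypothetical sectionOf treats URLs of the form forum-<id>-<page>.html as belonging to section <id> and ignores everything else. Time spent per section would require reconstructing per-IP sessions from the timestamps, so it is left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Sketch only: the URL-to-section rule below is a hypothetical assumption.
class SectionRanking {
    // Hypothetical rule: "forum-58-1.html" belongs to section "58"; other URLs have no section.
    static Optional<String> sectionOf(String url) {
        if (!url.startsWith("forum-")) {
            return Optional.empty();
        }
        String[] parts = url.split("-");
        return parts.length >= 2 ? Optional.of(parts[1]) : Optional.empty();
    }

    // Count visits per section and sort by count (descending), then by section id.
    static List<Map.Entry<String, Long>> rank(List<String> urls) {
        Map<String, Long> counts = new HashMap<>();
        for (String u : urls) {
            sectionOf(u).ifPresent(s -> counts.merge(s, 1L, Long::sum));
        }
        List<Map.Entry<String, Long>> ranking = new ArrayList<>(counts.entrySet());
        ranking.sort(Map.Entry.<String, Long>comparingByValue().reversed()
                .thenComparing(Map.Entry.<String, Long>comparingByKey()));
        return ranking;
    }

    public static void main(String[] args) {
        List<String> urls = List.of("forum-58-1.html", "forum-58-2.html",
                "forum-2-1.html", "thread-24727-1-1.html");
        System.out.println(rank(urls)); // [58=2, 2=1]
    }
}
```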

Part 2: Development Steps

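1. Flume (collect the data)

2. Clean the data (with a MapReduce job)

3. Use Hive for multi-dimensional analysis of the data

4. Export the Hive results to MySQL with Sqoop

5. Provide a visualization tool for users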

Part 3: Hands-on

First, drop the Hive tables we created earlier so they don't affect our results. As shown in the figure below, go to Hive's bin directory and start Hive with ./hive.

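After dropping the old tables, create a new one, as shown below. It is an external table with these properties:

- it is partitioned by logdate;
- its column separator is '\t';
- its data lives in the /cleaned directory in HDFS, which is where the cleaned data will go.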

hive> create external table hmbbs (ip string,logtime string,url string) partitioned by (logdate string) row format delimited fields terminated by '\t' location '/cleaned';

OK

Time taken: 0.391 seconds

hive>
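The table is declared with fields terminated by '\t' and partitioned by logdate. As a result, each line under /cleaned/<date>/ supplies only ip, logtime and url, while logdate comes from the partition the directory is registered as. The Java sketch below models that mapping for one line. The class HmbbsRow is hypothetical. Treating missing trailing fields as null and ignoring extra ones is assumed to match Hive's handling of delimited text.

```java
// Sketch only: a hypothetical model of one row of hmbbs, not a Hive API.
class HmbbsRow {
    final String ip;
    final String logtime;
    final String url;
    final String logdate; // partition column: taken from the directory, not from the file

    HmbbsRow(String ip, String logtime, String url, String logdate) {
        this.ip = ip;
        this.logtime = logtime;
        this.url = url;
        this.logdate = logdate;
    }

    // Split one line of a file under /cleaned/<logdate>/ on '\t'.
    // Assumed Hive-like behaviour: missing trailing fields become null, extra fields are ignored.
    static HmbbsRow fromLine(String line, String logdate) {
        String[] f = line.split("\t", -1);
        return new HmbbsRow(field(f, 0), field(f, 1), field(f, 2), logdate);
    }

    private static String field(String[] f, int i) {
        return i < f.length ? f[i] : null;
    }

    public static void main(String[] args) {
        HmbbsRow row = fromLine("27.19.74.143\t20130530173820\tdata/cache/common_smilies_var.js?y7a", "20161109");
        System.out.println(row.ip + " " + row.logdate); // 27.19.74.143 20161109
    }
}
```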

The location of cleaned in HDFS is shown in the figure below.

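Once the Hive table has been created, the metastore holds the corresponding metadata, as shown below.

Next, create an empty script, daily.sh, in the home directory. It will drive the daily processing steps: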

[root@itcast03 ~]# vim daily.sh

~

"daily.sh" [New] 0L, 0C written

[root@itcast03 ~]# ls

anaconda-ks.cfg hbase-0.96.2-hadoop2-bin.tar.gz NationUDF.jar test.sh b.c install.log pep.txt time b.j install.log.syslog Pictures Videos daily.sh jdk-7u80-linux-x64.gz Public wc.txt Desktop logs Documents Music student.txt Downloads mysql-connector-java-5.1.40-bin.jar Templates

[root@itcast03 ~]#

A newly created daily.sh is not executable. As the listing below shows, it only has read and write permissions.

[root@itcast03 ~]# ls -l

total 293608

-rw-------. 1 root root 3390 Oct 23 05:52 anaconda-ks.cfg

-rw-r--r--. 1 root root 42 Nov 6 16:22 b.c

-rw-r--r--. 1 root root 51 Nov 6 16:25 b.j

-rw-r--r--. 1 root root 0 Nov 12 00:31 daily.sh

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Desktop

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Documents

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Downloads

-rw-r--r--. 1 root root 79367504 Oct 30 18:54 hbase-0.96.2-hadoop2-bin.tar.gz

-rw-r--r--. 1 root root 41918 Oct 23 05:52 install.log

-rw-r--r--. 1 root root 14303 Oct 23 05:52 install.log.syslog

-rw-r--r--. 1 root root 153530841 Oct 23 08:36 jdk-7u80-linux-x64.gz

-rw-r--r--. 1 root root 3965 Oct 29 23:45 logs

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Music

-rw-r--r--. 1 root root 990927 Oct 28 09:11 mysql-connector-java-5.1.40-bin.jar

-rw-r--r--. 1 root root 49649292 Nov 7 22:13 NationUDF.jar

-rw-r--r--. 1 root root 16 Nov 6 14:49 pep.txt

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Pictures

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Public

-rw-r--r--. 1 root root 16870735 Oct 28 06:40 sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

-rw-r--r--. 1 root root 28 Nov 6 12:37 student.txt

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Templates

-rwxr--r--. 1 root root 317 Oct 30 00:22 test.sh

-rw-r--r--. 1 root root 98542 Nov 12 00:51 time

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Videos

-rw-r--r--. 1 root root 56 Oct 29 23:11 wc.txt

[root@itcast03 ~]#

Add execute permission with chmod +x and list the files again. daily.sh is now executable.

[root@itcast03 ~]#chmod +x daily.sh

[root@itcast03 ~]# ls -l

total 293608

-rw-------. 1 root root 3390 Oct 23 05:52 anaconda-ks.cfg

-rw-r--r--. 1 root root 42 Nov 6 16:22 b.c

-rw-r--r--. 1 root root 51 Nov 6 16:25 b.j

-rwxr-xr-x. 1 root root 0 Nov 12 00:31 daily.sh

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Desktop

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Documents

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Downloads

-rw-r--r--. 1 root root 79367504 Oct 30 18:54 hbase-0.96.2-hadoop2-bin.tar.gz

-rw-r--r--. 1 root root 41918 Oct 23 05:52 install.log

-rw-r--r--. 1 root root 14303 Oct 23 05:52 install.log.syslog

-rw-r--r--. 1 root root 153530841 Oct 23 08:36 jdk-7u80-linux-x64.gz

-rw-r--r--. 1 root root 3965 Oct 29 23:45 logs

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Music

-rw-r--r--. 1 root root 990927 Oct 28 09:11 mysql-connector-java-5.1.40-bin.jar

-rw-r--r--. 1 root root 49649292 Nov 7 22:13 NationUDF.jar

-rw-r--r--. 1 root root 16 Nov 6 14:49 pep.txt

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Pictures

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Public

-rw-r--r--. 1 root root 16870735 Oct 28 06:40 sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

-rw-r--r--. 1 root root 28 Nov 6 12:37 student.txt

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Templates

-rwxr--r--. 1 root root 317 Oct 30 00:22 test.sh

-rw-r--r--. 1 root root 98629 Nov 12 00:54 time

drwxr-xr-x. 2 root root 4096 Oct 23 06:13 Videos

-rw-r--r--. 1 root root 56 Oct 29 23:11 wc.txt

[root@itcast03 ~]#

Next, we need a MapReduce program to clean the data. The code is as follows:

package com.mapreduce.clean;

import java.io.IOException;

import java.text.SimpleDateFormat;

import java.util.Locale;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

/**

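* Why does Cleaner extend Configured? Because we want to implement the Tool interface, and Tool extends the Configurable interface,

* which declares two methods: void setConf(Configuration conf) and Configuration

* getConf().

* If we implemented Tool directly, we would have to implement three methods ourselves. Configured already implements both

* Configurable methods, so for convenience we extend Configured and only implement Tool's run method.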

*

* @author wanghaijie

*

*/

public class Cleaner extends Configured implements Tool {

/**

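* run is the one method declared by the Tool interface itself; ToolRunner calls it after applying the generic Hadoop command-line options to the Configuration.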

*/

@Override

public int run(String[] args) throws Exception {

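// The first argument is the input path, i.e. where the raw log data is stored (final is optional; run is not a separate thread)

final String inputPath = args[0];

// The second argument is the output path, i.e. where the cleaned data will be written

final String outPath = args[1];

// Use the Configuration prepared by ToolRunner, which already contains any generic options such as -D key=value;

// calling new Configuration() here would silently discard them

final Configuration conf = getConf();

//Get a job instance

final Job job=Job.getInstance(conf);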

//Tell Hadoop which jar to distribute to the cluster: the one containing Cleaner.class

job.setJarByClass(Cleaner.class);

//Pass the raw-data path from the command line to the job so it knows where to read from

FileInputFormat.setInputPaths(job, inputPath);

//Register our own Mapper class

job.setMapperClass(MyMapper.class);

//Map output key (k2): we reuse the input key, which is the byte offset of the line in the file (not a line number)

job.setMapOutputKeyClass(LongWritable.class);

//Map output value (v2): each cleaned record is still one line of text, so it is emitted as Text

job.setMapOutputValueClass(Text.class);

//Register our own Reducer class

job.setReducerClass(MyReducer.class);

//Final output key (k3): the type of key the Reducer emits; the cleaned line is Text

job.setOutputKeyClass(Text.class);

//Final output value (v3): the cleaned line is already emitted as the key and nothing else is needed,

//so the value type is NullWritable

job.setOutputValueClass(NullWritable.class);

//Tell the job under which HDFS path to write the cleaned data

FileOutputFormat.setOutputPath(job, new Path(outPath));

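//Wait for the job to finish; true means progress is printed for the user

//Return 0 on success and 1 on failure so that callers such as daily.sh can check the exit code

return job.waitForCompletion(true) ? 0 : 1;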

}

public static void main(String[] args) throws Exception{

System.exit(ToolRunner.run(new Cleaner(), args));

}

/**

* A custom Mapper: its input is <k1,v1> and its output is <k2,v2>

* @author wanghaijie

*

*/

static class MyMapper extends Mapper<LongWritable,Text,LongWritable,Text>{

//Parser that turns a raw log line into fields

LogParser parser=new LogParser();

//v2 is created once outside map() and reused for every call, to avoid allocating a new object per record

Text v2=new Text();

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, LongWritable, Text>.Context context)

throws IOException, InterruptedException {

//Get one line of the log file (value is v1)

final String line=value.toString();

//Parse the line into an array of fields

final String[] parsed=parser.parse(line);

//Element 0 is the IP

final String ip=parsed[0];

//Element 1 is the time the log entry was produced

final String logtime=parsed[1];

//Element 2 is the request string (method, path and protocol)

String url=parsed[2];

//Drop requests that start with "GET /static" or "GET /uc_server" (for this project we treat them as bad data)

if(url.startsWith("GET /static") || url.startsWith("GET /uc_server")){

return;

}

//For GET requests, keep the part between "GET /" and " HTTP/1.1". For "GET /data/cache/style_1_widthauto.css?y7a HTTP/1.1"

//this yields "data/cache/style_1_widthauto.css?y7a": the +1 also drops the leading "/"

if(url.startsWith("GET")){

url=url.substring("GET ".length()+1, url.length()-" HTTP/1.1".length());

}

//For POST requests, keep the part between "POST /" and the protocol. For "POST /api/manyou/my.php HTTP/1.0"

//this yields "api/manyou/my.php", because " HTTP/1.0" has the same length as " HTTP/1.1"

if(url.startsWith("POST")){

url=url.substring("POST ".length()+1,url.length()-" HTTP/1.1".length());

}

//v2 has the form: ip<TAB>logtime<TAB>url

v2.set(ip+"\t"+logtime+"\t"+url);

//k2 is the same as k1: the byte offset of the line

context.write(key, v2);

}

}

/**

* A custom Reducer: its input is <k2,v2> and its output is <k3,v3>

* @author wanghaijie

*

*/

static class MyReducer extends Reducer<LongWritable, Text,Text,NullWritable>{

@Override

protected void reduce(LongWritable k2, Iterable<Text> v2s,

Reducer<LongWritable, Text, Text, NullWritable>.Context context) throws IOException, InterruptedException {

//The Reducer's work is simple: emit each v2 as k3 (Text). v3 is not needed, so we use the serializable

//null value, NullWritable.get()

for(Text v2:v2s){

context.write(v2, NullWritable.get());

}

}

}

}

class LogParser{

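//FORMAT parses the English timestamp in the log, which looks like [30/May/2013:17:38:20 +0800]

//SimpleDateFormat is not thread-safe; sharing these static instances is fine as long as each map task parses on a single thread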

public static final SimpleDateFormat FORMAT = new SimpleDateFormat("d/MMM/yyyy:HH:mm:ss", Locale.ENGLISH);

//DATEFORMAT is the target format, e.g. 20130530173820

public static final SimpleDateFormat DATEFORMAT=new SimpleDateFormat("yyyyMMddHHmmss");

public static void main(String[] args){

final String s1="27.19.74.143 - - [30/May/2013:17:38:20 +0800] \"GET /static/image/common/faq.gif HTTP/1.1\" 200 1127";

LogParser parser=new LogParser();

final String[] array=parser.parse(s1);

System.out.println("Sample: "+s1);

System.out.format("Parsed: ip=%s, time=%s, url=%s, status=%s, traffic=%s%n",array[0],array[1],array[2],array[3],array[4]);

}

/**

* Parses one log line

* @param line

* @return an array of 5 elements: ip, time, url, status, traffic

*/

public String[] parse(String line){

String ip=parseIP(line);

String time;

try{

time=parseTime(line);

}catch(Exception e){

time="null";

}

String url;

try{

url=parseURL(line);

}catch(Exception e){

url="null";

}

String status=parseStatus(line);

String traffic=parseTraffic(line);

return new String[]{ip,time,url,status,traffic};

}

/**

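* Extracts the traffic (bytes) consumed by this request.

* The relevant part of the line looks like: "GET /static/image/common/faq.gif HTTP/1.1" 200 1127

* We want 1127: take everything after the last double quote and trim it, which leaves "200 1127";

* splitting "200 1127" on a space, the second element is "1127"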

* @param line

* @return

*/

private String parseTraffic(String line){

final String trim=line.substring(line.lastIndexOf("\"")+1).trim();

String traffic=trim.split(" ")[1];

return traffic;

}

/**

* Extracts the response status.

* The relevant part of the line looks like: "GET /static/image/common/faq.gif HTTP/1.1" 200 1127

* We want 200: take everything after the last double quote and trim it, which leaves "200 1127";

* splitting "200 1127" on a space, the first element is "200"

* @param line

* @return

*/

private String parseStatus(String line){

String trim;

try{

trim=line.substring(line.lastIndexOf("\"")+1).trim();

}catch(Exception e){

trim="null";

}

String status=trim.split(" ")[0];

return status;

}

/**

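* Extracts the request string from the line.

* The relevant part looks like: "GET /static/image/common/faq.gif HTTP/1.1"

* We take the text after the first double quote up to the last double quote (substring includes the start index and excludes the end index)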

* @param line

* @return

*/

private String parseURL(String line){

final int first=line.indexOf("\"");

final int last=line.lastIndexOf("\"");

String url=line.substring(first+1,last);

return url;

}

/**

* Converts the English timestamp into the form 20130530173820.

* The relevant part of the line looks like [30/May/2013:17:38:20 +0800]. We cut from the character after "["

* up to "+0800]" and trim, which gives the English time "30/May/2013:17:38:20".

* FORMAT.parse turns that string into a Date, and DATEFORMAT.format turns the Date into "20130530173820"

* @param line

* @return

*/

private String parseTime(String line){

final int first=line.indexOf("[");

final int last=line.indexOf("+0800]");

String time=line.substring(first+1,last).trim();

try{

return DATEFORMAT.format(FORMAT.parse(time));

}catch(Exception e){

e.printStackTrace();

}

return "";

}

/**

* Extracts the IP. The line starts like: 27.19.74.143 - - [30/May/2013:17:38:20 +0800]

* Splitting on "- -", the first element (trimmed) is the IP

* @param line

* @return

*/

private String parseIP(String line){

String ip=line.split("- -")[0].trim();

return ip;

}

}
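The URL trimming relies on fixed offsets, so it is worth checking the rules without a cluster. The sketch below copies the filter and substring logic of MyMapper.map into a plain Java method so it can be run locally. UrlTrimCheck is a hypothetical name.

```java
// Sketch only: a Hadoop-free copy of MyMapper's filtering and trimming rules.
class UrlTrimCheck {
    // Returns null when the mapper would drop the record.
    static String clean(String request) {
        if (request.startsWith("GET /static") || request.startsWith("GET /uc_server")) {
            return null;
        }
        if (request.startsWith("GET")) {
            // "GET ".length() + 1 == 5, which also skips the leading "/"
            return request.substring("GET ".length() + 1, request.length() - " HTTP/1.1".length());
        }
        if (request.startsWith("POST")) {
            return request.substring("POST ".length() + 1, request.length() - " HTTP/1.1".length());
        }
        return request; // e.g. "null" when LogParser found no quoted request
    }

    public static void main(String[] args) {
        System.out.println(clean("GET /static/image/common/faq.gif HTTP/1.1"));         // null
        System.out.println(clean("GET /data/cache/style_1_widthauto.css?y7a HTTP/1.1")); // data/cache/style_1_widthauto.css?y7a
        System.out.println(clean("POST /api/manyou/my.php HTTP/1.0"));                   // api/manyou/my.php
    }
}
```

Two caveats follow from running it:

- The trimmed paths have no leading "/", which matches the Hive query results later in this article.
- A request shorter than the protocol suffix (for example "GET /x" with no protocol) makes substring throw StringIndexOutOfBoundsException, which would fail the map task.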

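Next, package the project that contains the MapReduce classes into a jar. To make the jar easier to run, I set its main class (entry point) while packaging. The process is shown in the figure below.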

After packaging, copy Cleaner.jar to the itcast03 server, as shown in the figure below.

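Let's first test whether the jar works. Before that, the log data must be in HDFS. I have already uploaded it: under the HDFS root there is a flume directory with a 20161109 subdirectory that holds the log file, as shown in the figure below. I collected the data with Flume. If you don't know how to do that, see the post http://blog.csdn.net/u012453843/article/details/53091240.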

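With the jar and the raw data in place, let's check that the jar really works. The run output is shown below. Because the main class was set when packaging, we don't need to name it on the command line. We only pass two directories:

- the input directory with the raw data, here /flume/20161109;
- the output directory for the cleaned data, here a directory named out1112, also under flume.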

[root@itcast03 ~]#hadoop jar Cleaner.jar /flume/20161109 /flume/out1112

16/11/12 17:13:53 INFO client.RMProxy: Connecting to ResourceManager at itcast03/169.254.254.30:8032

16/11/12 17:13:53 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

16/11/12 17:13:54 INFO input.FileInputFormat: Total input paths to process : 1

16/11/12 17:13:54 INFO mapreduce.JobSubmitter: number of splits:1

16/11/12 17:13:54 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.mapoutput.value.class is deprecated. Instead, use mapreduce.map.output.value.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

16/11/12 17:13:54 INFO Configuration.deprecation: mapreduce.reduce.class is deprecated. Instead, use mapreduce.job.reduce.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.mapoutput.key.class is deprecated. Instead, use mapreduce.map.output.key.class

16/11/12 17:13:54 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir

16/11/12 17:13:54 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1478920720232_0002

16/11/12 17:13:54 INFO impl.YarnClientImpl: Submitted application application_1478920720232_0002 to ResourceManager at itcast03/169.254.254.30:8032

16/11/12 17:13:54 INFO mapreduce.Job: The url to track the job: http://itcast03:8088/proxy/application_1478920720232_0002/

16/11/12 17:13:54 INFO mapreduce.Job: Running job: job_1478920720232_0002

16/11/12 17:14:02 INFO mapreduce.Job: Job job_1478920720232_0002 running in uber mode : false

16/11/12 17:14:02 INFO mapreduce.Job: map 0% reduce 0%

16/11/12 17:14:13 INFO mapreduce.Job: map 100% reduce 0%

16/11/12 17:14:20 INFO mapreduce.Job: map 100% reduce 100%

16/11/12 17:14:21 INFO mapreduce.Job: Job job_1478920720232_0002 completed successfully

16/11/12 17:14:21 INFO mapreduce.Job: Counters: 43

File System Counters

FILE: Number of bytes read=14465214

FILE: Number of bytes written=29091773

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=61084306

HDFS: Number of bytes written=12756677

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=8929

Total time spent by all reduces in occupied slots (ms)=4877

Map-Reduce Framework

Map input records=548162

Map output records=169859

Map output bytes=14120449

Map output materialized bytes=14465214

Input split bytes=112

Combine input records=0

Combine output records=0

Reduce input groups=169859

Reduce shuffle bytes=14465214

Reduce input records=169859

Reduce output records=169859

Spilled Records=339718

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=456

CPU time spent (ms)=8750

Physical memory (bytes) snapshot=298487808

Virtual memory (bytes) snapshot=1680007168

Total committed heap usage (bytes)=150654976

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=61084194

File Output Format Counters

Bytes Written=12756677

[root@itcast03 ~]#

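After the command finishes, check in HDFS whether out1112 was created under flume and whether it contains the cleaned data. As shown in the figure below, it does: only the three columns we need remain, namely IP, time, and request path. The counters above also show the effect of the filter: 548162 input lines (Map input records) were reduced to 169859 output records.

The WARN line about command-line option parsing is typically printed when the job's Configuration did not come from ToolRunner, for example when run builds a new Configuration() instead of calling getConf().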

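Next, let's call Cleaner.jar from a script. We define a date variable so the date never has to be edited by hand, which lets the script run automatically on a schedule. The variable is named CURRENT, as shown below; echo $CURRENT is only there to test it. %y prints the last two digits of the year (16 for 2016), while %Y prints all four digits.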

[root@itcast03 ~]#vim daily.sh

CURRENT=`date +%Y%m%d`

echo $CURRENT

First, let's check that the variable produces the value we want. As shown below, it prints today's date.

[root@itcast03 ~]#./daily.sh

20161112

To get yesterday's date instead, write it like this:

[root@itcast03 ~]#vim daily.sh

CURRENT=`date -d "1 day ago" +%Y%m%d`

echo $CURRENT

Run daily.sh again. As shown below, it now prints yesterday's date.

[root@itcast03 ~]#./daily.sh

20161111
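The date arithmetic in daily.sh can be mirrored in Java with java.time, which may help if the job is later driven from Java instead of a shell script. This is a sketch: dayDir is a hypothetical helper. LocalDate.now() uses the JVM's default time zone, much as date uses the system time zone.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Sketch only: dayDir is a hypothetical helper mirroring the date calls in daily.sh.
class DailyDir {
    private static final DateTimeFormatter YYYYMMDD = DateTimeFormatter.ofPattern("yyyyMMdd");

    // daysAgo = 0 matches `date +%Y%m%d`; daysAgo = 1 matches `date -d "1 day ago" +%Y%m%d`.
    static String dayDir(LocalDate today, int daysAgo) {
        return today.minusDays(daysAgo).format(YYYYMMDD);
    }

    public static void main(String[] args) {
        LocalDate nov12 = LocalDate.of(2016, 11, 12);
        System.out.println(dayDir(nov12, 0)); // 20161112
        System.out.println(dayDir(nov12, 1)); // 20161111
        System.out.println(dayDir(nov12, 3)); // 20161109
        System.out.println("/flume/" + dayDir(LocalDate.now(), 3));
    }
}
```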

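Now let the script run Cleaner.jar; the commands are below. I use the date 3 days ago because that is the day for which my HDFS holds raw data collected by Flume (directory "20161109"). You can use any date, as long as the corresponding directory exists. The cleaned data goes into a date-named directory under /cleaned. Also note that the hadoop command lives in the bin directory of hadoop-2.2.0. The safest approach is to give absolute paths for both hadoop and Cleaner.jar, so the script does not depend on the current directory or on PATH.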

[root@itcast03 ~]#vim daily.sh

CURRENT=`date -d "3 day ago" +%Y%m%d`

/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

Now run the daily.sh script. As shown below, it succeeds.

[root@itcast03 ~]# ./daily.sh

16/11/12 21:40:29 INFO client.RMProxy: Connecting to ResourceManager at itcast03/169.254.254.30:8032

16/11/12 21:40:30 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

16/11/12 21:40:30 INFO input.FileInputFormat: Total input paths to process : 1

16/11/12 21:40:30 INFO mapreduce.JobSubmitter: number of splits:1

16/11/12 21:40:30 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.output.value.class is deprecated. Instead, use mapreduce.job.output.value.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.mapoutput.value.class is deprecated. Instead, use mapreduce.map.output.value.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

16/11/12 21:40:30 INFO Configuration.deprecation: mapreduce.reduce.class is deprecated. Instead, use mapreduce.job.reduce.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.output.key.class is deprecated. Instead, use mapreduce.job.output.key.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.mapoutput.key.class is deprecated. Instead, use mapreduce.map.output.key.class

16/11/12 21:40:30 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir

16/11/12 21:40:30 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1478920720232_0004

16/11/12 21:40:31 INFO impl.YarnClientImpl: Submitted application application_1478920720232_0004 to ResourceManager at itcast03/169.254.254.30:8032

16/11/12 21:40:31 INFO mapreduce.Job: The url to track the job: http://itcast03:8088/proxy/application_1478920720232_0004/

16/11/12 21:40:31 INFO mapreduce.Job: Running job: job_1478920720232_0004

16/11/12 21:40:37 INFO mapreduce.Job: Job job_1478920720232_0004 running in uber mode : false

16/11/12 21:40:37 INFO mapreduce.Job: map 0% reduce 0%

16/11/12 21:40:48 INFO mapreduce.Job: map 100% reduce 0%

16/11/12 21:40:56 INFO mapreduce.Job: map 100% reduce 100%

16/11/12 21:40:56 INFO mapreduce.Job: Job job_1478920720232_0004 completed successfully

16/11/12 21:40:57 INFO mapreduce.Job: Counters: 43

File System Counters

FILE: Number of bytes read=14465214

FILE: Number of bytes written=29091779

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=61084306

HDFS: Number of bytes written=12756677

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=9129

Total time spent by all reduces in occupied slots (ms)=4909

Map-Reduce Framework

Map input records=548162

Map output records=169859

Map output bytes=14120449

Map output materialized bytes=14465214

Input split bytes=112

Combine input records=0

Combine output records=0

Reduce input groups=169859

Reduce shuffle bytes=14465214

Reduce input records=169859

Reduce output records=169859

Spilled Records=339718

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=451

CPU time spent (ms)=8690

Physical memory (bytes) snapshot=300904448

Virtual memory (bytes) snapshot=1681092608

Total committed heap usage (bytes)=150654976

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=61084194

File Output Format Counters

Bytes Written=12756677

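After it finishes, check whether a 20161109 directory was created under /cleaned in HDFS. As shown in the figure below, it was, and it contains the result file part-r-00000. Opening that file shows the cleaned records, and the cleaning looks correct.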

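Although /cleaned now contains data, a query on the Hive table hmbbs returns nothing, as shown below. Why? hmbbs is a partitioned table, so Hive reads data only from partitions registered in the metastore. Our MapReduce job created a date-named directory under /cleaned in HDFS, but nothing told Hive about it, so Hive does not know this partition exists.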

[root@itcast03 ~]#cd /itcast/apache-hive-0.13.0-bin/bin

[root@itcast03 bin]# ./hive

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/12 21:51:12 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

hive> select * from hmbbs;

OK

Time taken: 2.038 seconds

hive>

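So how do we tell Hive? From the command line. Hive can run HiveQL statements without entering the interactive Hive shell, using hive -e. As an example, the command below lists the tables even though we ran it from the ordinary shell. Run it from Hive's bin directory, or from any directory if Hive's bin directory is on your PATH.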

[root@itcast03 bin]# hive -e "show tables;";

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/12 22:02:36 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

hmbbs

Time taken: 0.762 seconds, Fetched: 1 row(s)

[root@itcast03 bin]#

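Now let's run Hive statements from our script to add a concrete partition to hmbbs. To make troubleshooting easier, we test the script one command at a time; once every command works, we can run them all together. For now, comment out the first command with "#" and add the second command, which registers the cleaned data as a partition of hmbbs. The new command is the last line below.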

[root@itcast03 ~]#vim daily.sh

CURRENT=`date -d "3 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

Run daily.sh again with ./daily.sh, as shown below. I won't paste the output because it takes up too much space.

[root@itcast03 ~]# ./daily.sh

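Now query the first 10 rows of hmbbs. I am running hive from /root here because I added Hive's bin directory to my PATH. As shown below, the first 10 rows come back; the last column is the partition value logdate.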

[root@itcast03 ~]#hive -e "select * from hmbbs limit 10";

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/12 23:26:24 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

110.52.250.126 20130530173820 data/cache/style_1_widthauto.css?y7a 20161109

110.52.250.126 20130530173820 source/plugin/wsh_wx/img/wsh_zk.css 20161109

110.52.250.126 20130530173820 data/cache/style_1_forum_index.css?y7a 20161109

110.52.250.126 20130530173820 source/plugin/wsh_wx/img/wx_jqr.gif 20161109

27.19.74.143 20130530173820 data/attachment/common/c8/common_2_verify_icon.png 20161109

27.19.74.143 20130530173820 data/cache/common_smilies_var.js?y7a 20161109

8.35.201.165 20130530173822 data/attachment/common/c5/common_13_usergroup_icon.jpg 20161109

220.181.89.156 20130530173820 thread-24727-1-1.html 20161109

211.97.15.179 20130530173822 data/cache/style_1_forum_index.css?y7a 20161109

211.97.15.179 20130530173822 data/cache/style_1_widthauto.css?y7a 20161109

Time taken: 1.101 seconds, Fetched: 10 row(s)

[root@itcast03 ~]#

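Why can we query the data now? Because we just added a partition to the metastore, as shown in the figure below. When a Hive query runs, Hive first looks up the HDFS location of each of the table's partitions in the metastore, and then reads the data from the corresponding partition directories.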
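The lookup order can be illustrated with a toy Java model. It is not Hive's API. Two maps stand in for the real components:

- a map from logdate to directory stands in for the metastore's partition entries;
- a map from directory to lines stands in for HDFS.

A query only reads directories that are registered as partitions. That is why the data under /cleaned/20161109 stayed invisible until the alter table ... add partition statement ran.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model only: not Hive's API, just the lookup order described above.
class ToyPartitionedTable {
    // logdate -> directory (stands in for the partition entries in the metastore)
    private final Map<String, String> partitions = new TreeMap<>();
    // directory -> lines (stands in for HDFS)
    private final Map<String, List<String>> fileSystem;

    ToyPartitionedTable(Map<String, List<String>> fileSystem) {
        this.fileSystem = fileSystem;
    }

    // Analogue of: alter table hmbbs add partition (logdate=...) location '...'
    void addPartition(String logdate, String location) {
        partitions.put(logdate, location);
    }

    // Analogue of: select * from hmbbs
    // Only registered directories are read; the partition value becomes the last column.
    List<String> selectAll() {
        List<String> rows = new ArrayList<>();
        for (Map.Entry<String, String> p : partitions.entrySet()) {
            for (String line : fileSystem.getOrDefault(p.getValue(), List.of())) {
                rows.add(line + "\t" + p.getKey());
            }
        }
        return rows;
    }

    public static void main(String[] args) {
        Map<String, List<String>> hdfs = Map.of(
                "/cleaned/20161109", List.of("27.19.74.143\t20130530173820\tforum.php"));
        ToyPartitionedTable hmbbs = new ToyPartitionedTable(hdfs);
        System.out.println(hmbbs.selectAll().size()); // 0: the directory exists but is not a partition yet
        hmbbs.addPartition("20161109", "/cleaned/20161109");
        System.out.println(hmbbs.selectAll().size()); // 1
    }
}
```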

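Now for the third script command, which computes the site's PV (the total number of page views). The new command is the last line of the script below; the earlier commands are commented out.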

[root@itcast03 ~]#vim daily.sh

CURRENT=`date -d "3 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

/itcast/apache-hive-0.13.0-bin/bin/hive -e "select count(*) from hmbbs where logdate=$CURRENT"

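With the command in place, run daily.sh. Hive automatically launches a MapReduce job to do the counting. The result, 169859, equals the number of records the cleaning job wrote (Reduce output records).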

[root@itcast03 ~]#./daily.sh

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/12 23:49:24 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0005, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0005/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0005

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-11-12 23:49:38,485 Stage-1 map = 0%, reduce = 0%

2016-11-12 23:49:44,731 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.7 sec

2016-11-12 23:49:52,095 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.9 sec

MapReduce Total cumulative CPU time: 2 seconds 900 msec

Ended Job = job_1478920720232_0005

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 2.9 sec HDFS Read: 12756871 HDFS Write: 7 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 900 msec

OK

169859

Time taken: 24.464 seconds, Fetched: 1 row(s)

[root@itcast03 ~]#
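Conceptually, `count(*)` over one logdate partition is just the number of cleaned records for that day. The sketch below reproduces the count locally with standard shell tools, so the metric can be sanity-checked on a small file. It assumes the cleaned files hold one tab-separated `ip`, `time`, `url` record per line. The sample rows are illustrative (two IPs are borrowed from the log lines quoted at the start), and `pv` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Local sketch of the PV metric: PV = number of records (page views) in one
# day's cleaned data. Assumes tab-separated ip, time, url lines; rows are illustrative.
set -euo pipefail

SAMPLE=$(mktemp)
trap 'rm -f "$SAMPLE"' EXIT
printf '%s\t%s\t%s\n' \
  '27.19.74.143'   20130530173820 'static/image/common/faq.gif' \
  '110.52.250.126' 20130530173820 'data/cache/style_1_widthauto.css?y7a' \
  '27.19.74.143'   20130530173821 'forum.php' \
  > "$SAMPLE"

# Rough equivalent of: select count(*) from hmbbs where logdate=...
pv() {
  wc -l < "$1" | tr -d ' '
}

echo "PV: $(pv "$SAMPLE")"
```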

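The query above only printed the PV value and did not save it. Now we store the result in a table, again by editing daily.sh. The new command is the uncommented last line below. Note that the clock has now passed midnight into November 13 (it was November 12 a moment ago), so "3 days ago" now means 20161110 rather than 20161109. To keep working with the 20161109 data, we change CURRENT to 4 days ago.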

[root@itcast03 ~]#vim daily.sh

CURRENT=`date -d "4 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table pv_$CURRENT row format delimited fields terminated by '\t' as select count(*) from hmbbs where logdate=$CURRENT"
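Because `date -d "4 day ago"` moves with the clock, the script has to be edited each time the day rolls over. One possible variation lets the date be passed on the command line. It is a hypothetical change, not the author's daily.sh: `pick_date` is an illustrative name, and GNU `date` is assumed, as in the original script.

```shell
#!/usr/bin/env bash
# Hypothetical variation of the CURRENT line in daily.sh: use the first
# argument as the log date if given, otherwise fall back to "4 day ago".
# Assumes GNU date (the -d relative-date syntax is a GNU extension).
pick_date() {
  if [[ $# -ge 1 && -n "$1" ]]; then
    echo "$1"
  else
    date -d "4 day ago" +%Y%m%d
  fi
}

CURRENT=$(pick_date "${1:-}")
echo "processing logdate=$CURRENT"

# GNU date can also compute the offset from a fixed day, which is handy for
# checking what "4 day ago" resolves to on a given date:
date -d "2016-11-13 4 day ago" +%Y%m%d   # 20161109
```

With this change, `./daily.sh 20161109` processes that day explicitly, and `./daily.sh` with no argument keeps the original "4 days ago" behavior.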

We run the daily.sh script:

[root@itcast03 ~]# ./daily.sh

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:25:36 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0013, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0013/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0013

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-11-13 00:25:50,065 Stage-1 map = 0%, reduce = 0%

2016-11-13 00:25:55,364 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.31 sec

2016-11-13 00:26:01,654 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 2.35 sec

MapReduce Total cumulative CPU time: 2 seconds 350 msec

Ended Job = job_1478920720232_0013

Moving data to: hdfs://ns1/user/hive/warehouse/pv_20161109

Table default.pv_20161109 stats: [numFiles=1, numRows=1, totalSize=7, rawDataSize=6]

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 2.35 sec HDFS Read: 12756871 HDFS Write: 82 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 350 msec

OK

Time taken: 22.356 seconds

After the script finishes, we list the Hive tables. A new table, pv_20161109, has appeared.

[root@itcast03 ~]# hive -e "show tables;";

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:26:30 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

hmbbs

pv_20161109

Time taken: 0.661 seconds, Fetched: 3 row(s)

Now we query the pv_20161109 table. It holds our PV value: 169859.

[root@itcast03 ~]# hive -e "select * from pv_20161109";

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:27:11 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

169859

Time taken: 0.999 seconds, Fetched: 1 row(s)

[root@itcast03 ~]#

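Next we find the 20 visitors (by IP) with the most page views, our "VIP customers". Again we add a shell command to daily.sh. In SQL, when you use GROUP BY, the SELECT list can normally contain only the grouping columns and aggregate functions such as count. Constants are an exception: any constant value may appear in the SELECT list. That is why we can put the variable $CURRENT into the SELECT clause, because the shell expands it into a literal date before Hive sees the query. The clause having hits>20 additionally drops every IP with 20 or fewer hits.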

[root@itcast03 ~]# vim daily.sh

CURRENT=`date -d "4 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table pv_$CURRENT row format delimited fields terminated by '\t' as select count(*) from hmbbs where logdate=$CURRENT"

/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table vip_$CURRENT row format delimited fields terminated by '\t' as select $CURRENT,ip,count(*) as hits from hmbbs where logdate=$CURRENT group by ip having hits>20 order by hits desc limit 20"
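The query's steps map onto ordinary text tools: `group by ip` with `count(*)` becomes an awk counter, `having hits>20` becomes a filter, and `order by hits desc limit 20` becomes `sort` plus `head`. The sketch below illustrates this on generated data. It assumes tab-separated `ip`, `time`, `url` lines. The IPs and counts are made up, and `vip` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Local sketch of the VIP query: per-IP hit counts for one day, keep IPs with
# more than MIN_HITS hits, sort by hits descending, take the top N.
# Assumes tab-separated ip, time, url lines; the generated data is made up.
set -euo pipefail

CURRENT=20161109
MIN_HITS=20   # mirrors "having hits>20"
TOP_N=20      # mirrors "limit 20"

SAMPLE=$(mktemp)
trap 'rm -f "$SAMPLE"' EXIT
# 30 hits from 10.0.0.1, 25 from 10.0.0.2, 5 from 10.0.0.3
for _ in $(seq 30); do printf '10.0.0.1\t20130530173820\tforum.php\n'; done >  "$SAMPLE"
for _ in $(seq 25); do printf '10.0.0.2\t20130530173820\tforum.php\n'; done >> "$SAMPLE"
for _ in $(seq 5);  do printf '10.0.0.3\t20130530173820\tforum.php\n'; done >> "$SAMPLE"

vip() {
  # Count hits per IP, keep those above the threshold, and print the date as a
  # constant first column, like "select $CURRENT, ip, count(*) as hits".
  awk -F'\t' -v day="$CURRENT" -v min="$MIN_HITS" '
    { hits[$1]++ }
    END { for (ip in hits) if (hits[ip] > min) printf "%s\t%s\t%d\n", day, ip, hits[ip] }
  ' "$1" | sort -t$'\t' -k3,3nr | head -n "$TOP_N"
}

vip "$SAMPLE"
```

Only 10.0.0.1 and 10.0.0.2 survive the threshold, which matches how the vip_20161109 table below contains only IPs with hundreds or thousands of hits.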

Now we run the script:

[root@itcast03 ~]# ./daily.sh

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:53:22 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

Total jobs = 2

Launching Job 1 out of 2

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0014, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0014/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0014

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-11-13 00:53:37,402 Stage-1 map = 0%, reduce = 0%

2016-11-13 00:53:43,755 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.32 sec

2016-11-13 00:53:51,120 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.97 sec

MapReduce Total cumulative CPU time: 4 seconds 970 msec

Ended Job = job_1478920720232_0014

Launching Job 2 out of 2

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0015, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0015/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0015

Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1

2016-11-13 00:53:59,723 Stage-2 map = 0%, reduce = 0%

2016-11-13 00:54:06,019 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.34 sec

2016-11-13 00:54:12,282 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 2.5 sec

MapReduce Total cumulative CPU time: 2 seconds 500 msec

Ended Job = job_1478920720232_0015

Moving data to: hdfs://ns1/user/hive/warehouse/vip_20161109

Table default.vip_20161109 stats: [numFiles=1, numRows=20, totalSize=553, rawDataSize=533]

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 4.97 sec HDFS Read: 12756871 HDFS Write: 60477 SUCCESS

Job 1: Map: 1 Reduce: 1 Cumulative CPU: 2.5 sec HDFS Read: 60829 HDFS Write: 630 SUCCESS

Total MapReduce CPU Time Spent: 7 seconds 470 msec

OK

Time taken: 47.862 seconds

After the script finishes, we list all Hive tables again. There is now a vip_20161109 table.

[root@itcast03 ~]# hive -e "show tables;";

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:54:31 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

hmbbs

pv_20161109

vip_20161109

Time taken: 0.582 seconds, Fetched: 4 row(s)

Now let's look at the vip table. Its columns are the date, the IP, and the hit count.

[root@itcast03 ~]# hive -e "select * from vip_20161109";

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 00:55:09 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

20161109 61.50.141.7 4855

20161109 222.133.189.179 3942

20161109 60.10.5.65 1889

20161109 220.181.89.156 1877

20161109 123.147.245.79 1571

20161109 61.135.249.210 1378

20161109 49.72.74.77 1160

20161109 180.173.113.181 969

20161109 122.70.237.247 805

20161109 125.45.155.27 735

20161109 173.199.114.195 672

20161109 221.221.153.8 632

20161109 119.255.57.50 575

20161109 139.227.126.111 575

20161109 157.56.93.85 561

20161109 222.141.54.75 533

20161109 58.63.138.37 520

20161109 123.126.50.182 512

20161109 61.135.249.206 508

20161109 222.36.188.206 503

Time taken: 1.056 seconds, Fetched: 20 row(s)

[root@itcast03 ~]#

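Next we compute UV. Each IP counts only once per day, no matter how many pages it views. The log has no cookie field, so this project measures UV as the number of distinct IPs.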

[root@itcast03 ~]# vim daily.sh

CURRENT=`date -d "4 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table pv_$CURRENT row format delimited fields terminated by '\t' as select count(*) from hmbbs where logdate=$CURRENT"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table vip_$CURRENT row format delimited fields terminated by '\t' as select $CURRENT,ip,count(*) as hits from hmbbs where logdate=$CURRENT group by ip having hits>20 order by hits desc limit 20"

/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table uv_$CURRENT row format delimited fields terminated by '\t' as select count(distinct ip) from hmbbs where logdate=$CURRENT"
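The difference between PV and this IP-based UV is easy to see on a tiny file: repeated visits from one IP add to PV but not to UV. The sketch below assumes tab-separated `ip`, `time`, `url` lines with illustrative rows. `uv` is a hypothetical helper name, and `cut | sort -u | wc -l` plays the role of `count(distinct ip)`.

```shell
#!/usr/bin/env bash
# Local sketch of UV as this project defines it: count distinct IPs in one
# day's cleaned data. Assumes tab-separated ip, time, url; rows are illustrative.
set -euo pipefail

SAMPLE=$(mktemp)
trap 'rm -f "$SAMPLE"' EXIT
printf '%s\t%s\t%s\n' \
  '27.19.74.143'   20130530173820 'static/image/common/faq.gif' \
  '110.52.250.126' 20130530173820 'data/cache/style_1_widthauto.css?y7a' \
  '27.19.74.143'   20130530173821 'forum.php' \
  > "$SAMPLE"

# Rough equivalent of: select count(distinct ip) from hmbbs where logdate=...
uv() {
  cut -f1 "$1" | sort -u | wc -l | tr -d ' '
}

# Three records (PV) but only two distinct IPs (UV).
echo "PV: $(wc -l < "$SAMPLE" | tr -d ' ')  UV: $(uv "$SAMPLE")"
```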

Now we run daily.sh:

[root@itcast03 ~]# ./daily.sh

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 01:08:59 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0016, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0016/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0016

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-11-13 01:09:12,694 Stage-1 map = 0%, reduce = 0%

2016-11-13 01:09:19,008 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.41 sec

2016-11-13 01:09:25,294 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 4.9 sec

MapReduce Total cumulative CPU time: 4 seconds 900 msec

Ended Job = job_1478920720232_0016

Moving data to: hdfs://ns1/user/hive/warehouse/uv_20161109

Table default.uv_20161109 stats: [numFiles=1, numRows=1, totalSize=6, rawDataSize=5]

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 4.9 sec HDFS Read: 12756871 HDFS Write: 81 SUCCESS

Total MapReduce CPU Time Spent: 4 seconds 900 msec

OK

Time taken: 24.247 seconds

After the script finishes, we list the Hive tables. There is indeed a new uv_20161109 table.

[root@itcast03 ~]# hive -e "show tables";

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 01:09:44 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

hmbbs

pv_20161109

uv_20161109

vip_20161109

Time taken: 0.588 seconds, Fetched: 5 row(s)

Then we query the uv_20161109 table. The UV value is 10413.

[root@itcast03 ~]# hive -e "select * from uv_20161109";

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 01:10:10 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

OK

10413

Time taken: 0.956 seconds, Fetched: 1 row(s)

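Next we count the users who registered that day, again by adding a command to daily.sh. Here I only run the query for the daily registration count and do not write the result to a table. The query counts the log records whose URL contains member.php?mod=register.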

[root@itcast03 ~]# vim daily.sh

CURRENT=`date -d "4 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table pv_$CURRENT row format delimited fields terminated by '\t' as select count(*) from hmbbs where logdate=$CURRENT"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table vip_$CURRENT row format delimited fields terminated by '\t' as select $CURRENT,ip,count(*) as hits from hmbbs where logdate=$CURRENT group by ip having hits>20 order by hits desc limit 20"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table uv_$CURRENT row format delimited fields terminated by '\t' as select count(distinct ip) from hmbbs where logdate=$CURRENT"

/itcast/apache-hive-0.13.0-bin/bin/hive -e "select count(*) from hmbbs where logdate=$CURRENT and instr(url,'member.php?mod=register')>0"
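Hive's `instr(url, '...')` returns the 1-based position of the substring, or 0 when it is absent, so `> 0` means "contains". awk's `index()` follows the same convention, which gives a quick local check. The sketch assumes tab-separated `ip`, `time`, `url` lines. The rows are made up, and `registrations` is a hypothetical helper name. Like the Hive query, it counts matching requests, not distinct users.

```shell
#!/usr/bin/env bash
# Local sketch of the registration count: rows whose url contains the literal
# substring member.php?mod=register, like instr(url, '...') > 0 in Hive.
# index() does a plain substring search, so "?" is not treated as a wildcard.
set -euo pipefail

SAMPLE=$(mktemp)
trap 'rm -f "$SAMPLE"' EXIT
printf '%s\t%s\t%s\n' \
  '27.19.74.143'   20130530173820 'member.php?mod=register' \
  '110.52.250.126' 20130530173821 'member.php?mod=register&inajax=1' \
  '27.19.74.143'   20130530173822 'forum.php' \
  > "$SAMPLE"

registrations() {
  awk -F'\t' 'index($3, "member.php?mod=register") > 0 { n++ } END { print n + 0 }' "$1"
}

echo "register requests: $(registrations "$SAMPLE")"
```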

Now we run the daily.sh script. The result is 28 registrations.

[root@itcast03 ~]# ./daily.sh

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.min.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.min.split.size.per.node is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.node

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.input.dir.recursive is deprecated. Instead, use mapreduce.input.fileinputformat.input.dir.recursive

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.min.split.size.per.rack is deprecated. Instead, use mapreduce.input.fileinputformat.split.minsize.per.rack

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.max.split.size is deprecated. Instead, use mapreduce.input.fileinputformat.split.maxsize

16/11/13 01:22:28 INFO Configuration.deprecation: mapred.committer.job.setup.cleanup.needed is deprecated. Instead, use mapreduce.job.committer.setup.cleanup.needed

Logging initialized using configuration in jar:file:/itcast/apache-hive-0.13.0-bin/lib/hive-common-0.13.0.jar!/hive-log4j.properties

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1478920720232_0017, Tracking URL = http://itcast03:8088/proxy/application_1478920720232_0017/

Kill Command = /itcast/hadoop-2.2.0/bin/hadoop job -kill job_1478920720232_0017

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2016-11-13 01:22:41,748 Stage-1 map = 0%, reduce = 0%

2016-11-13 01:22:48,155 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.23 sec

2016-11-13 01:22:54,417 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.41 sec

MapReduce Total cumulative CPU time: 3 seconds 410 msec

Ended Job = job_1478920720232_0017

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 3.41 sec HDFS Read: 12756871 HDFS Write: 3 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 410 msec

OK

28

Time taken: 22.633 seconds, Fetched: 1 row(s)

[root@itcast03 ~]#

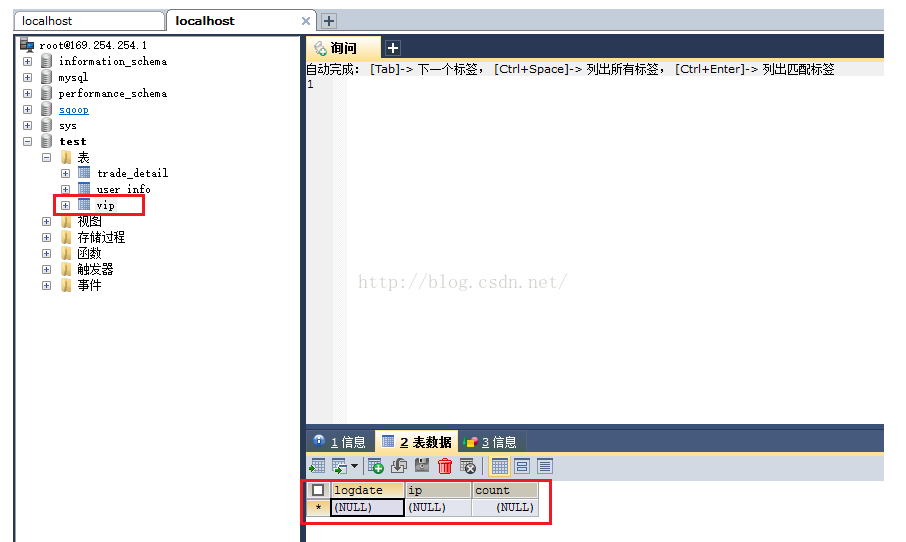

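Finally, we export the top-20 visitors we just computed into a database table. First we create a vip table in the relational database (MySQL here), as shown in the figure below.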

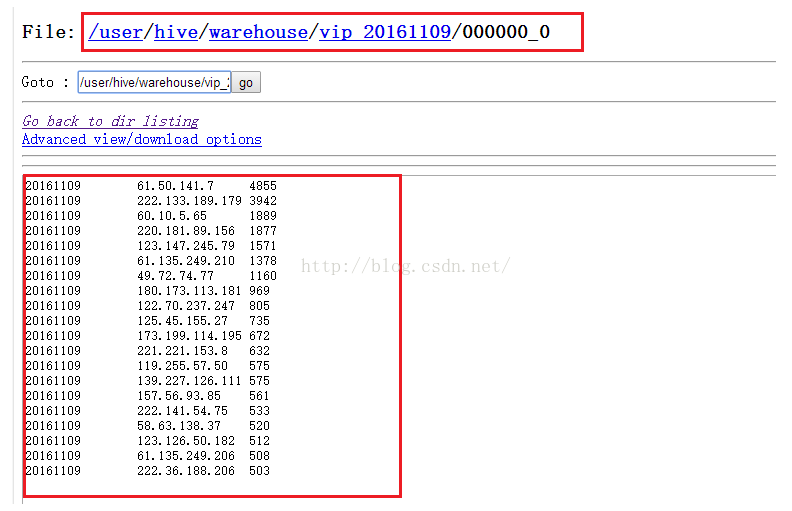

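Then we export the VIP table's data from HDFS. The figure shows where the VIP table lives on HDFS. The CTAS job log above also reports the path: hdfs://ns1/user/hive/warehouse/vip_20161109.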

We again put the Sqoop shell command in daily.sh. Note that the path /user/hive/warehouse/vip_$CURRENT in the command must be wrapped in double quotes, not single quotes. Otherwise $CURRENT is not expanded.

[root@itcast03 ~]# vim daily.sh

CURRENT=`date -d "4 day ago" +%Y%m%d`

#/itcast/hadoop-2.2.0/bin/hadoop jar /root/Cleaner.jar /flume/$CURRENT /cleaned/$CURRENT

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "alter table hmbbs add partition (logdate=$CURRENT) location '/cleaned/$CURRENT'"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table pv_$CURRENT row format delimited fields terminated by '\t' as select count(*) from hmbbs where logdate=$CURRENT"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table vip_$CURRENT row format delimited fields terminated by '\t' as select $CURRENT,ip,count(*) as hits from hmbbs where logdate=$CURRENT group by ip having hits>20 order by hits desc limit 20"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "create table uv_$CURRENT row format delimited fields terminated by '\t' as select count(distinct ip) from hmbbs where logdate=$CURRENT"

#/itcast/apache-hive-0.13.0-bin/bin/hive -e "select count(*) from hmbbs where logdate=$CURRENT and instr(url,'member.php?mod=register')>0"

/itcast/sqoop-1.4.6/bin/sqoop export --connect jdbc:mysql://169.254.254.1:3306/test --username root --password root --export-dir "/user/hive/warehouse/vip_$CURRENT" --table vip --fields-terminated-by '\t'
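The quoting rule is easy to check in isolation. Double quotes expand `$CURRENT`, and single quotes keep it literal. The third line explains why the earlier `hive -e "... location '/cleaned/$CURRENT'"` commands still worked: single quotes *inside* a double-quoted string are just ordinary characters, so the variable is still expanded.

```shell
#!/usr/bin/env bash
# Shell quoting check: double quotes expand $CURRENT, single quotes do not.
CURRENT=20161109

echo "/user/hive/warehouse/vip_$CURRENT"   # /user/hive/warehouse/vip_20161109
echo '/user/hive/warehouse/vip_$CURRENT'   # /user/hive/warehouse/vip_$CURRENT

# Single quotes nested inside double quotes are literal characters,
# so the variable is still expanded:
echo "location '/cleaned/$CURRENT'"        # location '/cleaned/20161109'
```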

Now we run the daily.sh script. The export succeeds:

[root@itcast03 ~]# ./daily.sh

Warning: /itcast/sqoop-1.4.6/../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

Warning: /itcast/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /itcast/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /itcast/sqoop-1.4.6/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

16/11/13 01:47:25 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

16/11/13 01:47:25 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

16/11/13 01:47:25 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

16/11/13 01:47:25 INFO tool.CodeGenTool: Beginning code generation

Sun Nov 13 01:47:25 CST 2016 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

16/11/13 01:47:25 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `vip` AS t LIMIT 1

16/11/13 01:47:26 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `vip` AS t LIMIT 1

16/11/13 01:47:26 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /itcast/hadoop-2.2.0

Note: /tmp/sqoop-root/compile/3307c34445798be97edc02e8d0d14b08/vip.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

16/11/13 01:47:27 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/3307c34445798be97edc02e8d0d14b08/vip.jar

16/11/13 01:47:27 INFO mapreduce.ExportJobBase: Beginning export of vip

16/11/13 01:47:27 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

16/11/13 01:47:28 INFO Configuration.deprecation: mapred.reduce.tasks.speculative.execution is deprecated. Instead, use mapreduce.reduce.speculative

16/11/13 01:47:28 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

16/11/13 01:47:28 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

16/11/13 01:47:28 INFO client.RMProxy: Connecting to ResourceManager at itcast03/169.254.254.30:8032

16/11/13 01:47:31 INFO input.FileInputFormat: Total input paths to process : 1

16/11/13 01:47:31 INFO input.FileInputFormat: Total input paths to process : 1

16/11/13 01:47:31 INFO mapreduce.JobSubmitter: number of splits:4

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.job.classpath.files is deprecated. Instead, use mapreduce.job.classpath.files

16/11/13 01:47:31 INFO Configuration.deprecation: user.name is deprecated. Instead, use mapreduce.job.user.name

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.cache.files.filesizes is deprecated. Instead, use mapreduce.job.cache.files.filesizes

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.cache.files is deprecated. Instead, use mapreduce.job.cache.files

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.map.tasks.speculative.execution is deprecated. Instead, use mapreduce.map.speculative

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.reduce.tasks is deprecated. Instead, use mapreduce.job.reduces

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.mapoutput.value.class is deprecated. Instead, use mapreduce.map.output.value.class

16/11/13 01:47:31 INFO Configuration.deprecation: mapreduce.map.class is deprecated. Instead, use mapreduce.job.map.class

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.job.name is deprecated. Instead, use mapreduce.job.name

16/11/13 01:47:31 INFO Configuration.deprecation: mapreduce.inputformat.class is deprecated. Instead, use mapreduce.job.inputformat.class

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.input.dir is deprecated. Instead, use mapreduce.input.fileinputformat.inputdir

16/11/13 01:47:31 INFO Configuration.deprecation: mapreduce.outputformat.class is deprecated. Instead, use mapreduce.job.outputformat.class

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.cache.files.timestamps is deprecated. Instead, use mapreduce.job.cache.files.timestamps

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.mapoutput.key.class is deprecated. Instead, use mapreduce.map.output.key.class

16/11/13 01:47:31 INFO Configuration.deprecation: mapred.working.dir is deprecated. Instead, use mapreduce.job.working.dir

16/11/13 01:47:31 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1478920720232_0019

16/11/13 01:47:32 INFO impl.YarnClientImpl: Submitted application application_1478920720232_0019 to ResourceManager at itcast03/169.254.254.30:8032

16/11/13 01:47:32 INFO mapreduce.Job: The url to track the job: http://itcast03:8088/proxy/application_1478920720232_0019/

16/11/13 01:47:32 INFO mapreduce.Job: Running job: job_1478920720232_0019

16/11/13 01:47:38 INFO mapreduce.Job: Job job_1478920720232_0019 running in uber mode : false

16/11/13 01:47:38 INFO mapreduce.Job: map 0% reduce 0%

16/11/13 01:47:52 INFO mapreduce.Job: map 100% reduce 0%

16/11/13 01:47:54 INFO mapreduce.Job: Job job_1478920720232_0019 completed successfully

16/11/13 01:47:55 INFO mapreduce.Job: Counters: 27

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=413456

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=2070

HDFS: Number of bytes written=0

HDFS: Number of read operations=19

HDFS: Number of large read operations=0

HDFS: Number of write operations=0

Job Counters

Launched map tasks=4

Rack-local map tasks=4

Total time spent by all maps in occupied slots (ms)=48728

Total time spent by all reduces in occupied slots (ms)=0

Map-Reduce Framework

Map input records=20

Map output records=20

Input split bytes=601

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=234

CPU time spent (ms)=3470

Physical memory (bytes) snapshot=371515392

Virtual memory (bytes) snapshot=3365302272

Total committed heap usage (bytes)=62390272

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=0

16/11/13 01:47:55 INFO mapreduce.ExportJobBase: Transferred 2.0215 KB in 26.7272 seconds (77.4492 bytes/sec)

16/11/13 01:47:55 INFO mapreduce.ExportJobBase: Exported 20 records

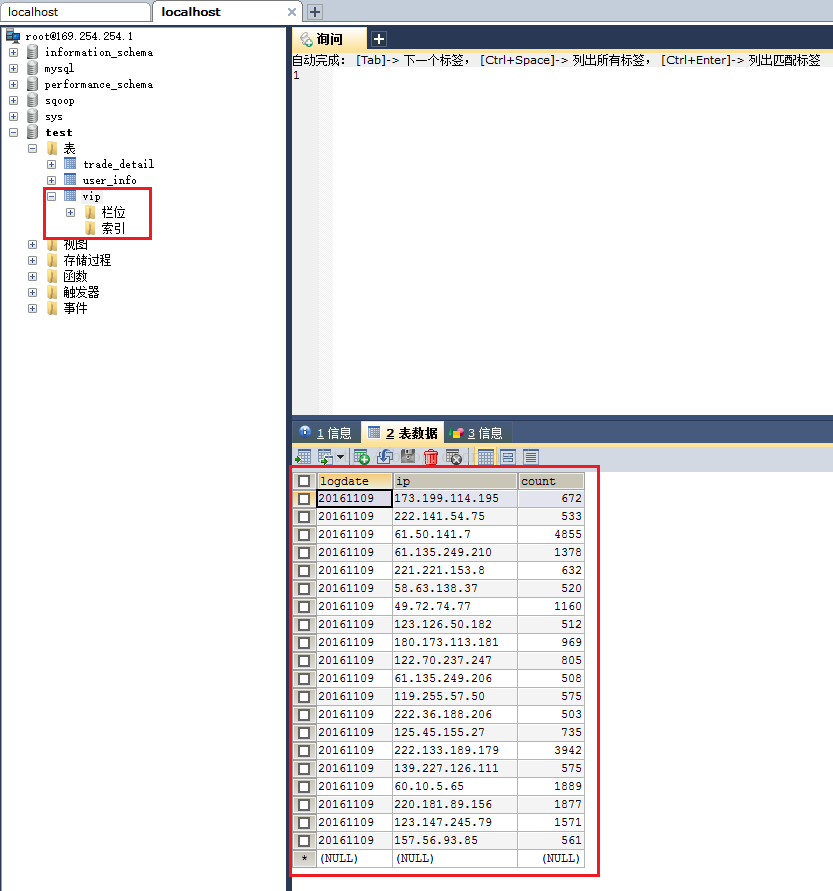

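Once the export finishes successfully, go to the vip table in the relational database (MySQL) and check whether the data has arrived. As shown in the figure, the data has been imported successfully!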
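Besides looking at the table, the log above already contains the two numbers worth comparing: the MapReduce counter `Map input records=20` and Sqoop's final `Exported 20 records`. Below is a minimal shell sketch that pulls both numbers out of a saved log and checks that they match. It assumes the console output of `sqoop export` was redirected to a file (for example with `> export.log 2>&1`). The file name and the helper function names are hypothetical.

```shell
#!/bin/sh
# Sketch: confirm from a saved Sqoop export log that every input record was exported.
# Assumption: the output of `sqoop export` was saved to a file (name is hypothetical).

# Print N from the line "... INFO mapreduce.ExportJobBase: Exported N records".
exported_count() {
  sed -n 's/.*ExportJobBase: Exported \([0-9][0-9]*\) records.*/\1/p' "$1" | tail -n 1
}

# Print N from the MapReduce counter line "Map input records=N".
map_input_count() {
  sed -n 's/.*Map input records=\([0-9][0-9]*\).*/\1/p' "$1" | tail -n 1
}

# Return 0 only if both counts were found and they are equal.
check_export() {
  exported=$(exported_count "$1")
  input=$(map_input_count "$1")
  if [ -n "$exported" ] && [ "$exported" = "$input" ]; then
    echo "OK: exported $exported of $input records"
  else
    echo "MISMATCH: exported='$exported' input='$input'" >&2
    return 1
  fi
}
```

Run against the log above, `check_export export.log` would print `OK: exported 20 of 20 records`. A non-zero exit status makes this easy to use as a gate in a script before anyone looks at the MySQL table.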

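In real work, run these steps from scripts as much as possible, and set a schedule so the scripts run automatically at a fixed interval. That way no manual operation is needed. And with that, we have finished all the lessons in the Hadoop series together!!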
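Below is a minimal POSIX shell sketch of such a script. It runs the project's steps (MapReduce cleaning, hive analysis, sqoop export) in order, logs each step with a timestamp, and stops at the first failure, so a half-finished run never reaches MySQL. The script name, the log path, and the `step_*`/`run_step`/`run_pipeline` functions are hypothetical. Each step body is only a placeholder `echo` that should be replaced with the real command used earlier in this lesson.

```shell
#!/bin/sh
# Hypothetical daily wrapper (e.g. saved as daily_pipeline.sh).
# Runs the pipeline steps in order and stops at the first failure.

LOG_FILE=${LOG_FILE:-/tmp/weblog_pipeline.log}   # hypothetical log location

# Placeholder steps: replace each echo with the real command from this lesson.
step_clean()   { echo "placeholder: MapReduce cleaning job"; }
step_analyze() { echo "placeholder: hive multi-dimensional analysis"; }
step_export()  { echo "placeholder: sqoop export to MySQL"; }

# Run one named step, append its output to the log, and return its status.
run_step() {
  name=$1
  shift
  echo "$(date '+%Y-%m-%d %H:%M:%S') START $name" >> "$LOG_FILE"
  if "$@" >> "$LOG_FILE" 2>&1; then
    echo "$(date '+%Y-%m-%d %H:%M:%S') DONE $name" >> "$LOG_FILE"
  else
    echo "$(date '+%Y-%m-%d %H:%M:%S') FAIL $name" >> "$LOG_FILE"
    return 1
  fi
}

# `&&` skips every later step as soon as one step fails.
run_pipeline() {
  run_step clean step_clean &&
    run_step analyze step_analyze &&
    run_step export step_export
}

run_pipeline
```

To run it automatically, for example every day at 01:30, you could add a crontab entry with `crontab -e` such as `30 1 * * * /bin/sh /path/to/daily_pipeline.sh` (the path is hypothetical). Chaining the steps with `&&` is the important design choice: if cleaning fails, the hive and sqoop steps are skipped instead of exporting stale or partial results.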
