1. First, configure JNI as described in the previous blog post, then build on the NDKTEST project set up there (my project here is named ffmpeg_test).

2. Download the FFmpeg .so files for Android. You can also compile them yourself; a build made by someone else can be downloaded here:

http://download.csdn.net/detail/zhjin8510/8539759

The download contains the header files and the .so files we need. The .so files are split into separate libraries: libavcodec.so, libavcodec-56.so, libavfilter.so…

Alternatively, you can download:

http://download.csdn.net/detail/huangyifei_1111/9025229

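This package provides a single libffmpeg.so but no header files. You still need to get the headers from the first link, or collect them from the FFmpeg source code.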

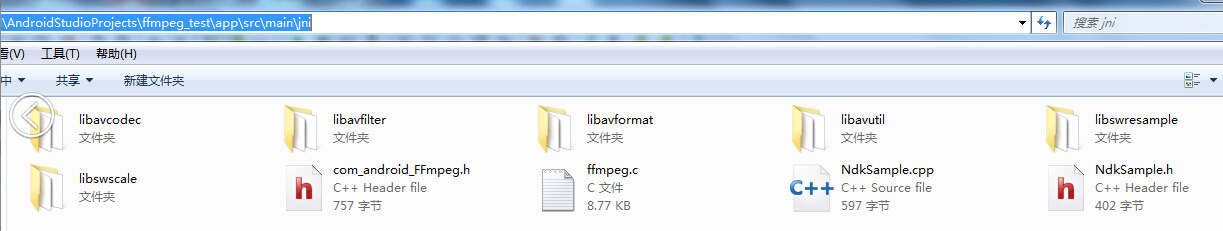

3. Extract the header files into the directory, as shown in the figure.

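4. Under the main directory, create a jniLibs folder. Inside jniLibs, create an armeabi-v7a folder and put the .so files there; these are the prebuilt .so files. (It seems this ABI-specific directory is required. Without it the NDK build still succeeds, but at run time the .so built from the jni code cannot be linked to the prebuilt .so files from the Java layer. In short, no matter which directory the prebuilt .so files are referenced from during the build, copies of them must be present in jniLibs/armeabi-v7a.)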

5. Add the native method declarations to the Activity in the Java layer:

```java
public native String GetFFmpegVersion();

public native int H264DecoderInit(int width, int height);

public native int H264DecoderRelease();

public native int H264Decode(byte[] in, int insize, byte[] out);
```

6. Following http://www.tuicool.com/articles/zMNf63v, create android_FFmpeg.c and android_FFmpeg.h in the jni directory.

android_FFmpeg.c:

```c
//
// Created by Administrator on 2016/1/13.
//

#include <math.h>
#include <stdio.h>
#include <android/log.h>

#include "libavformat/avformat.h"
#include "libavcodec/avcodec.h"
#include "libavcodec/version.h"
#include "libavutil/channel_layout.h"
#include "libavutil/common.h"
#include "libavutil/imgutils.h"
#include "libavutil/mathematics.h"
#include "libavutil/samplefmt.h"
#include "libavutil/avutil.h"

#include "android_FFmpeg.h"

#define LOG_TAG "H264Android.c"
#define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__)

#ifdef __cplusplus
extern "C" {
#endif

// Video
struct AVCodecContext *pAVCodecCtx = NULL;
struct AVCodec *pAVCodec;
struct AVPacket mAVPacket;
struct AVFrame *pAVFrame = NULL;

// Audio (declared but not used yet)
struct AVCodecContext *pAUCodecCtx = NULL;
struct AVCodec *pAUCodec;
struct AVPacket mAUPacket;
struct AVFrame *pAUFrame = NULL;

// Output size passed to H264DecoderInit
int iWidth = 0;
int iHeight = 0;

// YUV -> RGB coefficient tables
int *colortab = NULL;
int *u_b_tab = NULL;
int *u_g_tab = NULL;
int *v_g_tab = NULL;
int *v_r_tab = NULL;

// Clamp + RGB565 packing tables; after setup they accept indices -256..511
unsigned int *rgb_2_pix = NULL;
unsigned int *r_2_pix = NULL;
unsigned int *g_2_pix = NULL;
unsigned int *b_2_pix = NULL;

void DeleteYUVTab(void) {
    // av_freep() frees the buffer and sets the pointer to NULL,
    // so calling this more than once is safe
    av_freep(&colortab);
    av_freep(&rgb_2_pix);
    u_b_tab = u_g_tab = v_g_tab = v_r_tab = NULL;
    r_2_pix = g_2_pix = b_2_pix = NULL;
}

// Returns 0 on success, -1 if memory allocation fails
int CreateYUVTab_16(void) {
    int i;
    int u, v;

    DeleteYUVTab();  // free tables left over from a previous init

    colortab = (int *) av_malloc(4 * 256 * sizeof(int));
    rgb_2_pix = (unsigned int *) av_malloc(3 * 768 * sizeof(unsigned int));
    if (colortab == NULL || rgb_2_pix == NULL) {
        DeleteYUVTab();
        return -1;
    }

    u_b_tab = &colortab[0 * 256];
    u_g_tab = &colortab[1 * 256];
    v_g_tab = &colortab[2 * 256];
    v_r_tab = &colortab[3 * 256];

    for (i = 0; i < 256; i++) {
        u = v = (i - 128);
        u_b_tab[i] = (int) (1.772 * u);
        u_g_tab[i] = (int) (0.34414 * u);
        v_g_tab[i] = (int) (0.71414 * v);
        v_r_tab[i] = (int) (1.402 * v);
    }

    r_2_pix = &rgb_2_pix[0 * 768];
    g_2_pix = &rgb_2_pix[1 * 768];
    b_2_pix = &rgb_2_pix[2 * 768];

    // values below 0 clamp to 0
    for (i = 0; i < 256; i++) {
        r_2_pix[i] = 0;
        g_2_pix[i] = 0;
        b_2_pix[i] = 0;
    }
    // values 0..255 keep their top 5/6/5 bits, shifted into RGB565 position
    for (i = 0; i < 256; i++) {
        r_2_pix[i + 256] = (i & 0xF8) << 8;
        g_2_pix[i + 256] = (i & 0xFC) << 3;
        b_2_pix[i + 256] = (i) >> 3;
    }
    // values above 255 clamp to the maximum
    for (i = 0; i < 256; i++) {
        r_2_pix[i + 512] = 0xF8 << 8;
        g_2_pix[i + 512] = 0xFC << 3;
        b_2_pix[i + 512] = 0x1F;
    }

    // shift the pointers so that index 0 stands for the value 0
    r_2_pix += 256;
    g_2_pix += 256;
    b_2_pix += 256;
    return 0;
}

// Converts a 4:2:0 planar frame to RGB565, two pixels per 32-bit word.
// pdst1 must hold iWidth * iHeight pixels; dst_ystride is in pixels.
void DisplayYUV_16(unsigned int *pdst1, unsigned char *y, unsigned char *u,
                   unsigned char *v, int width, int height, int src_ystride,
                   int src_uvstride, int dst_ystride) {
    int i, j;
    int r, g, b, rgb;
    int yy, ub, ug, vg, vr;
    unsigned char *yoff;
    unsigned char *uoff;
    unsigned char *voff;
    unsigned int *pdst = pdst1;
    int width2 = width / 2;
    int height2 = height / 2;

    // decoded frame wider than the output: crop the same amount from each side
    if (width2 > iWidth / 2) {
        width2 = iWidth / 2;
        y += (width - iWidth) / 4 * 2;
        u += (width - iWidth) / 4;
        v += (width - iWidth) / 4;
    }
    // height2 counts pairs of rows, so the limit is iHeight / 2
    if (height2 > iHeight / 2)
        height2 = iHeight / 2;

    for (j = 0; j < height2; j++) {
        yoff = y + j * 2 * src_ystride;
        uoff = u + j * src_uvstride;
        voff = v + j * src_uvstride;
        for (i = 0; i < width2; i++) {
            // one U/V pair is shared by a 2x2 block of Y samples
            ub = u_b_tab[*(uoff + i)];
            ug = u_g_tab[*(uoff + i)];
            vg = v_g_tab[*(voff + i)];
            vr = v_r_tab[*(voff + i)];

            // even row, left pixel: low 16 bits
            yy = *(yoff + (i << 1));
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            rgb = r_2_pix[r] + g_2_pix[g] + b_2_pix[b];

            // even row, right pixel: high 16 bits
            yy = *(yoff + (i << 1) + 1);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            pdst[(j * dst_ystride + i)] = (rgb)
                    + ((r_2_pix[r] + g_2_pix[g] + b_2_pix[b]) << 16);

            // odd row, left pixel
            yy = *(yoff + (i << 1) + src_ystride);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            rgb = r_2_pix[r] + g_2_pix[g] + b_2_pix[b];

            // odd row, right pixel
            yy = *(yoff + (i << 1) + src_ystride + 1);
            b = yy + ub;
            g = yy - ug - vg;
            r = yy + vr;
            pdst[((2 * j + 1) * dst_ystride + i * 2) >> 1] = (rgb)
                    + ((r_2_pix[r] + g_2_pix[g] + b_2_pix[b]) << 16);
        }
    }
}

/*
 * Class:     nbicc.ffmpeg_test.MainActivity
 * Method:    H264DecoderInit
 * Signature: (II)I
 */
JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264DecoderInit(
        JNIEnv *env, jobject jobj, jint width, jint height) {
    int ret;

    iWidth = width;
    iHeight = height;

    // release a decoder left over from a previous init
    if (pAVCodecCtx != NULL)
        avcodec_free_context(&pAVCodecCtx);  // closes the codec, sets the pointer to NULL
    if (pAVFrame != NULL)
        av_frame_free(&pAVFrame);            // also sets pAVFrame to NULL

    // Register all formats and codecs (needed by FFmpeg versions of this era)
    av_register_all();
    LOGD("avcodec register success");

    pAVCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
    if (pAVCodec == NULL)
        return -1;

    // init AVCodecContext
    pAVCodecCtx = avcodec_alloc_context3(pAVCodec);
    if (pAVCodecCtx == NULL)
        return -1;

    /* we do not send complete frames
       (later FFmpeg versions rename these AV_CODEC_CAP_TRUNCATED / AV_CODEC_FLAG_TRUNCATED) */
    if (pAVCodec->capabilities & CODEC_CAP_TRUNCATED)
        pAVCodecCtx->flags |= CODEC_FLAG_TRUNCATED;

    /* open it; on failure return FFmpeg's error code */
    ret = avcodec_open2(pAVCodecCtx, pAVCodec, NULL);
    if (ret < 0) {
        avcodec_free_context(&pAVCodecCtx);
        return ret;
    }

    av_init_packet(&mAVPacket);

    pAVFrame = av_frame_alloc();
    if (pAVFrame == NULL)
        return -1;
    LOGD("avcodec context success");

    if (CreateYUVTab_16() < 0)
        return -1;
    LOGD("create yuv table success");
    return 1;
}

/*
 * Class:     nbicc.ffmpeg_test.MainActivity
 * Method:    H264DecoderRelease
 * Signature: ()I
 */
JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264DecoderRelease(
        JNIEnv *env, jobject jobj) {
    if (pAVCodecCtx != NULL)
        avcodec_free_context(&pAVCodecCtx);
    if (pAVFrame != NULL)
        av_frame_free(&pAVFrame);
    DeleteYUVTab();
    return 1;
}

/*
 * Class:     nbicc.ffmpeg_test.MainActivity
 * Method:    H264Decode
 * Signature: ([BI[B)I
 */
JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264Decode(JNIEnv *env,
        jobject thiz, jbyteArray in, jint inbuf_size, jbyteArray out) {
    int len = -1, got_picture = 0;
    jbyte *inbuf;
    jbyte *Picture;

    // H264DecoderInit must have succeeded first
    if (pAVCodecCtx == NULL || pAVFrame == NULL || rgb_2_pix == NULL)
        return -1;
    // in must hold inbuf_size bytes; out receives iWidth * iHeight RGB565 pixels
    if (inbuf_size < 0 || (*env)->GetArrayLength(env, in) < inbuf_size
            || (*env)->GetArrayLength(env, out) < iWidth * iHeight * 2)
        return -1;

    inbuf = (*env)->GetByteArrayElements(env, in, NULL);
    Picture = (*env)->GetByteArrayElements(env, out, NULL);
    if (inbuf == NULL || Picture == NULL) {
        if (inbuf != NULL)
            (*env)->ReleaseByteArrayElements(env, in, inbuf, JNI_ABORT);
        if (Picture != NULL)
            (*env)->ReleaseByteArrayElements(env, out, Picture, JNI_ABORT);
        return -1;
    }

    av_frame_unref(pAVFrame);
    mAVPacket.data = (uint8_t *) inbuf;
    mAVPacket.size = inbuf_size;
    LOGD("mAVPacket.size:%d", mAVPacket.size);

    len = avcodec_decode_video2(pAVCodecCtx, pAVFrame, &got_picture, &mAVPacket);
    LOGD("len:%d", len);

    if (len < 0) {
        LOGD("decode error, len=%d", len);
    } else if (got_picture > 0) {
        LOGD("GOT PICTURE");
        // Alternative: convert with libswscale (sws_getContext / sws_scale to
        // PIX_FMT_RGB565LE) instead of the lookup tables.
        // Assumes 4:2:0 planar output: data[0] = Y, data[1] = U, data[2] = V.
        DisplayYUV_16((unsigned int *) Picture, pAVFrame->data[0], pAVFrame->data[1],
                      pAVFrame->data[2], pAVCodecCtx->width, pAVCodecCtx->height,
                      pAVFrame->linesize[0], pAVFrame->linesize[1], iWidth);
    } else {
        LOGD("GOT PICTURE fail");
    }

    // in was only read: JNI_ABORT releases it without copying back
    (*env)->ReleaseByteArrayElements(env, in, inbuf, JNI_ABORT);
    // out was written: mode 0 copies the pixels back into the Java array
    (*env)->ReleaseByteArrayElements(env, out, Picture, 0);
    return len;
}

/*
 * Class:     nbicc.ffmpeg_test.MainActivity
 * Method:    GetFFmpegVersion
 * Signature: ()Ljava/lang/String;
 */
JNIEXPORT jstring JNICALL Java_nbicc_ffmpeg_1test_MainActivity_GetFFmpegVersion(
        JNIEnv *env, jobject jobj) {
    char buff[128] = {0};
    unsigned int aa = avcodec_version();  // packed libavcodec version number
    snprintf(buff, sizeof(buff), "version %u", aa);
    return (*env)->NewStringUTF(env, buff);
}

#ifdef __cplusplus
}
#endif
```

android_FFmpeg.h:

```c
//
// Created by Administrator on 2016/1/13.
//

#ifndef FFMPEG_TEST_COM_ANDROID_FFMPEG_H
#define FFMPEG_TEST_COM_ANDROID_FFMPEG_H

#include <jni.h>
#include <stdio.h>
#include <string.h>

JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264DecoderInit(JNIEnv *env, jobject jobj, jint width, jint height);

JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264DecoderRelease(JNIEnv *env, jobject jobj);

JNIEXPORT jint JNICALL Java_nbicc_ffmpeg_1test_MainActivity_H264Decode(JNIEnv *env, jobject thiz, jbyteArray in, jint inbuf_size, jbyteArray out);

JNIEXPORT jstring JNICALL Java_nbicc_ffmpeg_1test_MainActivity_GetFFmpegVersion(JNIEnv *env, jobject jobj);

#endif //FFMPEG_TEST_COM_ANDROID_FFMPEG_H
```
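The lookup tables built by CreateYUVTab_16 and read by DisplayYUV_16 encode essentially the JFIF (full-range BT.601) formulas R = Y + 1.402(V-128), G = Y - 0.34414(U-128) - 0.71414(V-128), B = Y + 1.772(U-128). Each result is then saturated to 0..255 and packed into RGB565. The sketch below does the same per-pixel arithmetic directly, without tables, and may help when checking the table version. The names clamp255, yuv_to_rgb565, pack_two_pixels and rgb565_frame_bytes are hypothetical helpers and are not part of the project. The sketch truncates each term the same way the `(int)` casts in CreateYUVTab_16 do, so it should produce the same 16-bit value as the table lookup.

```c
#include <stddef.h>
#include <stdint.h>

/* Saturate to 0..255, which is what the r_2_pix/g_2_pix/b_2_pix tables
   do for indices -256..511. */
static int clamp255(int x) {
    return x < 0 ? 0 : (x > 255 ? 255 : x);
}

/* Convert one Y/U/V sample to RGB565 with the same coefficients and
   per-term truncation as CreateYUVTab_16 (hypothetical helper). */
static uint16_t yuv_to_rgb565(uint8_t y, uint8_t u, uint8_t v) {
    int du = (int) u - 128;
    int dv = (int) v - 128;
    int r = clamp255(y + (int) (1.402 * dv));
    int g = clamp255(y - (int) (0.34414 * du) - (int) (0.71414 * dv));
    int b = clamp255(y + (int) (1.772 * du));
    /* red in bits 15..11, green in bits 10..5, blue in bits 4..0 */
    return (uint16_t) (((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3));
}

/* DisplayYUV_16 stores two horizontally adjacent pixels per 32-bit word:
   the left pixel in the low 16 bits, the right pixel in the high 16 bits. */
static uint32_t pack_two_pixels(uint16_t left, uint16_t right) {
    return (uint32_t) left | ((uint32_t) right << 16);
}

/* Minimum size in bytes of the Java `out` array for one RGB565 frame. */
static size_t rgb565_frame_bytes(int width, int height) {
    return (size_t) width * (size_t) height * 2;
}
```

For example, a 640x480 output needs an `out` array of at least rgb565_frame_bytes(640, 480) = 614400 bytes, because every pixel takes 16 bits. The tables in the post trade a few kilobytes of memory for avoiding floating-point math and range checks in the per-pixel loop.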

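Note: the interface function names Java_nbicc_ffmpeg_1test_MainActivity_XXXX must be changed to match your own project's package and class. See the end of the previous post for how to do this.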

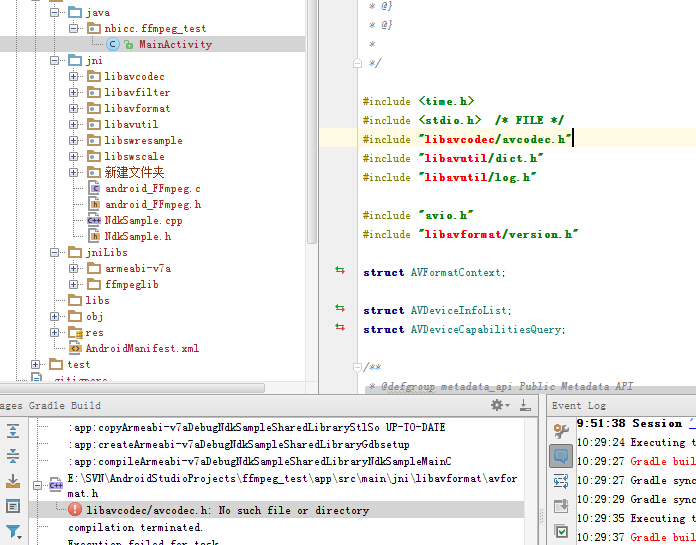

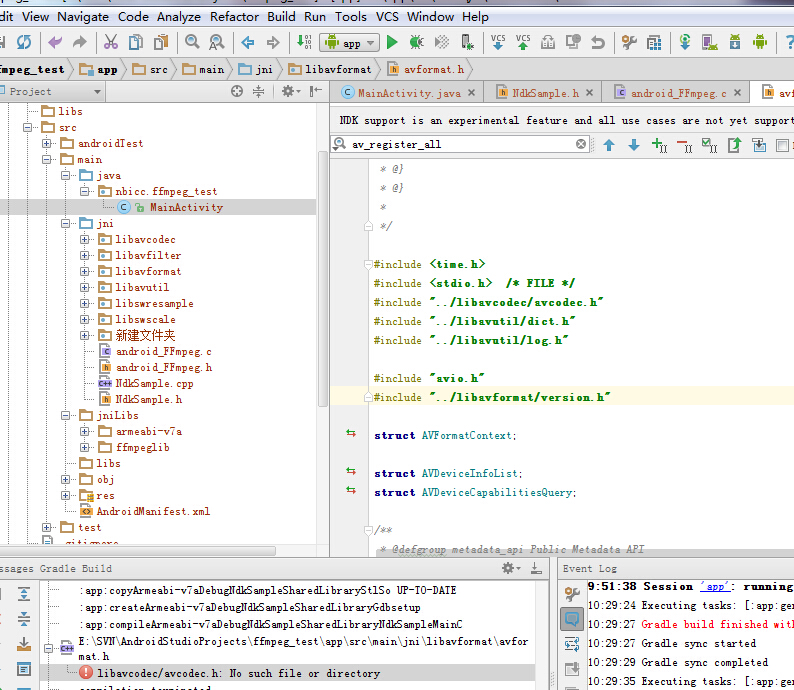
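These function names follow the JNI naming rules. A name is built from `Java_`, then the fully qualified class name with each `.` replaced by `_`, then `_`, then the method name. A literal underscore is escaped as `_1`, which is why the package ffmpeg_test appears as `ffmpeg_1test`. The sketch below builds such a name. The helpers jni_mangle and append_escaped are hypothetical. They only handle ASCII letters, digits, `.`, `/` and `_`, and they produce only the short form, without the `__<signature>` suffix that overloaded native methods need. The JDK's javah tool can also generate these declarations from the compiled class.

```c
#include <stddef.h>
#include <string.h>

/* Append the JNI-escaped form of s to out. Letters and digits are copied,
   '_' becomes "_1", and (if allow_sep) '.' or '/' becomes '_'.
   Returns 0 on success, -1 on an unsupported character or a full buffer. */
static int append_escaped(char *out, size_t cap, size_t *len,
                          const char *s, int allow_sep) {
    for (; *s; s++) {
        char one[2] = { *s, '\0' };
        const char *piece;
        if ((*s >= 'a' && *s <= 'z') || (*s >= 'A' && *s <= 'Z') ||
            (*s >= '0' && *s <= '9'))
            piece = one;
        else if (*s == '_')
            piece = "_1";
        else if (allow_sep && (*s == '.' || *s == '/'))
            piece = "_";
        else
            return -1;
        size_t n = strlen(piece);
        if (*len + n + 1 > cap)
            return -1;
        memcpy(out + *len, piece, n);
        *len += n;
    }
    out[*len] = '\0';
    return 0;
}

/* Build "Java_<class>_<method>" for a non-overloaded native method. */
static int jni_mangle(const char *class_name, const char *method,
                      char *out, size_t cap) {
    size_t len = 5;
    if (cap < 6)
        return -1;
    memcpy(out, "Java_", 5);
    out[len] = '\0';
    if (append_escaped(out, cap, &len, class_name, 1) != 0)
        return -1;
    if (len + 2 > cap)
        return -1;
    out[len++] = '_';
    out[len] = '\0';
    return append_escaped(out, cap, &len, method, 0);
}
```

Calling `jni_mangle("nbicc.ffmpeg_test.MainActivity", "H264Decode", buf, sizeof buf)` yields the same symbol that android_FFmpeg.h declares. For your own project, pass your package and class instead.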

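7. Now click Build. The build reports that the FFmpeg .h files included in android_FFmpeg.c cannot be found. Double-click the error message to jump to the offending line:

Change the line highlighted in red by adding ../ in front of the path: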

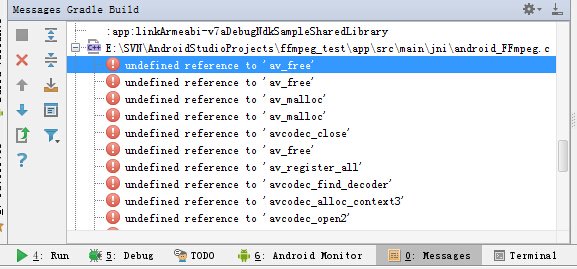

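Fix each one this way until all the .h includes have been corrected. The build then reports a different error:

Congratulations: this means all the header includes are now correct, and what is missing is the function libraries.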

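8. Open the module's build.gradle file and change the android.productFlavors section to:

```groovy
android.productFlavors {
    create("arm7") {
        ndk.with {
            ndk.abiFilters.add("armeabi-v7a")
            //ndk.abiFilters.add("armeabi")
            File curDir = file('./')
            curDir = file(curDir.absolutePath)
            // backslash separators: this path form assumes a Windows host
            String libsDir = curDir.absolutePath + "\\src\\main\\jniLibs\\armeabi-v7a\\" //"-L" +
            ldLibs += libsDir + "libijkffmpeg.so"
        }
    }
    //create("arm8") { // not needed for the platform I want to build
    //    ndk.abiFilters.add("arm64-v8a")
    //}
}
```

The configuration above references a single packaged FFmpeg library. Here the file is named libijkffmpeg.so; replace it with the name of your own single .so, such as libffmpeg.so. You can also use the separate, unpackaged .so files: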

```groovy
android.productFlavors {
    create("arm7") {
        ndk.with {
            ndk.abiFilters.add("armeabi-v7a")
            //ndk.abiFilters.add("armeabi")
            File curDir = file('./')
            curDir = file(curDir.absolutePath)
            // backslash separators: this path form assumes a Windows host
            String libsDir = curDir.absolutePath + "\\src\\main\\jniLibs\\armeabi-v7a\\" //"-L" +
            ldLibs += libsDir + "libavcodec.so"
            ldLibs += libsDir + "libavcodec-56.so"
            ldLibs += libsDir + "libavfilter.so"
            ldLibs += libsDir + "libavfilter-5.so"
            ldLibs += libsDir + "libavformat.so"
            ldLibs += libsDir + "libavformat-56.so"
            ldLibs += libsDir + "libavutil.so"
            ldLibs += libsDir + "libavutil-54.so"
            ldLibs += libsDir + "libswresample.so"
            ldLibs += libsDir + "libswresample-1.so"
            ldLibs += libsDir + "libswscale.so"
            ldLibs += libsDir + "libswscale-3.so"
        }
    }
    //create("arm8") {
    //    ndk.abiFilters.add("arm64-v8a")
    //}
}
```

Note: libsDir, which is curDir.absolutePath followed by src\main\jniLibs\armeabi-v7a, is the directory created in step 4.

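When you are done, build again. This time the build succeeds with no errors. Open app\build\intermediates\binaries\debug\arm7\lib\armeabi-v7a and look at the generated libNDKTEST.so: it should be somewhat larger than the one generated in the previous post.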

9. Call the FFmpeg function from the Activity and check whether it runs successfully on the phone:

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);

    String ret = sayHello("string input");
    Log.i("JNI_INFO", ret);

    String ret2 = GetFFmpegVersion();
    Log.i("JNI_INFO", ret2);
}
```

The complete code is:

```java
package nbicc.ffmpeg_test;

import android.os.Bundle;
import android.support.v7.app.AppCompatActivity;
import android.util.Log;
import android.widget.TextView;

public class MainActivity extends AppCompatActivity {

    private TextView tv = null;

    // sayHello is implemented by the JNI code from the previous post
    public native static String sayHello(String str);

    // implemented in android_FFmpeg.c
    public native String GetFFmpegVersion();
    public native int H264DecoderInit(int width, int height);
    public native int H264DecoderRelease();
    public native int H264Decode(byte[] in, int insize, byte[] out);

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        String ret = sayHello("string input");
        Log.i("JNI_INFO", ret);

        String ret2 = GetFFmpegVersion();
        Log.i("JNI_INFO", ret2);
    }

    static {
        // loads libNDKTEST.so, the library built from the jni directory
        System.loadLibrary("NDKTEST");
    }
}
```

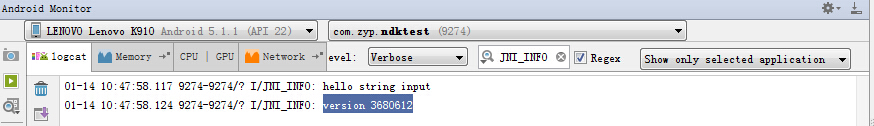

After connecting the phone and installing and running the app, Android Monitor shows:

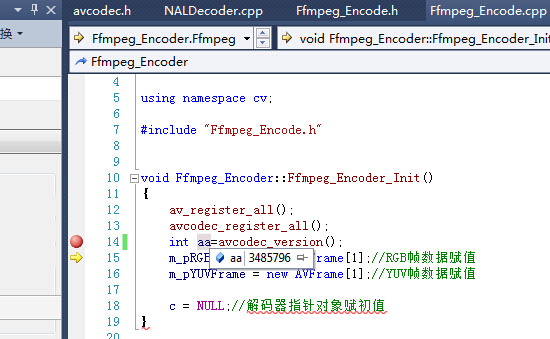

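This shows that the FFmpeg version is 3680612… I don't understand what this number means, but it is the same kind of output that the same function gives in the FFmpeg I use in Visual Studio.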
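The value returned by avcodec_version() is LIBAVCODEC_VERSION_INT. FFmpeg builds this value with its AV_VERSION_INT macro as (major << 16) | (minor << 8) | micro. Unpacking 3680612 this way gives 56.41.100, and the major version 56 matches the libavcodec-56.so file from the first download. The sketch below shows the unpacking. The helpers split_lavc_version and format_lavc_version are hypothetical; inside the project, the AV_VERSION_MAJOR, AV_VERSION_MINOR and AV_VERSION_MICRO macros from libavutil/version.h do the same job.

```c
#include <stddef.h>
#include <stdio.h>

/* libavcodec packs its version as (major << 16) | (minor << 8) | micro. */
static void split_lavc_version(unsigned v, unsigned *major, unsigned *minor,
                               unsigned *micro) {
    *major = v >> 16;
    *minor = (v >> 8) & 0xFF;
    *micro = v & 0xFF;
}

/* Write "major.minor.micro" into buf; returns snprintf's result. */
static int format_lavc_version(unsigned v, char *buf, size_t cap) {
    unsigned major, minor, micro;
    split_lavc_version(v, &major, &minor, &micro);
    return snprintf(buf, cap, "%u.%u.%u", major, minor, micro);
}
```

Applied in GetFFmpegVersion, this would log a readable "56.41.100"-style string instead of the packed integer.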

OK, now let's move on to the actual Android decoding development!
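H264Decode passes whatever byte chunk the Java side supplies to avcodec_decode_video2. Assume the input is a raw H.264 Annex B stream; the post does not say where the data will come from, so this is only an assumption. In that case, one simple way to cut the stream into chunks is at the start codes 00 00 01 and 00 00 00 01. The sketch below does this. The functions find_start_code and next_nal_unit are hypothetical, and each unit they report includes its start code. They do not group NAL units into complete frames; FFmpeg's own parser API (av_parser_init / av_parser_parse2) is one way to do that. The logic is written in C to match the rest of the post, but it could equally live on the Java side that fills the `in` array.

```c
#include <stddef.h>
#include <stdint.h>

/* Find the next Annex B start code (00 00 01 or 00 00 00 01) at or after
   `from`. Returns its offset and sets *code_len to 3 or 4, or returns
   `len` and sets *code_len to 0 if there is none. */
static size_t find_start_code(const uint8_t *buf, size_t len, size_t from,
                              size_t *code_len) {
    for (size_t i = from; i + 3 <= len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            if (i > from && buf[i - 1] == 0) {  /* 4-byte form 00 00 00 01 */
                *code_len = 4;
                return i - 1;
            }
            *code_len = 3;
            return i;
        }
    }
    *code_len = 0;
    return len;
}

/* Iterate over NAL units. Each call reports the next unit, including its
   start code, as buf[*nal_start .. *nal_start + *nal_size) and advances
   *pos. Returns 1 while units remain, 0 at the end of the buffer. */
static int next_nal_unit(const uint8_t *buf, size_t len, size_t *pos,
                         size_t *nal_start, size_t *nal_size) {
    size_t code_len, next_code_len;
    size_t start = find_start_code(buf, len, *pos, &code_len);
    if (start >= len)
        return 0;
    size_t next = find_start_code(buf, len, start + code_len, &next_code_len);
    *nal_start = start;
    *nal_size = next - start;
    *pos = next;
    return 1;
}
```

Start with `pos = 0` and call next_nal_unit in a loop until it returns 0. Each reported range is a candidate for one `in` buffer.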
