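Docker Networking

Groundwork for container orchestration and cluster deployment

Understanding docker0

Before starting, remove all images and containers so that only networking is involved, which makes the results easier to follow.

Test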

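# Show the current IP addresses: ip addr

# lo: the local loopback address

# enp0s3: the internal (private) network address, e.g. an Aliyun intranet IP

# docker0: the network interface created by Docker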

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:01:7a:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.111.191/23 brd 192.168.111.255 scope global noprefixroute dynamic enp0s3

valid_lft 85628sec preferred_lft 85628sec

inet6 fe80::846b:f00b:132c:e06d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:88:c2:fa:a7 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

Three network interfaces: lo, enp0s3 and docker0

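# Question: how does Docker handle network access for containers?

# Can the host reach a container from outside it (ping its IP)?

# Take tomcat as an example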

docker run -d -P --name tomcat01 tomcat

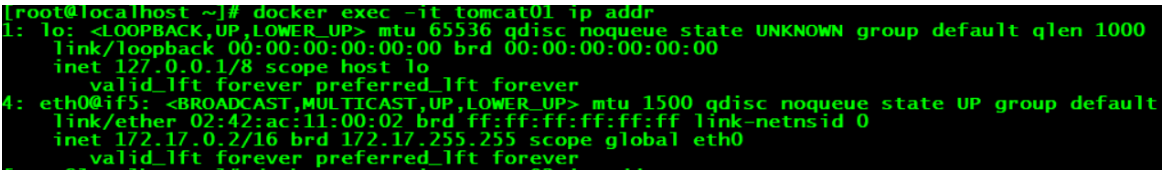

# Check the container's internal addresses with ip addr: on startup the container gets an interface eth0@if5 whose IP address is assigned by Docker

[root@localhost ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Question: can the Linux host ping the container?

[root@localhost ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.070 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.049 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.093 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.048 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.081 ms

64 bytes from 172.17.0.2: icmp_seq=6 ttl=64 time=0.049 ms

64 bytes from 172.17.0.2: icmp_seq=7 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=8 ttl=64 time=0.116 ms

64 bytes from 172.17.0.2: icmp_seq=9 ttl=64 time=0.053 ms

64 bytes from 172.17.0.2: icmp_seq=10 ttl=64 time=0.077 ms

64 bytes from 172.17.0.2: icmp_seq=11 ttl=64 time=0.054 ms

64 bytes from 172.17.0.2: icmp_seq=12 ttl=64 time=0.048 ms

64 bytes from 172.17.0.2: icmp_seq=13 ttl=64 time=0.071 ms

64 bytes from 172.17.0.2: icmp_seq=14 ttl=64 time=0.048 ms

64 bytes from 172.17.0.2: icmp_seq=15 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=16 ttl=64 time=0.123 ms

64 bytes from 172.17.0.2: icmp_seq=17 ttl=64 time=0.047 ms

^C

--- 172.17.0.2 ping statistics ---

17 packets transmitted, 17 received, 0% packet loss, time 15999ms

rtt min/avg/max/mdev = 0.047/0.065/0.123/0.026 ms
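The ping succeeds because docker0 holds 172.17.0.1/16 and that subnet contains 172.17.0.2, so the host has a directly connected route to the container. The Python sketch below reproduces that reasoning from a trimmed copy of the `ip addr` output above. It is a simplification: it only checks subnet membership, while the kernel consults the full routing table (which `ip route get 172.17.0.2` displays).

```python
from __future__ import annotations

import ipaddress
import re

# Trimmed copy of the host's `ip addr` output shown above
IP_ADDR_OUTPUT = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536
inet 127.0.0.1/8 scope host lo
2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
inet 192.168.111.191/23 brd 192.168.111.255 scope global noprefixroute dynamic enp0s3
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500
inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
"""

# An "inet <address>/<prefix> ... <ifname>" line; the interface name is the last field
INET_RE = re.compile(r"^\s*inet (\S+) .*?(\S+)$")


def interface_addresses(text: str) -> dict[str, ipaddress.IPv4Interface]:
    """Map interface name -> IPv4 address with prefix length."""
    result = {}
    for line in text.splitlines():
        match = INET_RE.match(line)
        if match:
            result[match.group(2)] = ipaddress.IPv4Interface(match.group(1))
    return result


def directly_connected(text: str, target: str) -> str | None:
    """Return the interface whose subnet contains target, or None if no subnet does."""
    address = ipaddress.IPv4Address(target)
    for name, iface in interface_addresses(text).items():
        if address in iface.network:
            return name
    return None


print(directly_connected(IP_ADDR_OUTPUT, "172.17.0.2"))  # docker0
```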

# Compare with the ip addr output from inside the container above

# Host interface 5: vethad20ddc@if4 is the peer of the container's 4: eth0@if5

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:01:7a:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.111.191/23 brd 192.168.111.255 scope global noprefixroute dynamic enp0s3

valid_lft 83012sec preferred_lft 83012sec

inet6 fe80::846b:f00b:132c:e06d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:88:c2:fa:a7 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:88ff:fec2:faa7/64 scope link

valid_lft forever preferred_lft forever

5: vethad20ddc@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 22:66:b7:47:47:45 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::2066:b7ff:fe47:4745/64 scope link

valid_lft forever preferred_lft forever

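Every time a container starts, Docker assigns it an IP address. As soon as Docker is installed there is a network interface, docker0, working in bridge mode, and containers are attached to it using veth-pair technology.

Run ip addr again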

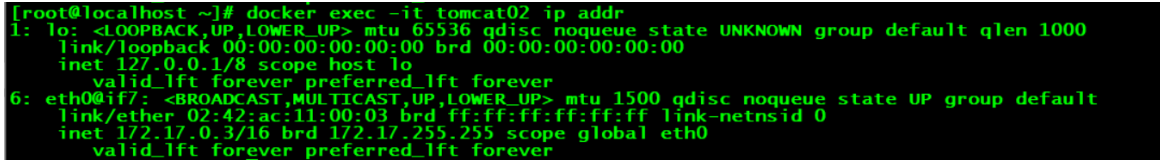

- Start a second container, tomcat02

docker run -d -P --name tomcat02 tomcat

# Check the addresses inside the container (cefb489bcb51 is tomcat02's container ID)

[root@localhost ~]# docker exec -it cefb489bcb51 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Check the addresses on the Linux host

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:01:7a:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.111.191/23 brd 192.168.111.255 scope global noprefixroute dynamic enp0s3

valid_lft 84897sec preferred_lft 84897sec

inet6 fe80::846b:f00b:132c:e06d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:d4:f1:fe:cf brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:d4ff:fef1:fecf/64 scope link

valid_lft forever preferred_lft forever

5: veth1beee03@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 6e:56:36:f2:64:6a brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::6c56:36ff:fef2:646a/64 scope link

valid_lft forever preferred_lft forever

7: vethc94a5e8@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 2a:cd:89:5a:e0:9e brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::28cd:89ff:fe5a:e09e/64 scope link

valid_lft forever preferred_lft forever

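A new pair of interfaces, 6 and 7, has appeared: every container start adds one pair of network interfaces (one inside the container, one on the host).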
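The `@ifN` suffix is what ties each pair together: on the host, `5: veth1beee03@if4` points at interface index 4, and inside tomcat01, `4: eth0@if5` points back at index 5. A minimal Python sketch that pairs host-side veths with container eth0 interfaces using only the header lines above; it assumes, as in this output, that the index numbers seen on the host and in the containers do not collide.

```python
from __future__ import annotations

import re

# Header lines copied from the host's `ip addr` output above
HOST_LINES = [
    "5: veth1beee03@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500",
    "7: vethc94a5e8@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500",
]
# Header lines copied from `ip addr` inside tomcat01 and tomcat02
CONTAINER_LINES = {
    "tomcat01": "4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500",
    "tomcat02": "6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500",
}

# "<own index>: <name>@if<peer index>:"
HEADER_RE = re.compile(r"^(\d+): ([^@:]+)@if(\d+):")


def parse_header(line: str) -> tuple[int, str, int]:
    """Return (own index, interface name, peer index) for one header line."""
    match = HEADER_RE.match(line)
    if match is None:
        raise ValueError(f"not a veth header: {line!r}")
    return int(match.group(1)), match.group(2), int(match.group(3))


def pair_veths(host_lines: list[str], container_lines: dict[str, str]) -> dict[str, str]:
    """Map container name -> host-side veth name via the @ifN peer indexes."""
    host_by_index = {}
    for line in host_lines:
        index, name, peer = parse_header(line)
        host_by_index[index] = (name, peer)
    pairs = {}
    for container, line in container_lines.items():
        index, _name, peer = parse_header(line)
        host_name, host_peer = host_by_index[peer]
        # Both ends must point at each other, e.g. eth0@if5 <-> veth...@if4
        if host_peer != index:
            raise ValueError(f"{container}: {host_name} does not point back to index {index}")
        pairs[container] = host_name
    return pairs


print(pair_veths(HOST_LINES, CONTAINER_LINES))
# {'tomcat01': 'veth1beee03', 'tomcat02': 'vethc94a5e8'}
```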

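- The test results show that the interfaces created for containers always come in pairs.

- A veth-pair (Virtual Ethernet, VETH) is a pair of virtual network interfaces that always exist together: each end is attached to a protocol stack and the two ends are connected to each other, so data that goes out of one end comes in at the other.

- Because of this property, a veth-pair acts as a bridge between all kinds of virtual network devices.

- OpenStack, the links between Docker containers and OVS connections all use veth-pair technology.

- Next, test whether tomcat01 and tomcat02 can ping each other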

# Check tomcat01's IP

[root@localhost ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Check tomcat02's IP

[root@localhost ~]# docker exec -it tomcat02 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

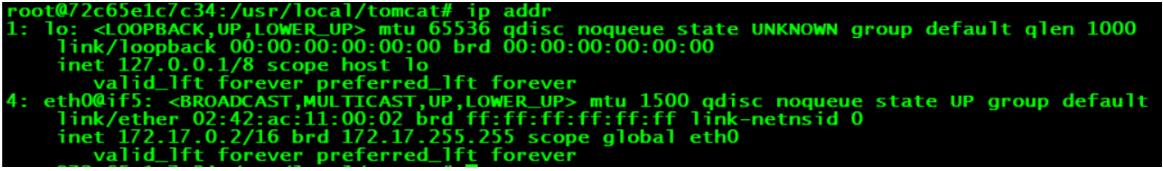

# Inside tomcat01 (container 72c65e1c7c34), ping tomcat02: it succeeds

root@72c65e1c7c34:/usr/local/tomcat# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.175 ms

64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.073 ms

64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.076 ms

64 bytes from 172.17.0.3: icmp_seq=4 ttl=64 time=0.063 ms

64 bytes from 172.17.0.3: icmp_seq=5 ttl=64 time=0.064 ms

64 bytes from 172.17.0.3: icmp_seq=6 ttl=64 time=0.149 ms

64 bytes from 172.17.0.3: icmp_seq=7 ttl=64 time=0.073 ms

^C

--- 172.17.0.3 ping statistics ---

7 packets transmitted, 7 received, 0% packet loss, time 7ms

rtt min/avg/max/mdev = 0.063/0.096/0.175/0.042 ms

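Conclusion: tomcat01 and tomcat02 share the same bridge, docker0, which also acts as their gateway (router).

When no network is specified, every container is attached through docker0, and Docker assigns each container an available IP address by default.

Docker uses Linux bridging.

All of Docker's network interfaces are virtual, and virtual interfaces forward efficiently; for example, transferring files over the internal network is fast.

As soon as a container is removed, its veth pair disappears with it.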
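The addresses so far (docker0 as the gateway on 172.17.0.1, then 172.17.0.2 and 172.17.0.3 for the two containers) look like "next free address in the subnet". The Python sketch below is a simplified model of that behavior, not Docker's actual IPAM implementation: it skips the network and broadcast addresses, the gateway and any address already in use, and returns the lowest free one. `next_free_ip` is a hypothetical helper name.

```python
from __future__ import annotations

import ipaddress


def next_free_ip(subnet: str, gateway: str, in_use: set[str]) -> str:
    """Simplified model: lowest host address not taken by the gateway or a container."""
    taken = {ipaddress.IPv4Address(gateway)} | {ipaddress.IPv4Address(a) for a in in_use}
    # hosts() already skips the network address and the broadcast address
    for candidate in ipaddress.IPv4Network(subnet).hosts():
        if candidate not in taken:
            return str(candidate)
    raise RuntimeError(f"subnet {subnet} has no free addresses")


in_use = set()
for name in ("tomcat01", "tomcat02", "tomcat03"):
    ip = next_free_ip("172.17.0.0/16", "172.17.0.1", in_use)
    in_use.add(ip)
    print(name, ip)
# tomcat01 172.17.0.2
# tomcat02 172.17.0.3
# tomcat03 172.17.0.4
```

In real Docker, addresses freed by removed containers can be handed out again, so the numbering is not guaranteed to stay this tidy.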

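--link

Consider this scenario: we have written a microservice with database url=ip:, and the database IP changes while the project keeps running without a restart. We want to handle that. Can we access containers by name instead?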

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cefb489bcb51 tomcat "catalina.sh run" 2 hours ago Up 2 hours 0.0.0.0:49154->8080/tcp, :::49154->8080/tcp tomcat02

72c65e1c7c34 tomcat "catalina.sh run" 2 hours ago Up 2 hours 0.0.0.0:49153->8080/tcp, :::49153->8080/tcp tomcat01

# Can we ping using the container name?

[root@localhost ~]# docker exec -it tomcat01 ping tomcat02

ping: tomcat02: Name or service not known

# Use --link to solve this connectivity problem

[root@localhost ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat

2d2101d11ea9783e09816922a36af7587ed0df996dc72ebb47f8c3d6007ac36c

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2d2101d11ea9 tomcat "catalina.sh run" About a minute ago Up About a minute 0.0.0.0:49155->8080/tcp, :::49155->8080/tcp tomcat03

cefb489bcb51 tomcat "catalina.sh run" 2 hours ago Up 2 hours 0.0.0.0:49154->8080/tcp, :::49154->8080/tcp tomcat02

72c65e1c7c34 tomcat "catalina.sh run" 2 hours ago Up 2 hours 0.0.0.0:49153->8080/tcp, :::49153->8080/tcp tomcat01

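# tomcat03 (started with --link tomcat02) can ping tomcat02 by name; then check whether the reverse direction works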

[root@localhost ~]# docker exec -it tomcat03 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.098 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.064 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.067 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=4 ttl=64 time=0.064 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=5 ttl=64 time=0.064 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=6 ttl=64 time=0.108 ms

^C

--- tomcat02 ping statistics ---

6 packets transmitted, 6 received, 0% packet loss, time 5ms

rtt min/avg/max/mdev = 0.064/0.077/0.108/0.020 ms

[root@localhost ~]# docker exec -it tomcat02 ping tomcat03

ping: tomcat03: Name or service not known

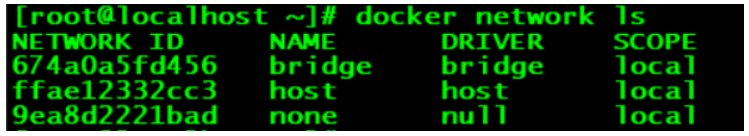

# Show network information

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

674a0a5fd456 bridge bridge local # the default bridge network, i.e. docker0

ffae12332cc3 host host local

9ea8d2221bad none null local

# Show the network's details

[root@localhost ~]# docker inspect 674a0a5fd456

[

{

"Name": "bridge",

"Id": "674a0a5fd45657796a4653c19ec56e63fd4db39d38d1393549e2a2a365e8e37d",

"Created": "2021-06-28T10:34:19.66050121+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1" # default gateway, i.e. docker0

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"6dc2e56fd0fac553beeeb9f0bbbf0a8be2a384fbdd7650d3c1958a490eb9d642": {

"Name": "tomcat03",

"EndpointID": "dd12f53b7dbdc1b9c9179f602cb4724cb0f4f99ca4d3a2011563c5558378ee4e",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

},

"9a0634536a9c32292d31ba4a06c710ab69800dc36eeebc157f7703b44b80b9a7": {

"Name": "tomcat01",

"EndpointID": "a1d11eb40afe5b77c6956c72f0ca1d2adeec808c4ae7f51dd67fd8c74541dc9d",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

},

"b57bca4a2492424eb6829f7e6403fa146be806ef7d1ed629694b8f58b28bfd18": {

"Name": "tomcat02",

"EndpointID": "aa63c7783d04a04bb8716b34fa9778cfadd030d8dbf7e2c85ffff4201560440e",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]
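Because `docker inspect` prints JSON, the container-to-IP mapping above is easy to extract programmatically. A minimal Python sketch, assuming the output has been captured into a string (for example from `docker network inspect bridge`); it uses a trimmed copy of the output above with the container IDs shortened.

```python
from __future__ import annotations

import json

# Trimmed copy of the `docker inspect 674a0a5fd456` output above (IDs shortened)
INSPECT_JSON = """
[
  {
    "Name": "bridge",
    "IPAM": {"Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]},
    "Containers": {
      "6dc2e56fd0fa": {"Name": "tomcat03", "IPv4Address": "172.17.0.4/16"},
      "9a0634536a9c": {"Name": "tomcat01", "IPv4Address": "172.17.0.2/16"},
      "b57bca4a2492": {"Name": "tomcat02", "IPv4Address": "172.17.0.3/16"}
    }
  }
]
"""


def container_ips(inspect_output: str) -> dict[str, str]:
    """Map container name -> IPv4 address (prefix length dropped) for the first network."""
    network = json.loads(inspect_output)[0]
    return {
        info["Name"]: info["IPv4Address"].split("/")[0]
        for info in network["Containers"].values()
    }


for name, ip in sorted(container_ips(INSPECT_JSON).items()):
    print(name, ip)
# tomcat01 172.17.0.2
# tomcat02 172.17.0.3
# tomcat03 172.17.0.4
```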

Exploring inspect

The bridge network lists tomcat01, tomcat02 and tomcat03

Look at tomcat03's details

How it works

# Check tomcat03's hosts file

[root@localhost ~]# docker exec -it tomcat03 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.3 tomcat02 b57bca4a2492 # this is why tomcat03 can ping tomcat02

172.17.0.4 6dc2e56fd0fa # tomcat03 itself

# Now check tomcat02

[root@localhost ~]# docker exec -it tomcat02 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

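172.17.0.3 b57bca4a2492 # only tomcat02 itself

# For tomcat02 to ping tomcat03, tomcat02 would have to be recreated with the extra configuration (--link tomcat03)

What --link actually does: it adds the entry 172.17.0.3 tomcat02 b57bca4a2492 to the /etc/hosts file of the container being started.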
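Since --link only adds a line to the hosts file of the container being started, name lookup works in one direction only. The Python sketch below performs a plain hosts-file lookup on the two files shown above to make that asymmetry explicit; it ignores DNS and the other sources a real resolver may consult, and `hosts_lookup` is a hypothetical helper.

```python
from __future__ import annotations

# Relevant lines of /etc/hosts in tomcat03 and tomcat02, copied from above
TOMCAT03_HOSTS = """\
127.0.0.1 localhost
172.17.0.3 tomcat02 b57bca4a2492
172.17.0.4 6dc2e56fd0fa
"""
TOMCAT02_HOSTS = """\
127.0.0.1 localhost
172.17.0.3 b57bca4a2492
"""


def hosts_lookup(hosts_text: str, name: str) -> str | None:
    """Return the IP listed for name in hosts-file text, or None if no line lists it."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, split on whitespace
        if len(fields) >= 2 and name in fields[1:]:
            return fields[0]
    return None


print(hosts_lookup(TOMCAT03_HOSTS, "tomcat02"))  # 172.17.0.3
print(hosts_lookup(TOMCAT02_HOSTS, "tomcat03"))  # None
```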

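Using --link is no longer recommended; rather than docker0, use a custom network.

Drawback of docker0: it does not support access by container name.

Custom networks

List all Docker networks

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

674a0a5fd456 bridge bridge local

ffae12332cc3 host host local

9ea8d2221bad none null local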

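Network modes

bridge: bridge mode, a bridge set up by Docker (the default; networks you create yourself use it too)

none: no networking configured (rarely used)

host: share the host's network

container: join another container's network (rarely used, very limited)

Test

# A container started without --net implicitly uses --net bridge, and that network is docker0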

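docker run -d -P --name tomcat01 tomcat

# is equivalent to

docker run -d -P --name tomcat01 --net bridge tomcat

# docker0 characteristics: 1. it is the default; 2. container names cannot be resolved; 3. --link can connect containers

# We can define our own network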

[root@localhost ~]# docker network create --help

Usage: docker network create [OPTIONS] NETWORK

Create a network

Options:

--attachable Enable manual container attachment

--aux-address map Auxiliary IPv4 or IPv6 addresses used by Network driver (default map[])

--config-from string The network from which to copy the configuration

--config-only Create a configuration only network

-d, --driver string Driver to manage the Network (default "bridge")

--gateway strings IPv4 or IPv6 Gateway for the master subnet

--ingress Create swarm routing-mesh network

--internal Restrict external access to the network

--ip-range strings Allocate container ip from a sub-range

--ipam-driver string IP Address Management Driver (default "default")

--ipam-opt map Set IPAM driver specific options (default map[])

--ipv6 Enable IPv6 networking

--label list Set metadata on a network

-o, --opt map Set driver specific options (default map[])

--scope string Control the network's scope

--subnet strings Subnet in CIDR format that represents a network segment

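# --driver bridge sets the network driver (bridge is the default)

# --subnet 192.168.0.0/16 sets the subnet, 192.168.0.0 to 192.168.255.255; containers get addresses from 192.168.0.2 to 192.168.255.254

# --gateway 192.168.0.1 sets the gateway address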

[root@localhost ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

d642a24a250ddef9662f7091362596c6ea76d809f0e1b1d8418a5747dce2d921
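The address arithmetic behind `--subnet 192.168.0.0/16 --gateway 192.168.0.1` can be checked with Python's standard `ipaddress` module. The sketch assumes, as the later inspect output confirms (tomcat-net-01 gets 192.168.0.2), that containers are numbered right after the gateway.

```python
import ipaddress

subnet = ipaddress.IPv4Network("192.168.0.0/16")
gateway = ipaddress.IPv4Address("192.168.0.1")

total = subnet.num_addresses              # 2**16 addresses in a /16
usable = total - 2                        # minus the network and broadcast addresses
first_host = subnet.network_address + 1   # 192.168.0.1, taken by the gateway here
last_host = subnet.broadcast_address - 1  # 192.168.255.254
first_container = gateway + 1             # containers start right after the gateway here

print(total, usable)                # 65536 65534
print(first_host, last_host)        # 192.168.0.1 192.168.255.254
print(gateway in subnet)            # True
print(first_container, usable - 1)  # 192.168.0.2 65533
```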

# List all networks

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

674a0a5fd456 bridge bridge local

ffae12332cc3 host host local

d642a24a250d mynet bridge local

9ea8d2221bad none null local

# Show details of the specified network

[root@localhost ~]# docker inspect d642a24a250d

[

{

"Name": "mynet",

"Id": "d642a24a250ddef9662f7091362596c6ea76d809f0e1b1d8418a5747dce2d921",

"Created": "2021-06-28T14:27:24.063041512+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

# Start containers on our own network

[root@localhost ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

[root@localhost ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

# Check the IPs

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:01:7a:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.111.191/23 brd 192.168.111.255 scope global noprefixroute dynamic enp0s3

valid_lft 70380sec preferred_lft 70380sec

inet6 fe80::846b:f00b:132c:e06d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:44:50:c8:c1 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:44ff:fe50:c8c1/64 scope link

valid_lft forever preferred_lft forever

10: br-d642a24a250d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:86:d2:82:2f brd ff:ff:ff:ff:ff:ff

inet 192.168.0.1/16 brd 192.168.255.255 scope global br-d642a24a250d

valid_lft forever preferred_lft forever

inet6 fe80::42:86ff:fed2:822f/64 scope link

valid_lft forever preferred_lft forever

12: veth5d49e7b@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-d642a24a250d state UP group default

link/ether 12:25:f6:b1:16:8d brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::1025:f6ff:feb1:168d/64 scope link

valid_lft forever preferred_lft forever

14: veth380147e@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br-d642a24a250d state UP group default

link/ether a6:50:b2:1b:01:73 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::a450:b2ff:fe1b:173/64 scope link

valid_lft forever preferred_lft forever

Test again whether the containers can ping each other

# Look up the IPs

# The IP shown is 192.168.0.2

[root@localhost ~]# docker inspect tomcat-net-01

# The IP shown is 192.168.0.3

[root@localhost ~]# docker inspect tomcat-net-02

# Test whether they can ping each other

# Ping by IP

[root@localhost ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.111 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.062 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.070 ms

64 bytes from 192.168.0.3: icmp_seq=4 ttl=64 time=0.081 ms

64 bytes from 192.168.0.3: icmp_seq=5 ttl=64 time=0.070 ms

64 bytes from 192.168.0.3: icmp_seq=6 ttl=64 time=0.068 ms

64 bytes from 192.168.0.3: icmp_seq=7 ttl=64 time=0.070 ms

^C

--- 192.168.0.3 ping statistics ---

7 packets transmitted, 7 received, 0% packet loss, time 1005ms

rtt min/avg/max/mdev = 0.062/0.076/0.111/0.015 ms

# Ping by name

[root@localhost ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.068 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.065 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.060 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.065 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=5 ttl=64 time=0.063 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=6 ttl=64 time=0.066 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=7 ttl=64 time=0.074 ms

^C

--- tomcat-net-02 ping statistics ---

7 packets transmitted, 7 received, 0% packet loss, time 7ms

rtt min/avg/max/mdev = 0.060/0.065/0.074/0.011 ms

On a custom network Docker maintains the name-to-IP mappings for us, so custom networks are recommended.
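Inside a container on a user-defined network, names are answered by Docker's embedded DNS server (127.0.0.11 in the container's /etc/resolv.conf), which is why `ping tomcat-net-02` works here while `ping tomcat02` failed on docker0. A minimal Python check, assuming a Python interpreter is available in the container (the tomcat image may not include one); `try_resolve` is a hypothetical helper.

```python
from __future__ import annotations

import socket


def try_resolve(name: str) -> str | None:
    """Return an IPv4 address for name, or None if resolution fails."""
    try:
        return socket.gethostbyname(name)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None


# Inside tomcat-net-01 on mynet this is expected to print 192.168.0.3;
# inside a container on the default bridge it prints None.
print(try_resolve("tomcat-net-02"))
```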

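Benefits of custom networks

- redis: different clusters use different networks, keeping each cluster secure and healthy

- mysql: different clusters use different networks, keeping each cluster secure and healthy

Connecting networks

# Now start two more tomcat containers on the default network (docker0)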

[root@localhost ~]# docker run -d -P --name tomcat01 tomcat

bb981cf01e5ac7a5aca1657ecbc030fa7c0fea8f86af1f20242d8198ed84dac9

[root@localhost ~]# docker run -d -P --name tomcat02 tomcat

f162c6c2bc87cc8955652f6d7dae20623a3f9bc357462bd068e83ca840d9a224

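Containers on different subnets cannot ping each other, so the two networks have to be connected. This does not mean connecting the network interfaces (docker0 and the mynet bridge) to each other, because that would change the networks themselves.

What actually happens: a container gets connected to mynet.

Connecting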

[root@localhost ~]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network # use this command to connect them

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

# The connect command

[root@localhost ~]# docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:

--alias strings Add network-scoped alias for the container

--driver-opt strings driver options for the network

--ip string IPv4 address (e.g., 172.30.100.104)

--ip6 string IPv6 address (e.g., 2001:db8::33)

--link list Add link to another container

--link-local-ip strings Add a link-local address for the container

Test: connect tomcat01 to mynet

# Connect tomcat01 to mynet

[root@localhost ~]# docker network connect mynet tomcat01

# Show the network information

# After connecting, tomcat01 has been added to the mynet subnet

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "d642a24a250ddef9662f7091362596c6ea76d809f0e1b1d8418a5747dce2d921",

"Created": "2021-06-28T14:27:24.063041512+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"0d201a7005a2f1ec91e8c84f198bfd0b191efd7fc558e8d47e2f37e8a409217f": {

"Name": "tomcat-net-02",

"EndpointID": "965a834538bb56dc68e07af40e947a8a0e2ad4710131b87b2c8672a4060db588",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"bb981cf01e5ac7a5aca1657ecbc030fa7c0fea8f86af1f20242d8198ed84dac9": {

"Name": "tomcat01", # after connecting, tomcat01 is part of mynet

"EndpointID": "1a9a877b9bf5165890bbb6c03e6ab727f205ef1ad10b8c9c9ac0d4615492dbd0",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"ca3ca4b2ca9bf2df3fcebea7b97e3ce4d103ff4db90ce9340efc1a293cd8859a": {

"Name": "tomcat-net-01",

"EndpointID": "1418d84aa7a7729e174b0de7d16624aaf37eb99b840a3a3889db3dd88bc0e4e4",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

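# Now tomcat-net-01, tomcat-net-02 and tomcat01 can all reach one another, but tomcat02 still cannot reach the mynet containers

How it works: one container, two IPs, one on each network (here an address on docker0 and 192.168.0.4 on mynet).

Conclusion: to reach containers on another network, connect the container to that network with docker network connect.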
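The rule behind these results can be modeled as "two containers talk directly when they share at least one network", with `docker network connect` adding one more network to a container. A simplified Python model of the state after connecting tomcat01 to mynet; it ignores published host ports and options such as `enable_icc`, and the data structure is illustrative only.

```python
from __future__ import annotations

# Networks each container is attached to after `docker network connect mynet tomcat01`
MEMBERSHIP = {
    "tomcat-net-01": {"mynet"},
    "tomcat-net-02": {"mynet"},
    "tomcat01": {"bridge", "mynet"},  # one IP on each network
    "tomcat02": {"bridge"},
}


def can_reach(src: str, dst: str, membership: dict[str, set[str]] = MEMBERSHIP) -> bool:
    """Two containers can talk directly if they share at least one network."""
    return bool(membership[src] & membership[dst])


for dst in ("tomcat-net-01", "tomcat-net-02", "tomcat02"):
    print("tomcat01 ->", dst, can_reach("tomcat01", dst))
print("tomcat02 -> tomcat-net-01", can_reach("tomcat02", "tomcat-net-01"))
```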

Hands-on: deploying a Redis cluster (this ran into problems; shelved for now)

Sharding + high availability + load balancing
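For the sharding part, Redis Cluster divides the keyspace into 16384 hash slots: a key lives in slot CRC16(key) mod 16384, and when the key contains a non-empty `{...}` hash tag only the tag is hashed, which keeps related keys on the same node. A Python sketch of that rule from the Redis Cluster specification, using the CRC16 XMODEM variant; on a running cluster, `CLUSTER KEYSLOT <key>` should return the same numbers.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Hash slot (0..16383) for a key, honoring a non-empty {hash tag}."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag counts
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384


print(key_slot("foo"))  # 12182
print(key_slot("user:{42}:name") == key_slot("user:{42}:email"))  # True
```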

- Create a network (subnet 172.38.0.0/16)

docker network create redis --subnet 172.38.0.0/16

# Show its details

[root@localhost ~]# docker network inspect redis

[

{

"Name": "redis",

"Id": "99fe646b16089b694bda63f4a40de2e2e5d627499e3baed9fb3ecc90a4565f99",

"Created": "2021-06-28T16:12:28.921356782+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.38.0.0/16"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

- Use a script to create the configuration files for 6 Redis nodes

for port in $(seq 1 6);

do

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

- Check the result

[root@localhost ~]# cd /mydata/

[root@localhost mydata]# cd redis

[root@localhost redis]# ls

node-1 node-2 node-3 node-4 node-5 node-6

[root@localhost redis]# cd node-1

[root@localhost node-1]# ls

conf

[root@localhost node-1]# cd conf

[root@localhost conf]# ls

redis.conf

# Show the configuration that was written

[root@localhost conf]# cat redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

- Start command (the script version has problems)

docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

-v /mydata/redis/node-1/data:/data \

-v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

# Run log

[root@localhost ~]# docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

> -v /mydata/redis/node-1/data:/data \

> -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

> -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

Unable to find image 'redis:5.0.9-alpine3.11' locally

5.0.9-alpine3.11: Pulling from library/redis

cbdbe7a5bc2a: Pull complete

dc0373118a0d: Pull complete

cfd369fe6256: Pull complete

3e45770272d9: Pull complete

558de8ea3153: Pull complete

a2c652551612: Pull complete

Digest: sha256:83a3af36d5e57f2901b4783c313720e5fa3ecf0424ba86ad9775e06a9a5e35d0

Status: Downloaded newer image for redis:5.0.9-alpine3.11

95f3bf4958e0b392c5628d91d8cd096cf93ca547c0c26fed7530ae1b3722f434

# Start the remaining nodes the same way, changing the node number each time; for example, node 6:

docker run -p 6376:6379 -p 16376:16379 --name redis-6 \

-v /mydata/redis/node-6/data:/data \

-v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
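Only the node number changes between the redis-1 and redis-6 commands (host ports 637N and 1637N, name redis-N, the node-N paths and IP 172.38.0.1N), so the six commands can be generated instead of typed. A Python sketch that builds and prints them following exactly that pattern; it does not run anything (each list could be passed to `subprocess.run`, untested here), and the pattern only holds for nodes 1 to 9.

```python
from __future__ import annotations

import shlex

IMAGE = "redis:5.0.9-alpine3.11"


def redis_run_args(node: int) -> list[str]:
    """Build the `docker run` argv for redis-<node>, following the redis-1/redis-6 pattern."""
    base = f"/mydata/redis/node-{node}"
    return [
        "docker", "run",
        "-p", f"637{node}:6379",
        "-p", f"1637{node}:16379",
        "--name", f"redis-{node}",
        "-v", f"{base}/data:/data",
        "-v", f"{base}/conf/redis.conf:/etc/redis/redis.conf",
        "-d", "--net", "redis", "--ip", f"172.38.0.1{node}",
        IMAGE, "redis-server", "/etc/redis/redis.conf",
    ]


for node in range(1, 7):
    # shlex.join (Python 3.8+) turns the argv into a copy-pasteable shell line
    print(shlex.join(redis_run_args(node)))
```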

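Packaging a Spring Boot microservice as a Docker image

Once it works locally, click clean and then package to build the jar.

Packaging result:

Then create a Dockerfile.

What gets published is the packaged jar together with the Dockerfile.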

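Steps:

- Build the Spring Boot project

- Package the application (xxx.jar)

- Write a Dockerfile (to copy xxx.jar into the container)

- Build the image (with the jar copied into it)

- Publish and run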
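A Dockerfile for this only has to start from a Java runtime image, copy the jar in and run it. A minimal Python sketch that renders such a Dockerfile; the base image `eclipse-temurin:17-jre`, the jar name `demo.jar` and port 8080 (Spring Boot's default) are assumptions to adapt to your project.

```python
def render_dockerfile(jar_name: str, base_image: str = "eclipse-temurin:17-jre", port: int = 8080) -> str:
    """Return Dockerfile text that copies the packaged jar into the image and runs it."""
    return "\n".join([
        f"FROM {base_image}",
        f"COPY {jar_name} /app.jar",
        f"EXPOSE {port}",
        'ENTRYPOINT ["java", "-jar", "/app.jar"]',
        "",  # trailing newline
    ])


# demo.jar is a hypothetical name; use the jar Maven produced under target/
print(render_dockerfile("demo.jar"))
```

Save the printed text as `Dockerfile` next to the jar, then build and run it with `docker build -t demo .` and `docker run -d -P demo` (`demo` is a hypothetical image name).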
