Preface

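I'm on a tight budget, so this example uses a single server; please bear with me. If you can generalize from it to multiple machines, none of this is a problem. Please forgive any rough spots in the writing; Adao is very grateful.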

Preparation

cd /

mkdir dao

cd dao

mkdir zookeeper kafka

zookeeper

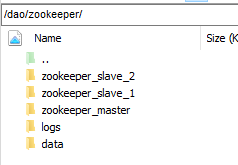

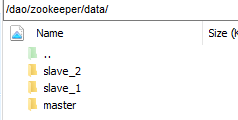

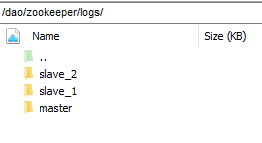

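Without further ado, let's start with the ZooKeeper configuration. I built a master/slave-style ZooKeeper cluster before and didn't bother renaming the folders, but strictly speaking these three ZooKeeper nodes are peers with no master/slave distinction; the leader is chosen by election at runtime. I created 8 folders: first data and logs under the zookeeper directory, then master, slave_1 and slave_2 under each of them. The commands are: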

cd /dao/zookeeper

mkdir data logs

cd data

mkdir master slave_1 slave_2

cd ../logs

mkdir master slave_1 slave_2
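The eight directories above can also be created in one idempotent step. This is a minimal POSIX sh sketch; `make_zk_dirs` is my own hypothetical helper name, and it assumes the same master/slave_1/slave_2 naming used in this article. It relies on `mkdir -p`, which creates missing parents and does not fail if a directory already exists.

```shell
# Hypothetical helper: create data/<node> and logs/<node> for all three ZooKeeper nodes.
# Usage: make_zk_dirs /dao/zookeeper
make_zk_dirs() (
  base="$1"
  for kind in data logs; do
    for node in master slave_1 slave_2; do
      # -p: create parents as needed, succeed silently if the directory exists
      mkdir -p "$base/$kind/$node"
    done
  done
)
```

For example, `make_zk_dirs /dao/zookeeper` produces the same tree as the commands above, and running it a second time changes nothing.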

Now download ZooKeeper, extract it, and make three copies (the third "copy" is simply a rename of the extracted directory):

cd /dao/zookeeper

wget http://mirrors.hust.edu.cn/apache/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz

tar -zxvf zookeeper-3.4.10.tar.gz

cp -r zookeeper-3.4.10 zookeeper_master

cp -r zookeeper-3.4.10 zookeeper_slave_1

mv zookeeper-3.4.10 zookeeper_slave_2

The resulting directory layout is shown in the figure.

Next come the key ZooKeeper settings. First, create a myid file in each of the three folders under data, containing 1, 2 and 3 respectively:

vi /dao/zookeeper/data/master/myid

Press i, type 1, then press Esc and save with :wq.

vi /dao/zookeeper/data/slave_1/myid

Press i, type 2, then press Esc and save with :wq.

vi /dao/zookeeper/data/slave_2/myid

Press i, type 3, then press Esc and save with :wq.
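If you'd rather not open vi three times, the myid files can be written non-interactively. A minimal sketch, assuming the directory layout above; `write_myids` is a hypothetical helper name. Each node's number must match the N of its `server.N` entry in zoo.cfg, and the file must sit in that node's dataDir.

```shell
# Hypothetical helper: write myid = 1, 2, 3 into data/master, data/slave_1, data/slave_2.
# Usage: write_myids /dao/zookeeper
write_myids() (
  base="$1"
  id=1
  for node in master slave_1 slave_2; do
    mkdir -p "$base/data/$node"
    # The file holds only the server number, matching server.<id> in zoo.cfg
    echo "$id" > "$base/data/$node/myid"
    id=$((id + 1))
  done
)
```

After `write_myids /dao/zookeeper`, `cat /dao/zookeeper/data/slave_1/myid` prints 2.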

Now let's look at the zoo.cfg of each instance:

vi /dao/zookeeper/zookeeper_master/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/dao/zookeeper/data/master

dataLogDir=/dao/zookeeper/logs/master

# the port at which the clients will connect

clientPort=2191

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=127.0.0.1:2888:3888

server.2=127.0.0.1:2889:3889

server.3=127.0.0.1:2890:3890

vi /dao/zookeeper/zookeeper_slave_1/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/dao/zookeeper/data/slave_1

dataLogDir=/dao/zookeeper/logs/slave_1

# the port at which the clients will connect

clientPort=2192

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=127.0.0.1:2888:3888

server.2=127.0.0.1:2889:3889

server.3=127.0.0.1:2890:3890

vi /dao/zookeeper/zookeeper_slave_2/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/dao/zookeeper/data/slave_2

dataLogDir=/dao/zookeeper/logs/slave_2

# the port at which the clients will connect

clientPort=2193

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=127.0.0.1:2888:3888

server.2=127.0.0.1:2889:3889

server.3=127.0.0.1:2890:3890
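Since the three files differ only in dataDir, dataLogDir and clientPort, they can be generated from one template. A sketch assuming the instance directories are named `zookeeper_master`, `zookeeper_slave_1` and `zookeeper_slave_2` under the base directory; `write_zoo_cfgs` is a hypothetical helper, and it omits the comments and commented-out autopurge lines from the sample file. Because all three servers share 127.0.0.1, each `server.N` entry needs its own pair of ports (the first for follower-to-leader traffic, the second for leader election), which is why 2888/2889/2890 and 3888/3889/3890 are used.

```shell
# Hypothetical helper: generate zoo.cfg for the three local instances.
# Only dataDir, dataLogDir and clientPort vary; the server.N list is shared.
# Note: overwrites any existing conf/zoo.cfg.
# Usage: write_zoo_cfgs /dao/zookeeper
write_zoo_cfgs() (
  base="$1"
  port=2191
  for node in master slave_1 slave_2; do
    conf_dir="$base/zookeeper_$node/conf"
    mkdir -p "$conf_dir"
    cat > "$conf_dir/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$base/data/$node
dataLogDir=$base/logs/$node
clientPort=$port
server.1=127.0.0.1:2888:3888
server.2=127.0.0.1:2889:3889
server.3=127.0.0.1:2890:3890
EOF
    port=$((port + 1))
  done
)
```

For example, `write_zoo_cfgs /dao/zookeeper` gives zookeeper_slave_1 `clientPort=2192` and `dataDir=/dao/zookeeper/data/slave_1`.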

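The configuration is done: apart from dataDir and dataLogDir, the instances differ mainly in clientPort (2191, 2192, 2193). Time to start them. You could write a script for this, but the procedure is the same for each one: go into the instance's bin directory and start it: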

cd /dao/zookeeper/zookeeper_master/bin/

./zkServer.sh start

cd /dao/zookeeper/zookeeper_slave_1/bin/

./zkServer.sh start

cd /dao/zookeeper/zookeeper_slave_2/bin/

./zkServer.sh start
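The same start step can be written as a loop. This sketch (hypothetical `zk_all` helper) runs any `zkServer.sh` subcommand such as `start`, `stop` or `status` against all three instances. It changes into each `bin/` directory first, as the manual steps do; as far as I know, zkServer.sh in 3.4.x writes `zookeeper.out` to the current directory unless `ZOO_LOG_DIR` is set, so this keeps that file in a predictable place.

```shell
# Hypothetical helper: run one zkServer.sh action on all three instances.
# Usage: zk_all /dao/zookeeper start   (or stop, status, ...)
zk_all() (
  base="$1"
  action="$2"
  for node in master slave_1 slave_2; do
    echo "== zookeeper_$node: $action"
    # Inner subshell so the cd does not carry over to the next iteration
    (cd "$base/zookeeper_$node/bin" && ./zkServer.sh "$action") || exit 1
  done
)
```

After `zk_all /dao/zookeeper start`, running `zk_all /dao/zookeeper status` should report `Mode: leader` for one instance and `Mode: follower` for the other two once the quorum has formed.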

I won't cover anything else here (honestly, I don't know the rest, heh). On to Kafka.

kafka

As before, first create a logs directory, then three folders inside it: kafka_1, kafka_2 and kafka_3:

cd /dao/kafka

mkdir logs

cd logs

mkdir kafka_1 kafka_2 kafka_3

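Then go back up to /dao/kafka (otherwise the download lands inside logs), download Kafka, extract it, and make three copies:

cd /dao/kafka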

wget http://mirrors.hust.edu.cn/apache/kafka/2.0.1/kafka_2.11-2.0.1.tgz

tar -zxvf kafka_2.11-2.0.1.tgz

cp -r kafka_2.11-2.0.1 kafka_1

cp -r kafka_2.11-2.0.1 kafka_2

mv kafka_2.11-2.0.1 kafka_3

Time again for the main configuration. Follow along with my settings:

vi /dao/kafka/kafka_1/config/server.properties

broker.id=0

port=9092

listeners=PLAINTEXT://{private IP}:9092

advertised.listeners=PLAINTEXT://{public IP or domain name}:9092

message.max.bytes=5242880

default.replication.factor=2

replica.fetch.max.bytes=5242880

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/dao/kafka/logs/kafka_1/

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

vi /dao/kafka/kafka_1/config/consumer.properties

bootstrap.servers={public IP}:9092

group.id=logGroup

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

vi /dao/kafka/kafka_2/config/server.properties

broker.id=1

port=9093

listeners=PLAINTEXT://{private IP}:9093

advertised.listeners=PLAINTEXT://{public IP or domain name}:9093

message.max.bytes=5242880

default.replication.factor=2

replica.fetch.max.bytes=5242880

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/dao/kafka/logs/kafka_2/

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

vi /dao/kafka/kafka_2/config/consumer.properties

bootstrap.servers={public IP}:9093

group.id=logGroup

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

vi /dao/kafka/kafka_3/config/server.properties

broker.id=2

port=9094

listeners=PLAINTEXT://{private IP}:9094

advertised.listeners=PLAINTEXT://{public IP or domain name}:9094

message.max.bytes=5242880

default.replication.factor=2

replica.fetch.max.bytes=5242880

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/dao/kafka/logs/kafka_3/

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

vi /dao/kafka/kafka_3/config/consumer.properties

bootstrap.servers={public IP}:9094

group.id=logGroup

zookeeper.connect=localhost:2191,localhost:2192,localhost:2193

To sum up, these are the settings that differ between the three instances:

- server.properties

broker.id

port

listeners

advertised.listeners

log.dirs

- consumer.properties

bootstrap.servers (the port follows the broker: 9092, 9093, 9094)
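To make the per-broker differences explicit, here is a sketch that prints the five differing server.properties keys for broker i (0, 1 or 2). It assumes the article's conventions: broker i lives in kafka_$((i+1)), listens on port 9092+i, and keeps its data under /dao/kafka/logs/kafka_$((i+1))/. `broker_overrides` is a hypothetical helper, and the IP and host name in the usage example are placeholders. `port` is kept only to mirror the config above; in Kafka 2.0 it is deprecated and only used when `listeners` is not set.

```shell
# Hypothetical helper: print the server.properties keys that differ per broker.
# Args: broker index (0..2), private IP, public IP or domain name (placeholders).
# The log.dirs base path /dao/kafka/logs is hard-coded to match this article.
broker_overrides() (
  i="$1"
  internal_ip="$2"
  external_host="$3"
  port=$((9092 + i))
  echo "broker.id=$i"
  echo "port=$port"
  echo "listeners=PLAINTEXT://$internal_ip:$port"
  echo "advertised.listeners=PLAINTEXT://$external_host:$port"
  echo "log.dirs=/dao/kafka/logs/kafka_$((i + 1))/"
)
```

For example, `broker_overrides 2 10.0.0.5 kafka.example.com` prints the lines to compare against kafka_3/config/server.properties; copy-and-paste slips, such as two brokers sharing one log.dirs, are easy to spot this way.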

Let's try starting them:

sh /dao/kafka/kafka_1/bin/kafka-server-start.sh /dao/kafka/kafka_1/config/server.properties &

sh /dao/kafka/kafka_2/bin/kafka-server-start.sh /dao/kafka/kafka_2/config/server.properties &

sh /dao/kafka/kafka_3/bin/kafka-server-start.sh /dao/kafka/kafka_3/config/server.properties &
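As an alternative to `sh ... &`, kafka-server-start.sh accepts a `-daemon` flag as its first argument, which runs the broker in the background and sends its console output to a file under the installation's logs/ directory instead of your terminal. A sketch with a hypothetical `start_brokers` helper, assuming the kafka_1..kafka_3 layout above:

```shell
# Hypothetical helper: start all three brokers in the background via -daemon.
# Usage: start_brokers /dao/kafka
start_brokers() (
  base="$1"
  for n in 1 2 3; do
    # -daemon must come before the properties file argument
    "$base/kafka_$n/bin/kafka-server-start.sh" -daemon \
      "$base/kafka_$n/config/server.properties" || exit 1
  done
)
```

Start ZooKeeper first, then run `start_brokers /dao/kafka`.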

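A successful startup is shown below. Startup errors are almost always caused by a wrong IP in listeners or advertised.listeners; it took me ages of trial and error to get this right...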
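To check that all three brokers actually joined the cluster, you can create a topic replicated across all of them and then describe it. A sketch; `kafka_smoke_test` and the topic name `smoke-test` are hypothetical. With Kafka 2.0, kafka-topics.sh still talks to ZooKeeper through `--zookeeper` (support for `--bootstrap-server` in this tool came in a later release). Creation with `--replication-factor 3` fails if fewer than three brokers are registered.

```shell
# Hypothetical helper: create a 3-way replicated topic and describe it.
# Arg: one Kafka installation directory, e.g. /dao/kafka/kafka_1
kafka_smoke_test() (
  kafka_home="$1"
  zk="localhost:2191,localhost:2192,localhost:2193"
  "$kafka_home/bin/kafka-topics.sh" --create --zookeeper "$zk" \
    --replication-factor 3 --partitions 3 --topic smoke-test || exit 1
  "$kafka_home/bin/kafka-topics.sh" --describe --zookeeper "$zk" --topic smoke-test
)
```

Run it as `kafka_smoke_test /dao/kafka/kafka_1`. In the describe output each partition lists its Leader, Replicas and Isr; if every Isr contains broker ids 0, 1 and 2, all three brokers are up and in sync.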
