Configuring OpenCV 2.4.9 + dlib 19.7 + VS2017 on Windows 10

1. OpenCV 2.4.9 configuration

1.1 Download OpenCV 2.4.9

Download link: https://sourceforge.net/projects/opencvlibrary/files/opencv-win/2.4.9/opencv-2.4.9.exe/download

Other versions are available at https://opencv.org/releases.html

1.2opencv2.4.9 解压

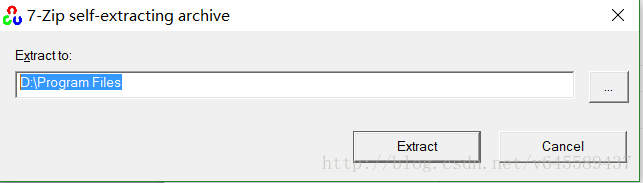

我选择的解压目录为 D:\Program Files\

解压的过程

解压过程 在这里引用的图片

- 解压完成后在D:\Program Files\opencv看到文件

- -

1.3 Add the environment variables

My machine is 64-bit, so I use x64 below; on a 32-bit machine replace it with x86.

The vcNN folder names in the OpenCV package correspond to Visual Studio versions as follows:

VC11.0 VS2012 Microsoft Visual Studio 2012

VC12.0 VS2013 Microsoft Visual Studio 2013

VC14.0 VS2015 Microsoft Visual Studio 2015

VC15.0 VS2017 Microsoft Visual Studio 2017

OpenCV 2.4.9 has no vc15 folder, so I chose the highest one it ships, vc12.

Alternatively, install a newer OpenCV release and pick the folder that matches your compiler.
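The folder choice described above ("take the newest vcNN folder that is not newer than the installed compiler") can be sketched in a few lines of C++. This is only an illustration: `pick_vc_folder` is a hypothetical helper, not part of OpenCV, and the list of shipped folders is something you would read off the extracted package yourself.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical helper (not part of OpenCV): given the VC version of the
// installed compiler and the vcNN folders shipped in the package, return
// the name of the newest folder that is not newer than the compiler.
// Returns an empty string when no shipped folder qualifies.
std::string pick_vc_folder(int installed_vc, const std::vector<int>& shipped)
{
    int best = -1;
    for (int v : shipped)
        if (v <= installed_vc)
            best = std::max(best, v);
    return best < 0 ? std::string() : "vc" + std::to_string(best);
}
```

For example, calling it with 15 (VS2017) and the folders 10, 11 and 12 selects vc12, which is the choice made in this tutorial.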

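- Add D:\Program Files\opencv\build\x64\vc12\bin to Path.

- Under the user variables, create a variable named opencv with the value D:\Program Files\opencv\build

- Note: restart the system after changing the environment variables so that they take effect. Programs that were already running, such as Visual Studio or an open command prompt, keep the old values until they are restarted.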
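To confirm that the bin directory really ended up in Path, a small check like the following can help. It is a sketch under assumptions: `path_contains` is a hypothetical helper, it compares entries case-insensitively and ignores trailing backslashes, and it does not expand variables such as %opencv% inside Path.

```cpp
#include <algorithm>
#include <cctype>
#include <sstream>
#include <string>

// Lower-case a copy of s; Windows paths compare case-insensitively.
static std::string to_lower(std::string s)
{
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Hypothetical helper: true if `dir` is one of the ';'-separated entries
// of `path_value`. Trailing backslashes are ignored on both sides.
bool path_contains(const std::string& path_value, const std::string& dir)
{
    auto normalize = [](std::string s) {
        while (!s.empty() && s.back() == '\\') s.pop_back();
        return to_lower(s);
    };
    const std::string wanted = normalize(dir);
    std::istringstream entries(path_value);
    std::string entry;
    while (std::getline(entries, entry, ';'))
        if (normalize(entry) == wanted)
            return true;
    return false;
}
```

In a freshly started program you could pass it the value returned by std::getenv("PATH") (after checking that it is not null) together with the bin directory above.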

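1.4 Configure Visual Studio

I use Visual Studio 2017 Community.

First create a new empty project.

- Open the Property Manager pane (bottom right).

- Expand Release, right-click it and choose Properties.

- Select VC++ Directories first.

- Find Executable Directories on the right, click at its right edge and choose Edit.

- Add a new entry.

Then edit Include Directories (adjust the paths to your own install location):

- D:\Program Files\opencv\build\include

- D:\Program Files\opencv\build\include\opencv2

- D:\Program Files\opencv\build\include\opencv

- Next edit Library Directories. With the layout used in this tutorial that is the lib folder next to the bin folder added to Path, i.e. D:\Program Files\opencv\build\x64\vc12\lib

Note that x86 means 32-bit and x64 means 64-bit (not a rigorous explanation, but it avoids confusion). My configuration here is Release, 64-bit.

Linker configuration. Note that Debug and Release need different settings.

- Go to Linker -> Input -> Additional Dependencies and choose Edit.

- If you build in Release mode, add: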

opencv_objdetect249.lib

opencv_ts249.lib

opencv_video249.lib

opencv_nonfree249.lib

opencv_ocl249.lib

opencv_photo249.lib

opencv_stitching249.lib

opencv_superres249.lib

opencv_videostab249.lib

opencv_calib3d249.lib

opencv_contrib249.lib

opencv_core249.lib

opencv_features2d249.lib

opencv_flann249.lib

opencv_gpu249.lib

opencv_highgui249.lib

opencv_imgproc249.lib

opencv_legacy249.lib

opencv_ml249.lib

If you build in Debug mode, add these instead:

opencv_ml249d.lib

opencv_calib3d249d.lib

opencv_contrib249d.lib

opencv_core249d.lib

opencv_features2d249d.lib

opencv_flann249d.lib

opencv_gpu249d.lib

opencv_highgui249d.lib

opencv_imgproc249d.lib

opencv_legacy249d.lib

opencv_objdetect249d.lib

opencv_ts249d.lib

opencv_video249d.lib

opencv_nonfree249d.lib

opencv_ocl249d.lib

opencv_photo249d.lib

opencv_stitching249d.lib

opencv_superres249d.lib

opencv_videostab249d.lib

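The only difference is that the DLL and LIB files used by a Debug build carry a d suffix, while the Release ones do not.

Do not mix up the Release and Debug settings. For background on how the two differ, see "Debug vs. Release builds explained in detail": http://blog.csdn.net/ithzhang/article/details/7575483

I build in Release, so I only configured that mode.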
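The naming rule for the two lists can be written down as a small function, which makes it harder to mix a Release name into a Debug configuration by hand. This is a sketch only: `opencv_lib_names` is a hypothetical helper, and the version digits ("249" for 2.4.9) are passed in rather than detected.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: build the import-library names for the given OpenCV
// modules. `version` is the digit string used in the file names ("249" for
// 2.4.9). Debug libraries carry an extra 'd' right before ".lib".
std::vector<std::string> opencv_lib_names(const std::vector<std::string>& modules,
                                          const std::string& version,
                                          bool debug)
{
    std::vector<std::string> names;
    names.reserve(modules.size());
    for (const std::string& m : modules)
        names.push_back("opencv_" + m + version + (debug ? "d" : "") + ".lib");
    return names;
}
```

Called with the modules core and highgui, the version "249" and debug set to true, it yields opencv_core249d.lib and opencv_highgui249d.lib, matching the Debug list above.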

1.5 Test the installation

#include <stdio.h>
#include <tchar.h>
#include <opencv2/opencv.hpp>

using namespace std;
using namespace cv;

int _tmain(int argc, _TCHAR* argv[]) {
    const char* imagename = "lena.jpg";

    // Read the image from file.
    Mat img = imread(imagename);

    // Bail out if the image could not be loaded.
    if (img.empty())
    {
        fprintf(stderr, "Can not load image %s\n", imagename);
        return -1;
    }

    // Show the image in a window titled "image". imshow creates the
    // window if it does not exist yet, so namedWindow is not needed here.
    imshow("image", img);

    // Wait until any key is pressed.
    waitKey();

    return 0;
}

The image used here is lena.jpg.

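Do not forget to set the configuration to Release and the platform to x64 before building the solution and running it.

On success the program displays the image.

Note: the image file must be where the program can find it. Add it to the project by right-clicking Resource Files and choosing Add. The relative name lena.jpg is resolved against the working directory, which is the project directory when the program is started from Visual Studio.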
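If the test program reports that it cannot load the image, it can help to check whether the file is reachable at all before suspecting OpenCV. The following is a minimal sketch using only the standard library; `file_is_readable` is a hypothetical helper name.

```cpp
#include <fstream>
#include <string>

// Hypothetical helper: true if `path` can be opened for reading. A relative
// path is resolved against the current working directory, the same way the
// relative name passed to imread is.
bool file_is_readable(const std::string& path)
{
    std::ifstream in(path.c_str(), std::ios::binary);
    return in.good();
}
```

Calling file_is_readable("lena.jpg") at the top of the test program tells you whether a failed imread is a path problem or something else, such as an unsupported or damaged file.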

If you get "opencv_core249d.dll is missing from your computer", the DLL/LIB settings for Release and Debug are most likely mixed up.

For details see:

http://blog.csdn.net/eddy_zheng/article/details/49976005

2. dlib configuration

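2.1 Download dlib

Download it from the official site: http://dlib.net/

2.2 Extract the archive

I extracted it to D:\Program Files

2.3 Install CMake

2.4 Build dlib

- If you are interested, you can read the official build instructions on the dlib site instead.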

CMake is required, and it must be on the Path environment variable. Open a command prompt, change into the dlib folder and run:

- mkdir build

- cd build

- cmake -G "Visual Studio 15 2017 Win64" ..

- cmake --build . --config Release

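Four things to note:

- (1) Use Visual Studio 2015 or later; earlier versions reportedly do not support C++11 well enough.

(2) Always build in Release mode. A Debug build is extremely slow; I tried it. Reference: "Why is dlib slow?"

(3) Version mapping: VC12 is Visual Studio 2013, VC14 is Visual Studio 2015, and VC15 is Visual Studio 2017.

If your Visual Studio version differs from mine, change the generator name in cmake -G "Visual Studio 15 2017 Win64" .. to the one for your version. When the build finishes, dlib.lib is generated in *\dlib-19.7\build\dlib\Release.

(4) In cmake --build . --config Release, both build and config are preceded by two hyphens (--build, --config). Some Markdown editors render two hyphens as a single dash, so retype them by hand if a copied command fails.

Alternatively, this step can be done with cmake-gui; see http://blog.csdn.net/nkwavelet2009/article/details/69524964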

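2.5 Configure Visual Studio

- Create a new project.

- In Property Manager, expand the project, right-click Release | x64 and choose Properties.

(1) Open the project properties and add to Include Directories:

D:\Program Files\dlib-19.7

(2) Under Linker -> Input -> Additional Dependencies, add the path of dlib.lib.

Enter the path that matches your own directory; on my machine dlib.lib is at:

D:\Program Files\dlib-19.7\build\dlib\Release\dlib.lib

(3) Under C/C++ -> Preprocessor -> Preprocessor Definitions, add:

DLIB_JPEG_SUPPORT

DLIB_PNG_SUPPORT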
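One way to confirm that the two preprocessor definitions actually reached the compiler for the active configuration is to test them with #ifdef. This is a sketch; the macro names are the ones listed above, while `dlib_image_support_summary` is a hypothetical helper and not part of dlib.

```cpp
#include <string>

// Hypothetical helper (not part of dlib): report which of the two macros
// from the Preprocessor Definitions setting are visible in this
// translation unit.
std::string dlib_image_support_summary()
{
    std::string s;
#ifdef DLIB_JPEG_SUPPORT
    s += "JPEG:on ";
#else
    s += "JPEG:off ";
#endif
#ifdef DLIB_PNG_SUPPORT
    s += "PNG:on";
#else
    s += "PNG:off";
#endif
    return s;
}
```

Printing the returned string once at program start shows at a glance whether the definitions were added to the configuration you are actually building (for example Release | x64 rather than Debug).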

2.6 Test

Copy the following code into the project; it is one of the programs from dlib's examples folder.

#include <dlib/svm_threaded.h>

#include <dlib/gui_widgets.h>

#include <dlib/array.h>

#include <dlib/array2d.h>

#include <dlib/image_keypoint.h>

#include <dlib/image_processing.h>

#include <iostream>

#include <fstream>

using namespace std;

using namespace dlib;

// ----------------------------------------------------------------------------------------

template <

typename image_array_type

>

void make_simple_test_data(

image_array_type& images,

std::vector<std::vector<rectangle> >& object_locations

)

/*!

ensures

- #images.size() == 3

- #object_locations.size() == 3

- Creates some simple images to test the object detection routines. In particular,

this function creates images with white 70x70 squares in them. It also stores

the locations of these squares in object_locations.

- for all valid i:

- object_locations[i] == A list of all the white rectangles present in images[i].

!*/

{

images.clear();

object_locations.clear();

images.resize(3);

images[0].set_size(400, 400);

images[1].set_size(400, 400);

images[2].set_size(400, 400);

// set all the pixel values to black

assign_all_pixels(images[0], 0);

assign_all_pixels(images[1], 0);

assign_all_pixels(images[2], 0);

// Now make some squares and draw them onto our black images. All the

// squares will be 70 pixels wide and tall.

std::vector<rectangle> temp;

temp.push_back(centered_rect(point(100, 100), 70, 70));

fill_rect(images[0], temp.back(), 255); // Paint the square white

temp.push_back(centered_rect(point(200, 300), 70, 70));

fill_rect(images[0], temp.back(), 255); // Paint the square white

object_locations.push_back(temp);

temp.clear();

temp.push_back(centered_rect(point(140, 200), 70, 70));

fill_rect(images[1], temp.back(), 255); // Paint the square white

temp.push_back(centered_rect(point(303, 200), 70, 70));

fill_rect(images[1], temp.back(), 255); // Paint the square white

object_locations.push_back(temp);

temp.clear();

temp.push_back(centered_rect(point(123, 121), 70, 70));

fill_rect(images[2], temp.back(), 255); // Paint the square white

object_locations.push_back(temp);

}

// ----------------------------------------------------------------------------------------

class very_simple_feature_extractor : noncopyable

{

/*!

WHAT THIS OBJECT REPRESENTS

This object is a feature extractor which goes to every pixel in an image and

produces a 32 dimensional feature vector. This vector is an indicator vector

which records the pattern of pixel values in a 4-connected region. So it should

be able to distinguish basic things like whether or not a location falls on the

corner of a white box, on an edge, in the middle, etc.

Note that this object also implements the interface defined in dlib/image_keypoint/hashed_feature_image_abstract.h.

This means all the member functions in this object are supposed to behave as

described in the hashed_feature_image specification. So when you define your own

feature extractor objects you should probably refer yourself to that documentation

in addition to reading this example program.

!*/

public:

template <

typename image_type

>

inline void load(

const image_type& img

)

{

feat_image.set_size(img.nr(), img.nc());

assign_all_pixels(feat_image, 0);

for (long r = 1; r + 1 < img.nr(); ++r)

{

for (long c = 1; c + 1 < img.nc(); ++c)

{

unsigned char f = 0;

if (img[r][c]) f |= 0x1;

if (img[r][c + 1]) f |= 0x2;

if (img[r][c - 1]) f |= 0x4;

if (img[r + 1][c]) f |= 0x8;

if (img[r - 1][c]) f |= 0x10;

// Store the code value for the pattern of pixel values in the 4-connected

// neighborhood around this row and column.

feat_image[r][c] = f;

}

}

}

inline unsigned long size() const { return feat_image.size(); }

inline long nr() const { return feat_image.nr(); }

inline long nc() const { return feat_image.nc(); }

inline long get_num_dimensions(

) const

{

// Return the dimensionality of the vectors produced by operator()

return 32;

}

typedef std::vector<std::pair<unsigned int, double> > descriptor_type;

inline const descriptor_type& operator() (

long row,

long col

) const

/*!

requires

- 0 <= row < nr()

- 0 <= col < nc()

ensures

- returns a sparse vector which describes the image at the given row and column.

In particular, this is a vector that is 0 everywhere except for one element.

!*/

{

feat.clear();

const unsigned long only_nonzero_element_index = feat_image[row][col];

feat.push_back(make_pair(only_nonzero_element_index, 1.0));

return feat;

}

// This block of functions is meant to provide a way to map between the row/col space taken by

// this object's operator() function and the images supplied to load(). In this example it's trivial.

// However, in general, you might create feature extractors which don't perform extraction at every

// possible image location (e.g. the hog_image) and thus result in some more complex mapping.

inline const rectangle get_block_rect(long row, long col) const { return centered_rect(col, row, 3, 3); }

inline const point image_to_feat_space(const point& p) const { return p; }

inline const rectangle image_to_feat_space(const rectangle& rect) const { return rect; }

inline const point feat_to_image_space(const point& p) const { return p; }

inline const rectangle feat_to_image_space(const rectangle& rect) const { return rect; }

inline friend void serialize(const very_simple_feature_extractor& item, std::ostream& out) { serialize(item.feat_image, out); }

inline friend void deserialize(very_simple_feature_extractor& item, std::istream& in) { deserialize(item.feat_image, in); }

void copy_configuration(const very_simple_feature_extractor& item) {}

private:

array2d<unsigned char> feat_image;

// This variable doesn't logically contribute to the state of this object. It is here

// only to avoid returning a descriptor_type object by value inside the operator() method.

mutable descriptor_type feat;

};

// ----------------------------------------------------------------------------------------

int main()

{

try

{

// Get some data

dlib::array<array2d<unsigned char> > images;

std::vector<std::vector<rectangle> > object_locations;

make_simple_test_data(images, object_locations);

typedef scan_image_pyramid<pyramid_down<5>, very_simple_feature_extractor> image_scanner_type;

image_scanner_type scanner;

// Instead of using setup_grid_detection_templates() like in object_detector_ex.cpp, let's manually

// setup the sliding window box. We use a window with the same shape as the white boxes we

// are trying to detect.

const rectangle object_box = compute_box_dimensions(1, // width/height ratio

70 * 70 // box area

);

scanner.add_detection_template(object_box, create_grid_detection_template(object_box, 2, 2));

// Since our sliding window is already the right size to detect our objects we don't need

// to use an image pyramid. So setting this to 1 turns off the image pyramid.

scanner.set_max_pyramid_levels(1);

// While the very_simple_feature_extractor doesn't have any parameters, when you go solve

// real problems you might define a feature extractor which has some non-trivial parameters

// that need to be setup before it can be used. So you need to be able to pass these parameters

// to the scanner object somehow. You can do this using the copy_configuration() function as

// shown below.

very_simple_feature_extractor fe;

/*

setup the parameters in the fe object.

...

*/

// The scanner will use very_simple_feature_extractor::copy_configuration() to copy the state

// of fe into its internal feature extractor.

scanner.copy_configuration(fe);

// Now that we have defined the kind of sliding window classifier system we want and stored

// the details into the scanner object we are ready to use the structural_object_detection_trainer

// to learn the weight vector and threshold needed to produce a complete object detector.

structural_object_detection_trainer<image_scanner_type> trainer(scanner);

trainer.set_num_threads(4); // Set this to the number of processing cores on your machine.

// The trainer will try and find the detector which minimizes the number of detection mistakes.

// This function controls how it decides if a detection output is a mistake or not. The bigger

// the input to this function the more strict it is in deciding if the detector is correctly

// hitting the targets. Try reducing the value to 0.001 and observing the results. You should

// see that the detections aren't exactly on top of the white squares anymore. See the documentation

// for the structural_object_detection_trainer and structural_svm_object_detection_problem objects

// for a more detailed discussion of this parameter.

trainer.set_match_eps(0.95);

object_detector<image_scanner_type> detector = trainer.train(images, object_locations);

// We can easily test the new detector against our training data. This print

// statement will indicate that it has perfect precision and recall on this simple

// task. It will also print the average precision (AP).

cout << "Test detector (precision,recall,AP): " << test_object_detection_function(detector, images, object_locations) << endl;

// The cross validation should also indicate perfect precision and recall.

cout << "3-fold cross validation (precision,recall,AP): "

<< cross_validate_object_detection_trainer(trainer, images, object_locations, 3) << endl;

/*

It is also worth pointing out that you don't have to use dlib::array2d objects to

represent your images. In fact, you can use any object, even something like a struct

of many images and other things as the "image". The only requirements on an image

are that it should be possible to pass it to scanner.load(). So if you can say

scanner.load(images[0]), for example, then you are good to go. See the documentation

for scan_image_pyramid::load() for more details.

*/

// Let's display the output of the detector along with our training images.

image_window win;

for (unsigned long i = 0; i < images.size(); ++i)

{

// Run the detector on images[i]

const std::vector<rectangle> rects = detector(images[i]);

cout << "Number of detections: " << rects.size() << endl;

// Put the image and detections into the window.

win.clear_overlay();

win.set_image(images[i]);

win.add_overlay(rects, rgb_pixel(255, 0, 0));

cout << "Hit enter to see the next image.";

cin.get();

}

}

catch (exception& e)

{

cout << "\nexception thrown!" << endl;

cout << e.what() << endl;

}

}

Select Release and x64, build the solution, then run it.

Result:

If the small squares appear, the configuration works.

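Possible problems

1. MSVCP120D.dll cannot be found

Possible causes:

- The linker libraries configured for Release and Debug are mixed up; see section 1.4. MSVCP120D.dll is the Debug C++ runtime of Visual Studio 2013, which the vc12 Debug libraries (the ones with the d suffix) depend on.

- The program should run in Release mode but Debug is selected; switch to the correct mode.

- Resource files (images, .dat files and so on) were not added to the project.

2. "opencv_core249d.dll is missing from your computer": the DLL/LIB settings for Release or Debug are most likely wrong.

- For details see "Permanently configuring OpenCV 2.4.9 in VS2013, with screenshots, and fixing the missing opencv_core249d.dll error":

- http://blog.csdn.net/eddy_zheng/article/details/49976005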

References:

- http://www.cnblogs.com/cuteshongshong/p/4057193.html VS2013 + OpenCV 2.4.9(10) configuration

- http://blog.csdn.net/ithzhang/article/details/7575483 Debug vs. Release builds explained in detail

- http://blog.csdn.net/eddy_zheng/article/details/49976005 Permanently configuring OpenCV 2.4.9 in VS2013, with screenshots, and fixing the missing opencv_core249d.dll error

- http://blog.csdn.net/xingchenbingbuyu/article/details/53236541 Building and configuring dlib with Visual Studio 2015

This tutorial would not have been possible without the help and support of zhengkai; many thanks.

All rights reserved © 2017
