Chapter 7

Other Neural Network Types

• Understanding the Elman Neural Network

• Understanding the Jordan Neural Network

• The ART1 Neural Network

• Evolving with NEAT

So far, this book has focused primarily on feedforward neural networks. However, not every connection in a neural network needs to point forward; it is also possible to create recurrent connections. This chapter introduces neural networks that are allowed to form recurrent connections.

We will also look at the ART1 neural network, although it is not a recurrent neural network. This network type is interesting because, unlike most other neural networks, it does not have a distinct learning phase. The ART1 neural network learns as it recognizes patterns. In this way it is always learning, much like the human brain.

This chapter begins with the Elman and Jordan neural networks, which are often called simple recurrent networks (SRNs).

7.1 The Elman Neural Network

Elman and Jordan neural networks are recurrent neural networks that have additional layers, yet they function very similarly to the feedforward networks of previous chapters. They also use training techniques similar to those used for feedforward neural networks.

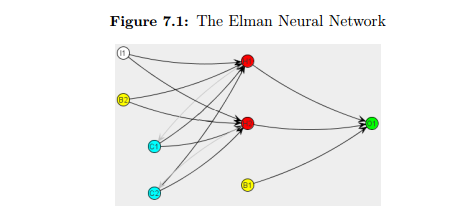

Figure 7.1 shows an Elman neural network.

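As the figure shows, the Elman neural network uses context neurons, labeled C1 and C2. The context neurons allow feedback. Feedback occurs when the output from a previous iteration is used as input for successive iterations. Notice that the context neurons are fed from the hidden neurons' output. These connections carry no weights; they are simply a conduit from the hidden neurons to the context neurons. The context neurons remember this output and then feed it back to the hidden neurons on the next iteration.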

Therefore, the context layer always feeds the hidden layer the hidden layer's own output from the previous iteration. The connection from the context layer to the hidden layer is weighted, and this synapse learns as the network is trained. Context layers allow a neural network to recognize context.
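The feedback loop described above can be sketched in a few lines of Python. This is only an illustrative forward pass, not the book's implementation: the class name `ElmanSketch`, the `tanh` activation, the random weight range, and the layer sizes are all assumptions made for the example, and no training is performed.

```python
import math
import random


class ElmanSketch:
    """Minimal Elman-style forward pass (hypothetical, illustration only)."""

    def __init__(self, n_input, n_hidden, n_output, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            # Assumed initialization: uniform random weights in [-1, 1].
            return [[rng.uniform(-1.0, 1.0) for _ in range(cols)]
                    for _ in range(rows)]

        self.w_input_hidden = matrix(n_hidden, n_input)     # weighted, trainable
        self.w_context_hidden = matrix(n_hidden, n_hidden)  # weighted, trainable
        self.w_hidden_output = matrix(n_output, n_hidden)   # weighted, trainable
        self.context = [0.0] * n_hidden                     # C1, C2, ... start at zero

    def step(self, inputs):
        hidden = []
        for j in range(len(self.context)):
            total = sum(w * x for w, x in zip(self.w_input_hidden[j], inputs))
            # The context layer feeds the hidden layer through weighted connections.
            total += sum(w * c for w, c in zip(self.w_context_hidden[j], self.context))
            hidden.append(math.tanh(total))
        # Unweighted copy: the context neurons simply remember the hidden output.
        self.context = list(hidden)
        return [math.tanh(sum(w * h for w, h in zip(row, hidden)))
                for row in self.w_hidden_output]


net = ElmanSketch(n_input=1, n_hidden=2, n_output=1)
first = net.step([1.0])
second = net.step([1.0])  # same input, but the context now holds a memory
print(first, second)
```

Presenting the same input twice gives two different outputs, because the second call also sees the hidden activations remembered from the first call.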

To see how important context is to a neural network, consider how the previous networks were trained. The order of the training set elements did not really matter. The training set could be shuffled in any way and the network would still train in the same manner. With an Elman or a Jordan neural network, the order becomes very important, because the training set element presented previously still affects the neural network. This matters a great deal for predictive neural networks and makes Elman neural networks well suited to temporal problems.

Chapter 8 will delve further into temporal neural networks. Temporal networks attempt to see trends in data and predict future data values. Feedforward networks can also be used for prediction, but their input neurons are structured differently. This chapter focuses on how neurons are structured for simple recurrent neural networks.

Dr. Jeffrey Elman created the Elman neural network and used an XOR pattern to test it. However, he did not use a typical XOR pattern like those we have seen in previous chapters. He used an XOR pattern collapsed to just one input neuron. Consider the following XOR truth table.

1.0 XOR 0.0 = 1.0

0.0 XOR 0.0 = 0.0

0.0 XOR 1.0 = 1.0

1.0 XOR 1.0 = 0.0

Now collapse this table into a single sequence of numbers by reading the values left to right, line by line. This produces the following:

1.0 , 0.0 , 1.0 , 0.0 , 0.0 , 0.0 , 0.0 , 1.0 , 1.0 , 1.0 , 1.0 , 0.0
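As a small sketch of the collapsing step, the truth table can be flattened row by row. The variable names `truth_table` and `sequence` are chosen here for illustration only.

```python
# Each row is (a, b, a XOR b), in the same order as the truth table above.
truth_table = [
    (1.0, 0.0, 1.0),
    (0.0, 0.0, 0.0),
    (0.0, 1.0, 1.0),
    (1.0, 1.0, 0.0),
]

# Read left to right, line by line, into one flat sequence.
sequence = [value for row in truth_table for value in row]
print(sequence)
```

The resulting list holds the same twelve values as the sequence shown above.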

We will create a neural network that accepts one number from the above list and tries to predict the next number. The same data will be used with a Jordan neural network later in this chapter. The first few training pairs for this neural network are as follows:

Input Neurons : 1.0 ==> Output Neurons : 0.0

Input Neurons : 0.0 ==> Output Neurons : 1.0

Input Neurons : 1.0 ==> Output Neurons : 0.0

Input Neurons : 0.0 ==> Output Neurons : 0.0

Input Neurons : 0.0 ==> Output Neurons : 0.0

Input Neurons : 0.0 ==> Output Neurons : 0.0
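The pairs listed above can be generated by pairing each element of the collapsed sequence with its successor. The following is a minimal sketch; the names `sequence` and `pairs` are illustrative.

```python
sequence = [1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0]

# Pair every value with the value that follows it: (input, ideal output).
pairs = list(zip(sequence[:-1], sequence[1:]))

# Print the first six pairs in the same layout used in the text.
for current, following in pairs[:6]:
    print(f"Input Neurons : {current} ==> Output Neurons : {following}")
```

A sequence of twelve values yields eleven such pairs, since the last value has no successor to predict.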

It would be impossible to train a typical feedforward neural network on this data, because the training information is contradictory. Sometimes an input of 0 should produce a 1; at other times it should produce a 0. An input of 1 has the same problem.
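The contradiction can be checked directly by collecting every target that follows each input value in the collapsed sequence. This is a short sketch with illustrative names (`targets_by_input`, `contradictory`).

```python
from collections import defaultdict

sequence = [1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0]

# Collect the set of "next values" observed after each input value.
targets_by_input = defaultdict(set)
for current, following in zip(sequence, sequence[1:]):
    targets_by_input[current].add(following)

# An input is contradictory if it is followed by more than one distinct target.
contradictory = {x: sorted(t) for x, t in targets_by_input.items() if len(t) > 1}
print(contradictory)
```

Both 0.0 and 1.0 appear as contradictory inputs: each is followed by 0.0 in some places and by 1.0 in others, so a network without memory has no way to fit every pair.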

The neural network needs context; it should look at what came before. We will review an example that uses both an Elman network and a feedforward network to attempt to predict the output. An example of the Elman neural network can be found at the following location.

