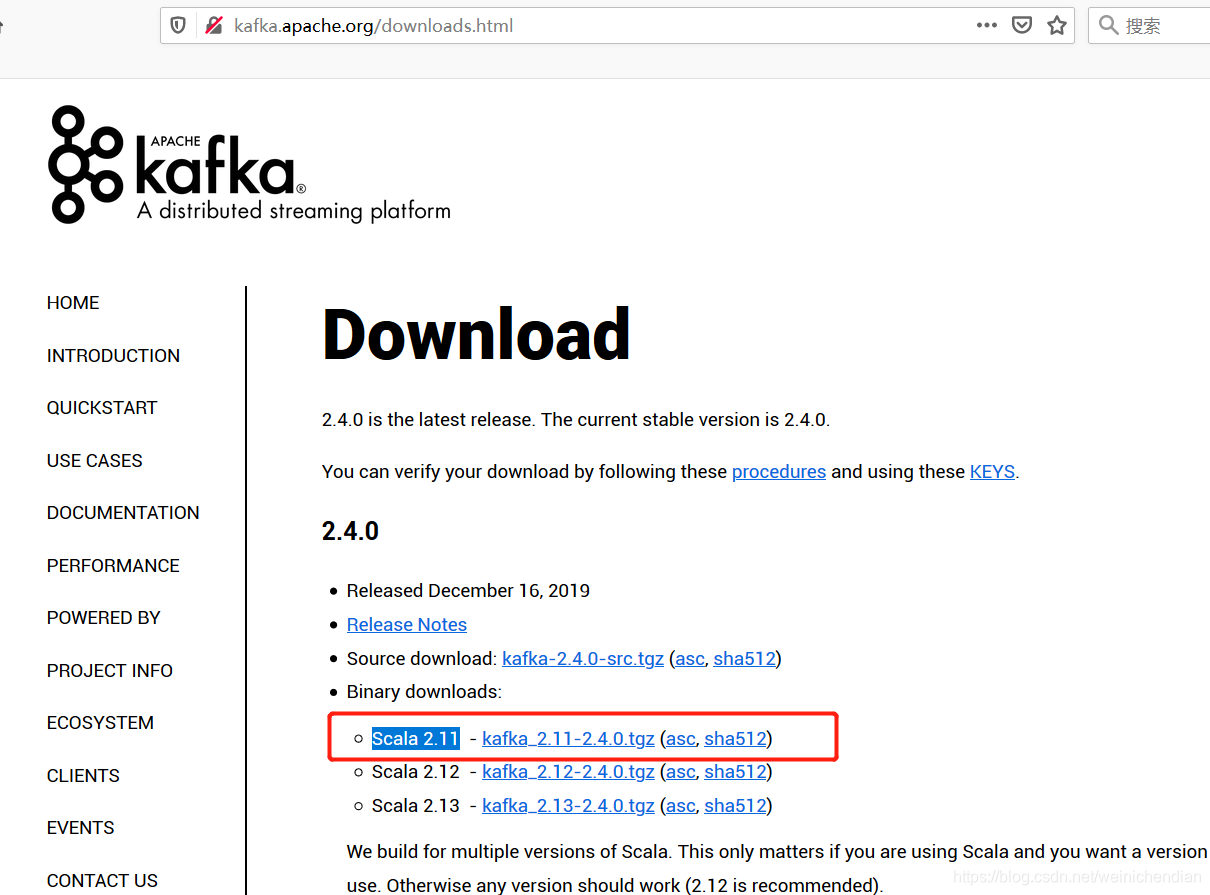

1. Download the server package.

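Download it from the Kafka download page (see the screenshot below). I chose the build for Scala 2.11 (kafka_2.11-1.1.0.tgz).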

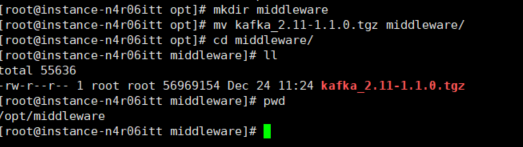

2. Upload the package to the server, for example via FTP.

3. Extract the archive.

[root@instance-n4r06itt middleware]# tar zxvf kafka_2.11-1.1.0.tgz

4. Create a logs directory (it is used as log.dirs in step 5).

[root@instance-n4r06itt kafka_2.11-1.1.0]# mkdir logs

[root@instance-n4r06itt kafka_2.11-1.1.0]# ll

total 56

drwxr-xr-x 3 root root 4096 Mar 24 2018 bin

drwxr-xr-x 2 root root 4096 Mar 24 2018 config

drwxr-xr-x 2 root root 4096 Dec 24 11:43 libs

-rw-r--r-- 1 root root 28824 Mar 24 2018 LICENSE

drwxr-xr-x 2 root root 4096 Dec 24 11:43 logs

-rw-r--r-- 1 root root 336 Mar 24 2018 NOTICE

drwxr-xr-x 2 root root 4096 Mar 24 2018 site-docs

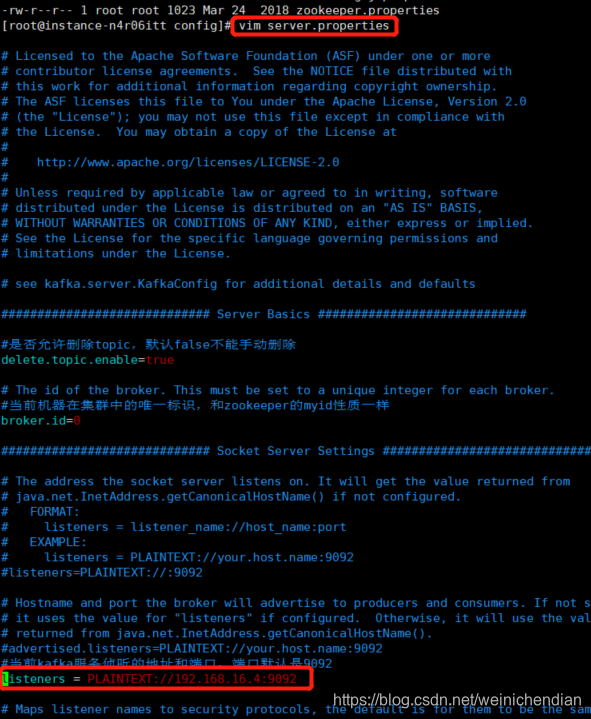

5. Edit the configuration file.

[root@instance-n4r06itt config]# pwd

/opt/middleware/kafka_2.11-1.1.0/config

[root@instance-n4r06itt config]# vim server.properties

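Since I only have one server and am not using virtual machines, I start with a single-node setup. The main settings are: whether topics can be deleted, the address Kafka listens on, the ZooKeeper address (a local instance is fine), and the log directory.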

# Allow topics to be deleted manually (the default is true since Kafka 1.0; older releases defaulted to false)

delete.topic.enable=true

# Address and port this Kafka broker listens on (the default port is 9092)

listeners = PLAINTEXT://106.12.175.83:9092

# A comma separated list of directories under which to store log files

log.dirs=/opt/middleware/kafka_2.11-1.1.0/logs

# root directory for all kafka znodes.

zookeeper.connect=localhost:2181
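To double-check the edited file, here is a minimal Python sketch that reads server.properties and reports which of the four settings above are missing. It assumes simple `key=value` lines (spaces around `=` allowed, as in the listeners line) and deliberately ignores other Java .properties syntax such as `:` separators, escapes and line continuations; `load_properties` and `missing_keys` are hypothetical helper names, not part of Kafka.

```python
# The four settings discussed in this step.
REQUIRED = ("delete.topic.enable", "listeners", "log.dirs", "zookeeper.connect")


def load_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blank lines and #/! comments.

    Simplified: ':' separators, escapes and line continuations are not handled.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that contain no '='
            props[key.strip()] = value.strip()
    return props


def missing_keys(props: dict) -> list:
    """Return the required settings that are absent from props."""
    return [k for k in REQUIRED if k not in props]
```

For example, `missing_keys(load_properties(open("config/server.properties").read()))` run from the Kafka directory should return an empty list once all four settings are present.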

6. Add environment variables.

Kafka is installed at /opt/middleware/kafka_2.11-1.1.0; KAFKA_HOME below points to this path.

[root@instance-n4r06itt config]# vim /etc/profile

#KAFKA_HOME

export KAFKA_HOME=/opt/middleware/kafka_2.11-1.1.0

export PATH=$PATH:$KAFKA_HOME/bin

Save the file, then load it into the current shell so the change takes effect immediately:

[root@instance-n4r06itt config]# source /etc/profile
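A process only sees these variables if it was started from a shell that has sourced /etc/profile (or from a new login shell). As a minimal sketch, assuming the hypothetical helper name `kafka_bin_on_path`, the following Python checks that $KAFKA_HOME/bin really ended up as an entry of $PATH:

```python
import os


def kafka_bin_on_path(env=None) -> bool:
    """Return True if $KAFKA_HOME/bin is one of the entries in $PATH."""
    env = os.environ if env is None else env
    home = env.get("KAFKA_HOME")
    if not home:
        return False  # KAFKA_HOME has not been exported (yet)
    bin_dir = os.path.normpath(os.path.join(home, "bin"))
    entries = env.get("PATH", "").split(os.pathsep)
    # Compare normalized paths so a trailing slash does not cause a miss.
    return any(os.path.normpath(p) == bin_dir for p in entries if p)
```

Calling `kafka_bin_on_path()` with no argument inspects the current process environment.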

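7. Start ZooKeeper.

Kafka needs ZooKeeper to be running first (start it with ./zkServer.sh start if it is not). zkServer.sh status confirms that a standalone ZooKeeper 3.4.8 is up: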

[root@instance-n4r06itt bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/middleware/zookeeper-3.4.8/bin/../conf/zoo.cfg

Mode: standalone

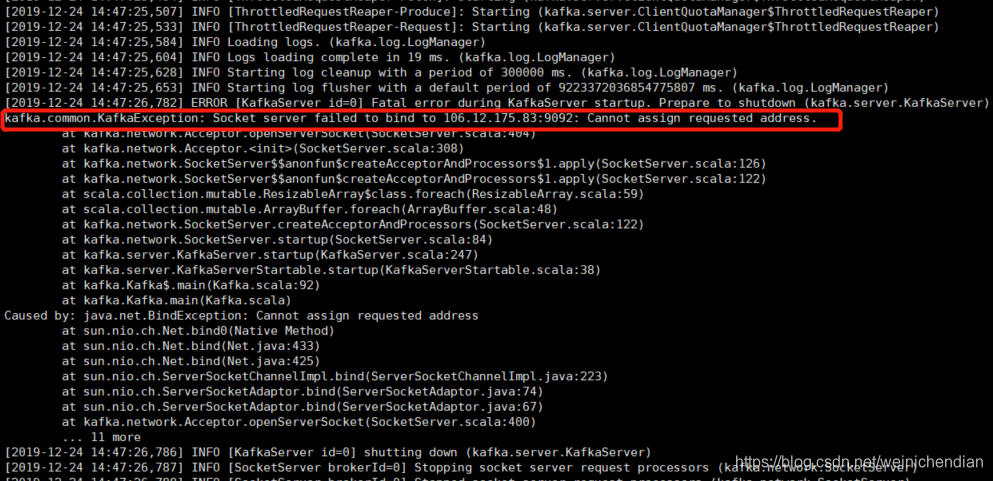

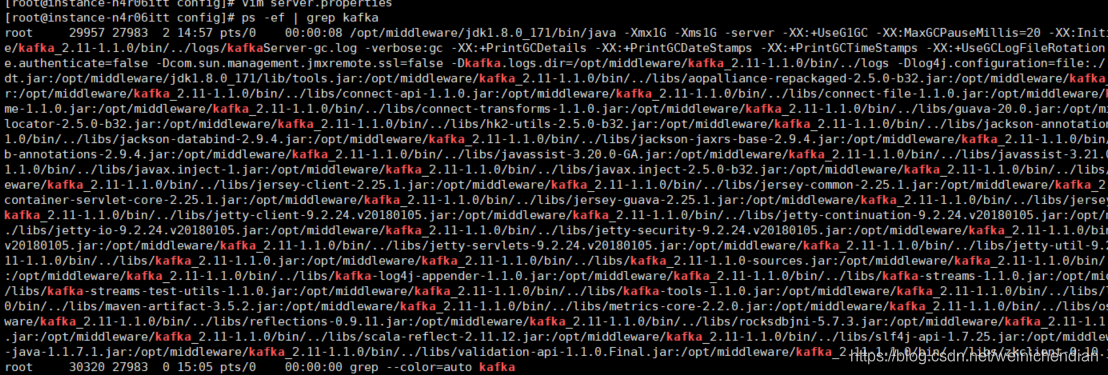
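Besides zkServer.sh status, ZooKeeper answers the four-letter command ruok with imok when it is healthy. A minimal Python sketch of that check (the helper name `zk_is_ok` is hypothetical); note that newer ZooKeeper releases only answer four-letter commands that are enabled via 4lw.commands.whitelist, so this is an assumption to verify for other versions:

```python
import socket


def zk_is_ok(host: str = "localhost", port: int = 2181, timeout: float = 3.0) -> bool:
    """Send ZooKeeper's 'ruok' four-letter command; a healthy server answers 'imok'."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as s:
            s.sendall(b"ruok")
            reply = b""
            # The server closes the connection after answering, so read until EOF.
            while True:
                chunk = s.recv(16)
                if not chunk:
                    break
                reply += chunk
    except OSError:  # connection refused, timeout, etc.
        return False
    return reply == b"imok"
```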

8. Start Kafka.

[root@instance-n4r06itt bin]# pwd

/opt/middleware/kafka_2.11-1.1.0/bin

[root@instance-n4r06itt bin]# ./kafka-server-start.sh ../config/server.properties &

It fails with an exception:

kafka.common.KafkaException: Socket server failed to bind to 106.12.175.83:9092: Cannot assign requested address.

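This is the server address I had configured, and at first I suspected the port was not open. The port was not the problem, though: 106.12.175.83 is the public (external) address of my Baidu Cloud server, and it is not assigned to any network interface on the machine itself, so the broker cannot bind to it. Instead, we need the local inet address of the Linux host: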


[root@instance-n4r06itt bin]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.16.4 netmask 255.255.240.0 broadcast 192.168.31.255

inet6 fe80::f816:3eff:fea7:c7de prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:a7:c7:de txqueuelen 1000 (Ethernet)

RX packets 3215936 bytes 626854111 (597.8 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2958679 bytes 627972940 (598.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 891689 bytes 554427225 (528.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 891689 bytes 554427225 (528.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
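The bind error can be reproduced without Kafka. In this minimal Python sketch (the helper name `can_bind` is hypothetical), a TCP socket is bound to a given address with port 0, so the OS picks a free port and only the address itself is tested. On Linux, binding to an address that no local interface owns fails with EADDRNOTAVAIL, the errno behind "Cannot assign requested address":

```python
import errno
import socket


def can_bind(host: str, port: int = 0) -> bool:
    """Return True if this machine can bind a TCP socket to host:port.

    Returns False only for EADDRNOTAVAIL (address not owned by any local
    interface); any other OSError is re-raised.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError as e:
            if e.errno == errno.EADDRNOTAVAIL:
                return False
            raise
    return True
```

On the server above, `can_bind("192.168.16.4")` would be expected to return True and `can_bind("106.12.175.83")` False, since only 192.168.16.4 and 127.0.0.1 appear as inet addresses in the ifconfig output.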

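Then edit the configuration file again and set listeners to the local inet address shown above:

[root@instance-n4r06itt config]# vim server.properties

listeners=PLAINTEXT://192.168.16.4:9092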

Start Kafka again:

[root@instance-n4r06itt bin]# ./kafka-server-start.sh ../config/server.properties &

[2019-12-24 14:57:49,519] INFO [ThrottledRequestReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2019-12-24 14:57:49,522] INFO [ThrottledRequestReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2019-12-24 14:57:49,545] INFO [ThrottledRequestReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2019-12-24 14:57:49,596] INFO Loading logs. (kafka.log.LogManager)

[2019-12-24 14:57:49,616] INFO Logs loading complete in 20 ms. (kafka.log.LogManager)

[2019-12-24 14:57:49,639] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2019-12-24 14:57:49,653] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2019-12-24 14:57:50,700] INFO Awaiting socket connections on 192.168.16.4:9092. (kafka.network.Acceptor)

[2019-12-24 14:57:50,826] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2019-12-24 14:57:50,867] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:50,872] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:50,884] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:50,920] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2019-12-24 14:57:51,028] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2019-12-24 14:57:51,042] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)

[2019-12-24 14:57:51,043] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(192.168.16.4,9092,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[2019-12-24 14:57:51,045] WARN No meta.properties file under dir /opt/middleware/kafka_2.11-1.1.0/logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[2019-12-24 14:57:51,263] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:51,276] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:51,308] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-12-24 14:57:51,320] INFO Creating /controller (is it secure? false) (kafka.zk.KafkaZkClient)

[2019-12-24 14:57:51,333] INFO Result of znode creation at /controller is: OK (kafka.zk.KafkaZkClient)

[2019-12-24 14:57:51,360] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2019-12-24 14:57:51,392] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2019-12-24 14:57:51,413] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 2 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2019-12-24 14:57:51,476] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:0,blockEndProducerId:999) by writing to Zk with path version 1 (kafka.coordinator.transaction.ProducerIdManager)

[2019-12-24 14:57:51,592] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-12-24 14:57:51,636] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-12-24 14:57:51,664] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2019-12-24 14:57:51,828] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2019-12-24 14:57:51,873] INFO Kafka version : 1.1.0 (org.apache.kafka.common.utils.AppInfoParser)

[2019-12-24 14:57:51,874] INFO Kafka commitId : fdcf75ea326b8e07 (org.apache.kafka.common.utils.AppInfoParser)

[2019-12-24 14:57:51,876] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

Kafka started successfully.
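If the startup output is saved to a file (for example by redirecting it), the two lines that matter most can be checked automatically: the address the acceptor listens on and the final "started" line. A minimal sketch; `summarize_startup` is a hypothetical helper, and its regular expressions only match the wording of the Kafka 1.1.0 lines shown above, which other versions may phrase differently:

```python
import re

# Patterns taken from the startup log lines shown above (Kafka 1.1.0).
LISTEN_RE = re.compile(r"Awaiting socket connections on (\S+):(\d+)\.")
STARTED_RE = re.compile(r"\[KafkaServer id=(\d+)\] started")


def summarize_startup(lines):
    """Return (host, port, broker_id) found in Kafka startup log lines.

    Any value that does not appear in the log is returned as None.
    """
    host = port = broker_id = None
    for line in lines:
        m = LISTEN_RE.search(line)
        if m:
            host, port = m.group(1), int(m.group(2))
        m = STARTED_RE.search(line)
        if m:
            broker_id = int(m.group(1))
    return host, port, broker_id
```

For the log above this yields ("192.168.16.4", 9092, 0).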

9. Basic usage.

9.1 Create a topic

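--zookeeper: the ZooKeeper address; for a ZooKeeper cluster, any one node will do.

--replication-factor: how many copies of each partition Kafka keeps, each on a different broker, so that data survives the loss of a broker. It cannot exceed the number of brokers, so it is 1 on this single-broker setup.

--partitions: the number of partitions. A partition is not a physical node; it is an ordered log stored as a directory on a broker (my-test-0 in the log below).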

[root@instance-n4r06itt kafka_2.11-1.1.0]# bin/kafka-topics.sh --create --zookeeper 127.0.0.1:2181 --replication-factor 1 --partitions 1 --topic my-test

Created topic "my-test".

[2019-12-24 15:15:44,092] INFO [ReplicaFetcherManager on broker 0] Removed fetcher for partitions my-test-0 (kafka.server.ReplicaFetcherManager)

[2019-12-24 15:15:44,182] INFO [Log partition=my-test-0, dir=/opt/middleware/kafka_2.11-1.1.0/logs] Loading producer state from offset 0 with message format version 2 (kafka.log.Log)

[2019-12-24 15:15:44,191] INFO [Log partition=my-test-0, dir=/opt/middleware/kafka_2.11-1.1.0/logs] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 44 ms (kafka.log.Log)

[2019-12-24 15:15:44,193] INFO Created log for partition my-test-0 in /opt/middleware/kafka_2.11-1.1.0/logs with properties {compression.type -> producer, message.format.version -> 1.1-IV0, file.delete.delay.ms -> 60000, max.message.bytes -> 1000012, min.compaction.lag.ms -> 0, message.timestamp.type -> CreateTime, min.insync.replicas -> 1, segment.jitter.ms -> 0, preallocate -> false, min.cleanable.dirty.ratio -> 0.5, index.interval.bytes -> 4096, unclean.leader.election.enable -> false, retention.bytes -> -1, delete.retention.ms -> 86400000, cleanup.policy -> [delete], flush.ms -> 9223372036854775807, segment.ms -> 604800000, segment.bytes -> 1073741824, retention.ms -> 604800000, message.timestamp.difference.max.ms -> 9223372036854775807, segment.index.bytes -> 10485760, flush.messages -> 9223372036854775807}. (kafka.log.LogManager)

[2019-12-24 15:15:44,194] INFO [Partition my-test-0 broker=0] No checkpointed highwatermark is found for partition my-test-0 (kafka.cluster.Partition)

[2019-12-24 15:15:44,196] INFO Replica loaded for partition my-test-0 with initial high watermark 0 (kafka.cluster.Replica)

[2019-12-24 15:15:44,221] INFO [Partition my-test-0 broker=0] my-test-0 starts at Leader Epoch 0 from offset 0. Previous Leader Epoch was: -1 (kafka.cluster.Partition)

[2019-12-24 15:15:44,250] INFO [ReplicaAlterLogDirsManager on broker 0] Added fetcher for partitions List() (kafka.server.ReplicaAlterLogDirsManager)
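The parameter rules above can be summarized in a small Python sketch (the helper name `plan_topic` is hypothetical): it rejects a replication factor larger than the number of brokers, which Kafka also refuses, and lists the partition directory names in the `<topic>-<partition>` form seen in the log (my-test-0):

```python
def plan_topic(topic: str, partitions: int, replication_factor: int, brokers: int) -> list:
    """Validate kafka-topics.sh --create style arguments and return partition dir names.

    Each partition is stored as a directory named <topic>-<partition> under log.dirs.
    """
    if partitions < 1:
        raise ValueError("partitions must be >= 1")
    if replication_factor < 1:
        raise ValueError("replication factor must be >= 1")
    if replication_factor > brokers:
        # Kafka rejects this too: each replica must live on a different broker.
        raise ValueError(
            f"replication factor {replication_factor} larger than available brokers {brokers}"
        )
    return [f"{topic}-{p}" for p in range(partitions)]
```

With the arguments used above, `plan_topic("my-test", 1, 1, 1)` returns ["my-test-0"], matching the directory created under /opt/middleware/kafka_2.11-1.1.0/logs.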

9.2 Describe topics

bin/kafka-topics.sh --describe --zookeeper 127.0.0.1:2181

[root@instance-n4r06itt kafka_2.11-1.1.0]# bin/kafka-topics.sh --describe --zookeeper 127.0.0.1:2181

Topic:my-test PartitionCount:1 ReplicationFactor:1 Configs:

Topic: my-test Partition: 0 Leader: 0 Replicas: 0 Isr: 0

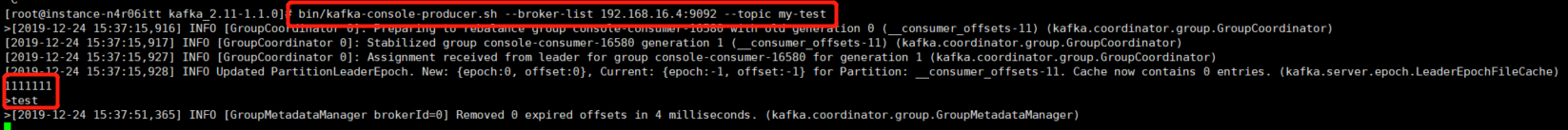
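The describe output is a list of Key:value fields. A partition is fully replicated when every broker in Replicas also appears in Isr (the in-sync replicas), as for my-test partition 0 above. A minimal Python sketch, assuming the field layout shown above (the helper names `parse_describe_line` and `in_sync` are hypothetical):

```python
import re

# "Key:value" or "Key: value" pairs as printed by kafka-topics.sh --describe.
PAIR_RE = re.compile(r"(\w+):\s*(\S*)")


def parse_describe_line(line: str) -> dict:
    """Turn one line of --describe output into a dict of field -> value."""
    return {key: value for key, value in PAIR_RE.findall(line)}


def in_sync(partition_line: str) -> bool:
    """True if every replica of the partition is in the ISR list."""
    fields = parse_describe_line(partition_line)
    replicas = set(fields["Replicas"].split(","))
    isr = set(fields["Isr"].split(","))
    return replicas == isr
```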

9.3 Start a console producer

bin/kafka-console-producer.sh --broker-list 192.168.16.4:9092 --topic my-test

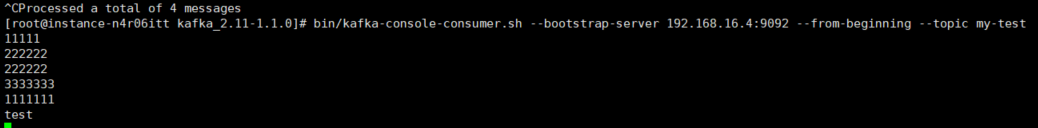

9.4 Start a console consumer

bin/kafka-console-consumer.sh --bootstrap-server 192.168.16.4:9092 --from-beginning --topic my-test

9.5 Problems encountered.

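The producer and consumer must connect to the IP and port configured in listeners in server.properties (192.168.16.4:9092 here, the address the broker registered in ZooKeeper at startup). With any other address, messages cannot be consumed normally.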
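One way to avoid this mismatch is to derive the client address from the listeners value instead of typing it by hand. A minimal Python sketch (the helper names `bootstrap_servers` and `console_commands` are hypothetical); it assumes each listener names an explicit host, as in PLAINTEXT://192.168.16.4:9092, and simply drops the protocol prefix:

```python
def bootstrap_servers(listeners: str) -> str:
    """Convert a listeners value such as 'PLAINTEXT://192.168.16.4:9092' to 'host:port'.

    Assumes each listener names an explicit host; comma-separated lists keep their order.
    """
    addrs = []
    for item in listeners.split(","):
        item = item.strip()
        if not item:
            continue
        _, sep, hostport = item.partition("://")
        addrs.append(hostport if sep else item)  # drop the protocol prefix
    return ",".join(addrs)


def console_commands(listeners: str, topic: str) -> tuple:
    """Build the console producer/consumer commands used in 9.3 and 9.4."""
    servers = bootstrap_servers(listeners)
    producer = f"bin/kafka-console-producer.sh --broker-list {servers} --topic {topic}"
    consumer = (
        f"bin/kafka-console-consumer.sh --bootstrap-server {servers} "
        f"--from-beginning --topic {topic}"
    )
    return producer, consumer
```

`console_commands("PLAINTEXT://192.168.16.4:9092", "my-test")` produces exactly the two commands used in 9.3 and 9.4.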

That completes the Kafka installation on Linux.
