实现无缝滑屏怎么实现

Originally published at LinkedIn Pulse.

最初发布于LinkedIn Pulse 。

Early last month, I presented a half-day tutorial on at this year’s virtual CVPR 2020. This is a very unique experience, and I would like to share some of the highlights of the tutorial.

上个月初,我在今年的虚拟CVPR 2020上提供了为期半天的教程 。这是一次非常独特的体验,我想分享该教程的一些重点。

The Problem: AI for Big Data

问题:大数据的人工智能

The tutorial focused on a critical problem that arises as AI moves from experimentation to production; that is, how to seamlessly scale AI to distributed Big Data. Today, AI researchers and data scientists need to go through a mountain of pains to apply AI models to production dataset that is stored in distributed Big Data cluster.

本教程重点讨论了AI从实验到生产的过程中出现的关键问题。 也就是说,如何无缝地将AI扩展到分布式大数据 。 如今,AI研究人员和数据科学家需要花很多精力将AI模型应用于存储在分布式大数据集群中的生产数据集。

Conventional approaches usually set up two separate clusters, one dedicated to Big Data processing, and the other dedicated to deep learning (e.g., a GPU cluster), with “connector” (or glue code) deployed in between. Unfortunately, this “connector approach” not only introduces a lot of overheads (e.g., data copy, extra cluster maintenance, fragmented workflow, etc.), but also suffers from impedance mismatches that arise from crossing boundaries between heterogeneous components (more on this in the next section).

常规方法通常设置两个单独的集群,一个集群专用于大数据处理,另一个集群专用于深度学习(例如GPU集群),并在两者之间部署“连接器”(或粘合代码)。 不幸的是,这种“连接器方法”不仅带来了很多开销(例如,数据复制,额外的集群维护,零散的工作流程等),而且还遭受了因异构组件之间的边界交叉而引起的阻抗不匹配的问题(更多内容请参见下一节)。

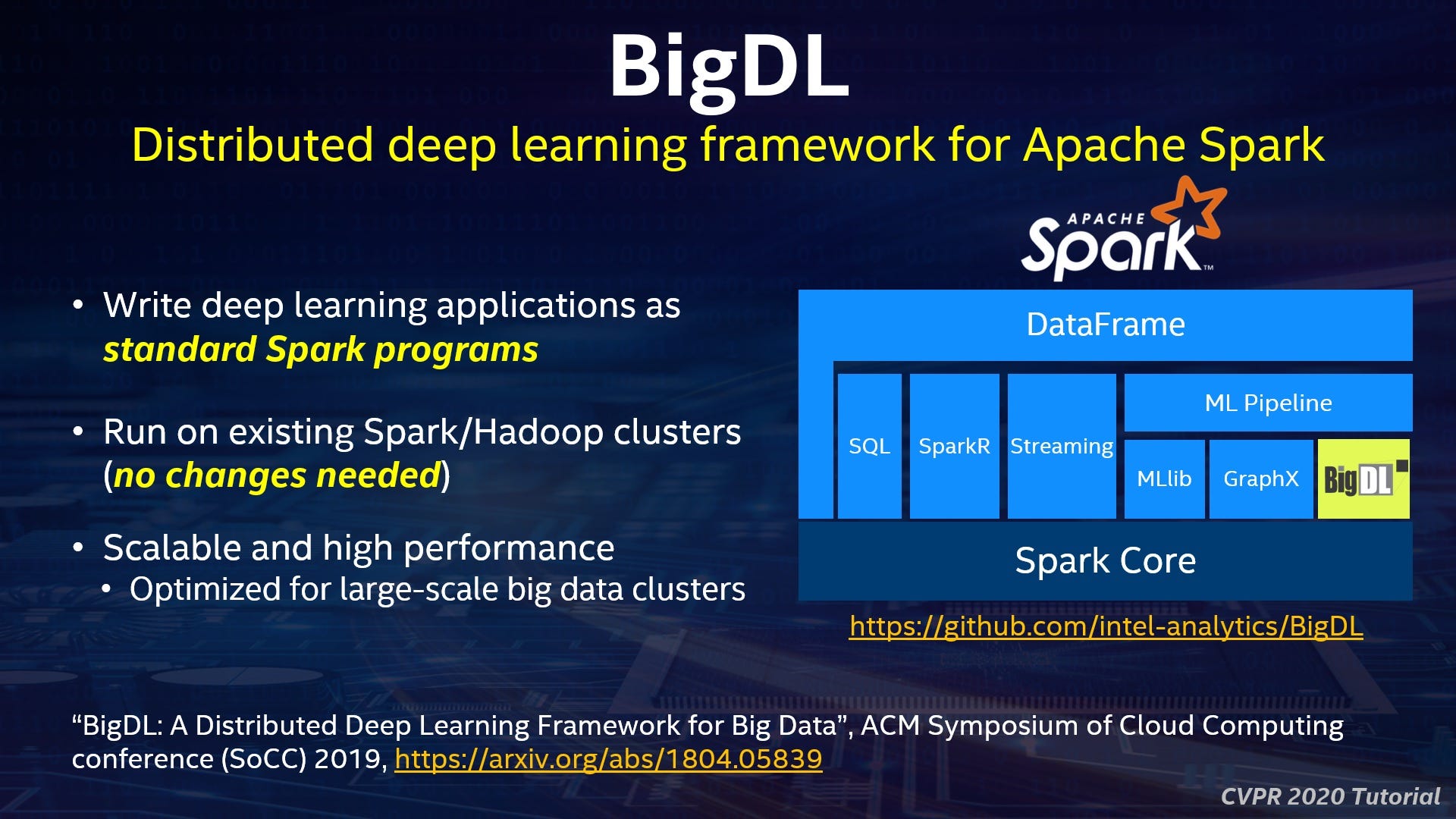

To address these challenges, we have developed open source technologies that directly support new AI algorithms on Big Data platforms. As shown in the slide below, this includes BigDL (distributed deep learning framework for Apache Spark) and Analytics Zoo (distributed Tensorflow, Keras and PyTorch on Apache Spark/Flink & Ray).

为了应对这些挑战,我们开发了开源技术,这些技术直接支持大数据平台上的新AI算法。 如下面的幻灯片所示,其中包括BigDL (用于Apache Spark的分布式深度学习框架)和Analytics Zoo (在Apache Spark / Flink和Ray上的分布式Tensorflow,Keras和PyTorch)。

激励示例:JD.com (A Motivating Example: JD.com)

Before diving into the technical details of BigDL and Analytics Zoo, I shared a motivating example in the tutorial. JD is one of the largest online shopping websites in China; they have stored hundreds of millions of merchandise pictures in HBase, and built an end-to-end object feature extraction application to process these pictures (for image-similarity search, picture deduplication, etc.). While object detection and feature extraction are standard computer vision algorithms, this turns out to be a fairly complex data analysis pipeline when scaling to hundreds of millions pictures in production, as shown in the slide below.

在深入探讨BigDL和Analytics Zoo的技术细节之前,我在教程中分享了一个激励人的示例。 京东是中国最大的在线购物网站之一; 他们在HBase中存储了数亿张商品图片,并构建了端到端的对象特征提取应用程序来处理这些图片(用于图片相似性搜索,图片重复数据删除等)。 虽然对象检测和特征提取是标准的计算机视觉算法,但在将生产规模扩展到数亿张图片时,事实证明这是一个相当复杂的数据分析流程,如下面的幻灯片所示。

Previously JD engineers had built the solution on a 5-node GPU cluster following a “connector approach”: reading data from HBase, partitioning and processing the data across the cluster, and then running the deep learning models on Caffe. This turns out to be very complex and error-prone (because the data partitioning, load balancing, fault tolerance, etc., need to be manually managed). In addition, it also reveals an impedance mismatch of the “connector approach” (HBase + Caffe in this case) — reading data from HBase takes about half of the time (because the task parallelism is tied to the number of GPU cards in the system, which is too low for interacting with HBase to read the data).

以前,JD工程师按照“连接器方法”在5节点GPU集群上构建了该解决方案:从HBase读取数据,在集群中对数据进行分区和处理,然后在Caffe上运行深度学习模型。 事实证明,这非常复杂且容易出错(因为需要手动管理数据分区,负载平衡,容错等)。 此外,它还揭示了“连接器方法”(在这种情况下为HBase + Caffe)的阻抗不匹配-从HBase读取数据大约需要一半的时间(因为任务并行性与系统中GPU卡的数量有关) ,对于与HBase进行交互以读取数据而言太低了)。

To overcome these problems, JD engineers have used BigDL to implement the end-to-end solution (including data loading, partitioning, pre-processing, DL model inference, etc.) as one unified pipeline, running on a single Spark cluster in a distributed fashion. This not only greatly improves the development productivity, but also delivers about 3.83x speedup compared to the GPU solution. You may refer to [1] and [2] for more details of this particular application.

为了克服这些问题,JD工程师使用BigDL来实现端到端解决方案(包括数据加载,分区,预处理,DL模型推断等)作为一个统一的管道,并在一个单一的Spark集群中运行。分布式时尚。 与GPU解决方案相比,这不仅大大提高了开发效率,而且提供了约3.83倍的加速。 您可以参考[1]和[2]以获得该特定应用程序的更多详细信息。

技术:BigDL框架 (The Technology: BigDL Framework)

In the last several years, we have been driving open source technologies that seamlessly scale AI for distributed Big Data. In 2016, we open sourced BigDL, a distributed deep learning framework for Apache Spark. It is implemented as a standard library on Spark, and provides an expressive, “data-analytics integrated” deep learning programming model. As a result, users can built new deep learning applications as standard Spark programs, running on existing Big Data clusters without any change, as shown in the slide below.

在过去的几年中,我们一直在推动开放源代码技术,这些技术可以无缝地扩展AI以实现分布式大数据。 2016年,我们开源了BigDL ,这是一个用于Apache Spark的分布式深度学习框架。 它被实现为Spark上的标准库,并提供了一个富有表现力的“数据分析集成”深度学习编程模型。 结果,用户可以构建新的深度学习应用程序作为标准Spark程序,而无需进行任何更改即可在现有的大数据集群上运行,如下面的幻灯片所示。

Contrary to the conventional wisdom of the machine learning community (that fine-grained data access and in-place updates are critical for efficient distributed training), BigDL provides scalable distributed training directly on top of the functional compute model (with copy-on-write and coarse-grained operations) of Spark. It has implemented an efficient AllReduce like operation using existing primitives (e.g., shuffle, broadcast, in-memory cache, etc.) in Spark, which is shown to have similar performance characteristics compared to Ring AllReduce. You may refer to our SoCC 2019 paper [2] for more details.

与机器学习社区的传统智慧(细粒度的数据访问和就地更新对于有效的分布式培训至关重要)相反,BigDL直接在功能计算模型(使用写时复制)的基础上提供可扩展的分布式培训。和粗粒度操作)。 它使用Spark中的现有原语(例如,随机,广播,内存中的缓存等)实现了高效的类似AllReduce的操作,与Ring AllReduce相比,它具有相似的性能特征。 您可以参考我们的SoCC 2019 论文 [2]了解更多详细信息。

技术:Analytics Zoo平台 (The Technology: Analytics Zoo Platform)

While BigDL provides a Spark-native framework for users to build deep learning applications, Analytics Zoo tries to solve a more general problem: how to seamlessly apply any AI models (which can be developed using TensroFlow, PyTorch, Keras, Caffe, etc.) to production data stored in Big Data clusters, in a distributed and scalable fashion.

虽然BigDL为用户提供了Spark原生框架来构建深度学习应用程序,但Analytics Zoo试图解决一个更普遍的问题:如何无缝应用任何AI模型(可以使用TensroFlow,PyTorch,Keras,Caffe等开发)以分布式和可扩展方式访问存储在大数据集群中的生产数据。

As shown in the slide above, Analytics Zoo is implemented as a higher level platform on top of DL frameworks and distributed data analytics systems. In particular, it provides an “end-to-end pipeline layer” that seamlessly unites TensorFlow, Keras, PyTorch, Spark and Ray programs into an integrated pipeline, which that can transparently scale out to large (Big Data or K8s) clusters for distributed training and inference.

如上面的幻灯片所示,Analytics Zoo被实现为DL框架和分布式数据分析系统之上的更高级别的平台。 特别是,它提供了一个“端到端管道层”,该层将TensorFlow,Keras,PyTorch,Spark和Ray程序无缝地集成到一个集成管道中,该管道可以透明地扩展到大型(大数据或K8s)集群以进行分布式训练和推理。

As a concrete example, the slide below shows how Analytics Zoo users can write TensorFlow or PyToch code directly inline with Spark program; as a result, the program can first process the Big Data (stored in Hive, HBase, Kafka, Parquet, etc.) using Spark, and then feed the in-memory Spark RDD or Dataframes directly to the TensorFlow/PyToch model for distributed training or inference. Under the hood, Analytics Zoo automatically handles the data partitioning, model replication, data format transformation, distributed parameter synchronization, etc., so that the TensorFlow/PyToch model can be seamless applied to the distributed Big Data.

作为一个具体的示例,下面的幻灯片显示了Analytics Zoo用户如何直接在Spark程序中内联编写TensorFlow或PyToch代码; 因此,程序可以首先使用Spark处理大数据(存储在Hive,HBase,Kafka,Parquet等中),然后将内存中的Spark RDD或数据帧直接馈送到TensorFlow / PyToch模型以进行分布式训练或推断。 在后台,Analytics Zoo会自动处理数据分区,模型复制,数据格式转换,分布式参数同步等,因此TensorFlow / PyToch模型可以无缝地应用于分布式大数据。

摘要 (Summary)

In the tutorial, I also shared more details on how to build scalable AI pipelines for Big Data using Analytics Zoo, including advanced features (such as RayOnSpark, AutoML for time series, etc.) and real-world use cases (such as Mastercard, Azure, CERN, SK Telecom, etc.). For additional information, please see:

在本教程中,我还分享了有关如何使用Analytics Zoo为大数据构建可扩展AI管道的更多详细信息,包括高级功能(例如RayOnSpark,时间序列的AutoML等)和实际使用案例(例如Mastercard, Azure,CERN,SK Telecom等)。 有关更多信息,请参见:

My CVPR 2020 tutorial website (https://jason-dai.github.io/cvpr2020/)

我的CVPR 2020教程网站( https://jason-dai.github.io/cvpr2020/ )

Analytics Zoo Github repo (https://github.com/intel-analytics/analytics-zoo)

Analytics Zoo Github存储库( https://github.com/intel-analytics/analytics-zoo )

Powered-By page for Analytics Zoo (https://analytics-zoo.github.io/master/#powered-by/)

Analytics Zoo的Powered-By页面( https://analytics-zoo.github.io/master/#powered-by/ )

翻译自: https://medium.com/swlh/seamlessly-scaling-ai-for-distributed-big-data-5b589ead2434

实现无缝滑屏怎么实现

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?