Convolutional Neural Networks with PyTorch

Introduction

PyTorch is a deep learning framework developed by Facebook's AI Research lab (FAIR). Thanks to its C++ and CUDA backend, its N-dimensional arrays, called tensors, can be used on GPUs as well as on CPUs.
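A minimal sketch, assuming PyTorch is installed. It creates a tensor and moves it to a GPU when one is visible, falling back to the CPU otherwise. The shapes and values are illustrative only.

```python
import torch

# Pick the GPU if PyTorch can see a CUDA device, otherwise use the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A 2-D tensor (an N-dimensional array with N = 2), created on the CPU.
x = torch.arange(6, dtype=torch.float32).reshape(2, 3)

# .to(device) returns the tensor on the chosen device.
x = x.to(device)

# Operations run on whichever device holds the tensors.
y = x * 2 + 1
print(y.device, y.shape)
print(y.cpu().tolist())  # [[1.0, 3.0, 5.0], [7.0, 9.0, 11.0]]
```

The same code runs unchanged on machines with or without a GPU, because only `device` differs.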

A Convolutional Neural Network (ConvNet/CNN) is a deep learning model that takes an input image and assigns importance (learnable weights and biases) to various features in the image. This lets it distinguish one image from another.

A neural network is broadly organized into three kinds of layers:

- Input Layer

- Hidden Layer (there can be one or more such layers)

- Output Layer

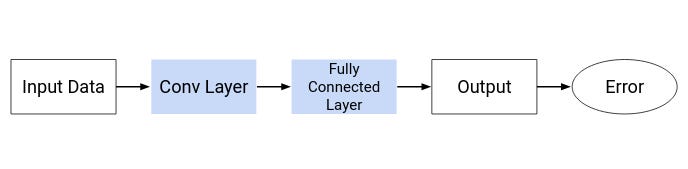
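The three kinds of layers can be sketched as a tiny fully connected network. This sketch assumes PyTorch. The layer sizes (4 inputs, 8 hidden units, 3 outputs) and the ReLU activation are illustrative choices, not values from the original text. In PyTorch the input layer is simply the input tensor, and each `nn.Linear` maps one layer to the next.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
n_inputs, n_hidden, n_outputs = 4, 8, 3

model = nn.Sequential(
    nn.Linear(n_inputs, n_hidden),   # input layer -> hidden layer
    nn.ReLU(),                       # non-linear activation in the hidden layer
    nn.Linear(n_hidden, n_outputs),  # hidden layer -> output layer
)

batch = torch.randn(5, n_inputs)  # 5 examples with 4 input features each
out = model(batch)
print(out.shape)  # torch.Size([5, 3])
```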

The hidden layers of a CNN can be further divided into two main kinds:

- Convolution Layers: these extract features from the input images.

- Fully Connected (Dense) Layers: these assign importance (weights) to the features extracted by the convolution layers to generate the output.
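This is a minimal sketch of a CNN that pairs convolution layers with fully connected layers. It assumes PyTorch and a hypothetical input of single-channel 28x28 images with 10 classes. The class name `SmallCNN` and every layer size are illustrative assumptions.

```python
import torch
from torch import nn


class SmallCNN(nn.Module):
    """Hypothetical CNN for 1-channel 28x28 images and 10 classes."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        # Convolution layers: extract feature maps from the image.
        self.features = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, padding=1),   # 1x28x28 -> 16x28x28
            nn.ReLU(),
            nn.MaxPool2d(2),                              # 16x28x28 -> 16x14x14
            nn.Conv2d(16, 32, kernel_size=3, padding=1),  # 16x14x14 -> 32x14x14
            nn.ReLU(),
            nn.MaxPool2d(2),                              # 32x14x14 -> 32x7x7
        )
        # Fully connected (dense) layers: weigh the features to produce class scores.
        self.classifier = nn.Sequential(
            nn.Flatten(),                                 # 32x7x7 -> 1568 values
            nn.Linear(32 * 7 * 7, 64),
            nn.ReLU(),
            nn.Linear(64, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = SmallCNN()
images = torch.randn(4, 1, 28, 28)  # a batch of 4 random "images"
logits = model(images)
print(logits.shape)  # torch.Size([4, 10])
```

Each `kernel_size=3, padding=1` convolution keeps the spatial size, and each `MaxPool2d(2)` halves it. That is why the first dense layer expects `32 * 7 * 7` inputs.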

Training a convolutional neural network generally involves two steps:

- Forward Propagation: the input is passed through the network using the current weights and biases, which are randomly initialized at the start. This generates the output at the end.

- Back Propagation: the error between the output and the expected result is propagated backward through the network. This computes how much each weight and bias contributed to the error (the gradient), and the values are updated accordingly. Forward propagation then runs again with the updated values, again and again, producing new outputs that minimize the error.
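The loop of forward and backward passes can be illustrated without any framework. This sketch is a simplified stand-in for a CNN: a single linear neuron trained with plain gradient descent on mean squared error. The toy data (generated from y = 2x + 1), the learning rate, and the epoch count are assumptions chosen for illustration.

```python
import random

random.seed(0)

# Toy data generated from y = 2x + 1 (the mapping the neuron should learn).
data = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
n = len(data)

# Random initialization of the weight and bias.
w = random.uniform(-1, 1)
b = random.uniform(-1, 1)
lr = 0.05  # learning rate (illustrative value)

for epoch in range(500):
    grad_w = grad_b = loss = 0.0
    for x, y in data:
        y_hat = w * x + b             # forward propagation
        err = y_hat - y
        loss += err ** 2 / n          # mean squared error
        # back propagation: d(loss)/dw and d(loss)/db for the MSE
        grad_w += 2 * err * x / n
        grad_b += 2 * err / n
    # update the weight and bias against the gradient (gradient descent)
    w -= lr * grad_w
    b -= lr * grad_b

print(round(w, 3), round(b, 3))  # approximately 2.0 and 1.0
```

In PyTorch, autograd (`loss.backward()`) replaces the manual gradient lines, and an optimizer such as `torch.optim.SGD` performs the update.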

Activation functions are mathematical functions applied to each neuron in the network. They determine whether, and how strongly, a neuron is activated based on its weighted input.
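As a sketch of what a single neuron does, assume it computes a weighted sum of its inputs plus a bias and passes the result through an activation function. ReLU and sigmoid appear here only as common examples, and the helper names are hypothetical.

```python
import math


def relu(z: float) -> float:
    # Outputs 0 for negative input and passes positive input through unchanged.
    return max(0.0, z)


def sigmoid(z: float) -> float:
    # Squashes any input into (0, 1); values near 1 mean "strongly activated".
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    # Weighted sum of the inputs plus the bias; the activation decides the output.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


inputs = [1.0, 2.0]
print(neuron(inputs, [0.5, -1.0], 0.0, relu))    # z = -1.5 -> 0.0
print(neuron(inputs, [0.5, 1.0], 0.0, relu))     # z = 2.5  -> 2.5
print(neuron(inputs, [0.0, 0.0], 0.0, sigmoid))  # z = 0.0  -> 0.5
```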

There are two types of activation functions:

- Linear Activation Functions: the inputs are multiplied by each neuron's weights, and the output signal is proportional to the input. However, the derivative of a linear activation function is a constant. The gradient used in backpropagation therefore has no relation to the input, so backpropagation cannot learn anything useful from it. Furthermore, stacking layers with linear activations collapses them into a single linear layer, turning the neural network into a single-layer network.
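Both problems can be checked numerically. This sketch assumes a hypothetical linear activation f(z) = a·z with a = 0.5 and arbitrary illustrative weights. It shows that two stacked layers compute exactly the same function as one linear layer, and that the derivative of the activation is the same constant everywhere.

```python
# Hypothetical values, chosen only for illustration.
a = 0.5              # slope of the linear activation f(z) = a * z
w1, b1 = 3.0, 1.0    # layer 1
w2, b2 = -2.0, 0.5   # layer 2


def linear_act(z):
    return a * z


def two_layer(x):
    h = linear_act(w1 * x + b1)
    return linear_act(w2 * h + b2)


# The same mapping written as ONE linear layer: w * x + b
w = a * a * w2 * w1
b = a * a * w2 * b1 + a * b2

# Raises AssertionError if the two-layer network and the single layer ever differ.
for x in [-2.0, 0.0, 1.5, 10.0]:
    assert abs(two_layer(x) - (w * x + b)) < 1e-12


def derivative(f, z, eps=1e-6):
    # Central finite-difference estimate of f'(z).
    return (f(z + eps) - f(z - eps)) / (2 * eps)


print([round(derivative(linear_act, z), 6) for z in [-5.0, 0.0, 5.0]])  # [0.5, 0.5, 0.5]
print(w, b)  # -1.5 -0.25
```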
