Doctor Algorithm

During a design project with my team last year, I stumbled on a peculiar situation. While we were trying to figure out how people build the system of values that decides which endangered species to save, we noticed that in 2017 the Department of Conservation in New Zealand let an algorithm (the Threatened Species Strategy algorithm) decide the fate of more than 150 species, based on factors like social contribution to the people, contribution to securing the widest range of taxonomic lineages, conservation status, rate of decline and conservation dependency. Put more simply, New Zealand blindly entrusted an environmental crisis to just a few lines of code, and apparently they are doing a great job. Relying on an algorithm has become today’s fashion. And why shouldn’t it? With a database in hand and a machine able to read instructions, you too can do anything! Do you want to sell a painting for $432,500? Here’s the code! Do you want to advertise your product to a specific persona? Say no more! Do you need to bring down your country’s crime rate? Try this code!

The problem, however, is that people feel they do not have the right to question or doubt those decision processes, even when the decisions are fundamentally important to their lives. Because algorithms are considered sophisticated and mathematically intellectual, people feel they lack the authority to have an opinion about them. So those algorithms take on a life of their own in terms of authenticity and, as in some kind of cyber religion, they rule masses of followers who trust any belief just because “an algorithm said so”. The rise of those WMDs (widespread, mysterious and destructive algorithms) is having a growing impact on our lives, making decisions that most people assume are undoubtedly right. So who gets hired or fired? Who gets that loan? Who gets insurance? Are you admitted into the college you wanted to get into? Law enforcement is also starting to use machine learning for predictive policing. Some judges use machine-generated risk scores to determine how long an individual is going to spend in prison.

The purpose of this essay is to analyze the role of algorithms: whether they act fairly, in what cases they might be weaponized, and whether we can do something about it before you and I end up on an endangered species list.

A bias code

First things first: what even is an algorithm? We can see it as a set of instructions, typically used to solve a class of problems, perform calculations, process data and carry out other tasks. Technically speaking, it does not need a machine to be executed and it does not have to be complicated. In a game, for example, the player is the one who executes an algorithm. The player is given a task (winning a match, being first in a race, reaching the last level) and, to accomplish it, has to read the current outcomes, input a decision and then see the results displayed by the computer. The player has to execute an algorithm in order to win. The similarity between the actions expected from the player and computer algorithms is too uncanny to be dismissed. Lev Manovich sees an algorithm as a “half that, combined with data structures, forms the ontology of the world according to a computer: they are equally important for a program to work”. That means that in any situation (machine or human) the one thing necessary to make an algorithm work is a database, a structured collection of data where every item is equally significant. In effect, the composition and structure of the database is the one thing (often underestimated) that we should put under inspection, as the quality of its components (or worse, their absence) is the source of most problems.
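
To make the pairing of instructions and database concrete, here is a minimal sketch in Python. It is not the Department of Conservation's actual algorithm: the weights, the species names and every score below are invented for illustration, and only the factor names are borrowed from the introduction. The same instructions are run over two versions of the same database, one complete and one with missing entries.

```python
from typing import Dict, List

# Hypothetical weights: the essay names the factors but not how they are weighed.
WEIGHTS: Dict[str, float] = {
    "social_contribution": 0.2,
    "taxonomic_uniqueness": 0.2,
    "conservation_status": 0.3,
    "rate_of_decline": 0.2,
    "conservation_dependency": 0.1,
}


def priority_score(record: Dict[str, float]) -> float:
    """Weighted sum of the factor scores; a factor missing from the record counts as 0."""
    return sum(weight * record.get(factor, 0.0) for factor, weight in WEIGHTS.items())


def rank(database: Dict[str, Dict[str, float]]) -> List[str]:
    """Return the names in the database, highest priority first."""
    return sorted(database, key=lambda name: priority_score(database[name]), reverse=True)


# Invented records, scored between 0 and 1 on each factor.
complete_db = {
    "species_a": {"social_contribution": 0.9, "taxonomic_uniqueness": 0.2,
                  "conservation_status": 0.5, "rate_of_decline": 0.4,
                  "conservation_dependency": 0.3},
    "species_b": {"social_contribution": 0.1, "taxonomic_uniqueness": 0.8,
                  "conservation_status": 0.9, "rate_of_decline": 0.9,
                  "conservation_dependency": 0.5},
}

# Same species, but nobody recorded species_b's status or rate of decline.
incomplete_db = {
    "species_a": dict(complete_db["species_a"]),
    "species_b": {"social_contribution": 0.1, "taxonomic_uniqueness": 0.8,
                  "conservation_dependency": 0.5},
}

ranking_complete = rank(complete_db)
ranking_incomplete = rank(incomplete_db)
print(ranking_complete)
print(ranking_incomplete)
```

The instructions never change between the two runs, yet the order of the two species flips, purely because of what is missing from the database.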

Let’s imagine a design product where an algorithm is tasked with scanning people’s faces. If the database used during machine learning, whether intentionally or not, is made up of a non-diverse group of people, any face that deviates too much from it will be harder, if not impossible, to detect. However, there are only so many times that we can call something “unintentional”. Too often people of colour, Muslims, immigrants and LGBTQ communities are the ones forgotten or, worse, targeted. In all those cases we are talking about algorithmic bias. We must not forget that those codes are set up by people in power, by companies and by people who simply want to help themselves. Even when something has been left on “default”, that is a careless way of acting and, consequently, a harmful one. After all, artefacts have politics, and most of them will probably not match the varied spectrum of thinking of their users. The tentacles of the matrix of domination have a comfortable home here and, unlike human bias, digital bias spreads much further and faster. However, not all is lost. Those databases do not materialize out of nothing. We have to act and we have to do it fast: if we do not intervene, inequality and discriminatory practices will of course increase. Luckily we still have one line of defence and it is our best weapon yet: us designers.
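
One modest way a designer could start inspecting such a database is to count who is in it before any training happens. The sketch below is only an illustration under stated assumptions: the group labels, the list of expected groups and the 10% threshold are hypothetical, and deciding which groups to check for is itself a human decision that the code cannot make.

```python
from collections import Counter
from typing import Dict, Iterable, List


def group_shares(labels: Iterable[str]) -> Dict[str, float]:
    """Share of the dataset that each group label accounts for."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(labels: Iterable[str],
                     expected_groups: Iterable[str],
                     min_share: float = 0.10) -> List[str]:
    """Expected groups whose share falls below min_share, including absent ones."""
    shares = group_shares(labels)
    return sorted(g for g in expected_groups if shares.get(g, 0.0) < min_share)


# Invented example: one label per face image in a hypothetical training set.
labels = ["group_a"] * 90 + ["group_b"] * 8 + ["group_c"] * 2
expected = ["group_a", "group_b", "group_c", "group_d"]

flagged = underrepresented(labels, expected)
print(flagged)
```

A check like this does not remove bias. It only makes one kind of absence visible early enough for someone to act on it.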

Our Role

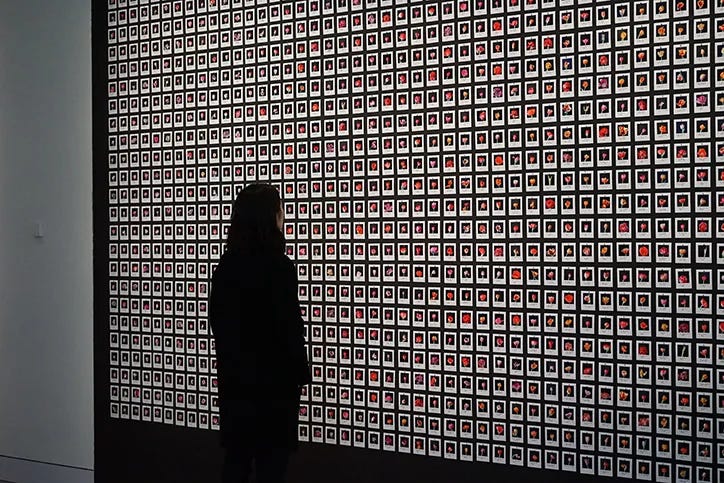

The first thing to keep in mind is that accountability does not lie with the algorithm (it is easy to blame an inanimate piece of code) but rather with the designer behind it. Who designs the database matters more than the database itself. Are they creating a full-spectrum database, built by diverse individuals who can check each other’s blind spots? Are they projecting a moral value onto the final result of the artefact? Are they thinking about the right opinion to introduce into this already full ecosystem? Of course, we are only human and, as humans, we can make many, many mistakes. The catch here is that errors can be corrected, codes can be changed and software can be updated. But when our design has the power to do immense harm to people, factors like social health, equality and representation have to be set as priorities, not as afterthoughts. Something that we should also keep in mind is why we design. Having the chance to design an algorithm does not mean that we have to do it. Do we really need to build a code capable of revealing sexual orientation just because we can? Do we have the right to decide if height has something to do with education? All those cases are, and always will be, soaked in bias, to the point where the “magical and unquestionable code” could even create new biases. In a world as pessimistic as the one I have described here, is there even hope? When an algorithm tells me the fastest path to take, the perfect partner to date or the food I am most likely to eat tonight, what even is free choice? Is my opinion still relevant? Are we just numbers in a database? One could rightly decide that we should get rid of those algorithms once and for all, but I (like, probably, the entire population of Silicon Valley) disagree: we should change our idea of the algorithm rather than try to delete it. The reason is simple: those algorithms will not go away, but we can still do something to avoid a crisis.

We should see the codes for what they really are: tools (and not creators) that can help us with our tasks. Look no further than projects like Tulipmania, a beautiful and critical piece of art by Anna Ridler that uses an algorithm to make a point about systems of social and economic currency. It is easy to overlook the labour that goes into making a database and to ignore the network of human decisions involved in machine learning, but here the artist does not want you to forget that what you see is real: real flowers, hand-picked in a real market, then photographed, labelled and arranged according to her decisions. Here the algorithm is an instrument in the hands of a creative, not the dictator of an absolute truth. It is an essential part of the artwork, not its maker.

In conclusion

In creating a world where we value inclusion and where technology works for all of us, not just some of us, algorithms need to be put in place. We should regulate their use, apply laws where we need to, and keep the right to doubt and question the morals behind those codes. We are not dealing with any supernatural force: the algorithm is human, imperfect and often driven by ulterior motives. We should remember to make equality a priority and not to let algorithms replace any kind of human work: we should coexist with them and use them as instruments to make our lives easier.

Bibliography

Agüera y Arcas, B. (2018) ‘Do algorithms reveal sexual orientation or just expose our stereotypes?’, in Medium, [accessed 18/6/2019].

Costanza-Chock, S. (2019) ‘Design Justice, A.I., and Escape from the Matrix of Domination’, in Journal of Design and Science, [accessed 19/6/2019].

Graeme, E. (2017) ‘Threatened Species Strategy Algorithm’, in Department of Conservation New Zealand, [accessed 19/6/2019].

Hosanagar, K. (2018) ‘Free Will in an Algorithmic World’, in Medium, [accessed 20/6/2019].

Manovich, L. (1999) ‘The Digital’, in Millennium Film Journal No. 34.

OBVIOUS (2018) ‘Portrait of Edmond de Belamy’.

O’Neil, C. (2016) ‘Weapons of Math Destruction’, Crown Books.

O’Neil, C. (2016) ‘Death by Algorithm’, in PBS, [accessed 19/6/2019].

Ridler, A. (2019) ‘Tulipmania’.
