An Executive's Guide to AI Applications

Co-authored by Fahim Hassan and Helen Gezahegn

It’s no secret that humans are fallible and susceptible to biases, nor is it a secret that these biases can influence algorithms to behave in discriminatory ways. However, it is hard to get a sense of how pervasive these biases are in the technology we use in our everyday lives. In today’s technology-driven world, we need to think critically about the impact of artificial intelligence on society and how it intersects with gender, class, and race.

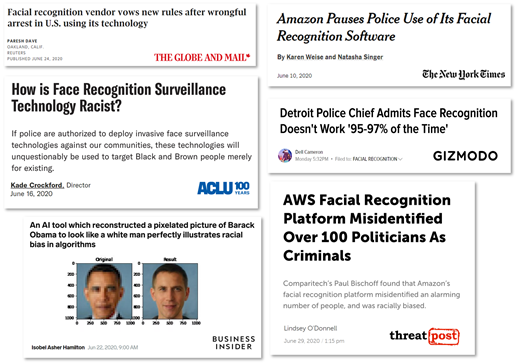

Speaking of race, just imagine being wrongfully arrested because of racial bias in an algorithm. That’s exactly what happened to Robert Julian-Borchak Williams, an African American man in Michigan who was arrested at his home in front of his wife and young children. He had not committed any crime, but facial recognition software used by the police flagged him as a shoplifting suspect. His experience of being wrongfully jailed epitomizes how flawed technology in the hands of law enforcement can magnify discrimination against Black communities.

Bias in facial recognition technology is now making headlines across the media. Recently, a number of tech companies (including giants such as Amazon, IBM, and Microsoft) announced that they would stop designing and developing facial recognition services or products, or stop selling them to state and local police departments and law-enforcement agencies. Several researchers have pointed out the limitations and inaccuracies of these technologies and voiced concerns about how they can perpetuate discrimination and racial profiling. Robert Williams’s case shows that, while these decisions by technology giants can be considered baby steps in the right direction, they will not solve the problem of racism in science, which is deeply rooted in its history.

As we realize the dangerous consequences of applying biased AI technology, we also realize our need to unlearn the racism we’ve all been taught. This raises the question: how do we ensure the technology we create can unlearn it too?

To answer the question, we need to understand how technology reinforces oppression and perpetuates racial stereotypes.

A great primer on this topic is the book “Algorithms of Oppression” by Safiya Umoja Noble, an Associate Professor of Information Studies at the University of California, Los Angeles (1). In plain language, Safiya explains the mathematical foundations behind automated decisions and how human bias is embedded in key concepts such as “big data”, “search engine”, and “algorithm”. Using a wide range of examples, she shows that even though algorithmic decision making seems like a fair and objective process, in reality it reflects human biases and racial stereotypes. The book encourages readers to pay attention to the search engines and other information portals they use to find information, and to ask critical questions: “Is this the right information? For whom?”

Another great read on the same topic is “Weapons of Math Destruction” by Cathy O’Neil, a Harvard-trained mathematician and data scientist who is known for her blog mathbabe.org (2). Like Safiya, Cathy uses several case studies to raise awareness of the social risks of algorithms that influence policy decisions and amplify inequality and bias. These cases draw on her own career on Wall Street and her extensive investigative research; examples include algorithms used to hire and fire people in the modern workplace and to decide who gets access to credit. As a vocal advocate of algorithmic fairness, she has shared her experiences through lectures and presentations (check out her TED talk, this short video animation, or her lecture at Google).

So what can we do to address these biases in algorithms?

First of all, we have to understand the design process behind these biased, pervasive technologies, which is the central focus of the book “Race After Technology: Abolitionist Tools for the New Jim Code” by Ruha Benjamin (3). As an Associate Professor of African American Studies at Princeton University, Ruha studies and teaches the relationship between race, technology, and justice. She describes her job as being “to create an environment in which they (students) can stretch themselves beyond the limits they may inadvertently set for their intellectual growth.” This philosophy runs through her books and presentations as well. In addition to writing academic articles, she speaks tirelessly about the collective effort and civic engagement required to fight this battle. A quick and easy way to get familiar with her work is this video presentation hosted at Harvard’s Berkman Klein Center for Internet & Society, where she discusses “a range of discriminatory designs that encode inequity: by explicitly amplifying racial hierarchies, by ignoring but thereby replicating social divisions, or by aiming to fix racial bias but ultimately doing quite the opposite.”

Secondly, we can team up to overcome these flawed design approaches. The common thread that will knit us together is empathy, which is central to Joy Buolamwini’s spoken-word performance at the World Economic Forum, “Compassion through Computation: Fighting Algorithmic Bias”. Joy, a computer scientist at the MIT Media Lab, has been a champion of algorithmic fairness. She founded the Algorithmic Justice League (AJL), an organization of interdisciplinary researchers dedicated to designing more inclusive technology and mitigating the harmful impact of AI on society. In collaboration with the Center on Privacy & Technology at Georgetown Law, AJL developed the Safe Face Pledge, which helps organizations make public commitments to algorithmic fairness.

If you prefer streaming, you can watch Joy Buolamwini’s TED Talk “How I’m fighting bias in algorithms”. Several podcasts are also worth a listen, for example “Respecting data” with Miranda Mowbray, “How to design a moral algorithm” with Derek Leben, and the discussion on AI ethics and legal regulation at the University of Oxford.

If you have made it this far down the list and are hungry to learn more, check out the following:

● “Dear Algorithmic Bias”, a discussion at Google with Logan Browning (that’s right! She is from Netflix’s Dear White People!) and Avriel Epps-Darling (a PhD student at Harvard studying the intersection of music, technology, youth of color, and their gendered identities).

● A related talk is “Can Algorithms Reduce Inequality?” by Rediet Abebe, a computer science researcher and Assistant Professor at the University of California, Berkeley. She also organized the workshop on AI for Social Good in 2019 and co-founded Black in AI with Timnit Gebru. Timnit, a computer scientist at Google, is another inspiring figure in the area of algorithmic bias.

● Another fierce critic of facial recognition technology is Deborah Raji, an engineering student at the University of Toronto who is currently a technology fellow at the AI Now Institute. For a quick read, check out the New York Times article that covered her academic work.

● Stay tuned for the documentary film “Coded Bias”. Inspired by the work of Joy Buolamwini, the film explores two key questions: “what is the impact of Artificial Intelligence’s increasing role in governing our liberties? And what are the consequences for people stuck in the crosshairs due to their race, color, and gender?” For behind-the-scenes stories, you can also watch the Q&A with filmmaker Shalini Kantayya and the influential researchers mentioned above: Safiya Noble, Deborah Raji, and Joy Buolamwini.

Call for collective action

Reading on these topics can help us understand our world better, a world that is being shaped by rapid innovation in technology. These issues affect our society at large, so we need to have discussions beyond academic circles, among our friends and family members (let’s spice up the dinner-table conversation, shall we?). If technology is built for us, then we humans need to be at the center of the design process. It is as simple as that. To make such participatory design a reality, let’s pay closer attention to the design of both new and existing technologies. Any time we see media hype about technology saving the world, let’s talk about how it has been designed. For whom? To do what? And as we talk, let’s connect our own life experiences to the books we are reading, the podcasts we are listening to, and the videos we are watching (or hopefully binge-watching!).

Authors

Fahim Hassan and Helen Gezahegn are BIPOC students at the University of Alberta, Canada. Fahim is a PhD student in public health with an interest in machine learning. He is also an advisory council member for Alberta Health Services. Helen is studying computing science; she is also the president of a student group called Ada’s Team that’s dedicated to promoting diversity in STEM with a special focus on technology at the University of Alberta.

Disclaimer

The authors are responsible for their own views. The opinions expressed here do not reflect the views of their employer(s) or the university.

Acknowledgement

Thanks to JuSong Baek for the illustration and Susie Moloney for her thoughtful review and feedback. We also appreciate the encouragement from the members of Black Graduate Students Association and Ada’s Team at the University of Alberta, Canada.

(1) Noble SU. Algorithms of oppression: how search engines reinforce racism. New York: New York University Press; 2018.

(2) O’Neil C. Weapons of math destruction: how big data increases inequality and threatens democracy. 1st ed. New York: Crown; 2016.

(3) Benjamin R. Race after technology: abolitionist tools for the new Jim code. Cambridge, England; Medford, Massachusetts: Polity Press; 2019.

Source: https://towardsdatascience.com/addressing-racial-bias-in-ai-a-guide-for-curious-minds-ebdf403696e3
