Linear Regression with Gradient Descent

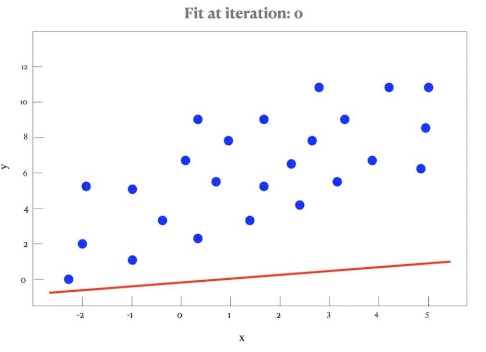

Linear regression is one of the most popular supervised machine learning algorithms. It predicts values within a continuous range (e.g. sale prices, life expectancy, temperature) instead of classifying them into categories (e.g. car, bus, bike). The main goal of linear regression is to find the best-fitting line, the one that best explains the relationship between the variables in the data.

The best-fitting line (or regression line) is represented by:

$$y = mx + b$$

where m is the slope of the line (also known as the angular coefficient) and b is the y-intercept, the point at which the line crosses the y-axis (also known as the linear coefficient).

The main challenge in finding the regression line is to determine the values of m and b such that the corresponding line is the best-fitting line, that is, the line that gives the minimum error.
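
To make "minimum error" concrete, here is a minimal sketch that scores a candidate line y = m·x + b against some data. It assumes mean squared error (MSE) as the error measure, which the text so far does not specify; the function names and the sample points are hypothetical and chosen only for illustration.

```python
def predict(x, m, b):
    """Value of the line y = m*x + b at x."""
    return m * x + b


def mean_squared_error(xs, ys, m, b):
    """Average squared vertical distance between the points and the line.

    MSE is an assumed error measure; other choices are possible.
    """
    n = len(xs)
    return sum((y - predict(x, m, b)) ** 2 for x, y in zip(xs, ys)) / n


# Hypothetical data lying exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

error_good = mean_squared_error(xs, ys, m=2.0, b=1.0)  # the line the data came from
error_bad = mean_squared_error(xs, ys, m=1.0, b=0.0)   # a poorer candidate line
print(error_good, error_bad)
```

The candidate with the smaller error is the better fit; finding the regression line amounts to searching for the pair (m, b) that makes this number as small as possible.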

How do we find the best values of m and b?

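One option is to use a method such as Ordinary Least Squares (OLS), which is an analytical, non-iterative solution. OLS is a type of linear least squares method for estimating the unknown parameters. For a single independent variable, the OLS estimates are defined by:

$$m = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}, \qquad b = \bar{y} - m\,\bar{x}$$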

where x is the independent variable, x̄ is the mean of the independent variable, y is the dependent variable, and ȳ is the mean of the dependent variable.
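
As a rough sketch of the analytical route, the snippet below computes m and b with the standard closed-form estimates for simple linear regression, m = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and b = ȳ − m·x̄. The function name `ols_fit` and the sample data are hypothetical, and the code assumes a single independent variable.

```python
def ols_fit(xs, ys):
    """Closed-form OLS estimates (m, b) for the line y = m*x + b.

    Assumes one independent variable and at least two distinct x values.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n

    # Numerator: co-variation of x and y around their means
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    # Denominator: variation of x around its mean
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    m = sxy / sxx
    b = y_mean - m * x_mean
    return m, b


# Hypothetical data lying exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
m_ols, b_ols = ols_fit(xs, ys)
print(m_ols, b_ols)
```

No iteration is involved: the estimates come from a single pass over the data to compute the means and a second pass to compute the two sums.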

OLS is a good option for solving the linear regression problem, because the model is linear in its coefficients and the solution reduces to a set of linear equations. However, applying OLS to complex, non-linear machine learning algorithms such as neural networks or support vector machines is not feasible, because the OLS solution does not scale to them.

Instead of OLS, we will find a numerical approximation using an iterative method. Gradient descent is one of the most widely used optimisation algorithms: rather than computing an exact analytical solution, it approximates one through an iterative procedure that explores the parameter space efficiently.
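
The iterative idea can be sketched as follows. This is a minimal illustration, not a definitive implementation: it assumes mean squared error as the quantity being minimised, starts from m = 0 and b = 0, and uses a fixed learning rate and iteration count. The names `gradient_descent`, `learning_rate` and `n_iterations`, their default values, and the sample data are all assumptions made for this example.

```python
def gradient_descent(xs, ys, learning_rate=0.05, n_iterations=2000):
    """Approximate (m, b) for y = m*x + b by repeatedly stepping downhill.

    Assumes MSE as the loss: (1/n) * sum((m*x + b - y)^2).
    """
    n = len(xs)
    m, b = 0.0, 0.0  # arbitrary starting point

    for _ in range(n_iterations):
        # Prediction error of the current line at every data point
        errors = [(m * x + b) - y for x, y in zip(xs, ys)]

        # Partial derivatives of the MSE with respect to m and b
        grad_m = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)

        # Move against the gradient, scaled by the learning rate
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b

    return m, b


# Hypothetical data lying exactly on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
m_gd, b_gd = gradient_descent(xs, ys)
print(m_gd, b_gd)
```

The learning rate controls the step size: too large and the updates can diverge, too small and convergence is slow. The defaults here happen to work for this small data set and would likely need tuning for other data.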
