A Simple Explanation of BP Neural Networks

What would you do with an artificial brain? Do your homework? Beat the stock market? Take over the world? The possibilities are endless. What if I told you that humans have already built an artificial brain?

In fact, we have! Well, kinda. They're not as complex as our brains, but they're extremely powerful tools that have the power to change the world. They're called Neural Networks.

What's a Neural Network?

A Neural Network (or NN for short) is a group of connected nodes that are able to take in information, process it, and then produce an output.

One example of a NN is our brain! It consists of lots and lots of connected biological neurons that take in information (words on the screen), process it, then produce an output (understanding the words and storing the information).

Biological Neural Networks:

Understanding how biological neurons work is key to an intuitive understanding of artificial neurons. First, let's go through the physical parts of a biological neuron, which we will later compare with the parts of an artificial neuron.

Parts of a biological neuron:

Dendrites: Take in inputs that come from other neurons

Cell Body: Combines all the information that the dendrites collected and does some computation. Depending on the result, it either sends a signal (whose strength can vary) or sends no signal at all

Axon: Takes the signal produced by the cell body and transports it toward the axon terminals

Axon Terminals: Send out the signal to other neurons

Note: Before moving on, it's absolutely necessary to familiarize yourself with the key definitions of ML and Deep Learning in order to get an intuitive feel for ANNs. Hop on to my short article for those key definitions.

Artificial Neural Networks:

Now onto the main topic of the article: Artificial Neural Networks (ANNs)! ANNs are not physical NNs like our brains (but they can be!); they're actually just software running on computers!

Let’s first look at the components of an ANN then dive into how they interact with each other.

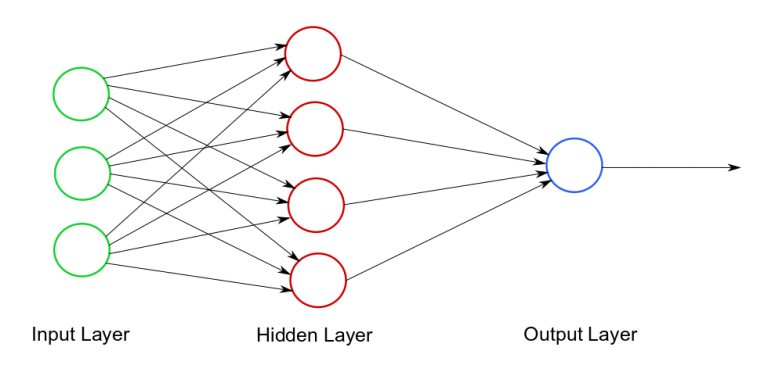

Parts of a neural network:

Nodes: Take in the outputs of previous nodes, do some computation, and output the result. Nodes are similar to the cell body, dendrites, and axon terminals in biological NNs

Synapses: Transfer the output signal of one node to the input of the next node. Like the axons in biological NNs

Input Layer: Where the input data (in the form of numbers) is inserted

Hidden Layer: The layer(s) between the input layer and the output layer (Bonus: if you have more than 1 hidden layer, your NN graduates from a Simple NN to a Deep NN)

Output Layer: The layer with the final output of the NN

How do Neural Networks work?

How the NN actually works:

1. First, there will be some input data, which we place into the input layer. The input data is in the form of numbers

2. This data is sent through the synapses. While the data from the previous node travels through a synapse, it is multiplied by a parameter and a bias is added. This amplifies or dampens the signal

3. This signal is fed into the next node, where it is summed together with all the other incoming signals. The sum is then passed on into the next synapses

4. Repeat the "compute + send" steps for as many layers as there are

5. The final output will be some number(s), which we can interpret as our result

These 5 steps (+ another step explained a bit later) are a simplified version of what our brains go through to process information! ANNs are modeled after our brains!
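The five steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the network shape (2 inputs, 2 hidden nodes, 1 output), the function names `node` and `layer`, and every parameter and bias value are made up, and a single bias per node is assumed.

```python
def node(inputs, params, bias):
    """Steps 2-3: scale each incoming signal by its parameter, sum them, add the bias."""
    total = bias
    for x, p in zip(inputs, params):
        total += x * p  # the parameter amplifies or dampens the signal
    return total

def layer(inputs, layer_params, layer_biases):
    """Run every node of one layer on the same incoming signals."""
    return [node(inputs, p, b) for p, b in zip(layer_params, layer_biases)]

# Step 1: the input data, as numbers (hypothetical values).
inputs = [1.0, 2.0]

# Steps 2-4: a hidden layer with two nodes, then an output layer with one node.
hidden = layer(inputs, [[0.5, -1.0], [2.0, 1.0]], [0.0, 1.0])
output = layer(hidden, [[1.0, 0.5]], [-1.0])

# Step 5: the final number(s), ready to be interpreted.
print(hidden, output)
```

Adding more layers just means calling `layer` again on the previous layer's result.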

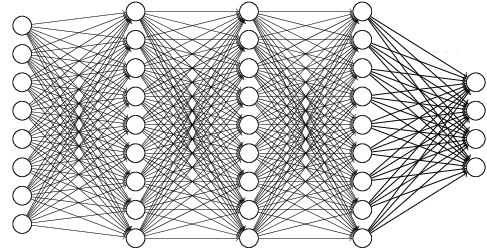

The image above looks a bit complicated, with lots of nodes in layers and loads of synapses connecting them, but it's still not reflective of real NNs. In real life, NNs can have thousands of nodes in each layer and dozens of layers, though the process is exactly the same. But the question remains: how is the computer going to compute all of this?

Programming NNs:

Everything in a NN is a number. These numbers are grouped into vectors (lists of numbers), which interact with each other through dot products. We do this because computers are insanely fast at processing vectors and dot products.

Let's run through the process again, but with vectors this time:

1. The input layer will be a vector of the input data (Ex: a single image or a single list of attributes)

2. This vector will be passed on to every single node in the next layer

3. In each node there will be a vector of parameters, one parameter for each incoming synapse. The 2 vectors are combined with a dot product to produce a single number. A bias is added to this number to produce the node's output

4. Now the whole layer is a bunch of outputs, which we combine into a vector

5. This vector will be passed along. The process repeats until it reaches the final layer

Notice how in step 3, we multiply our inputs by some constant, then add in another constant? That sounds exactly like a linear function from grade 9! Yup, that equation you had to memorize, y = mx + b, has a real-life application (finally)! The y is the output, m is the parameter, x is the input, and b is the bias! We call step 3 a linear layer.
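Here is a minimal sketch of a linear layer written with dot products, where each node computes the vector version of y = mx + b. The helper names `dot` and `linear_layer` and all the numbers are assumptions chosen for illustration, not something from a particular library.

```python
def dot(a, b):
    """Dot product of two equal-length lists of numbers."""
    return sum(x * y for x, y in zip(a, b))

def linear_layer(x, weights, biases):
    """Each row of `weights` is one node's parameter vector (the m in y = mx + b)."""
    return [dot(row, x) + b for row, b in zip(weights, biases)]

x = [1.0, 2.0, 3.0]        # input vector (hypothetical data)
W = [[1.0, 0.0, -1.0],     # parameters of node 1
     [0.5, 0.5, 0.5]]      # parameters of node 2
b = [0.5, -1.0]            # one bias per node

y = linear_layer(x, W, b)  # the layer's outputs, combined into a vector
print(y)
```

The output vector `y` has one entry per node, and it becomes the input vector of the next layer.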

If you're paying extra attention, a question might have popped up: a bunch of linear layers stacked in a NN could be combined into 1 layer, so why have all those layers? That's an excellent point, and it's addressed by the key missing step: the non-linearity.
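To see why stacked linear layers collapse, here is a small sketch that folds two linear layers into one and checks that both versions give the same output. The helper `combine` and all the numbers are hypothetical; the only fact used is that W2(W1 x + b1) + b2 equals (W2 W1) x + (W2 b1 + b2).

```python
def dot(a, b):
    """Dot product of two equal-length sequences of numbers."""
    return sum(x * y for x, y in zip(a, b))

def linear_layer(x, weights, biases):
    """One linear layer: a dot product plus a bias for every node."""
    return [dot(row, x) + b for row, b in zip(weights, biases)]

def combine(W1, b1, W2, b2):
    """Fold layer 1 followed by layer 2 into a single equivalent linear layer."""
    columns = list(zip(*W1))                                 # columns of W1
    W = [[dot(row, col) for col in columns] for row in W2]   # W2 times W1
    b = [dot(row, b1) + c for row, c in zip(W2, b2)]         # W2 times b1, plus b2
    return W, b

# Hypothetical parameters for two stacked linear layers.
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [1.0, 0.0]
W2, b2 = [[2.0, 1.0]], [3.0]
x = [1.0, 1.0]

two_step = linear_layer(linear_layer(x, W1, b1), W2, b2)
W, b = combine(W1, b1, W2, b2)
one_step = linear_layer(x, W, b)
print(two_step, one_step)  # both lists hold the same numbers
```

However many linear layers are stacked, the same folding can be repeated until only one is left.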

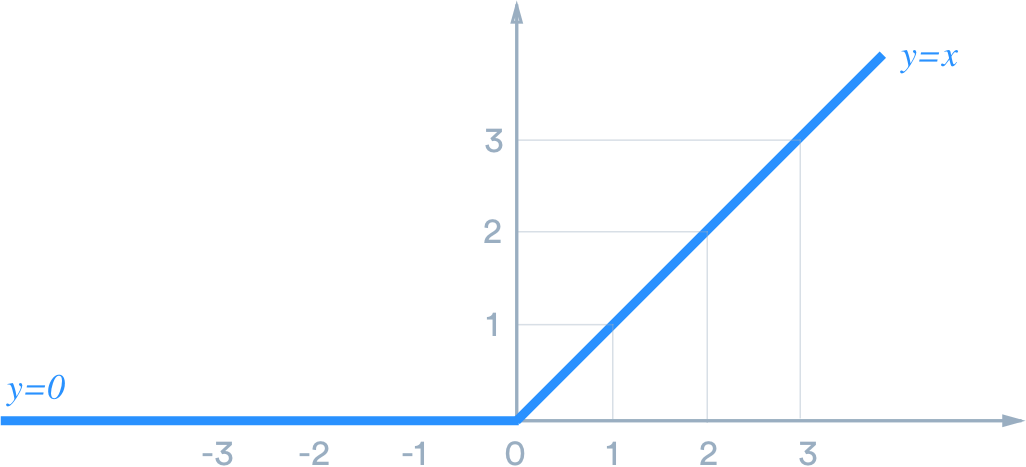

The Non-Linearity Function:

By adding a non-linearity between each pair of linear layers, we keep them from collapsing into one! All we have to do is apply the non-linearity function to all outputs of the linear layer.

There are countless non-linearity functions out there. The most popular and simple non-linearity is called ReLU. Stick with that one because it's simple, fast and easy to understand!

Note: the layers of non-linear functions are called activation layers.
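ReLU itself is tiny: it keeps positive numbers as they are and turns negative numbers into 0. A minimal sketch, with the function names `relu` and `activation_layer` and the sample values chosen just for this example:

```python
def relu(value):
    """ReLU: return the value if it is positive, otherwise 0."""
    return max(0.0, value)

def activation_layer(values):
    """Apply the non-linearity to every output of a linear layer."""
    return [relu(v) for v in values]

linear_out = [-1.5, 2.0, 0.0]             # hypothetical outputs of a linear layer
activated = activation_layer(linear_out)  # negatives become 0, the rest pass through
print(activated)
```

The activated vector is what gets passed along to the next linear layer.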

Hold on, why do we need so many layers? Wouldn't 1 do? The answer: The Universal Approximation Theorem

The Universal Approximation Theorem roughly states that if you have enough linear layers and non-linear layers, with enough nodes in them, you can approximate any continuous function to arbitrary accuracy. In other words, NNs can do anything (in theory at least)!
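As a small taste of what the non-linearity buys us (an illustration, not a proof of the theorem), here is a hand-built network that computes the absolute value |x|, something no single linear layer y = mx + b can do. The parameters are picked by hand for this example rather than learned, and `tiny_network` is a made-up name.

```python
def relu(value):
    """ReLU: return the value if it is positive, otherwise 0."""
    return max(0.0, value)

def tiny_network(x):
    """Linear layer (2 nodes, biases of 0), then ReLU, then a linear output node."""
    hidden = [relu(1.0 * x), relu(-1.0 * x)]  # parameters 1 and -1
    return 1.0 * hidden[0] + 1.0 * hidden[1]  # output parameters 1 and 1, bias 0

samples = [-2.0, -0.5, 0.0, 3.0]
outputs = [tiny_network(x) for x in samples]
print(outputs)  # matches abs(x) for every sample
```

With more nodes and layers, the same kind of bending and adding can follow much more complicated curves.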

Now that we understand how NNs work, we can dive into the process of training them! Hop onto my next article to find out more!

Thanks for reading and happy learning! :D

Special thanks go out to Eason Wu for proofreading my article and Davide Radaelli for helping me out!
