Microservices: Solving a Problem Like Routing (2020 Update)

Well, where did those last two years go? Apologies for my tardiness, time really got the better of me. The good news is, we’ve had a further two years of production experience, improving performance, catching bugs, adding features and completely changing our infrastructure.

In the final part of this series I’m going to run a retrospective on Locer (Localisation, Errors and Routing): what went well, what we had to improve and what I would do differently.

If you have just come into this series I recommend catching up on Part 1 and Part 2 first.

What went well 😃

Locer is now routing frontend requests for the following brands:

It is currently handling an average of half a billion requests a month.

Node

In the conclusion of Part 2 I discuss why we selected Node over Go. There were two main factors in selecting JavaScript:

Contributors

We wanted to make sure developers felt free and empowered to contribute to the project. While Go is growing within the company (thanks to Kubernetes), the team that would use Locer day-to-day mainly uses JavaScript. We wanted to keep the language and tools familiar to eliminate context switching.

Locer has now had 23 different contributors to the core codebase, along with 43 people submitting changes to configuration and new routing rules. I consider this a huge success.

Performance and scalability

We knew Go would outperform Node, but we wanted to make sure our language selection would not become a bottleneck. We needed the application itself to add as little overhead as possible while proxying requests, while still meeting the requirements set out in Part 1.

Through both Jaeger and server timing headers, we know how much of a request’s time is spent waiting on network responses from an upstream service and how much is spent in Locer itself.

This example trace shows that Locer called the product listing application, which fetched some data and rendered a response. Locer received this response and added only 1.55ms of processing time. We allowed a budget of 5ms for this processing, and so far Locer has remained under it.
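To make the split between upstream time and proxy time concrete, here is a minimal sketch of how a Server-Timing value could be derived. The function names and the metric names `upstream` and `locer` are assumptions for illustration, not Locer’s actual code; only the header format (`name;dur=milliseconds`, comma-separated) comes from the Server-Timing specification.

```javascript
// Hypothetical helpers: only the Server-Timing value format is standard.

// Time spent inside the proxy = total request time minus time waiting on upstream.
function proxyOverheadMs(totalMs, upstreamMs) {
  return Math.max(0, totalMs - upstreamMs);
}

// Build a Server-Timing header value with one metric per hop.
function serverTimingHeader(totalMs, upstreamMs) {
  const overhead = proxyOverheadMs(totalMs, upstreamMs);
  return `upstream;dur=${upstreamMs.toFixed(2)}, locer;dur=${overhead.toFixed(2)}`;
}

// True while the proxy stays inside its processing budget (5ms in this article).
function withinBudget(totalMs, upstreamMs, budgetMs = 5) {
  return proxyOverheadMs(totalMs, upstreamMs) <= budgetMs;
}

console.log(serverTimingHeader(50.25, 48.75));
console.log(withinBudget(50.25, 48.75));
```

In a Fastify application a value like this could be set on the reply from a hook, which is how the hooks described later in this article are used.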

Since the majority of the work is asynchronous, with a computation time of less than 5ms, we’re able to run a relatively low number of instances and handle all of our traffic through one Node application.

Thoughts on Node

Something that has been really amazing to see since we released Locer is that whenever Node releases a new version into LTS, Locer becomes much more efficient.

While we could potentially save money on operational costs if the application was written in Go, we saved on engineering costs by opting for JavaScript. Over time those savings will decrease, but we are still very happy with our choice. It’s also worth considering that by the time the two cost curves intersect, we may already be in the position of evolving our architecture again.

Fastify

As discussed in Part 2, maximising requests per second with a consistent latency was massively important to the project. Fastify helped us achieve that.

Fastify is a web framework highly focused on providing the best developer experience with the least overhead and a powerful plugin architecture. It is inspired by Hapi and Express and as far as we know, it is one of the fastest web frameworks in town.

As well as performance, there were some other key features within Fastify that we loved.

Logging

Within our Node apps we already use Pino for logging. Fastify ships with it out of the box and exposes it on the request object.

    import fastify from 'fastify';

    // Enable the built-in Pino logger
    const app = fastify({
      logger: true
    });

    // Route options (schema, hooks and so on); empty for this example
    const options = {};

    app.get('/', options, (req, res) => {
      // Each request carries its own logger instance
      req.log.info('Some info about the current request');
      res.send({ hello: 'world' });
    });

Hooks

Hooks allow you to listen to specific events in the application or request/response lifecycle. We use them for a range of things: registering redirects, adding server timing headers and, as a really simple example, adding an “x-powered-by” header to the response.

    import fp from 'fastify-plugin';

    export default () => {
      return fp((app, options, next) => {
        // Runs before every route handler
        app.addHook('preHandler', (req, res, done) => {
          res.header('x-powered-by', 'locer');
          done();
        });

        next();
      });
    };

Decorators

The decorators API allows customisation of the core Fastify objects, such as the server instance itself and any request and reply objects used during the HTTP request lifecycle. Again, you can find decorators throughout the Locer code. We found them most useful when handling errors.

    // Adds res.errorPage(...) to every reply object
    app.decorateReply('errorPage', (statusCode, req, res, error) => {
      req.log.error(error);

      res.code(statusCode)
        .type('text/html')
        .send(`
          <div>
            Error: ${error.message}
            Status code: ${statusCode}
          </div>
        `);
    });

From there, whenever you have access to the response object (I have used the Express naming convention; Fastify refers to it as Reply), you can respond with your error page:

    import got from 'got';

    app.get('/', options, async (req, res) => {
      try {
        // '/api' is a placeholder for the upstream service URL
        const { statusCode, body } = await got('/api');

        if (statusCode === 200) {
          res.send(body);
        } else {
          res.errorPage(statusCode, req, res, new Error('Invalid status code'));
        }
      } catch (error) {
        // got rejects on network failures and, by default, on unsuccessful status codes
        res.errorPage(503, req, res, new Error('Fetch failed'));
      }
    });

Thoughts on Fastify

We love Fastify! We already had a lot of IP built around Express, but the great news was that it just worked out of the box with Fastify. If you are starting a project and have traditionally always opted for Express, consider moving; you won’t be disappointed.

Where we made improvements 🤔

With any piece of software, you will find things to improve and ways to fine-tune performance over time. Locer was no different: while we tested it rigorously, there were some things we didn’t see until it was in the wild.

Memory and CPU consumption improvements

As part of keeping our costs down and the application stable, we were constantly looking to improve its performance within our cluster. One of the biggest contributors to that was the V8 improvements in Node releases, but here are some additional changes we made that could help you.

Fastify requesting buffers and returning promises

When the project started, we always inspected the response from our services to understand how to handle the request. This added the large overhead of processing big HTML responses. Fastify, however, lets us deal with buffers and promises as responses. This change massively reduced our application’s CPU usage.

This change also meant we could now proxy other types of requests, such as ones for images.
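As a rough illustration of why passing buffers through matters for binary content such as images, the sketch below compares handing the upstream bytes on untouched with decoding them to text first. The helper names are hypothetical and this is not Locer’s code; it only shows that a text round trip can alter bytes that are not valid UTF-8.

```javascript
// A few bytes standing in for the start of a binary payload such as a PNG.
const upstreamBody = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

// Pass-through: hand the same bytes to the client without decoding them.
function passThrough(body) {
  return body;
}

// Inspecting: decode to text (as you would to examine HTML) and re-encode.
function viaString(body) {
  return Buffer.from(body.toString('utf8'), 'utf8');
}

// The pass-through bytes are identical to what upstream sent.
console.log(upstreamBody.equals(passThrough(upstreamBody)));
// 0x89 is not valid UTF-8 on its own, so the round trip changes the bytes.
console.log(upstreamBody.equals(viaString(upstreamBody)));
```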

Tuning your Docker image

We spent a long time tuning our Docker images with multi-stage builds and the correct base image for what we needed. We managed to go from over 800MB to around 100MB.

For a good explanation on how you can do this, read this great article: 3 simple tricks for smaller Docker images

One change from this article though: when starting your image, don’t start the application through npm or Yarn; start it with node directly.

    CMD ["node", "index.js"]

This can save you an additional 70MB of RAM.

Service readiness

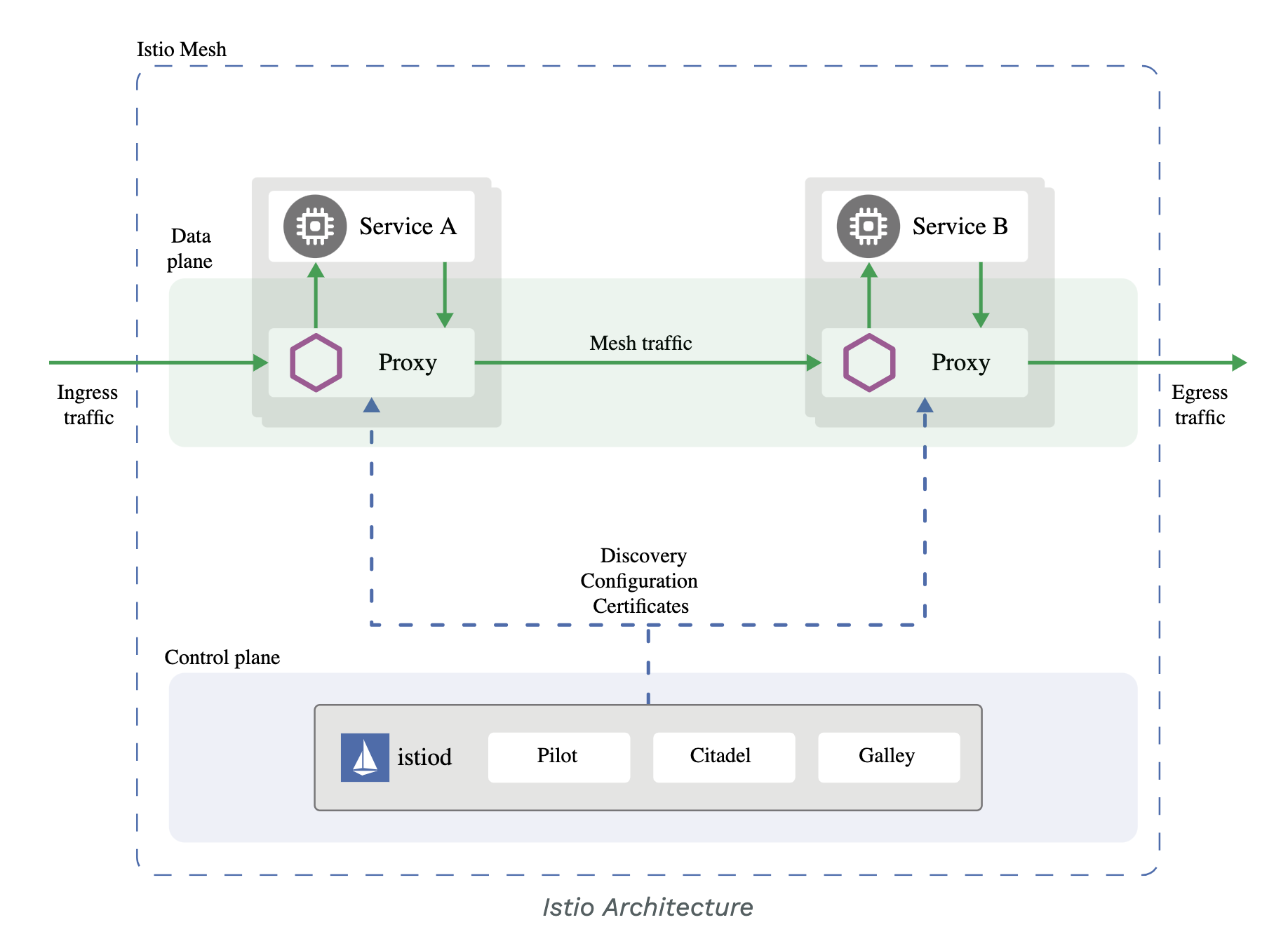

One of the problems we encountered when moving to Kubernetes and Istio (our service mesh) was around boot time dependencies. Locer needs to call our country service before it can serve traffic - this is how it can validate locales in URL patterns. The problem was that since Locer started so quickly, the call would happen before the Istio sidecar was available to handle network requests.

This meant the application would start and, because the healthcheck endpoint was available, Kubernetes would add it to the pool of available pods. The boot time dependency would fail as Istio was not ready, and the application would therefore be stuck receiving requests it could not process. We solved this in two ways:

Boot dependency retries

When the app started, if it failed to fetch from the country service it would retry with an exponential backoff. This would give the Istio sidecar time to start and handle our requests.
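A minimal sketch of retrying with an exponential backoff is shown below. The function names, the base delay, the cap and the retry count are all assumptions for illustration; the article does not say which values or library Locer uses.

```javascript
// Delay before retry number `attempt` (0-based): base, 2x base, 4x base, ... capped at maxMs.
function backoffDelayMs(attempt, baseMs = 100, maxMs = 5000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Call `task` until it resolves, waiting longer after each failure.
// `task` stands in for the boot-time call to the country service.
async function retryWithBackoff(task, { retries = 5, baseMs = 100, maxMs = 5000 } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await task();
    } catch (error) {
      if (attempt >= retries) throw error; // give up once all retries are used
      await sleep(backoffDelayMs(attempt, baseMs, maxMs));
    }
  }
}

// Usage: a fake fetch that fails twice (sidecar not ready) and then succeeds.
let calls = 0;
retryWithBackoff(async () => {
  calls += 1;
  if (calls < 3) throw new Error('sidecar not ready');
  return ['en-gb', 'fr-fr'];
}, { baseMs: 10 }).then((locales) => console.log(`fetched after ${calls} attempts`, locales));
```

Capping the delay keeps a slow-starting sidecar from pushing the wait out indefinitely, and rethrowing after the last retry lets the process fail visibly instead of sitting in a stuck state.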

Readiness endpoint

Previously we were only using healthcheck endpoints, which meant we started allowing traffic straight away. We now have an endpoint that returns a 5xx until the country service has been successfully fetched. It then switches to returning a 200, letting Kubernetes know Locer is ready to accept traffic.
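The readiness behaviour can be sketched as a small piece of state that flips once the boot-time dependency has loaded. The names here, including the `/ready` path in the comment, are hypothetical, and 503 is just one reasonable choice of 5xx.

```javascript
// Tracks whether boot-time dependencies have loaded. Names are illustrative.
function createReadiness() {
  let ready = false;
  return {
    // Call once the country service has been fetched successfully.
    markReady() {
      ready = true;
    },
    // Status code a readiness endpoint would return to Kubernetes.
    statusCode() {
      return ready ? 200 : 503;
    },
  };
}

const readiness = createReadiness();
console.log(readiness.statusCode()); // before the country service has loaded
readiness.markReady();
console.log(readiness.statusCode()); // after a successful fetch

// In a Fastify app this could back a route such as (hypothetical path):
// app.get('/ready', (req, res) => res.code(readiness.statusCode()).send());
```

Pointing the Kubernetes readiness probe at an endpoint like this, and keeping the healthcheck for liveness, keeps a pod out of the pool until it can actually serve traffic.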

What I might do differently 💡

While we are very happy with what we have, if I were starting now, given the direction our architecture has taken and how the industry has moved, there are a few things I might do differently.

From Locer’s inception through to production, we were running all of our microservices in Elastic Beanstalk. Once Locer was up and running it became apparent our infrastructure did not scale in the way we wanted, so a small team and I started investigating moving the frontend to Kubernetes. We wanted ease of deployment but also wanted to include a service mesh for observability, so it was agreed our new infrastructure would include both Kubernetes and Istio.

While Locer still worked within this architecture, it also introduced some complexity when we started to build server-side A/B testing. Istio has the concept of traffic management, which allows you to do things like load balancing, weighted traffic (A/B) and canary releases. Here is an example of weighted routing rules:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: reviews
    spec:
      hosts:
        - reviews
      http:
        - route:
            - destination:
                host: reviews
                subset: v1
              weight: 75
            - destination:
                host: reviews
                subset: v2
              weight: 25

This would mean 75% of customers get the control application and 25% get the experimental variant.
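To show what a 75/25 weighting means per request, here is a small sketch of weighted selection. It is an illustration of the idea only, with a hypothetical `pickSubset` function, and makes no claim about how Istio or Envoy implement it internally.

```javascript
// Pick a subset by weight. `roll` is a number in [0, 100);
// in real use it would come from Math.random() * 100.
function pickSubset(routes, roll) {
  let cumulative = 0;
  for (const { subset, weight } of routes) {
    cumulative += weight;
    if (roll < cumulative) return subset;
  }
  // Guard for weights that sum to less than 100.
  return routes[routes.length - 1].subset;
}

// Mirrors the weights in the routing rules above.
const routes = [
  { subset: 'v1', weight: 75 },
  { subset: 'v2', weight: 25 },
];

// Rough check of the split over many simulated requests.
const counts = { v1: 0, v2: 0 };
for (let i = 0; i < 10000; i += 1) {
  counts[pickSubset(routes, Math.random() * 100)] += 1;
}
console.log(counts);
```

Taking the roll as a parameter keeps the function deterministic, which makes the boundaries (74.9 versus 75) easy to test.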

We could apply these conditions to individual microservices, giving teams the power to run multiple versions of an application with Istio doing all the routing for them.

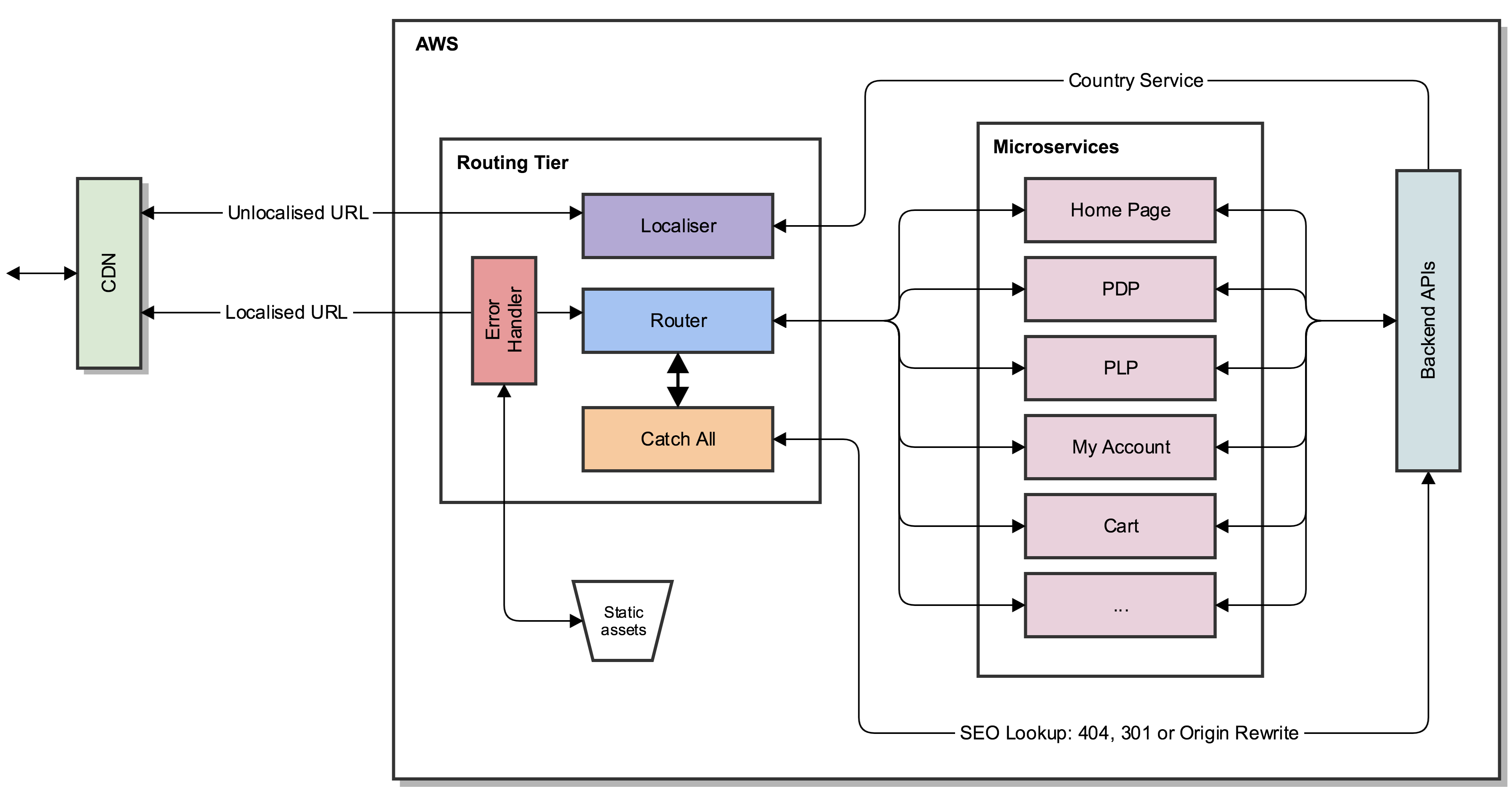

Where does Locer come into all of this? Think back to the original architecture diagram.

Locer is the ingress for all the microservices. The configuration that matches a request to a service is shipped as a Helm chart:

    localised:
      homepage:
        route: /
        service: http://((.Env.RELEASE_NAME))-homepage

The problem is we now can’t use istio-ingress-gateway to select which virtual service a request should go to, as Locer is effectively hardcoded to one service. So any service behind Locer will miss out on the powerful traffic management within Istio.

You might be thinking: why not remove Locer and let Istio handle routing? This makes sense until you revisit our original requirements:

Localisation: if the URL was not localised, add a brand-specific localisation pattern to the URL and 302 the customer to that new URL

Routing: based on the inbound URL, proxy the request to the correct microservice

Serve errors: if the proxied request returned either a 4xx or a 5xx, let the routing tier send the correct error page in the correct language

Catch all: if for some reason the inbound URL doesn’t match a pattern for a microservice, try to return some relevant content
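The four requirements above amount to a small decision procedure, which is why plain routing rules are not enough. The sketch below is one way to express it; the locale list, default locale, route patterns and function names are all made up for illustration and are not Locer’s real configuration or code.

```javascript
// Illustrative configuration: the locales, default locale and route table are made up.
const config = {
  locales: ['en-gb', 'fr-fr'],
  defaultLocale: 'en-gb',
  routes: [
    { pattern: /^\/$/, service: 'homepage' },
    { pattern: /^\/products(\/|$)/, service: 'product-listing' },
  ],
};

// Decide what the routing tier should do with an inbound path.
function decide(path, { locales, defaultLocale, routes }) {
  const [, first = '', ...rest] = path.split('/');
  const locale = first.toLowerCase();

  // Localisation: no valid locale prefix, so 302 to a localised URL.
  if (!locales.includes(locale)) {
    const suffix = path === '/' ? '' : path;
    return { action: 'redirect', status: 302, location: `/${defaultLocale}${suffix}` };
  }

  // Routing: match the rest of the path against the route table.
  const remainder = `/${rest.join('/')}`;
  const match = routes.find(({ pattern }) => pattern.test(remainder));
  if (match) {
    return { action: 'proxy', service: match.service, locale, path: remainder };
  }

  // Catch all: nothing matched, so fall back to some relevant content.
  return { action: 'catchAll', locale };
}

// Serve errors: a 4xx or 5xx from upstream is replaced by a localised error page.
function shouldServeErrorPage(statusCode) {
  return statusCode >= 400 && statusCode <= 599;
}

console.log(decide('/products/shoes', config));
console.log(decide('/fr-fr/products/shoes', config));
console.log(decide('/en-gb/unknown', config));
```

Because the decision is a pure function of the path and the configuration, it can be unit tested without starting a server, which is the kind of testability the next paragraph refers to.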

It’s not just routing that is required; we also need some scripting and the ability to test the code.

Introducing WebAssembly into Envoy and Istio

In March 2020 it was announced that we would be able to extend Envoy proxies, and run them as sidecars, using WebAssembly.

This would essentially allow Locer to move from an ingress to a sidecar for every microservice. We could then take advantage of Istio’s advanced traffic management throughout the stack and not just on Locer.

This change does look really tempting as it would align us more closely with the service mesh architecture. To create a Wasm module, you need a language that can compile to a WebAssembly binary, bundling a garbage collector where the language requires one. Certain languages, like Scala and Elm, have yet to compile to WebAssembly, but we can use C, C++, Rust, Go, Java and C# compilers instead.

As mentioned before, this application is used and owned by the frontend teams, and we wanted to use JavaScript to take advantage of their knowledge of the ecosystem and to avoid context switching. So what is most interesting for me is AssemblyScript.

This would allow developers using TypeScript to migrate Locer into an Envoy sidecar proxy while retaining their existing development and testing toolchain.

Conclusion

All of the original requirements for Locer were delivered, and lots of powerful new features were added over the last two years. Frontend developers are now empowered to own and change routing rules with confidence. Most importantly, it has never been the cause of a P1 issue. It reliably responds to every request for three large websites while also being cheap and performant.

Since it went live, our standards, along with the industry’s standards and demands, have changed, and Locer in its current incarnation was not built around the service mesh architecture. The thing to remember when writing software is that this is OK. Things change and move forward, and it’s important not to be too precious or egotistical about code: it solves a problem for the moment in time you need it to, and when it’s right to change you should embrace that.

I can’t say what the future holds for service mesh as a technology but, looking at it, moving Locer into a sidecar using WebAssembly does seem the right decision. However, the technology is still VERY new and I would like to see the direction it goes in; we do not have a need or requirement to transition as of yet.

We have a solution for A/B testing without using Istio’s weighted traffic management (something I will write about soon), so for now Locer will live on using Node, and we couldn’t be happier with it.

Original article: https://medium.com/ynap-tech/microservices-solving-a-problem-like-routing-2020-update-e623adcc3fc1
