Consuming Kafka messages in Java

Hello guys! Today I want to talk about producing and consuming messages with Java, Spring, Apache Camel and Kafka. Many applications today use event streaming and message-publishing systems to communicate with each other. One of the most recent I've used is Apache Kafka, a distributed streaming platform that makes publishing and subscribing to topics simple and delivers great performance by parallelizing consumers. This article is for anyone who wants to use Kafka, perhaps for a simple, basic use case, by relying on the abstraction provided by another framework: Apache Camel.

Apache Camel is an enterprise integration framework (I like to call it the integration Swiss Army knife) that ships hundreds of ready-to-use components for integrating with libraries, frameworks and technologies widely used in the enterprise and open-source worlds.

Goal

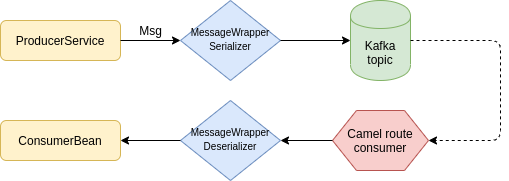

In my last project, I used Apache Camel to give the project the routing flexibility we needed, and along the way I also used the abstraction Camel offers for working with Kafka topics. Our goal was to give our microservices a shared way to produce and consume messages on any Kafka topic without worrying about the nature of each message, using an abstraction model that encapsulates every kind of message we might exchange.

The model: MessageWrapper

First of all, we started by defining a single model whose purpose is to abstract every message we exchange. With this in mind, we defined the MessageWrapper class.

The MessageWrapper model has the following fields:

- timestamp: when the message was published to the Kafka topic
- callerModule: information about who published the message (if needed)
- messageType: a custom field that identifies, in terms of Java classes, the type of message exchanged over Kafka topics
- payload: a JSON string encapsulating the actual message being exchanged
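A minimal sketch of what the MessageWrapper class could look like. The field types are assumptions: timestamp is stored here as epoch milliseconds, and the MessageType constants STRING and ITEM are hypothetical names for the two kinds of message described below. The no-argument constructor and setters are included because JSON libraries such as Jackson typically need them to rebuild the object on the consumer side.

```java
// Hypothetical constant names for the two kinds of message (plain strings and items).
enum MessageType {
    STRING,
    ITEM
}

// Sketch of the envelope wrapped around every message sent to a Kafka topic.
class MessageWrapper {
    private long timestamp;          // publication time as epoch milliseconds (assumed type)
    private String callerModule;     // optional: which module published the message
    private MessageType messageType; // which Java type the payload represents
    private String payload;          // JSON representation of the actual message

    // No-arg constructor and setters let a JSON library rebuild the object.
    public MessageWrapper() {
    }

    public MessageWrapper(long timestamp, String callerModule, MessageType messageType, String payload) {
        this.timestamp = timestamp;
        this.callerModule = callerModule;
        this.messageType = messageType;
        this.payload = payload;
    }

    public long getTimestamp() { return timestamp; }
    public void setTimestamp(long timestamp) { this.timestamp = timestamp; }

    public String getCallerModule() { return callerModule; }
    public void setCallerModule(String callerModule) { this.callerModule = callerModule; }

    public MessageType getMessageType() { return messageType; }
    public void setMessageType(MessageType messageType) { this.messageType = messageType; }

    public String getPayload() { return payload; }
    public void setPayload(String payload) { this.payload = payload; }

    @Override
    public String toString() {
        return "MessageWrapper{timestamp=" + timestamp + ", callerModule=" + callerModule
                + ", messageType=" + messageType + ", payload=" + payload + "}";
    }
}
```

Keeping the payload as a JSON string means the envelope itself never needs to know the concrete Java type; messageType carries that information instead.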

Then we have the MessageType enum. Through this enumeration, we state that we can exchange two types of messages: simple strings and items (more complex objects). Just to give you an idea, the Item class could simply hold a code and a name.
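Following the test data used later in the article (an item with code "A" and name "first item"), a minimal sketch of Item could be a plain Java object with those two fields. The field names code and name are assumptions; equals and hashCode make it easy to compare an item before and after a JSON round trip.

```java
import java.util.Objects;

// Sketch of a more complex payload type; field names are assumed.
class Item {
    private String code;
    private String name;

    // No-arg constructor and setters for JSON (de)serialization.
    public Item() {
    }

    public Item(String code, String name) {
        this.code = code;
        this.name = name;
    }

    public String getCode() { return code; }
    public void setCode(String code) { this.code = code; }

    public String getName() { return name; }
    public void setName(String name) { this.name = name; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Item)) return false;
        Item other = (Item) o;
        return Objects.equals(code, other.code) && Objects.equals(name, other.name);
    }

    @Override
    public int hashCode() {
        return Objects.hash(code, name);
    }

    @Override
    public String toString() {
        return "Item{code=" + code + ", name=" + name + "}";
    }
}
```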

The ProducerService

Now we need a central place that wraps every message headed for a Kafka topic in a MessageWrapper, and this place is the ProducerService class. We called it “Producer” because it is only invoked when producing a message to Kafka, never when consuming one.

As I did with the models, I'll walk you through the parts of this class. The central component is Camel's ProducerTemplate: a generic component that publishes, that is, sends, an object (which we also call the payload) to a specific endpoint; in our case, the endpoint is the one identifying the Kafka topic.

The ProducerService class has two sendBody methods. The simpler one sends a payload as-is, directly to the Camel endpoint; the other one lets us exchange messages in a standard form and performs the following steps:

- takes a payload and converts it to JSON
- uses the encapsulateMessage method to build the MessageWrapper object
- sends the MessageWrapper object to the Camel endpoint for the Kafka topic

Therefore, with this class we can take any type of message, encapsulate it in a MessageWrapper object and publish it to a Kafka topic.
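A minimal, dependency-free sketch of the two sendBody methods and encapsulateMessage described above. To keep it self-contained, the Camel ProducerTemplate is replaced by a BiConsumer<String, Object>, and the JSON conversion is a pluggable Function<Object, String> standing in for a library such as Jackson. The compact MessageWrapper repeats the fields listed earlier; the constructor parameters, the STRING/ITEM constant names and the callerModule value are assumptions.

```java
import java.util.function.BiConsumer;
import java.util.function.Function;

enum MessageType { STRING, ITEM } // hypothetical constant names

// Compact stand-in for the MessageWrapper model described earlier.
class MessageWrapper {
    final long timestamp;
    final String callerModule;
    final MessageType messageType;
    final String payload;

    MessageWrapper(long timestamp, String callerModule, MessageType messageType, String payload) {
        this.timestamp = timestamp;
        this.callerModule = callerModule;
        this.messageType = messageType;
        this.payload = payload;
    }
}

class ProducerService {
    private final BiConsumer<String, Object> sender;   // stand-in for ProducerTemplate::sendBody
    private final Function<Object, String> jsonWriter; // stand-in for a JSON library
    private final String callerModule;                 // name of the publishing module (assumed)

    ProducerService(BiConsumer<String, Object> sender, Function<Object, String> jsonWriter, String callerModule) {
        this.sender = sender;
        this.jsonWriter = jsonWriter;
        this.callerModule = callerModule;
    }

    // Simpler variant: sends the payload to the endpoint as it is.
    public void sendBody(String endpointUri, Object payload) {
        sender.accept(endpointUri, payload);
    }

    // Standard variant: payload -> JSON -> MessageWrapper -> endpoint.
    public void sendBody(String endpointUri, Object payload, MessageType messageType) {
        String json = jsonWriter.apply(payload);
        MessageWrapper wrapper = encapsulateMessage(json, messageType);
        sender.accept(endpointUri, wrapper);
    }

    // Builds the envelope, stamping it with the current time.
    MessageWrapper encapsulateMessage(String json, MessageType messageType) {
        return new MessageWrapper(System.currentTimeMillis(), callerModule, messageType, json);
    }
}
```

In a Camel application, the sender could be producerTemplate::sendBody, since ProducerTemplate has a sendBody(String endpointUri, Object body) overload with exactly that shape. A JSON writer built on Jackson's ObjectMapper.writeValueAsString would need a small lambda wrapper, because that method throws the checked JsonProcessingException.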

Message Serialization and Deserialization

When producing and consuming messages on a Kafka topic, we can specify a custom serializer as well as a custom deserializer. Since our goal is to exchange messages in a single, interchangeable format, this is exactly the case for custom serialization and deserialization components.

We then implemented a serializer, the MessageWrapperSerializer class, whose only responsibility is to convert the MessageWrapper into a JSON string through its serialize method.

We also implemented the matching deserializer, the MessageWrapperDeserializer class. Its deserialize method takes the JSON consumed from the Kafka topic, converts it into a MessageWrapper object and extracts the payload we are interested in.
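The sketch below mirrors the method signatures of Kafka's Serializer and Deserializer interfaces (serialize(String topic, T data) and deserialize(String topic, byte[] data)). To stay dependency-free, it does not implement those interfaces and takes the JSON conversion as a pluggable function. In a real project the classes would implement org.apache.kafka.common.serialization.Serializer<MessageWrapper> and Deserializer<String> and would most likely use a JSON library such as Jackson. The compact MessageWrapper and the STRING/ITEM constant names are assumptions.

```java
import java.nio.charset.StandardCharsets;
import java.util.function.Function;

enum MessageType { STRING, ITEM } // hypothetical constant names

// Compact stand-in for the MessageWrapper model described earlier.
class MessageWrapper {
    final long timestamp;
    final String callerModule;
    final MessageType messageType;
    final String payload;

    MessageWrapper(long timestamp, String callerModule, MessageType messageType, String payload) {
        this.timestamp = timestamp;
        this.callerModule = callerModule;
        this.messageType = messageType;
        this.payload = payload;
    }
}

// Same shape as Kafka's Serializer<MessageWrapper>#serialize(String, MessageWrapper).
class MessageWrapperSerializer {
    private final Function<MessageWrapper, String> toJson;

    MessageWrapperSerializer(Function<MessageWrapper, String> toJson) {
        this.toJson = toJson;
    }

    public byte[] serialize(String topic, MessageWrapper data) {
        if (data == null) {
            return null; // nothing to encode
        }
        return toJson.apply(data).getBytes(StandardCharsets.UTF_8);
    }
}

// Same shape as Kafka's Deserializer<String>#deserialize(String, byte[]):
// rebuilds the wrapper and hands only its payload on to the consumer.
class MessageWrapperDeserializer {
    private final Function<String, MessageWrapper> fromJson;

    MessageWrapperDeserializer(Function<String, MessageWrapper> fromJson) {
        this.fromJson = fromJson;
    }

    public String deserialize(String topic, byte[] data) {
        if (data == null) {
            return null;
        }
        MessageWrapper wrapper = fromJson.apply(new String(data, StandardCharsets.UTF_8));
        return wrapper.payload;
    }
}
```

Returning only the payload from the deserializer is what lets the consumer side ignore the envelope entirely: whatever Camel receives as the message body is already the message of interest.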

With all of these classes in place, what is left to do? The configuration, and running the example.

Environment configuration

To speed up the environment setup, I used Docker with a docker-compose.yml file that starts Zookeeper and Kafka.

With this configuration, it is quite fast to set up the environment and get Zookeeper and Kafka running. Note: to start the environment, open a terminal in the project folder and run:

`docker-compose up`

For all the other configuration, such as the Maven dependencies, or for the complete code of this article, see my personal GitHub repository for the project: https://github.com/dariux2016/template-projects/tree/master/camel-kafka-producer

Kafka configuration

Besides the environment, at the application level we have to configure the parameters needed to communicate correctly with Kafka. For this, we created an application.yml file.

In this configuration, we set:

- the connection to the Kafka brokers
- some Kafka producer and consumer properties, such as our custom message serializer and deserializer
- the base Kafka URI for the topic of interest, which here I've named EXAMPLE-TOPIC
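As a rough illustration of how settings like these end up in a Camel Kafka endpoint, this hypothetical helper assembles a URI of the form kafka:TOPIC?option=value&.... It assumes the Camel 3.x option names brokers, groupId, valueSerializer and valueDeserializer (older Camel 2.x releases named some of these differently); the broker address, group id and com.example class names are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: builds a Camel Kafka endpoint URI ("kafka:topic?opt=value&...")
// from the kind of settings kept in application.yml.
class KafkaEndpointUri {

    static String build(String topic, Map<String, String> options) {
        StringJoiner query = new StringJoiner("&");
        options.forEach((key, value) -> query.add(key + "=" + value));
        return options.isEmpty() ? "kafka:" + topic : "kafka:" + topic + "?" + query;
    }

    static Map<String, String> exampleOptions() {
        Map<String, String> options = new LinkedHashMap<>(); // keeps insertion order in the URI
        options.put("brokers", "localhost:9092");                                          // Kafka broker(s)
        options.put("groupId", "example-group");                                           // consumer group
        options.put("valueSerializer", "com.example.kafka.MessageWrapperSerializer");      // producer side
        options.put("valueDeserializer", "com.example.kafka.MessageWrapperDeserializer");  // consumer side
        return options;
    }
}
```

Keeping the full URI in configuration means the same route can be pointed at a different topic or broker without touching any code.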

Camel configuration

Everything we have seen so far is oriented toward producing messages (except for the MessageWrapperDeserializer component). Now let's see how to set up a route that consumes, from the Kafka topic, the MessageWrapper objects we published through the ProducerService.

Inside the src/main/resources folder, we can create a folder named “camel” in which to place XML files that are loaded as Camel routes. A Camel route that consumes a message is simple to write.

When the route starts, its URI is filled in from the properties configured in application.yml. Each message consumed from that endpoint is passed to a component that simply consumes the messages arriving on the Kafka topic: the ConsumerBean.

A consumer bean is nothing more than a Spring component class.
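A minimal sketch of such a consumer bean. In the Spring application it would be annotated with @Component so the route can reference it by bean name; the annotation is left out here to keep the example free of dependencies. The method name consume and the in-memory list are assumptions: the list only exists so the example can be checked, and naming the method in the route (for example with the method attribute of the XML bean element) avoids any doubt about which public method Camel invokes.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.logging.Logger;

// Would carry Spring's @Component annotation in the real application.
class ConsumerBean {
    private static final Logger LOG = Logger.getLogger(ConsumerBean.class.getName());
    private final List<Object> consumed = new ArrayList<>();

    // Receives the exchange body, i.e. the payload extracted by MessageWrapperDeserializer.
    public void consume(Object body) {
        LOG.info(() -> "Consumed message: " + body);
        consumed.add(body);
    }

    // Read-only view of what has been consumed so far (for checking the example).
    public List<Object> getConsumed() {
        return Collections.unmodifiableList(consumed);
    }
}
```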

Tests

After all the implementation work, I wrote a simple test class with which I ran the tutorial and got everything working. With this test, I published two messages to the Kafka topic:

- a string “hello world”
- an item with code “A” and name “first item”

Both are wrapped in a MessageWrapper and published to Kafka. Once the Camel route starts consuming, its configuration invokes the MessageWrapperDeserializer, which extracts the payload and puts it in the body of the Camel Exchange. The ConsumerBean then simply takes the body and consumes it.

Well, that's it. I hope you found this story interesting; if you have any questions or suggestions, please leave me a comment.
