AI music composition algorithms

The speculative-fiction writer Philip K. Dick used amphetamines and other stimulants to transform himself into a 24/7 writing machine. Powered by chemicals, he churned out 28 novels and more than 132 short stories (many of these works used drugs as subject matter, including the novel “A Scanner Darkly”). Nor was he alone: If pulp writers didn’t churn out as much copy as possible, they didn’t eat, and if that meant swallowing pills so you could write for two days straight, so be it.

In some ways, the writing business hasn’t changed much in the past century. For thousands of writers, the volume of copy you generate is proportional to how much you earn. Drugs are still a way to power through — I know more than one journalist or blogger who developed a nasty Adderall habit — but often it’s just a combination of caffeine and desperation.

I’m a journalist and editor who also writes pulp fiction on the side, so I’m as aware of the marketplace dynamics as anyone else in the writing business. Over the past year, I’ve been keeping an eye on the evolution of A.I. text generation, which is touted (by businesses) as a way of generating tons of content on the cheap, while derided (by writers) as a potential job killer.

One of the more prominent A.I. text generators has been GPT-2, a “large-scale unsupervised language model” created by OpenAI, a semi-nonprofit (it’s complicated) that wants A.I. and machine-learning tools used in virtuous ways. The relatively new GPT-3 is a further refinement of the underlying technology.

Trained on a dataset of 8 million web pages and built with 1.5 billion parameters, GPT-2 was long touted as capable of achieving “state-of-the-art performance on many language modeling benchmarks.” OpenAI initially refused to unleash it into the wild, fearful that it would be used to generate mountains of “fake news.”

Last year, I tried an experiment where I fed GPT-2 a selection of opening lines from some of history’s greatest literary works (including Jane Austen’s “Pride and Prejudice”). My conclusion at the time was that the algorithm was capable of sticking with the subject matter for a few lines, but quickly became discombobulated and nonsensical. In other words, although A.I. is already capable of writing short news stories according to a fixed template (such as breakdowns of quarterly financial results), it didn’t seem like writers of longform and fiction pieces really had anything to fear.

This week, I decided to see how the technology had evolved since my last run-through. Although GPT-3 is out in the ecosystem, and testable, I wanted to try something with the well-established GPT-2: A custom generator.

A standard generator might have scraped text from millions upon millions of web pages in order to power itself, but the results are often mixed: For example, you could feed a GPT-powered platform a couple of sentences of Jane Austen, only to have it spit back a mix of 2020 election news, gossip about 18th-century British landed gentry, and a mangled Wikipedia entry.

A custom generator, on the other hand, allows you to provide the examples that the system uses to train itself and generate text. InferKit, created by Adam Daniel King, is an evolution of the ultra-popular Talk to Transformer, a public-facing GPT-powered tool that earned a lot of press from Wired and other venues. It also allows you to upload .txt or CSV files, which it then uses as the basis for its custom generator (uploads are capped at 20 MB or 20,000 documents).
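
For anyone repeating the experiment, a quick pre-flight check on the corpus file might look something like the sketch below. It is not InferKit's own tooling. The function names are hypothetical, "20 MB" is assumed to mean 20 MiB, and a "document" is assumed to be any block of text separated by a blank line, which may not be how the service actually counts them.

```python
# Limits quoted in the article. How the service really measures them is an assumption.
MAX_BYTES = 20 * 1024 * 1024   # assumes "20 MB" means 20 MiB
MAX_DOCUMENTS = 20_000


def corpus_stats(text: str) -> dict:
    """Return byte, word, and document counts for a plain-text corpus."""
    # Assumption: one "document" per blank-line-separated block of text.
    documents = [block for block in text.split("\n\n") if block.strip()]
    return {
        "bytes": len(text.encode("utf-8")),
        "words": len(text.split()),
        "documents": len(documents),
    }


def fits_upload_limits(stats: dict) -> bool:
    """True if the corpus stays within both assumed caps."""
    return stats["bytes"] <= MAX_BYTES and stats["documents"] <= MAX_DOCUMENTS


if __name__ == "__main__":
    # Hypothetical two-story corpus standing in for a real .txt file.
    sample = "First story text goes here.\n\nSecond story text goes here."
    stats = corpus_stats(sample)
    print(stats, fits_upload_limits(stats))
```

Checking both caps together is the cautious reading of "20 MB or 20,000 documents": a file that passes both should be fine whichever one the service enforces.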

For the purposes of this experiment, I fed InferKit a .txt file containing ~500,000 words of my fiction published over the past ten years, including novels, short stories, and poetry. It took about an hour to digest that data and learn from it. I then fed it a lengthy selection from the crime-fiction novella I’m writing right now:

Miller returned to his tiny apartment on Avenue B. A monk would have found the space nicely minimalist. The mattress on the bedroom floor was the largest piece of furniture. His money, along with an assortment of guns, rested beneath the floorboards of the short hallway that separated the bedroom from the bathroom.

On his way home, Miller had stopped at an all-night burger place on Avenue A and ordered the Big Jumbo Delight, two patties slathered with cheese and horseradish mayonnaise. He sat on the mattress, relishing each greasy swallow.

The Ricky deal was a mess, because everything Ricky did was a mess. But how had he managed to score that enormous house? Conmen dreamed of that big score, and virtually all of them failed to land it. Yet Ricky, dumb Ricky, had somehow tricked his way into a mansion.

Chewing the last bite of burger, Miller told himself that he would return to the townhouse in the morning, learn whatever there was to learn. Ten thousand dollars was nothing to wave off. And if Ricky was promising him that amount, it meant there was a lot more money in the pot.

Stripping off his clothes, Miller lay back on the bed, his hands folded behind his head. Pale light from the streetlamps trickled past the barred windows, throwing a maze of shadows over his body. By focusing on his breathing and the faint pulse of his heart, he could drive away his worries about fingerprints and the clown and his patterns and Ricky, drive it all far enough away that he could drift off…

Creak.

Oh, you bastard.

Creak. Creak. Creak.

That old man knew he was home.

Creak-creak-creak-creak.

(It’s a first draft; go easy on me.)

Here’s what an A.I. trained on ten years of my writing spat back as the next few paragraphs (I’ve inserted some paragraph breaks in order to give it a human-like flow):

The clock on the far wall chimed eight minutes too late, the door burst open, and the old man stumbled into the living room. There was a chair on the sofa, the curtains drawn. A pair of sunglasses rested on the coffee table, set on the floor.

Rick was nowhere in sight. Miller could hear a faint howl through the walls. The old man stumbled into the coffee table, leaning against it, startled at Miller’s actions. Miller sensed the fear in the man’s eyes, real, but the man had not seen him and yet. Miller waited for his wand, which never did arrive.

The old man, his shirt torn, his jeans ripped asunder, walked over to the couch. Rising to his knees, he bent down, brushing against the back of Miller’s neck with his fingertips. That’s a little rough, Miller mused.

It was a long way from Grandma’s farmhouse, and Grandma knew it, and you shouldn’t do that to people, the old man thought.

Taking the older man’s hand in his own, Miller took the sunglasses from the coffee table, stuffing them into his mouth. “Can I get a cup of coffee?” Miller asked. “You say I have a parole board?”

The old man looked at Miller with dark eyes. “What is that?”

Miller blinked. “You on parole, are you?”

Miller studied the old man’s features, reading his tense face. Dark circles under the dark eyes. And the old man’s mouth. Miller stopped when he noticed blood leaking from the corner of the man’s mouth. The old man reached into his shirt and yanked out the knife with a sad little whine, all purple and bony. Miller was certain the old man had seen him, though the man had none of that violence, no stalking in his victim’s mind.

The old man brought the knife up and bit into Miller’s cheek, hard. Miller, looking at the old man as he banged on the table, only saw a good man standing, blood running down his cheeks. Miller’s throat clenched painfully, hard.

“What is it?” the old man said, spitting the knife out.

Obviously, none of that is what I actually intended to write next. It’s not even close, unless I wanted to write some kind of horrific mashup of David Lynch and Robert Coover’s underrated surrealist classic Ghost Town. But here’s the startling thing: It’s a pretty good imitation of my prose style, even if the action is nonsensical. If you want to be charitable, you could describe the GPT-2/InferKit results as “dreamlike,” in the same way that dreams proceed without any adherence to plot mechanics or even basic physics. But if you’re willing to put concerns about logic aside, there’s a smoothness here that suggests A.I. is progressing.
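
To get a feel for why predicted text can sound right sentence by sentence while the scene falls apart, here is a toy word predictor. To be clear, this is not how GPT-2 or InferKit works: GPT-2 is a large neural network, while this sketch is a simple bigram (Markov chain) model swapped in purely for illustration. The function names and the sample corpus are hypothetical.

```python
import random
from collections import defaultdict


def build_bigram_model(text: str) -> dict:
    """Map each word to the list of words that follow it in the training text."""
    words = text.split()
    model = defaultdict(list)
    for current, following in zip(words, words[1:]):
        model[current].append(following)
    return dict(model)


def generate(model: dict, seed_word: str, length: int, rng: random.Random) -> str:
    """Extend seed_word by repeatedly sampling a word seen after the current one."""
    output = [seed_word]
    for _ in range(length - 1):
        candidates = model.get(output[-1])
        if not candidates:  # dead end: this word never had a successor in training
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


if __name__ == "__main__":
    # Hypothetical miniature corpus standing in for ten years of fiction.
    corpus = (
        "the old man walked to the couch and the old man sat on the table "
        "and Miller looked at the old man and Miller sat on the couch"
    )
    model = build_bigram_model(corpus)
    print(generate(model, "the", 15, random.Random()))
```

Each word is chosen by looking only at the word before it, so every adjacent pair is something the training text really contained, yet nothing keeps track of who is sitting where. A model like GPT-2 looks at far more context than one word, but the sunglasses in the mouth suggest a related gap between local fluency and overall sense.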

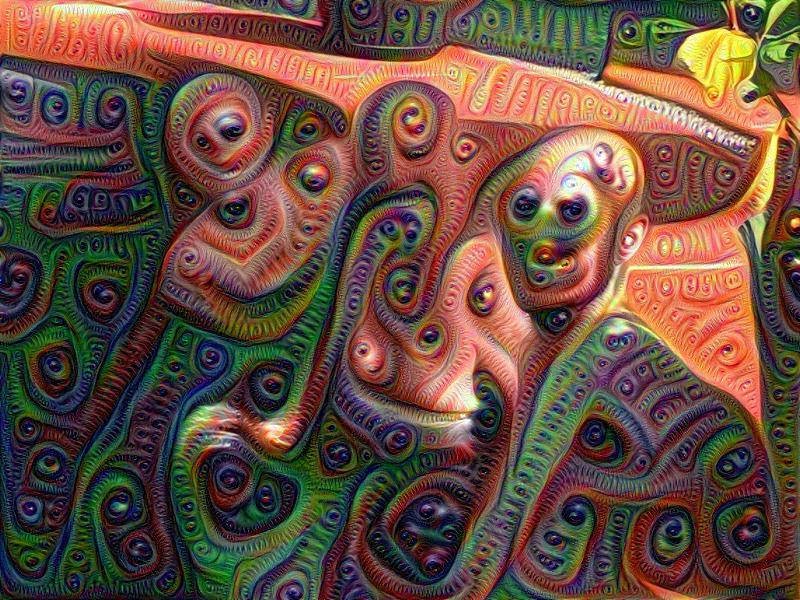

In many ways, this experiment echoes Google’s Deep Dream, which attempted to use a neural net to “learn” images. That resulted in some very trippy, dreamlike pictures. For example, this is three men in a pool:

Obviously, there are still substantial roadblocks before A.I. can comfortably take over fiction writing from human beings. A “general” A.I. (i.e., a “human-like” one; think HAL in 2001) would know that a human shouldn’t stuff sunglasses in their mouth. But these specialized, learning A.I.s that are filling our current world have no idea of existence beyond their highly specialized input; there’s no meta-awareness, no sense of structure.

And that sense of structure, of the broader world, is what is going to protect fiction writers for quite some time to come. Writers must make intuitive leaps; they (hopefully) have an instinctive sense of their work’s structure and its ultimate goal. Or as Nabokov put it, when discussing the writing of Lolita:

“These are the nerves of the novel. These are the secret points, the subliminal co-ordinates by means of which the book is plotted — although I realize very clearly that these and other scenes will be skimmed over or not noticed, or never even reached.”

These are nuances that necessarily elude the machines. You could upload all of Nabokov or Philip K. Dick to a platform running GPT-2 or GPT-3, and you might get a facsimile of their style and tone, but you wouldn’t get the structure or creativity. Humans writing press releases might have good reason to be frightened for their jobs over the next five years, but novelists may not have cause for concern for decades, if ever.

One marvels, though, at what Dick, high and feverish and smacking away at his typewriter keys, might have done with the concept of text predictors. Do androids dream of becoming great novelists?
