Fast and Less Restricted Style Transfer

Neural Style Transfer, Evolution

Introduction

A pastiche is an artistic work that imitates the style of another one. Style transfer can be defined as finding a pastiche image p whose content is similar to that of a content image c but whose style is similar to that of a style image s.


Background

If you are familiar with optimization-based style transfer and feed-forward style transfer networks, feel free to skip this section.

The neural style transfer algorithm proposes the following definitions:


Content Similarity: two images are similar in content if their high-level features, as extracted by a trained classifier, are close in Euclidean distance.


Style Similarity: two images are similar in style if their low-level features as extracted by a trained classifier share the same statistics.

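The two definitions above can be sketched in a few lines of plain Python. This is an illustration rather than the method of [R1]: the text does not say which statistics are compared, so the Gram matrix (channel-by-channel correlations) is an assumption here, and the function names and toy feature values are hypothetical. Features are represented as a list of channels, each a flat list of activations.

```python
import math

def content_distance(feat_a, feat_b):
    """Euclidean distance between two feature maps (channels x positions)."""
    return math.sqrt(sum((a - b) ** 2
                         for row_a, row_b in zip(feat_a, feat_b)
                         for a, b in zip(row_a, row_b)))

def gram_matrix(feat):
    """Channel-by-channel correlations, averaged over spatial positions.

    Assumption: the Gram matrix stands in for the "statistics" in the text.
    """
    n = len(feat[0])
    return [[sum(x * y for x, y in zip(ci, cj)) / n for cj in feat]
            for ci in feat]

def style_distance(feat_a, feat_b):
    """Euclidean distance between the Gram matrices of two feature maps."""
    gram_a, gram_b = gram_matrix(feat_a), gram_matrix(feat_b)
    return math.sqrt(sum((x - y) ** 2
                         for row_a, row_b in zip(gram_a, gram_b)
                         for x, y in zip(row_a, row_b)))

# Toy features: 2 channels x 4 spatial positions (made-up numbers).
feat = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]
# Same activations in reversed spatial order: the layout changes,
# but the channel statistics do not.
shuffled = [row[::-1] for row in feat]

print(content_distance(feat, shuffled))
print(style_distance(feat, shuffled))
```

Reversing the spatial order moves the features far apart in content distance while leaving the style distance at zero, which is the sense in which style ignores where things are in the image.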

Slow and Arbitrary Style Transfer

In its original formulation [R1], the neural style transfer algorithm proceeds as follows: starting from some initialization of p (e.g. c, or some random initialization), the algorithm adapts p to minimize the individual content and style loss functions described in Fig 1.


While the above algorithm is flexible (+), it is expensive (-) to run, because every new pastiche requires its own iterative optimization.


Fast and Restricted Style Transfer

To speed up the above process, a feed-forward convolutional network, termed a style transfer network T [R2], is introduced to learn the transformation. It takes a content image c as input and outputs the pastiche image p directly. The network is trained on many content images using the loss function described in Fig 2. At test time, the transformation network T transforms any content image so that it adopts the style of the single style image tied to that network.


Though inference is fast (+), the approach is restricted (-), since every individual style requires a separate network to be trained.


Less Restricted Feed-Forward Style Transfer Network

The previous feed-forward style transfer approach [R2] of building a separate network for each style ignores the fact that many styles probably share some degree of computation, and that this sharing is thrown away by training N networks from scratch when building a multi-style style transfer network. For instance, many impressionist paintings share similar brush strokes but differ in the color palette being used. In that case, it seems very wasteful to treat a set of N impressionist paintings as completely separate styles.


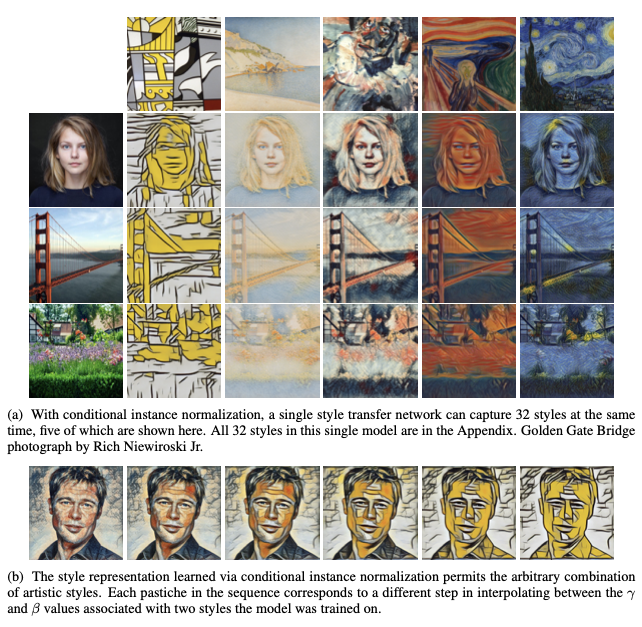

Dumoulin et al. [R3] train a single conditional style transfer network T(c, s) for N styles. Instead of learning a single set of affine parameters γ (scaling) and β (shifting), they propose a conditional instance normalization (CIN) layer that learns a different set of parameters γs and βs for each style s. During training, a style image and its index s are chosen at random from a fixed set of styles s ∈ {1, 2, …, S}. The content image is then processed by the style transfer network, with the corresponding γs and βs used in its CIN layers. Surprisingly, the network can generate images in completely different styles by using the same convolutional parameters but different affine parameters in the instance normalization (IN) layers.

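A minimal sketch of what such a layer computes, in plain Python, may help. It assumes each channel is normalized over its spatial positions and then scaled and shifted by the γ and β selected by the style index. The function names, the `eps` constant and the parameter values are hypothetical. In a real network γ and β would be learned, and the layer would sit between convolutions.

```python
import math

def instance_norm(channel, eps=1e-5):
    """Normalize one channel of one image to zero mean and unit variance."""
    mu = sum(channel) / len(channel)
    var = sum((x - mu) ** 2 for x in channel) / len(channel)
    return [(x - mu) / math.sqrt(var + eps) for x in channel]

def conditional_instance_norm(feat, style_idx, gammas, betas):
    """Apply the scale/shift pair that belongs to the chosen style.

    feat:   channels x positions activations of a single image
    gammas: styles x channels scaling parameters (one row per style)
    betas:  styles x channels shifting parameters (one row per style)
    """
    out = []
    for ch, channel in enumerate(feat):
        g, b = gammas[style_idx][ch], betas[style_idx][ch]
        out.append([g * x + b for x in instance_norm(channel)])
    return out

# Hypothetical parameters for S = 2 styles and C = 2 channels.
gammas = [[1.0, 1.0], [2.0, 0.5]]
betas = [[0.0, 0.0], [1.0, -1.0]]
feat = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]

out0 = conditional_instance_norm(feat, 0, gammas, betas)
out1 = conditional_instance_norm(feat, 1, gammas, betas)
print(out0[0])
print(out1[0])
```

Only the row of `gammas` and `betas` being read changes between styles. Everything else in the network, including all convolutional weights, is shared.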

The introduction of the CIN layer (Fig 4) allows a single network to learn style transfer for multiple styles. It exhibits the following properties:


The approach is flexible, yet its results are comparable to those of single-purpose style transfer networks. An added advantage is that one can stylize a single image into N painting styles with a single feed-forward pass using a batch size of N (Fig 5.a).


The model reduces each style image to a point in an embedding space (γs, βs), thus parsimoniously capturing the artistic style of a diversity of paintings. The embedding space representation permits one to arbitrarily combine artistic styles in novel ways not previously observed (Fig 5.b).

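One simple way to combine styles in this embedding space is to blend the per-style parameters before they are used in the CIN layers. The sketch below takes a convex combination of the (γ, β) rows. The blending rule, the function name `blend_styles` and the parameter values are assumptions for illustration, since the text only says that styles can be combined.

```python
def blend_styles(weights, gammas, betas):
    """Convex combination of per-style (gamma, beta) embeddings.

    weights: one non-negative weight per style, summing to 1
    gammas:  styles x channels scaling parameters
    betas:   styles x channels shifting parameters
    """
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    channels = len(gammas[0])
    gamma = [sum(w * g[ch] for w, g in zip(weights, gammas))
             for ch in range(channels)]
    beta = [sum(w * b[ch] for w, b in zip(weights, betas))
            for ch in range(channels)]
    return gamma, beta

# Hypothetical embeddings for S = 2 styles and C = 2 channels.
gammas = [[1.0, 1.0], [2.0, 0.5]]
betas = [[0.0, 0.0], [1.0, -1.0]]

# A quarter of style 0 mixed with three quarters of style 1.
gamma_mix, beta_mix = blend_styles([0.25, 0.75], gammas, betas)
print(gamma_mix, beta_mix)
```

The blended pair is then used in the normalization layers exactly as a single style's parameters would be, so no retraining is needed to try a new mixture.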

Conclusion

The above approach is fast yet flexible to a certain extent. One problem remains: to support a new style, you need to add it to the set of S styles and train the network again.


Translated from: https://towardsdatascience.com/fast-and-less-restricted-style-transfer-50366c43f672
