Naive Bayes Text Classification Algorithm

Algorithms From Scratch

Introduction

The Naive Bayes classifier is an eager learning algorithm that belongs to a family of simple probabilistic classifiers based on Bayes' Theorem.

Bayes' Theorem is, put simply, a principled way of calculating a conditional probability without the joint probability. Applied directly, it treats each input as potentially dependent on all other variables. To use it as a classifier, we remove this assumption and consider the variables to be independent of one another; this simplification of Bayes' Theorem for predictive modelling is referred to as the Naive Bayes classifier. In other words, Naive Bayes assumes that the presence of a predictor in a class is not related to the presence of any other predictor. This is a very strong assumption, given that predictors in real-world data are very unlikely not to interact.

If you are unfamiliar with the term, eager learning refers to a learning method in which the system aims to construct a general, input-independent target function during training. In contrast, a lazy learner such as K-Nearest Neighbors waits until a query is made before generalizing beyond the training data.
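To make the distinction concrete, here is a minimal sketch contrasting the two styles on one-dimensional toy data. The class names `EagerCentroidClassifier` and `LazyNearestNeighbor`, and the data, are hypothetical illustrations and not part of the original notebook. The eager model summarizes the training data into one mean per class at fit time; the lazy model only memorizes the data and does all of its work at query time.

```python
from collections import defaultdict


class EagerCentroidClassifier:
    """Eager learner: builds its model (one mean per class) during fit."""

    def fit(self, xs, ys):
        grouped = defaultdict(list)
        for x, y in zip(xs, ys):
            grouped[y].append(x)
        # Once the means are computed, the training data is no longer needed.
        self.centroids = {y: sum(v) / len(v) for y, v in grouped.items()}
        return self

    def predict(self, x):
        # Pick the class whose stored mean is closest to the query.
        return min(self.centroids, key=lambda y: abs(x - self.centroids[y]))


class LazyNearestNeighbor:
    """Lazy learner: fit only memorizes; generalization happens per query."""

    def fit(self, xs, ys):
        self.data = list(zip(xs, ys))
        return self

    def predict(self, x):
        # Scan every stored example to find the closest one.
        _, nearest_label = min(self.data, key=lambda pair: abs(x - pair[0]))
        return nearest_label


# Hypothetical toy data: two well-separated classes on a line.
xs = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]
ys = ["a", "a", "a", "b", "b", "b"]

eager = EagerCentroidClassifier().fit(xs, ys)
lazy = LazyNearestNeighbor().fit(xs, ys)
print(eager.predict(1.1), lazy.predict(5.1))
```

Naive Bayes falls on the eager side: after training it keeps only per-class statistics and priors.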

Naive Bayes (or Idiot Bayes) earned its name because the calculations for each class are simplified to make them tractable. Despite its naive design and oversimplified assumptions, the classifier has proven itself effective in many real-world scenarios, for both binary and multi-class classification.

For the full code used in this notebook…

Creating The Model

As stated earlier, if we are going to apply Bayes' Theorem as a conditional probability classification model (the Naive Bayes model), we need to simplify the calculation.

Before going into how we can simplify the model, here is a brief introduction to marginal probability, joint probability, and conditional probability:

Marginal Probability: the probability of an event irrespective of other random variables, for instance P(A), the probability of A occurring.

Joint Probability: the probability of two or more simultaneous events, for example P(A and B) or P(A, B).

Conditional Probability: the probability of one or more events given the occurrence of another event, for example P(A|B), which can be read as the probability of A given B.
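As a quick illustration of the three definitions, the sketch below computes each quantity from a small joint distribution over two binary events. The table values and the helper names (`marginal_a`, `marginal_b`, `conditional_a_given_b`) are made up for this example.

```python
# Hypothetical joint distribution P(A, B) over two binary events.
# Keys are (A, B) outcomes; the four probabilities sum to 1.
joint = {
    (True, True): 0.10,
    (True, False): 0.20,
    (False, True): 0.30,
    (False, False): 0.40,
}


def marginal_a(a):
    """P(A=a): sum the joint probabilities over every value of B."""
    return sum(p for (a_val, _), p in joint.items() if a_val == a)


def marginal_b(b):
    """P(B=b): sum the joint probabilities over every value of A."""
    return sum(p for (_, b_val), p in joint.items() if b_val == b)


def conditional_a_given_b(a, b):
    """P(A=a | B=b): the joint probability divided by the marginal of B."""
    return joint[(a, b)] / marginal_b(b)


print("P(A)     =", round(marginal_a(True), 4))
print("P(A, B)  =", round(joint[(True, True)], 4))
print("P(A | B) =", round(conditional_a_given_b(True, True), 4))
```

With these numbers P(A) is about 0.3 while P(A|B) is about 0.25, so knowing that B occurred changes the probability of A.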

A useful property of these probabilities is that we can use them to calculate one another. The joint probability can be calculated from the conditional probability; this is known as the product rule (see Figure 1).

An interesting fact about the product rule is that it is symmetric, meaning that P(A, B) = P(B, A). The conditional probability, on the other hand, is not symmetric: in general, P(A|B) != P(B|A). What we can do, however, is calculate the conditional probability from the joint probability (see Figure 2).
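The figures are not reproduced in this copy. Assuming they show the standard identities, the product rule (Figure 1) and the conditional probability written in terms of the joint probability (Figure 2) would read:

$$P(A, B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

$$P(A \mid B) = \frac{P(A, B)}{P(B)}$$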

There is one problem with Figure 2: the joint probability is often quite difficult to calculate, so when we want to work out the conditional probability we use an alternative method. That method is called Bayes' Rule or Bayes' Theorem, and it uses one conditional probability to work out the other (see Figure 3).

Note: We may also decide to use this alternative approach when the reverse conditional probability is available or easier to calculate.
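A small worked example may help here. Bayes' Theorem in its usual form is P(A|B) = P(B|A) P(A) / P(B); the sketch below applies it with made-up numbers, expanding P(B) with the law of total probability. The function name `bayes_posterior` and all values are assumptions for illustration only.

```python
def bayes_posterior(prior_a, likelihood_b_given_a, likelihood_b_given_not_a):
    """Return P(A | B) given P(A), P(B | A) and P(B | not A)."""
    # Evidence P(B), expanded with the law of total probability.
    evidence = (likelihood_b_given_a * prior_a
                + likelihood_b_given_not_a * (1.0 - prior_a))
    # Bayes' Theorem: reverse the conditional using the prior and evidence.
    return likelihood_b_given_a * prior_a / evidence


# Hypothetical numbers: A = "message is spam", B = "message contains 'free'".
posterior = bayes_posterior(
    prior_a=0.2,                     # P(A)
    likelihood_b_given_a=0.6,        # P(B | A)
    likelihood_b_given_not_a=0.05,   # P(B | not A)
)
print(round(posterior, 4))
```

Here the posterior works out to roughly 0.75, even though the prior was only 0.2, because the word is far more likely under A than under its complement.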

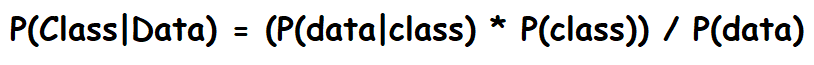

To name the terms in Bayes' Theorem, we must take into consideration the context in which the equation is used (see Figure 4).

Hence, we can restate Bayes' Theorem as…

To model this as a classification model we do this…
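The equations that belonged here were images and are missing from this copy. Assuming the conventional naming of the terms, and writing y for the class label and x_1, …, x_n for the features (notation chosen here, not taken from the original figures), the restated theorem and its classification form are usually written as:

$$\text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{evidence}}$$

$$P(y \mid x_1, \dots, x_n) = \frac{P(x_1, \dots, x_n \mid y)\,P(y)}{P(x_1, \dots, x_n)}$$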

However, this expression makes the calculation complex. To simplify it, we remove the assumption of dependence and treat each variable as independent, which simplifies our classifier.

Note: We drop the denominator (the probability of observing the data) because, for a given instance, it is the same constant for every class.
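In symbols, and again using y for the class and x_1, …, x_n for the features as assumed notation, the independence assumption turns the likelihood into a product of per-feature terms, and dropping the denominator leaves a proportionality:

$$P(y \mid x_1, \dots, x_n) \propto P(y) \prod_{i=1}^{n} P(x_i \mid y)$$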

And there you have it… well, not quite. This calculation is performed for each of the class labels, but we only want to know the class that is most probable for a given instance. Hence, we select the label with the largest probability as the classification for that instance. This decision rule is called Maximum A Posteriori (MAP). Now you have the Naive Bayes classifier.
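The MAP rule can be sketched in a few lines. The example below uses made-up priors and per-word likelihoods for two classes; the names `priors`, `likelihoods` and `map_predict` are hypothetical. Scores are summed in log space, which gives the same winner as multiplying the raw probabilities.

```python
import math

# Hypothetical class priors P(y) and per-feature likelihoods P(x_i | y).
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"free": 0.30, "meeting": 0.05},
    "ham": {"free": 0.02, "meeting": 0.20},
}


def map_predict(features):
    """Return the MAP label and the unnormalized log score of every label."""
    scores = {}
    for label, prior in priors.items():
        # log P(y) + sum_i log P(x_i | y); the evidence term is dropped.
        log_score = math.log(prior)
        for feature in features:
            log_score += math.log(likelihoods[label][feature])
        scores[label] = log_score
    # MAP decision: the label with the largest score wins.
    return max(scores, key=scores.get), scores


label, scores = map_predict(["free", "meeting"])
print(label)
```

For the features "free" and "meeting" the raw products are 0.4 × 0.30 × 0.05 = 0.006 for spam and 0.6 × 0.02 × 0.20 = 0.0024 for ham, so the rule selects spam.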

Chunking the Algorithm

- Segment the data by class, then compute the mean and variance of x in each class

- Calculate the probability using the Gaussian probability density function

- Get the class probabilities

- Get the final prediction

Implementation

We are going to use the iris dataset, and since the variables in this dataset are numeric, we will build a Gaussian Naive Bayes model.

Note: The different naive Bayes classifiers differ mainly by the assumptions they make regarding the distribution of P(Xi | y) (Source: Scikit-Learn Naive Bayes)

    import numpy as np
    import pandas as pd
    from sklearn.datasets import load_iris
    from sklearn.naive_bayes import GaussianNB
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split

    # loading the data
    iris = load_iris()
    X, y = iris.data, iris.target

    # splitting data into train and test sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1810)
    X_train.shape, y_train.shape, X_test.shape, y_test.shape

Output:

    ((120, 4), (120,), (30, 4), (30,))

Next, the scikit-learn implementation:

    # scikit-learn implementation
    nb = GaussianNB()
    nb.fit(X_train, y_train)
    sklearn_preds = nb.predict(X_test)

    print(f"sklearn accuracy:{accuracy_score(y_test, sklearn_preds)}")
    print(f"predictions: {sklearn_preds}")

Output:

    sklearn accuracy:1.0
    predictions: [0 0 2 2 0 1 0 0 1 1 2 1 2 0 1 2 0 0 0 2 1 2 0 0 0 0 1 1 0 2]

The Scikit-Learn implementation gives us a perfect accuracy score on inference. Let's build our own model and see if we can match it.

I have built a utility function, get_params, so that we can get some parameters of our training data.

    def get_params(X_train, y_train):
        """
        Function to get the number of examples, number of features and
        number of classes in the training data
        """
        num_examples, num_features = X_train.shape
        num_classes = len(np.unique(y_train))
        return num_examples, num_features, num_classes

    # testing utility function
    num_examples, num_features, num_classes = get_params(X_train, y_train)
    print(num_examples, num_features, num_classes)

Output:

    120 4 3

Our utility function works, so we can proceed to step one: getting the statistics (specifically the mean, variance, and priors) by class.

    def get_stats_by_class(X_train, y_train, num_examples=num_examples, num_classes=num_classes):
        """
        Get stats of dataset by the class
        """
        # dictionaries to store stats
        class_mean = {}
        class_var = {}
        class_prior = {}

        # loop through each class and get mean, variance and prior by class
        for cls in range(num_classes):
            X_cls = X_train[y_train == cls]
            class_mean[str(cls)] = np.mean(X_cls, axis=0)
            class_var[str(cls)] = np.var(X_cls, axis=0)
            class_prior[str(cls)] = X_cls.shape[0] / num_examples
        return class_mean, class_var, class_prior

    # output of function
    cm, var, cp = get_stats_by_class(X_train, y_train)
    print(f"mean: {cm}\n\nvariance: {var}\n\npriors: {cp}")

Output:

    mean: {'0': array([5.06111111, 3.48611111, 1.44722222, 0.25833333]), '1': array([5.90952381, 2.80714286, 4.25238095, 1.33809524]), '2': array([6.61904762, 2.97857143, 5.58571429, 2.02142857])}

    variance: {'0': array([0.12570988, 0.15564043, 0.0286034 , 0.01243056]), '1': array([0.26324263, 0.08542517, 0.24582766, 0.04045351]), '2': array([0.43678005, 0.10930272, 0.31884354, 0.0802551 ])}

    priors: {'0': 0.3, '1': 0.35, '2': 0.35}

We passed the num_classes and num_examples that we got from get_params to the function, as they are required to separate the data by class and calculate the priors by class. We now have almost enough information to work out the class probabilities, but not quite: we are dealing with continuous data, and a typical assumption is that the continuous values associated with each class are distributed according to a Gaussian distribution (Source: Wikipedia). As a result, we build a function to calculate the density function, which will help us calculate the probability from a Gaussian distribution.
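For reference, the function that follows works in log space. Assuming d features, and writing μ_{c,i} and σ²_{c,i} for the mean and variance of feature i in class c (notation chosen here, not in the original), the log of a product of independent Gaussian densities is:

$$\log P(x \mid y = c) = -\frac{d}{2}\log(2\pi) - \frac{1}{2}\sum_{i=1}^{d}\log \sigma_{c,i}^{2} - \frac{1}{2}\sum_{i=1}^{d}\frac{(x_i - \mu_{c,i})^{2}}{\sigma_{c,i}^{2}}$$

The first two terms do not depend on x, and the last term penalizes the squared distance of each feature from its class mean, scaled by the class variance.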

    def gaussian_density_function(X, mean, var, eps=1e-6):
        """
        Log of the Gaussian density of each row of X, treating features as
        independent. `var` is the per-feature variance (not the standard
        deviation); eps guards against division by zero.
        """
        num_features = X.shape[1]
        const = -num_features/2 * np.log(2*np.pi) - 0.5 * np.sum(np.log(var + eps))
        probs = 0.5 * np.sum(np.power(X - mean, 2)/(var + eps), 1)
        return const - probs

    gaussian_density_function(X_train, cm[str(0)], var[str(0)])

Output:

    array([-4.34046349e+02, -1.59180054e+02, -1.61095055e+02,  9.25593725e-01,
           -2.40503860e+02, -4.94829021e+02, -8.44007497e+01, -1.24647713e+02,
           -2.85653665e+00, -5.72257925e+02, -3.88046018e+02, -2.24563508e+02,
            2.14664687e+00, -6.59682718e+02, -1.42720100e+02, -4.38322421e+02,
           -2.27259034e+02, -2.43243607e+02, -2.60192759e+02, -6.69113243e-01,
           -2.12744190e+02, -1.96296373e+00,  5.27718947e-01, -8.37591818e+01,
           -3.74910393e+02, -4.12550151e+02, -5.26784003e+02,  2.02972576e+00,
           -7.15335962e+02, -4.20276820e+02,  1.96012133e+00, -3.00593481e+02,
           -2.47461333e+02, -1.60575712e+02, -2.89201209e+02, -2.92885637e+02,
           -3.13408398e+02, -3.58425796e+02, -3.91682377e+00,  1.39469746e+00,
           -5.96494272e+02, -2.28962605e+02, -3.30798243e+02, -6.31249585e+02,
           -2.13727911e+02, -3.30118570e+02, -1.67525014e+02, -1.76565131e+02,
            9.43246044e-01,  1.79792264e+00, -5.80893842e+02, -4.89795508e+02,
           -1.52006930e+02, -2.23865257e+02, -3.95841849e+00, -2.96494860e+02,
           -9.76659579e+01, -3.45123893e+02, -2.61299515e+02,  7.51925529e-01,
           -1.57383774e+02, -1.13127846e+02,  6.89240784e-02, -4.32253752e+02,
           -2.25822704e+00, -1.95763452e+02, -2.54997829e-01, -1.66303411e+02,
           -2.94088881e+02, -1.47028139e+02, -4.89549541e+02, -4.61090964e+02,
            1.22387847e+00, -8.22913900e-02,  9.67128415e-01, -2.30042263e+02,
           -2.90035079e+00, -2.36569499e+02,  1.42223431e+00,  9.35599166e-01,
           -3.74718213e+02, -2.07417873e+02, -4.19130888e+02,  7.79051525e-01,
            1.82103882e+00, -2.77364308e+02,  9.64732218e-01, -7.15058948e+01,
           -2.82064236e+02, -1.89898997e+02,  9.79605922e-01, -6.24660543e+02,
            1.70258877e+00, -3.17104964e-01, -4.23008651e+02, -1.32107552e+00,
           -3.09809542e+02, -4.01988565e+02, -2.55855351e+02, -2.25652042e+02,
            1.00821726e+00, -2.24154135e+02,  2.07961315e+00, -3.08858104e+02,
           -4.95246865e+02, -4.74107852e+02, -5.24258175e+02, -5.26011925e+02,
           -3.43520576e+02, -4.59462733e+02, -1.68243666e+02,  1.06990125e+00,
            2.04670066e+00, -8.64641201e-01, -3.89431048e+02, -1.00629804e+02,
            1.25321722e+00, -5.07813723e+02, -1.27546482e+02, -4.43687565e+02])

Here is a function to calculate the class probabilities…

    def class_probabilities(X, class_mean, class_var, class_prior, num_classes=num_classes):
        """
        calculate the (unnormalized, log-space) probability of each class given the data
        """
        num_examples = X.shape[0]
        probs = np.zeros((num_examples, num_classes))

        for cls in range(num_classes):
            prior = class_prior[str(cls)]
            probs_cls = gaussian_density_function(X, class_mean[str(cls)], class_var[str(cls)])
            # log likelihood + log prior
            probs[:, cls] = probs_cls + np.log(prior)
        return probs

Now we need to make a prediction using MAP, so let's put all these steps together in a predict function and output the class with the maximum probability.

    def predict(X_test, X_train, y_train):
        # the parameters must come from the training data, not the test data
        num_examples, num_features, num_classes = get_params(X_train, y_train)
        class_mean, class_var, class_prior = get_stats_by_class(
            X_train, y_train, num_examples, num_classes
        )
        probs = class_probabilities(X_test, class_mean, class_var, class_prior, num_classes)
        # MAP decision: pick the class with the largest score for each row
        return np.argmax(probs, 1)

    my_preds = predict(X_test, X_train, y_train)

    print(f"my predictions accuracy:{accuracy_score(y_test, my_preds)}")
    print(f"predictions: {my_preds}")

Output:

    my predictions accuracy:1.0
    predictions: [0 0 2 2 0 1 0 0 1 1 2 1 2 0 1 2 0 0 0 2 1 2 0 0 0 0 1 1 0 2]

As a sanity check…

    sklearn_preds == my_preds

Output:

    array([ True,  True,  True,  True,  True,  True,  True,  True,  True,
            True,  True,  True,  True,  True,  True,  True,  True,  True,
            True,  True,  True,  True,  True,  True,  True,  True,  True,
            True,  True,  True])

And that's the way the cookie crumbles!
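One detail worth noting: the scores used for the prediction are unnormalized log values, which is all the argmax needs. If actual probabilities that sum to 1 are wanted, a common approach is the log-sum-exp trick sketched below. The function name `normalize_log_scores` and the example scores are hypothetical, chosen to be on the same scale as the log densities printed earlier.

```python
import math


def normalize_log_scores(log_scores):
    """Turn unnormalized log scores into probabilities that sum to 1."""
    # Subtract the maximum first so that the largest term becomes exp(0) = 1
    # and the exponentials cannot all underflow to zero.
    top = max(log_scores)
    exps = [math.exp(score - top) for score in log_scores]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical log scores for one example across three classes.
log_scores = [-800.0, -801.0, -805.0]

# Exponentiating directly underflows: every term becomes exactly 0.0,
# so a naive normalization would divide by zero.
naive_terms = [math.exp(score) for score in log_scores]

probs = normalize_log_scores(log_scores)
print(naive_terms)
print([round(p, 4) for p in probs])
```

The ranking of the classes is unchanged by the normalization, so the MAP prediction stays the same.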

Pros

- Requires a small amount of training data to estimate the necessary parameters

- Extremely fast compared to more sophisticated methods

Cons

- Known to be a bad estimator (in the Scikit-Learn framework, the outputs from predict_proba are not to be taken too seriously)

- The assumption of independent predictors doesn't hold true (most of the time) in the real world

Wrap Up

You've now learnt about Naive Bayes classifiers and how to build one from scratch using Python. Yes, the algorithm makes heavily oversimplified assumptions, but it is still very effective in many real-world applications and is worth trying if you want very fast predictions.

Let's continue the conversation on LinkedIn…

Translated from: https://towardsdatascience.com/algorithms-from-scratch-naive-bayes-classifier-8006cc691493
