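While working on my SRP I found there was a lot more to write than I expected, so I decided to shelve it for a while. Then I noticed something in URP called RenderFeature. It looked like fun, so I used it to write a simple path tracer (PT) to see how well it would work. The result was surprisingly good, so I'm writing this post to record the process.

Cover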

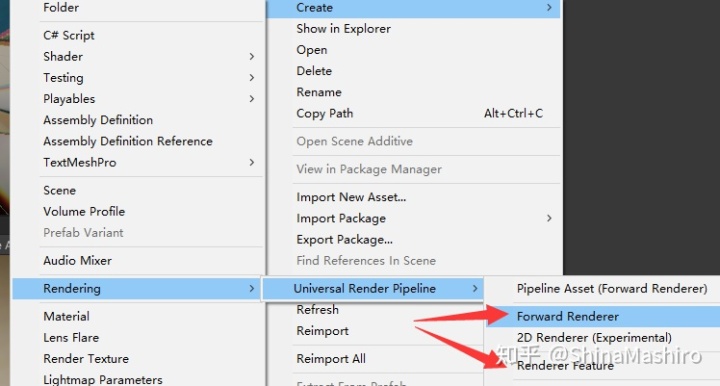

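0. Creating the RenderFeature

To use RenderFeature, you only need a recent version of Unity with URP. The first step is to create a RenderFeature as shown below. (Remember to drag the new RenderFeature into the ForwardRenderer asset.)

Once it has been created, it looks like this: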

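```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

public class MRTXRenderFeature : ScriptableRendererFeature
{
    class RayTracingPass : ScriptableRenderPass
    {
        // Called before Execute: set up render targets and their clear state here.
        public override void Configure(CommandBuffer cmd, RenderTextureDescriptor cameraTextureDescriptor)
        {
        }

        // The pass's rendering work goes here.
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
        }

        // Release anything allocated for this pass.
        public override void FrameCleanup(CommandBuffer cmd)
        {
        }
    }

    RayTracingPass mRayTracingPass;

    // Called when the feature is loaded (and again when its inspector values change), not every frame.
    public override void Create()
    {
        mRayTracingPass = new RayTracingPass();
        mRayTracingPass.renderPassEvent = RenderPassEvent.AfterRenderingOpaques;
    }

    // Called every frame for each camera: enqueue the pass built in Create().
    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        renderer.EnqueuePass(mRayTracingPass);
    }
}
```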
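To make the call order of this template concrete, here is a small C++ model of it (C++ only because it reads close to HLSL and runs anywhere; none of these types are Unity APIs, and `MRTXRenderFeature`/`RayTracingPass` here are hypothetical stand-ins). It assumes a simplified, URP 7-era flow as I understand it: `Create()` runs when the feature is loaded, `AddRenderPasses()` runs every frame for every camera, each enqueued pass gets `Configure` and then `Execute`, and `FrameCleanup` runs after the camera finishes. Newer URP versions add `OnCameraSetup`/`OnCameraCleanup`, so treat this as a sketch rather than the exact pipeline.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-ins for URP types; only the call order is modelled.
struct RayTracingPass {
    void Configure(std::vector<std::string>& calls)    { calls.push_back("Configure"); }
    void Execute(std::vector<std::string>& calls)      { calls.push_back("Execute"); }
    void FrameCleanup(std::vector<std::string>& calls) { calls.push_back("FrameCleanup"); }
};

struct Renderer {
    std::vector<RayTracingPass*> queue;
    void EnqueuePass(RayTracingPass* pass) { queue.push_back(pass); }
};

struct MRTXRenderFeature {
    RayTracingPass pass;
    bool created = false;

    // Runs when the feature is loaded, not every frame.
    void Create(std::vector<std::string>& calls) {
        pass = RayTracingPass{};
        created = true;
        calls.push_back("Create");
    }

    // Runs every frame for every camera.
    void AddRenderPasses(Renderer& renderer) {
        assert(created && "pass must be created before it is enqueued");
        renderer.EnqueuePass(&pass);
    }
};

// One camera, one frame: the feature enqueues, every queued pass is configured
// and executed, then FrameCleanup runs once the camera is done.
void RenderCamera(MRTXRenderFeature& feature, std::vector<std::string>& calls) {
    Renderer renderer;  // the queue starts empty every frame
    feature.AddRenderPasses(renderer);
    for (RayTracingPass* p : renderer.queue) {
        p->Configure(calls);
        p->Execute(calls);
    }
    for (RayTracingPass* p : renderer.queue) p->FrameCleanup(calls);
}
```

Rendering two frames after a single `Create()` records `Create` once, followed by `Configure`, `Execute`, `FrameCleanup` twice. The `assert` in `AddRenderPasses` stands in for enqueuing a pass that was never constructed.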

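With this RenderFeature in place, we can insert our pass at any point in the pipeline. First, add a RayTraceSettings class inside the feature to control when the pass is rendered:

```csharp
// RayTracingShader lives in UnityEngine.Experimental.Rendering in 2019.3-era Unity.
[System.Serializable] // required for the settings to appear in the inspector
public class RayTraceSettings
{
    public RayTracingShader _shader;
    public RenderPassEvent mrenderPassEvent = RenderPassEvent.AfterRendering;
}
```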

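Once this is written, remember to create an instance of it in the feature. The settings are displayed via reflection, so the field must be named `settings`:

```csharp
public RayTraceSettings settings = new RayTraceSettings();
```

Then, in the `Create` method, construct the feature's pass and set its `renderPassEvent` to the `mrenderPassEvent` from `settings`. This lets you choose where the pass is inserted directly from the RenderFeature panel:

```csharp
mRayTracingPass = new RayTracingPass();
mRayTracingPass.renderPassEvent = settings.mrenderPassEvent;
```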
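Why does `settings.mrenderPassEvent` decide where the pass runs? As I understand URP's renderer, the enqueued passes are sorted by `renderPassEvent` (with a stable sort) before execution, so the event value alone places the pass relative to the built-in ones. The C++ sketch below models that ordering. The enum values are a subset of URP's `RenderPassEvent` as I recall them for URP 7.x/10.x and should be checked against your version; the pass names `Opaques` and `Transparents` are hypothetical stand-ins for built-in passes.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Subset of URP's RenderPassEvent values as recalled for URP 7.x/10.x; verify for your version.
enum class RenderPassEvent : int {
    BeforeRenderingOpaques = 250,
    AfterRenderingOpaques = 300,
    BeforeRenderingTransparents = 450,
    AfterRenderingTransparents = 500,
    AfterRendering = 1000,
};

struct QueuedPass {
    std::string name;
    RenderPassEvent renderPassEvent;
};

// Orders passes by event; stable_sort keeps enqueue order for passes sharing an event.
std::vector<std::string> ExecutionOrder(std::vector<QueuedPass> passes) {
    std::stable_sort(passes.begin(), passes.end(),
                     [](const QueuedPass& a, const QueuedPass& b) {
                         return static_cast<int>(a.renderPassEvent) <
                                static_cast<int>(b.renderPassEvent);
                     });
    std::vector<std::string> names;
    for (const QueuedPass& p : passes) names.push_back(p.name);
    return names;
}
```

With the default `AfterRendering`, the ray tracing pass ends up after everything else; switching the setting to `AfterRenderingOpaques` moves it between the opaque and transparent work without touching any other code.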

That's about it for the RenderFeature for now. Next, we need to get familiar with RayTracingShader and start wiring it into the RenderFeature.

1. RTX ON!

Create a RayTracingShader:

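```hlsl
RWTexture2D<float4> RenderTarget; // output image

#pragma max_recursion_depth 1

[shader("raygeneration")]
void MyRaygenShader()
{
    uint2 dispatchIdx = DispatchRaysIndex().xy; // pixel coordinate
    // Debug pattern: no rays are traced yet; each pixel writes a color derived from its coordinates.
    RenderTarget[dispatchIdx] = float4(dispatchIdx.x & dispatchIdx.y, (dispatchIdx.x & 15) / 15.0, (dispatchIdx.y & 15) / 15.0, 0.0);
}
```

Next, the ray tracing shader starts shooting rays into the view frustum: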

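```hlsl
#pragma max_recursion_depth 1

// Assumed declarations: the snippet uses these without showing them (presumably they
// live in a shared include); their values are set from C#.
RaytracingAccelerationStructure _AccelerationStructure;
float4x4 _InvCameraViewProj;
float3 _WorldSpaceCameraPos;
float _CameraFarDistance;

// Assumed payload layout, matching the fields used below.
struct RayIntersection
{
    int remainingDepth;
    float4 color;
};

inline void GenerateCameraRay(out float3 origin, out float3 direction)
{
    float2 xy = DispatchRaysIndex().xy + 0.5f; // center in the middle of the pixel.
    float2 screenPos = xy / DispatchRaysDimensions().xy * 2.0f - 1.0f; // range (-1, 1)

    // Unproject the pixel coordinate into a ray.
    float4 world = mul(_InvCameraViewProj, float4(screenPos, 0, 1));
    world.xyz /= world.w;

    origin = _WorldSpaceCameraPos.xyz;
    direction = normalize(world.xyz - origin);
}

RWTexture2D<float4> _OutputTarget;

[shader("raygeneration")]
void OutputColorRayGenShader()
{
    uint2 dispatchIdx = DispatchRaysIndex().xy;      // pixel coordinate
    uint2 dispatchDim = DispatchRaysDimensions().xy; // screen width and height

    float3 origin;
    float3 direction;
    GenerateCameraRay(origin, direction);

    RayDesc rayDescriptor;
    rayDescriptor.Origin = origin;
    rayDescriptor.Direction = direction;
    rayDescriptor.TMin = 1e-5f;              // minimum ray distance
    rayDescriptor.TMax = _CameraFarDistance; // maximum ray distance: the camera's far clip plane

    RayIntersection rayIntersection;
    rayIntersection.remainingDepth = 1;
    rayIntersection.color = float4(1.0f, 1.0f, 1.0f, 0.0f);

    // TraceRay(accel, flags, instanceMask, hitGroupOffset, hitGroupStride, missIndex, ray, payload)
    TraceRay(_AccelerationStructure, RAY_FLAG_CULL_BACK_FACING_TRIANGLES, 0xFF, 0, 1, 0, rayDescriptor, rayIntersection);

    _OutputTarget[dispatchIdx] = rayIntersection.color;
}

[shader("miss")]
void MissShader(inout RayIntersection rayIntersection : SV_RayPayload)
{
    float3 origin = WorldRayOrigin();
    float3 direction = WorldRayDirection();

    // Nothing was hit: write white with alpha 1.
    rayIntersection.color = float4(1.0f, 1.0f, 1.0f, 1.0f);
}
```

Here `_InvCameraViewProj`, the inverse of the camera's view-projection matrix (it maps NDC back to world space), turns the pixel position into a world-space point on the pixel's ray, which gives us the ray DIRECTION; `_WorldSpaceCameraPos` gives the ray's origin.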
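`GenerateCameraRay` is easy to check on the CPU. Below is a C++ port of it, as a sketch: `Mul` mimics HLSL `mul(M, v)` for a row-major matrix and a column vector, and the matrices in the check are made up rather than taken from a real camera. For a perspective camera the NDC depth used (z = 0) does not change the result: every point that projects to the same pixel lies on one line through the camera, so after subtracting the camera position and normalizing, any depth yields the same direction.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-major, like an HLSL float4x4

// HLSL mul(M, v): v is treated as a column vector.
Vec4 Mul(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            r[i] += m[i][j] * v[j];
    return r;
}

Vec3 Normalize(const Vec3& v) {
    float len = std::sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
    return {v[0] / len, v[1] / len, v[2] / len};
}

// CPU port of GenerateCameraRay: pixel center -> NDC in (-1, 1) -> world point -> direction.
void GenerateCameraRay(unsigned px, unsigned py, unsigned width, unsigned height,
                       const Mat4& invViewProj, const Vec3& cameraPos,
                       Vec3& origin, Vec3& direction) {
    float sx = (px + 0.5f) / width * 2.0f - 1.0f;
    float sy = (py + 0.5f) / height * 2.0f - 1.0f;
    Vec4 world = Mul(invViewProj, {sx, sy, 0.0f, 1.0f});
    Vec3 p{world[0] / world[3], world[1] / world[3], world[2] / world[3]};  // perspective divide
    origin = cameraPos;
    direction = Normalize({p[0] - origin[0], p[1] - origin[1], p[2] - origin[2]});
}
```

With an identity matrix and the camera at (0, 0, -1), the single pixel of a 1×1 dispatch maps to NDC (0, 0) and gets direction (0, 0, 1); pixel (1, 1) of a 2×2 dispatch maps to NDC (0.5, 0.5). Scaling the whole matrix by 2 leaves the directions unchanged, because the divide by `w` cancels it.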

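`RayDesc`: the ray structure. Its values are updated with each trace until tracing ends.

`remainingDepth`: sets the number of bounces.

`RayIntersection`: stores the color information once the ray hits something, along with how many bounces the current ray has left.

`TraceRay`: the DXR intrinsic that performs the intersection test.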
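Note that `remainingDepth` is only a counter carried in the payload; the hard limit is `#pragma max_recursion_depth`, which (as I understand DXR's recursion rules) caps how deeply `TraceRay` calls may nest. With a value of 1, only the ray generation shader may call `TraceRay`, so hit shaders that spawn bounce rays need a larger value. The toy C++ model below uses plain recursion instead of DXR and a single-channel color, and shows how the payload carries both the accumulated attenuation and the bounce budget through nested traces. The scene (a fixed number of identical surfaces in front of a white sky) and the `Shade` helper are hypothetical.

```cpp
#include <cassert>

// Toy stand-in for the RayIntersection payload, with color reduced to one channel.
struct Payload {
    int remainingDepth;  // bounces this ray may still take
    float color;         // accumulated attenuation
};

// Hypothetical scene: the ray hits `surfacesAhead` surfaces of equal albedo, then escapes.
void TraceRay(Payload& p, int surfacesAhead, float albedo) {
    if (surfacesAhead == 0) {  // "miss shader": white sky keeps the accumulated color
        p.color *= 1.0f;
        return;
    }
    // "closest-hit shader"
    if (p.remainingDepth <= 0) {  // bounce budget exhausted: terminate the path as black
        p.color = 0.0f;
        return;
    }
    p.remainingDepth -= 1;
    p.color *= albedo;
    TraceRay(p, surfacesAhead - 1, albedo);  // nested trace for the bounce
}

// Starts a primary ray with white color, like the ray generation shader above.
float Shade(int maxDepth, int surfacesAhead, float albedo) {
    Payload p{maxDepth, 1.0f};
    TraceRay(p, surfacesAhead, albedo);
    return p.color;
}
```

With albedo 0.5 and two surfaces before the sky, a budget of 2 or more bounces returns 0.25, while a budget of 1 cuts the path off at the second hit and returns black.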

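This article shows how to achieve RTX-style effects in Unity's URP using RenderFeature and RayTracingShader. Starting from creating a RenderFeature, it covers setting up object shaders, building the RayTracingAccelerationStructure, implementing antialiasing and DOF, and handling material effects such as Diffuse, Dielectric Transparent, and SkyBox. Finally, it discusses blending with the rasterized result and shows the final effect.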
