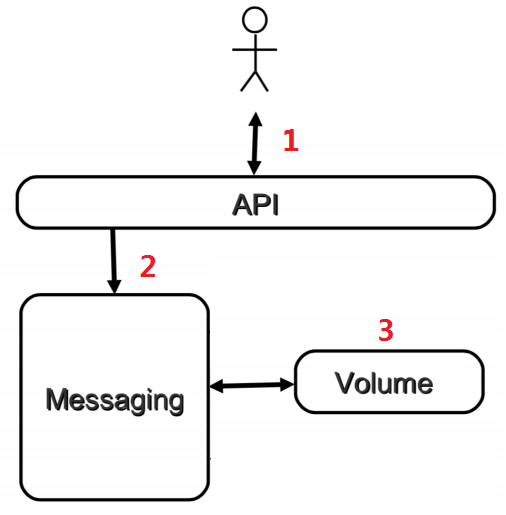

A snapshot records the current state of a volume, so the volume can later be rolled back to that state. The snapshot operation is fairly simple. Its flow is as follows:

1. Send a snapshot request to cinder-api.

2. cinder-api sends a message over the message queue.

3. cinder-volume performs the snapshot.
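Step 1 boils down to a single REST call. The sketch below only *builds* the request that the Dashboard sent in the c-api log further down (`POST .../volume/v3/{project_id}/snapshots` with a `snapshot` object in the body). The endpoint, project ID, volume ID and body fields are copied from that log. The function name and the token value are illustrative assumptions, and nothing is sent over the network.

```python
import json
import urllib.request

# Values copied from the c-api log in this article; the token is a placeholder.
CINDER_ENDPOINT = "http://10.12.30.21/volume/v3"
PROJECT_ID = "9ed27f1df9814f91b370d1003b066b0a"
VOLUME_ID = "06984f3c-44e1-42a5-b03c-08a0cfa19f73"


def build_snapshot_request(token, volume_id, name, description="", force=False):
    """Build (but do not send) a Cinder 'create snapshot' request."""
    body = {
        "snapshot": {
            "volume_id": volume_id,
            "name": name,
            "description": description,
            "metadata": {},
            # The logged request for the attached volume carried force=true.
            "force": force,
        }
    }
    url = f"{CINDER_ENDPOINT}/{PROJECT_ID}/snapshots"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
    )


req = build_snapshot_request("<token>", VOLUME_ID, "snap-01", force=True)
print(req.get_method(), req.full_url)
print(json.loads(req.data)["snapshot"])
```

Sending it with `urllib.request.urlopen(req)` and a valid token should return HTTP 202, as in the log. The request is asynchronous, so the actual `lvcreate` happens later in cinder-volume.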

Before the snapshot is created, the volume group contains the following logical volumes:

[root@DevStack-Rocky-Controller-21 ~]# lvdisplay stack-volumes-lvmdriver-1

--- Logical volume ---

LV Name stack-volumes-lvmdriver-1-pool

VG Name stack-volumes-lvmdriver-1

LV UUID v2e1XT-EMCl-K8uo-CJsX-mPzs-hM2C-OAGMQg

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-06-11 19:18:14 +0800

LV Pool metadata stack-volumes-lvmdriver-1-pool_tmeta

LV Pool data stack-volumes-lvmdriver-1-pool_tdata

LV Status available

\# open 3

LV Size 22.80 GiB

Allocated pool data 0.17%

Allocated metadata 0.67%

Current LE 5837

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

--- Logical volume ---

LV Path /dev/stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

LV Name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

VG Name stack-volumes-lvmdriver-1

LV UUID gNIbdc-CY8b-uqjg-vWZs-U3fp-t4J7-onYFr6

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-07-01 20:42:09 +0800

LV Pool name stack-volumes-lvmdriver-1-pool

LV Status available

\# open 1

LV Size 1.00 GiB

Mapped size 3.83%

Current LE 256

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:7

--- Logical volume ---

LV Path /dev/stack-volumes-lvmdriver-1/volume-45717894-ccb6-49d3-a3ca-337e3667731c

LV Name volume-45717894-ccb6-49d3-a3ca-337e3667731c

VG Name stack-volumes-lvmdriver-1

LV UUID XbwNeV-zMQ0-t1M8-OAuS-Hq1Z-dL1N-Tvx1Yq

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-07-01 20:42:13 +0800

LV Pool name stack-volumes-lvmdriver-1-pool

LV Thin origin name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

LV Status available

\# open 0

LV Size 1.00 GiB

Mapped size 3.83%

Current LE 256

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:8

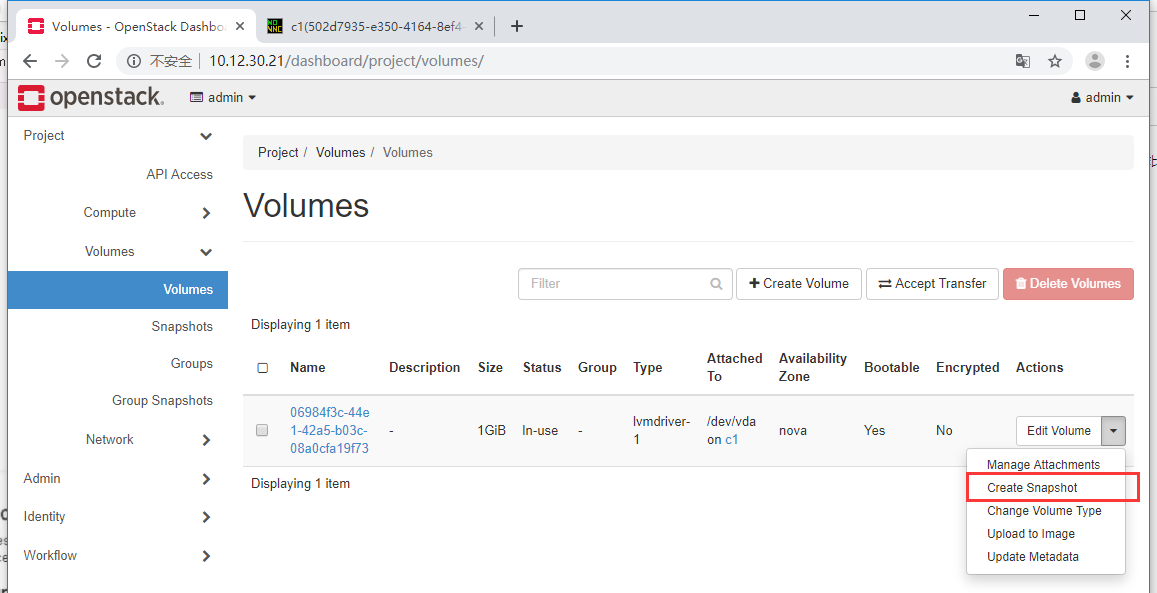

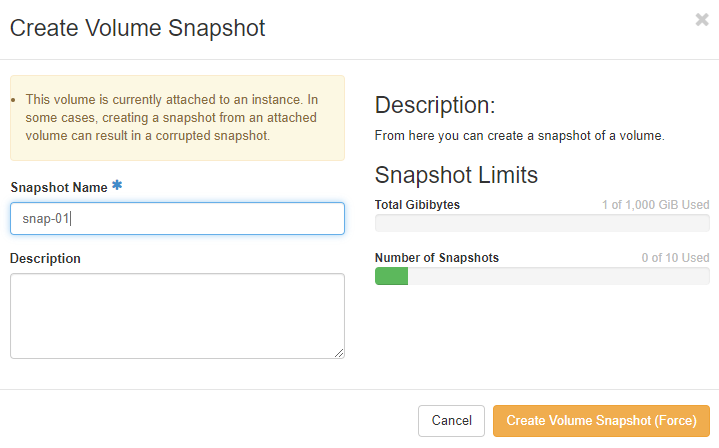

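Here the UI warns that the volume is already attached to an instance and that creating a snapshot may leave the data inconsistent. We can pause the instance first, or make sure the instance is not doing heavy disk I/O and is in a relatively stable state before creating the snapshot. Otherwise, it is better to detach the volume first and then take the snapshot.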
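The "pause first" option can be scripted with the OpenStack CLI. The sketch below only builds the command lines (it runs nothing). `openstack server pause/unpause` and `openstack volume snapshot create --volume ... --force` are python-openstackclient commands. The server name `vm-01` and the function name are hypothetical.

```python
import shlex


def paused_snapshot_plan(server, volume, snapshot_name):
    """Return CLI commands for 'pause the instance, snapshot, unpause'."""
    return [
        ["openstack", "server", "pause", server],
        # --force: the volume is still attached (in-use) to the instance.
        ["openstack", "volume", "snapshot", "create",
         "--volume", volume, "--force", snapshot_name],
        # Snapshot creation is asynchronous: repeat this until it prints
        # "available" before unpausing the instance.
        ["openstack", "volume", "snapshot", "show",
         "-f", "value", "-c", "status", snapshot_name],
        ["openstack", "server", "unpause", server],
    ]


for cmd in paused_snapshot_plan(
        "vm-01", "06984f3c-44e1-42a5-b03c-08a0cfa19f73", "snap-01"):
    print(shlex.join(cmd))
```

Keeping the commands as argument lists (rather than strings) lets them be passed directly to `subprocess.run` without shell quoting issues.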

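After the snapshot is created, lvdisplay shows one additional logical volume, _snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51, whose LV Thin origin name is the source volume volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73:

[root@DevStack-Rocky-Controller-21 ~]# lvdisplay stack-volumes-lvmdriver-1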

--- Logical volume ---

LV Name stack-volumes-lvmdriver-1-pool

VG Name stack-volumes-lvmdriver-1

LV UUID v2e1XT-EMCl-K8uo-CJsX-mPzs-hM2C-OAGMQg

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-06-11 19:18:14 +0800

LV Pool metadata stack-volumes-lvmdriver-1-pool_tmeta

LV Pool data stack-volumes-lvmdriver-1-pool_tdata

LV Status available

\# open 3

LV Size 22.80 GiB

Allocated pool data 0.17%

Allocated metadata 0.67%

Current LE 5837

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

--- Logical volume ---

LV Path /dev/stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

LV Name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

VG Name stack-volumes-lvmdriver-1

LV UUID gNIbdc-CY8b-uqjg-vWZs-U3fp-t4J7-onYFr6

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-07-01 20:42:09 +0800

LV Pool name stack-volumes-lvmdriver-1-pool

LV Status available

\# open 1

LV Size 1.00 GiB

Mapped size 3.83%

Current LE 256

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:7

--- Logical volume ---

LV Path /dev/stack-volumes-lvmdriver-1/volume-45717894-ccb6-49d3-a3ca-337e3667731c

LV Name volume-45717894-ccb6-49d3-a3ca-337e3667731c

VG Name stack-volumes-lvmdriver-1

LV UUID XbwNeV-zMQ0-t1M8-OAuS-Hq1Z-dL1N-Tvx1Yq

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-07-01 20:42:13 +0800

LV Pool name stack-volumes-lvmdriver-1-pool

LV Thin origin name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

LV Status available

\# open 0

LV Size 1.00 GiB

Mapped size 3.83%

Current LE 256

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:8

--- Logical volume ---

LV Path /dev/stack-volumes-lvmdriver-1/_snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51

LV Name _snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51

VG Name stack-volumes-lvmdriver-1

LV UUID ZtIrYn-ioyR-Rfn8-8Uax-urLn-KKE5-Xoxbnt

LV Write Access read/write

LV Creation host, time DevStack-Rocky-Controller-21, 2019-07-02 20:31:24 +0800

LV Pool name stack-volumes-lvmdriver-1-pool

LV Thin origin name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73

LV Status NOT available

LV Size 1.00 GiB

Current LE 256

Segments 1

Allocation inherit

Read ahead sectors auto
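To spot snapshot LVs in output like the above, the sketch below parses `lvdisplay` text into one dictionary per LV and keeps LVs whose name starts with `_snapshot-`, the prefix cinder-volume uses in the `lvcreate` command logged below. The origin field alone is not enough: `volume-45717894-ccb6-49d3-a3ca-337e3667731c` also has an `LV Thin origin name`. The field list and function names are illustrative assumptions. For scripting, `lvs --noheadings -o ...` (which cinder-volume itself runs in the log) gives output that is easier to parse.

```python
# Field names taken from the lvdisplay listing above (not an exhaustive list).
FIELDS = ["LV Thin origin name", "LV Pool name", "LV Status",
          "LV Path", "LV Name", "VG Name", "LV Size"]


def parse_lvdisplay(text):
    """Split lvdisplay output into one dict per logical volume."""
    volumes = []
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line == "--- Logical volume ---":
            current = {}
            volumes.append(current)
        elif current is not None and line:
            for key in FIELDS:
                # Real lvdisplay pads values with several spaces; strip() removes them.
                if line.startswith(key + " "):
                    current[key] = line[len(key):].strip()
                    break
    return volumes


def snapshots_by_origin(volumes):
    """Map Cinder snapshot LVs (named '_snapshot-<id>') to their thin origin."""
    return {
        lv["LV Name"]: lv.get("LV Thin origin name")
        for lv in volumes
        if lv.get("LV Name", "").startswith("_snapshot-")
    }


# Trimmed copy of the second listing above.
SAMPLE = """\
--- Logical volume ---
LV Name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73
LV Pool name stack-volumes-lvmdriver-1-pool
LV Status available
--- Logical volume ---
LV Name volume-45717894-ccb6-49d3-a3ca-337e3667731c
LV Thin origin name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73
LV Status available
--- Logical volume ---
LV Name _snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51
LV Thin origin name volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73
LV Status NOT available
"""

lvs = parse_lvdisplay(SAMPLE)
print(snapshots_by_origin(lvs))
```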

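cinder-volume creates the snapshot with lvcreate. Because the source is a thin LV and the command specifies no size, LVM creates a thin snapshot in the same pool. The log of the whole request (req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d) follows. cinder-api accepts the request, whose body carries "force": true since the volume is attached, and returns HTTP 202. cinder-scheduler then checks the backend capacity, and cinder-volume runs lvs and then lvcreate.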

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

POST http://10.12.30.21/volume/v3/9ed27f1df9814f91b370d1003b066b0a/snapshots

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Action: 'create', calling method: create, body: {"snapshot": {"description": "", "metadata": {}, "force": true, "name": "snap-01", "volume_id": "06984f3c-44e1-42a5-b03c-08a0cfa19f73"}} {{(pid=21071) _process_stack /opt/stack/cinder/cinder/api/openstack/wsgi.py:869}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.volume.api [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Volume info retrieved successfully.

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.v2.snapshots [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Create snapshot from volume 06984f3c-44e1-42a5-b03c-08a0cfa19f73

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.quota [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Created reservations ['0f75ca2a-d47c-4005-8859-221e553ea890', '1787c063-b59f-45c6-a2c6-0d983a73caf3', '2393b16d-b008-44f8-af30-b80c8b7c5611', '645559d2-24b9-4974-b1d6-9ca71936e267'] {{(pid=21071) reserve /opt/stack/cinder/cinder/quota.py:1029}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.volume.api [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Snapshot force create request issued successfully.

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

http://10.12.30.21/volume/v3/9ed27f1df9814f91b370d1003b066b0a/snapshots returned with HTTP 202

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.host_manager [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Updating capabilities for DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1: {u'pool_name': u'lvmdriver-1', u'filter_function': None, u'goodness_function': None, u'multiattach': True, u'total_volumes': 3, u'provisioned_capacity_gb': 2.0, 'timestamp': datetime.datetime(2019, 7, 2, 12, 31, 3, 194910), u'allocated_capacity_gb': 2, 'volume_backend_name': u'lvmdriver-1', u'thin_provisioning_support': True, u'free_capacity_gb': 22.76, 'driver_version': u'3.0.0', u'location_info': u'LVMVolumeDriver:DevStack-Rocky-Controller-21:stack-volumes-lvmdriver-1:thin:0', u'total_capacity_gb': 22.8, u'thick_provisioning_support': False, u'reserved_percentage': 0, u'QoS_support': False, u'max_over_subscription_ratio': u'20.0', 'vendor_name': u'Open Source', 'storage_protocol': u'iSCSI', u'backend_state': u'up'} {{(pid=21820) update_from_volume_capability /opt/stack/cinder/cinder/scheduler/host_manager.py:358}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.host_manager [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Updating capabilities for DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1: {u'pool_name': u'lvmdriver-1', u'filter_function': None, u'goodness_function': None, u'multiattach': True, u'total_volumes': 1, u'provisioned_capacity_gb': 0.0, 'timestamp': datetime.datetime(2019, 7, 2, 12, 30, 47, 114948), u'allocated_capacity_gb': 0, 'volume_backend_name': u'lvmdriver-1', u'thin_provisioning_support': True, u'free_capacity_gb': 22.8, 'driver_version': u'3.0.0', u'location_info': u'LVMVolumeDriver:DevStack-Rocky-Compute-22:stack-volumes-lvmdriver-1:thin:0', u'total_capacity_gb': 22.8, u'thick_provisioning_support': False, u'reserved_percentage': 0, u'QoS_support': False, u'max_over_subscription_ratio': u'20.0', 'vendor_name': u'Open Source', 'storage_protocol': u'iSCSI', u'backend_state': u'up'} {{(pid=21820) update_from_volume_capability /opt/stack/cinder/cinder/scheduler/host_manager.py:358}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.base_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Starting with 2 host(s) {{(pid=21820) get_filtered_objects /opt/stack/cinder/cinder/scheduler/base_filter.py:95}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.base_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Filter AvailabilityZoneFilter returned 2 host(s) {{(pid=21820) get_filtered_objects /opt/stack/cinder/cinder/scheduler/base_filter.py:125}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Checking if host DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1 can create a 1 GB volume (None) {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:62}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Checking provisioning for request of 1 GB. Backend: host 'DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1':free_capacity_gb: 22.8, total_capacity_gb: 22.8,allocated_capacity_gb: 0, max_over_subscription_ratio: 20.0,reserved_percentage: 0, provisioned_capacity_gb: 0.0,thin_provisioning_support: True, thick_provisioning_support: False,pools: None,updated at: 2019-07-02 12:30:47.114948 {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:134}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Space information for volume creation on host DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1 (requested / avail): 1/22.8 {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:172}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Checking if host DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1 can create a 1 GB volume (None) {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:62}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Checking provisioning for request of 1 GB. Backend: host 'DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1':free_capacity_gb: 22.76, total_capacity_gb: 22.8,allocated_capacity_gb: 2, max_over_subscription_ratio: 20.0,reserved_percentage: 0, provisioned_capacity_gb: 2.0,thin_provisioning_support: True, thick_provisioning_support: False,pools: None,updated at: 2019-07-02 12:31:03.194910 {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:134}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filters.capacity_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Space information for volume creation on host DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1 (requested / avail): 1/22.76 {{(pid=21820) backend_passes /opt/stack/cinder/cinder/scheduler/filters/capacity_filter.py:172}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.base_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Filter CapacityFilter returned 2 host(s) {{(pid=21820) get_filtered_objects /opt/stack/cinder/cinder/scheduler/base_filter.py:125}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.base_filter [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Filter CapabilitiesFilter returned 2 host(s) {{(pid=21820) get_filtered_objects /opt/stack/cinder/cinder/scheduler/base_filter.py:125}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.filter_scheduler [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Filtered [host 'DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1':free_capacity_gb: 22.8, total_capacity_gb: 22.8,allocated_capacity_gb: 0, max_over_subscription_ratio: 20.0,reserved_percentage: 0, provisioned_capacity_gb: 0.0,thin_provisioning_support: True, thick_provisioning_support: False,pools: None,updated at: 2019-07-02 12:30:47.114948, host 'DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1':free_capacity_gb: 22.76, total_capacity_gb: 22.8,allocated_capacity_gb: 2, max_over_subscription_ratio: 20.0,reserved_percentage: 0, provisioned_capacity_gb: 2.0,thin_provisioning_support: True, thick_provisioning_support: False,pools: None,updated at: 2019-07-02 12:31:03.194910] {{(pid=21820) _get_weighted_candidates /opt/stack/cinder/cinder/scheduler/filter_scheduler.py:342}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.base_weight [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Weigher CapacityWeigher returned, weigher value is {max: 456.0, min: 454.0} {{(pid=21820) get_weighed_objects /opt/stack/cinder/cinder/scheduler/base_weight.py:153}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.host_manager [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Weighed [WeighedHost [host: DevStack-Rocky-Compute-22@lvmdriver-1#lvmdriver-1, weight: 1.0], WeighedHost [host: DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1, weight: 0.0]] {{(pid=21820) get_weighed_backends /opt/stack/cinder/cinder/scheduler/host_manager.py:500}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-scheduler: DEBUG cinder.scheduler.host_manager [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Consumed 1 GB from backend: host 'DevStack-Rocky-Controller-21@lvmdriver-1#lvmdriver-1':free_capacity_gb: 21.76, total_capacity_gb: 22.8,allocated_capacity_gb: 3, max_over_subscription_ratio: 20.0,reserved_percentage: 0, provisioned_capacity_gb: 3.0,thin_provisioning_support: True, thick_provisioning_support: False,pools: None,updated at: 2019-07-02 12:31:23.800880 {{(pid=21820) consume_from_volume /opt/stack/cinder/cinder/scheduler/host_manager.py:316}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

GET http://10.12.30.21/volume//

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Empty body provided in request {{(pid=21071) get_body /opt/stack/cinder/cinder/api/openstack/wsgi.py:718}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Calling method 'all' {{(pid=21071) _process_stack /opt/stack/cinder/cinder/api/openstack/wsgi.py:872}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

http://10.12.30.21/volume// returned with HTTP 300

Jul 2 20:31:23 DevStack-Rocky-Controller-21

cinder-volume: DEBUG oslo_concurrency.processutils [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Running cmd (subprocess): sudo cinder-rootwrap /etc/cinder/rootwrap.conf env LC_ALL=C lvs --noheadings --unit=g -o vg_name,name,size --nosuffix stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73 {{(pid=22852) execute /usr/lib/python2.7/site-packages/oslo_concurrency/processutils.py:372}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

GET http://10.12.30.21/volume//

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Empty body provided in request {{(pid=21071) get_body /opt/stack/cinder/cinder/api/openstack/wsgi.py:718}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: DEBUG cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

Calling method 'all' {{(pid=21071) _process_stack /opt/stack/cinder/cinder/api/openstack/wsgi.py:872}}

Jul 2 20:31:23 DevStack-Rocky-Controller-21

devstack@c-api.service: INFO cinder.api.openstack.wsgi [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin admin]

http://10.12.30.21/volume// returned with HTTP 300

Jul 2 20:31:24 DevStack-Rocky-Controller-21

cinder-volume: DEBUG oslo_concurrency.processutils [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

CMD "sudo cinder-rootwrap /etc/cinder/rootwrap.conf env LC_ALL=C lvs --noheadings --unit=g -o vg_name,name,size --nosuffix stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73" returned: 0 in 0.266s {{(pid=22852) execute /usr/lib/python2.7/site-packages/oslo_concurrency/processutils.py:409}}

Jul 2 20:31:24 DevStack-Rocky-Controller-21

cinder-volume: DEBUG oslo_concurrency.processutils [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Running cmd (subprocess): sudo cinder-rootwrap /etc/cinder/rootwrap.conf env LC_ALL=C lvcreate --name _snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51 --snapshot stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73 {{(pid=22852) execute /usr/lib/python2.7/site-packages/oslo_concurrency/processutils.py:372}}

Jul 2 20:31:24 DevStack-Rocky-Controller-21

cinder-volume: DEBUG oslo_concurrency.processutils [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

CMD "sudo cinder-rootwrap /etc/cinder/rootwrap.conf env LC_ALL=C lvcreate --name _snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51 --snapshot stack-volumes-lvmdriver-1/volume-06984f3c-44e1-42a5-b03c-08a0cfa19f73" returned: 0 in 0.571s {{(pid=22852) execute /usr/lib/python2.7/site-packages/oslo_concurrency/processutils.py:409}}

Jul 2 20:31:24 DevStack-Rocky-Controller-21

cinder-volume: INFO cinder.volume.manager [None req-c5bdbbaa-7c70-406c-9259-5a68f5d6dc9d admin None]

Create snapshot completed successfully
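The scheduler's weights in the log can be reproduced by hand. The sketch below *assumes* that, for a thin-provisioned backend, CapacityWeigher in this release scores virtual free space as `total_capacity_gb * max_over_subscription_ratio - provisioned_capacity_gb - floor(total_capacity_gb * reserved_percentage / 100)` and then min-max normalizes the scores. The capacity figures are copied from the log. The function names are illustrative, not Cinder's own.

```python
import math

# Capacity figures copied from the cinder-scheduler log above.
backends = {
    "DevStack-Rocky-Compute-22": dict(
        total=22.8, provisioned=0.0, ratio=20.0, reserved_pct=0),
    "DevStack-Rocky-Controller-21": dict(
        total=22.8, provisioned=2.0, ratio=20.0, reserved_pct=0),
}


def thin_capacity_score(total, provisioned, ratio, reserved_pct):
    """Assumed thin-provisioning score: virtual free space after over-subscription."""
    return total * ratio - provisioned - math.floor(total * reserved_pct / 100)


def normalize(scores):
    """Min-max normalize a dict of scores to the range [0, 1]."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {name: 0.0 for name in scores}
    return {name: (s - lo) / (hi - lo) for name, s in scores.items()}


raw = {name: thin_capacity_score(**b) for name, b in backends.items()}
weights = normalize(raw)
for name in sorted(weights, key=weights.get, reverse=True):
    print(f"{name}: raw={raw[name]:.1f} weight={weights[name]:.1f}")
```

With these inputs the raw scores are 456.0 and 454.0, which matches the log's `{max: 456.0, min: 454.0}`. The normalized weights are 1.0 for Compute-22 and 0.0 for Controller-21, which matches the `Weighed` line. Even so, the 1 GB was consumed on Controller-21. The snapshot has to be created on the backend that holds its volume, so here the scheduler appears only to confirm that this backend passes the filters, not to pick the best-weighed host.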

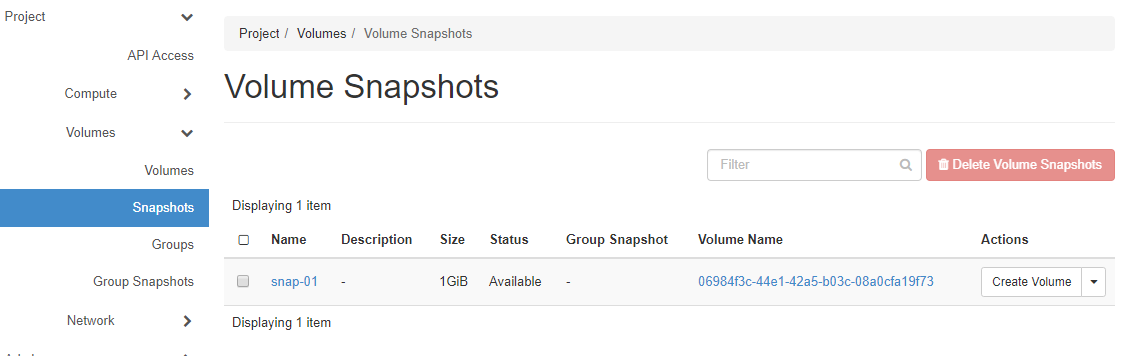

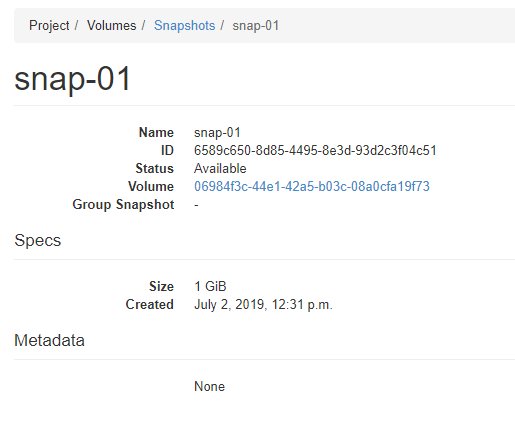

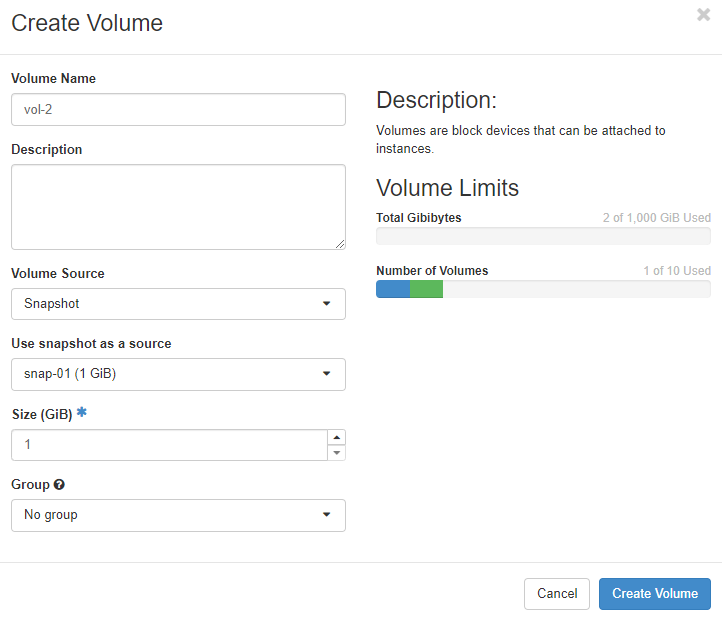

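Once a snapshot exists, we can roll the volume back to the state it was in when the snapshot was taken. This is done by creating a new volume from the snapshot. The new volume's size must be greater than or equal to the snapshot's size.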
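The sketch below shows that step as a request body. The keys `snapshot_id`, `size` and `name` belong to the body of Cinder's create-volume call (`POST /v3/{project_id}/volumes`). The function name and the client-side size check are illustrative assumptions (Cinder itself also rejects a size smaller than the snapshot). It also assumes the snapshot ID is the UUID embedded in the LV name `_snapshot-6589c650-8d85-4495-8e3d-93d2c3f04c51`. From the CLI, the rough equivalent is `openstack volume create --snapshot <snapshot> --size <GB> <name>`.

```python
import json


def volume_from_snapshot_body(snapshot_id, snapshot_size_gb, size_gb, name):
    """Build the JSON body for creating a volume from a snapshot.

    Enforces the rule from the text: the new volume must be at least as
    large as the snapshot.
    """
    if size_gb < snapshot_size_gb:
        raise ValueError(
            f"requested size {size_gb} GB is smaller than the "
            f"snapshot size {snapshot_size_gb} GB")
    return {"volume": {"snapshot_id": snapshot_id, "size": size_gb, "name": name}}


# The snapshot in this article is 1 GiB (LV Size 1.00 GiB); the ID is assumed
# to be the UUID part of its LV name. "vol-from-snap-01" is a hypothetical name.
body = volume_from_snapshot_body(
    "6589c650-8d85-4495-8e3d-93d2c3f04c51", 1, 1, "vol-from-snap-01")
print(json.dumps(body))
```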

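If a volume has snapshots, it cannot be deleted, because a snapshot depends on its volume and cannot exist on its own. With LVM as the volume provider this dependency is comparatively weak, since the snapshot is itself a logical volume managed by LVM. It is not, however, a full copy of the source. In this thin-provisioned setup it is a thin LV whose LV Thin origin name is the source volume, and it shares unchanged blocks with it. With other volume providers (such as commercial storage arrays or distributed file systems), a snapshot is usually a reference (pointer) to the data state of the source volume at the moment the snapshot was taken and takes up very little space. In such implementations the snapshot's dependence on the source volume is much more pronounced.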
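The deletion rule can be illustrated with a toy model. The class and method names below are hypothetical and are not Cinder code. The model refuses to delete a volume while any snapshot still references it, so the snapshots have to be removed first.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    id: str
    snapshot_ids: set = field(default_factory=set)


class VolumeStore:
    """Toy model of 'a volume with snapshots cannot be deleted'."""

    def __init__(self):
        self.volumes = {}    # volume id -> Volume
        self.snapshots = {}  # snapshot id -> source volume id

    def create_volume(self, vol_id):
        self.volumes[vol_id] = Volume(vol_id)

    def create_snapshot(self, snap_id, vol_id):
        # A snapshot always records the volume it was taken from.
        self.volumes[vol_id].snapshot_ids.add(snap_id)
        self.snapshots[snap_id] = vol_id

    def delete_snapshot(self, snap_id):
        vol_id = self.snapshots.pop(snap_id)
        self.volumes[vol_id].snapshot_ids.discard(snap_id)

    def delete_volume(self, vol_id):
        vol = self.volumes[vol_id]
        if vol.snapshot_ids:
            raise RuntimeError(
                f"volume {vol_id} still has snapshots: {sorted(vol.snapshot_ids)}")
        del self.volumes[vol_id]


store = VolumeStore()
store.create_volume("vol-1")
store.create_snapshot("snap-1", "vol-1")
try:
    store.delete_volume("vol-1")
except RuntimeError as err:
    print(err)
store.delete_snapshot("snap-1")
store.delete_volume("vol-1")  # succeeds once no snapshot references it
```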
