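Earlier, to keep up with the project schedule (few people, no resources), I only studied processing time. Since it simply takes the current time, it let us buy time and quickly support the launch of several business applications.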

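As more business use cases came on board, using processing time for everything started to cause problems. So this topic has to be understood thoroughly, and then the right time strategy can be chosen for each type of business.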

-------------------------------------------------------------------------------------------------------------------------------------------

0) Using the jdb tool

See https://baike.baidu.com/item/jdb/15084706?fr=aladdin

1) Physical machine [done]

We use the company's development environment.

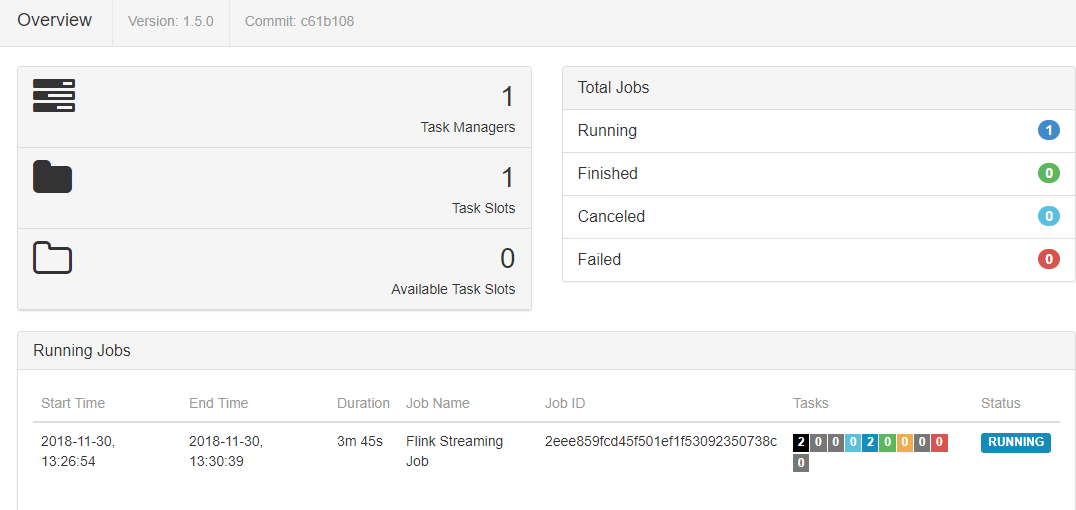

2) Prepare the YARN cluster and start it successfully [done]

We use the company's development environment.

3) Submit the Flink job

It went smoothly!

4) Enable the debug flag on the TaskManager

To be able to debug the TaskManager process, I enabled its debug flag, mainly by adding the following to its start command line:

-Xdebug -Xrunjdwp:transport=dt_socket,address=10000,server=y,suspend=n

Later I hit a pitfall with this. A quick search ( http://www.cnblogs.com/XuYankang/p/jpda.html ) explained the details:

For JDK 1.5 and later, the JVM argument is:

-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=10000

For JDK 1.4, use:

-Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=10000

With this flag in place, you can attach to the process and set breakpoints to debug it.
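The choice between the two syntaxes can be captured in a small helper. This is a hypothetical sketch: `JdwpFlags` and `jdwpOption` are illustrative names, not part of Flink or the JDK. `suspend=n` means the JVM starts normally without waiting for a debugger, while `suspend=y` would block startup until one attaches.

```java
import java.util.Locale;

// Hypothetical helper (not part of Flink or the JDK): builds the JDWP option
// string described above, picking the syntax by JDK generation.
final class JdwpFlags {
    private JdwpFlags() {}

    /**
     * @param jdk15OrLater true for JDK 1.5+, false for JDK 1.4
     * @param port         TCP port the debugger (e.g. jdb) will attach to
     * @param suspend      whether the JVM waits for a debugger before running
     */
    static String jdwpOption(boolean jdk15OrLater, int port, boolean suspend) {
        String opts = String.format(Locale.ROOT,
                "transport=dt_socket,server=y,suspend=%s,address=%d",
                suspend ? "y" : "n", port);
        if (jdk15OrLater) {
            // JDK 1.5+: JVMTI agent syntax
            return "-agentlib:jdwp=" + opts;
        }
        // JDK 1.4: legacy syntax
        return "-Xdebug -Xrunjdwp:" + opts;
    }

    public static void main(String[] args) {
        System.out.println(jdwpOption(true, 10000, false));
        System.out.println(jdwpOption(false, 10000, false));
    }
}
```

For a TaskManager on YARN, this string is what ends up in the container start command. Compare the `yarn.container-start-command-template` line in the generated flink-conf.yaml later in this post.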

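The attach command is:

jdb -attach localhost:10000

Then I ran into another problem. While stopped at a breakpoint, if I left the session idle for a while, it ended with `The application has been disconnected`.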

Looking at the log:

[2018-11-30 15:06:53,167] WARN Detected unreachable: [akka.tcp://flink@qa-bigdata-test-01.hz:30714] org.slf4j.helpers.MarkerIgnoringBase.warn(MarkerIgnoringBase.java:131)

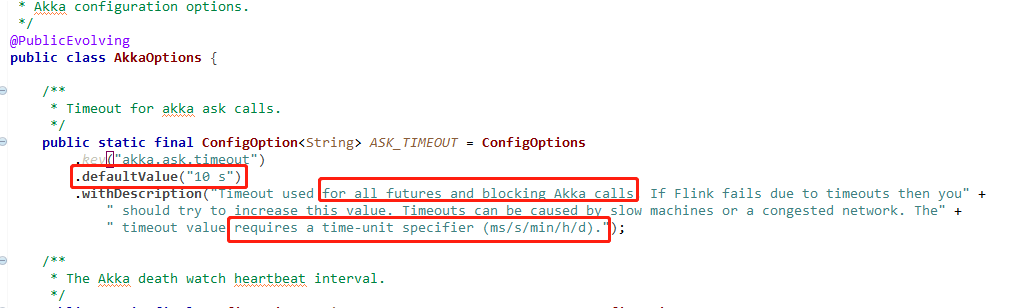

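How to fix this? My first instinct was to increase the timeout for communication between the JobManager and the TaskManager. A quick search turned up https://www.2cto.com/kf/201701/592740.html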

Following that lead up through the code, I found this:

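```scala
import java.util.concurrent.TimeUnit

import org.apache.flink.configuration.{AkkaOptions, Configuration}
import scala.concurrent.duration.{Duration, FiniteDuration}

// Reads akka.ask.timeout (e.g. "30 min") and converts it to a FiniteDuration
def getTimeout(config: Configuration): FiniteDuration = {
  val duration = Duration(config.getString(AkkaOptions.ASK_TIMEOUT))
  new FiniteDuration(duration.toMillis, TimeUnit.MILLISECONDS)
}
```

I kept digging from there.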
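`getTimeout` relies on Scala's `Duration(String)` to turn a configured value such as `30 min` into a length of time. The Java sketch below does a similar conversion to make that concrete. It is an assumption-laden stand-in: `DurationStrings` is a hypothetical name, and it only understands the `<number> <unit>` form with the few unit labels used in this post. Scala's parser accepts more forms.

```java
import java.util.Locale;
import java.util.concurrent.TimeUnit;

// Hypothetical, simplified stand-in for Scala's Duration(String) parsing.
// Only "<number> <unit>" with a handful of unit labels is supported.
final class DurationStrings {
    private DurationStrings() {}

    static long toMillis(String text) {
        String[] parts = text.trim().split("\\s+");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected '<number> <unit>': " + text);
        }
        long amount = Long.parseLong(parts[0]);
        TimeUnit unit;
        switch (parts[1].toLowerCase(Locale.ROOT)) {
            case "ms": case "millis": case "milliseconds":
                unit = TimeUnit.MILLISECONDS; break;
            case "s": case "second": case "seconds":
                unit = TimeUnit.SECONDS; break;
            case "min": case "minute": case "minutes":
                unit = TimeUnit.MINUTES; break;
            case "h": case "hour": case "hours":
                unit = TimeUnit.HOURS; break;
            default:
                throw new IllegalArgumentException("unsupported unit: " + parts[1]);
        }
        return unit.toMillis(amount);
    }

    public static void main(String[] args) {
        System.out.println(toMillis("30 min"));    // akka.ask.timeout used below
        System.out.println(toMillis("5 minutes")); // taskmanager.registration.timeout
    }
}
```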

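So let's set this value, together with the related heartbeat settings, in the configuration file:

```yaml
# Enable only while debugging
akka.ask.timeout: 30 min
akka.transport.threshold: 30000000.0
akka.transport.heartbeat.interval: 5999 s
akka.watch.heartbeat.interval: 1200 s
akka.watch.heartbeat.pause: 1201 s
```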

I tested this myself and it works. For more details, see https://cwiki.apache.org/confluence/display/FLINK/Akka+and+Actors
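Why does sitting at a breakpoint get the TaskManager marked unreachable? A jdb breakpoint normally suspends every thread in the JVM, including the threads that send Akka heartbeats. The peer then sees silence for longer than it tolerates, which fits the `Detected unreachable` warning above. The sketch below is a deliberately simplified, hypothetical deadline-style detector (`DeadlineDetector` is an illustrative name) that shows the intuition only. Akka's real failure detectors are more sophisticated; the `threshold` settings above hint at an accrual-style detector.

```java
// Hypothetical, simplified deadline failure detector: a peer counts as unreachable
// once no heartbeat has arrived for longer than the acceptable pause.
final class DeadlineDetector {
    private final long acceptablePauseMillis;
    private long lastHeartbeatMillis;

    DeadlineDetector(long acceptablePauseMillis, long nowMillis) {
        this.acceptablePauseMillis = acceptablePauseMillis;
        this.lastHeartbeatMillis = nowMillis;
    }

    /** Called whenever a heartbeat arrives from the peer. */
    void heartbeat(long nowMillis) {
        lastHeartbeatMillis = nowMillis;
    }

    /** The peer is still considered alive if the silence is within the allowed pause. */
    boolean isAvailable(long nowMillis) {
        return nowMillis - lastHeartbeatMillis <= acceptablePauseMillis;
    }

    public static void main(String[] args) {
        long breakpointPause = 5 * 60_000L; // e.g. five minutes spent at a breakpoint
        DeadlineDetector tight = new DeadlineDetector(60_000L, 0L);      // assumed tight pause, for comparison
        DeadlineDetector relaxed = new DeadlineDetector(1_201_000L, 0L); // akka.watch.heartbeat.pause: 1201 s
        System.out.println(tight.isAvailable(breakpointPause));   // false
        System.out.println(relaxed.isAvailable(breakpointPause)); // true
    }
}
```

This is why raising the pause values works while debugging, and also why they should be reverted afterwards: a huge pause also delays detection of real failures.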

5) Directories used when the program starts

```
./home/yarn/nm/usercache/flink/appcache/application_1542713936025_0106/container_e26_1542713936025_0106_01_000002
./home/yarn/nm/nmPrivate/application_1542713936025_0106/container_e26_1542713936025_0106_01_000002
./home/yarn/container-logs/application_1542713936025_0106/container_e26_1542713936025_0106_01_000002
```

The first directory contains the flink-conf.yaml generated for the TaskManager. Its contents are:

```
[yarn@***.hz container_e26_1542713936025_0106_01_000002]$ cat flink-conf.yaml
web.port: 0
web.backpressure.delay-between-samples: 50
web.backpressure.num-samples: 100
jobmanager.rpc.address: ***.hz
yarn.container-start-command-template: %java% %jvmmem% %jvmopts% %logging% -Xdebug -agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=10000 %class% %args% %redirects%
web.backpressure.refresh-interval: 60000
jobmanager.rpc.port: 38440
taskmanager.registration.timeout: 5 minutes
taskmanager.network.detailed-metrics: true
io.tmp.dirs: /tmp
containerized.heap-cutoff-ratio: 0.1
taskmanager.memory.off-heap: false
web.submit.enable: false
taskmanager.numberOfTaskSlots: 1
akka.ask.timeout: 30 min
env.java.opts.taskmanager: -server -Daaa=1 -XX:NativeMemoryTracking=detail -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -XX:+UseCompressedClassPointers -XX:CompressedClassSpaceSize=400M -XX:MetaspaceSize=128m -XX:MaxMetaspaceSize=400m -XX:+PrintGCDetails -Xloggc:${LOG_DIRS}/taskmanager.out -XX:+UseGCLogFileRotation -XX:GCLogFileSize=32K -XX:NumberOfGCLogFiles=6
containerized.heap-cutoff-min: 200
web.backpressure.cleanup-interval: 600000
```
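All three directories above are keyed by the same YARN application ID and container ID. The container ID embeds the application ID. The segment layout assumed here (`container_e<epoch>_<clusterTimestamp>_<appSeq>_<attempt>_<containerSeq>`) is read off the paths above. Hadoop's own `ContainerId` class is the authoritative parser. The helper below is a hypothetical sketch (`YarnIds.applicationIdOf` is an illustrative name) for getting from a container name in a log to the matching `application_...` directory.

```java
// Hypothetical helper: derives the YARN application ID from a container ID string,
// following the naming visible in the directories above. Hadoop's ContainerId is the real parser.
final class YarnIds {
    private YarnIds() {}

    static String applicationIdOf(String containerId) {
        String[] parts = containerId.split("_");
        if (parts.length < 5 || !parts[0].equals("container")) {
            throw new IllegalArgumentException("not a container id: " + containerId);
        }
        // With an epoch segment such as "e26" there are 6 parts; without it there are 5.
        int offset = parts[1].startsWith("e") ? 2 : 1;
        if (parts.length != offset + 4) {
            throw new IllegalArgumentException("unexpected container id: " + containerId);
        }
        // clusterTimestamp + application sequence number
        return "application_" + parts[offset] + "_" + parts[offset + 1];
    }

    public static void main(String[] args) {
        // prints application_1542713936025_0106
        System.out.println(applicationIdOf("container_e26_1542713936025_0106_01_000002"));
    }
}
```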

----------------------------------------------------------------------------

Type 1: Processing Time

Let's start with the simple one and look at how Processing Time works.

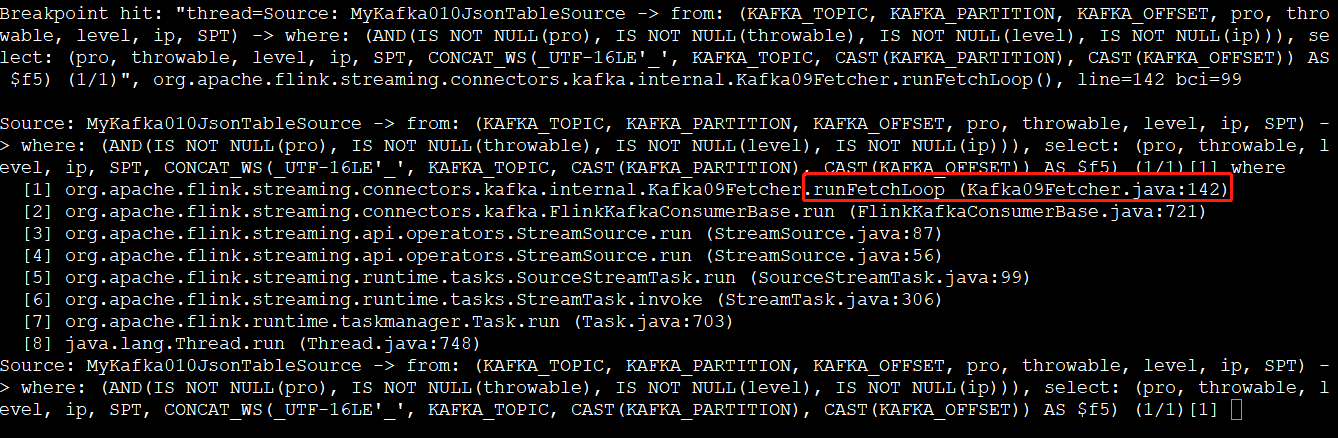
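In processing-time mode, the time used for windowing is the wall-clock time of the machine running the operator at the moment it handles the record. Nothing carried inside the record is used. The sketch below picks a tumbling window from such a clock value using the same arithmetic as Flink's `TimeWindow.getWindowStartWithOffset`. The class name, the one-minute window size and the fixed sample clock value are illustrative assumptions.

```java
// Sketch: assigning a record to a tumbling processing-time window.
final class ProcessingTimeWindows {
    private ProcessingTimeWindows() {}

    /** Same arithmetic as Flink's TimeWindow.getWindowStartWithOffset. */
    static long windowStart(long timestamp, long offset, long windowSize) {
        return timestamp - (timestamp - offset + windowSize) % windowSize;
    }

    /** Processing time: the "timestamp" is simply the operator machine's current clock. */
    static long assignToCurrentWindow(long windowSize) {
        long now = System.currentTimeMillis();
        return windowStart(now, 0L, windowSize);
    }

    public static void main(String[] args) {
        long oneMinute = 60_000L;
        // A fixed clock value (2018-11-30T07:55:01.136Z) so the result is reproducible
        System.out.println(windowStart(1_543_564_501_136L, 0L, oneMinute)); // 1543564500000
        System.out.println(assignToCurrentWindow(oneMinute));
    }
}
```

Because the clock is read at processing time, a record that arrives late or is replayed lands in whatever window is current at that moment. That is exactly the limitation that motivates looking at event time.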

1) Where Kafka messages are fetched

First, set a breakpoint at the location below, which is where raw Kafka messages start being fetched:

stop at org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher:142

Testing confirms the breakpoint is hit:

So the breakpoint really takes effect. Let's keep debugging!

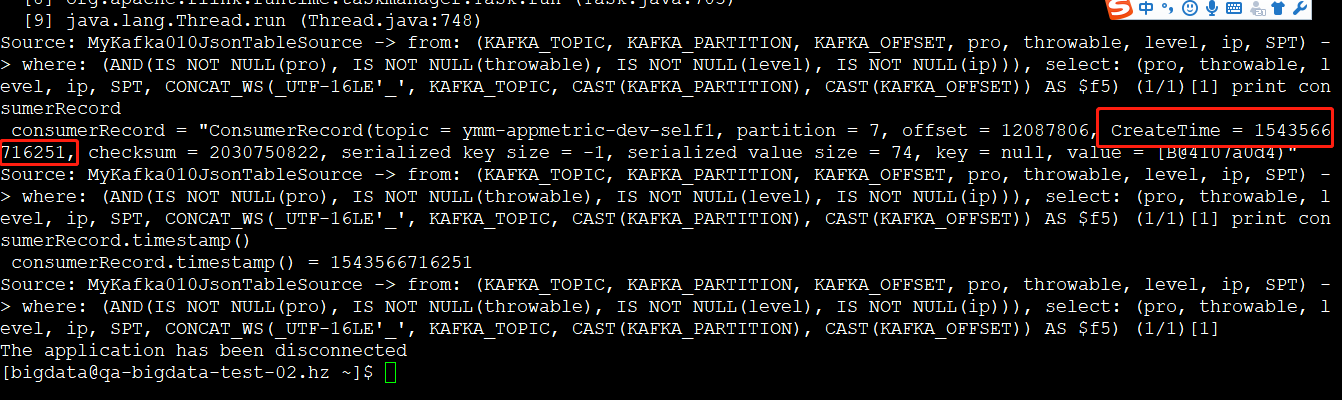

First, print the raw Kafka message:

print record

record = "ConsumerRecord(topic = ymm-appmetric-dev-self1, partition = 3, offset = 924608530, CreateTime = 1543564501136, checksum = 2586921120, serialized key size = -1, serialized value size = 73, key = null, value = [B@655248af)"

Then break at

stop at org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher:154

and execution enters:

[1] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[2] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[3] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[4] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[5] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[6] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[7] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[8] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[9] java.lang.Thread.run (Thread.java:748)

So let's set a breakpoint at

stop at org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher:89

There is one detail here. Look at the code:

```java
@Override
protected void emitRecord(
        T record,
        KafkaTopicPartitionState<TopicPartition> partition,
        long offset,
        ConsumerRecord<?, ?> consumerRecord) throws Exception {

    // we attach the Kafka 0.10 timestamp here
    emitRecordWithTimestamp(record, partition, offset, consumerRecord.timestamp());
}
```

What exactly is this `consumerRecord.timestamp()`?

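On inspection, this is the record's creation timestamp, and its timestamp type is CreateTime. This matches the `CreateTime = 1543564501136` field in the record printed above.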
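A Kafka timestamp is epoch milliseconds. With the topic's `message.timestamp.type` set to `CreateTime` it is supplied by the producer; with `LogAppendTime` the broker sets it instead. Converting the value printed by jdb makes it readable. The class name below is illustrative, and `Asia/Shanghai` is assumed as the local zone for a Hangzhou cluster.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZonedDateTime;

// Converts a Kafka CreateTime (epoch milliseconds) into readable date-times.
final class KafkaCreateTime {
    private KafkaCreateTime() {}

    static ZonedDateTime at(long createTimeMillis, String zone) {
        return Instant.ofEpochMilli(createTimeMillis).atZone(ZoneId.of(zone));
    }

    public static void main(String[] args) {
        long createTime = 1543564501136L; // CreateTime from the ConsumerRecord printed above
        System.out.println(Instant.ofEpochMilli(createTime)); // 2018-11-30T07:55:01.136Z
        System.out.println(at(createTime, "Asia/Shanghai"));  // 2018-11-30T15:55:01.136+08:00[Asia/Shanghai]
    }
}
```

This is the timestamp an event-time job could use. As the next steps show, the processing-time path discards it.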

Then we step into:

[1] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:392)

[2] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[3] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[4] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[5] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[6] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[7] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[8] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[9] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[10] java.lang.Thread.run (Thread.java:748)

At this point, the data in my row is:

print record

record = "ymm-appmetric-dev-self1,2,924282513,pro110,throwable112,level10,ip239,null"

Then we step into:

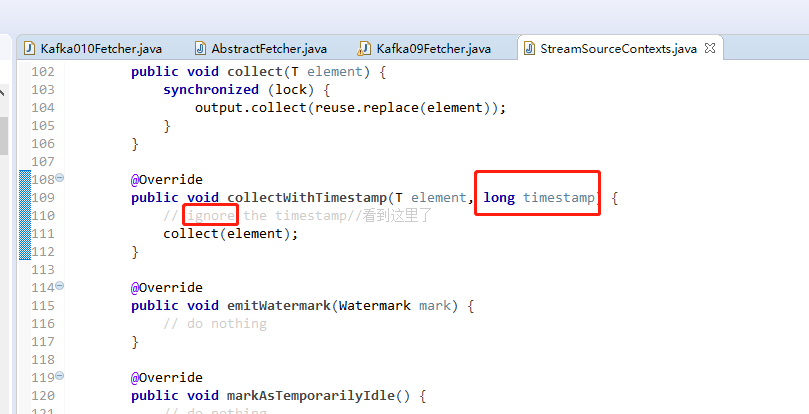

We can see that the original Kafka creation timestamp is thrown away here, leaving only the deserialized message.
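The drop is visible in the stack traces later in this section. `AbstractFetcher.emitRecordWithTimestamp` calls `StreamSourceContexts$NonTimestampContext.collectWithTimestamp`, and that context ignores the timestamp argument and simply calls `collect`. The sketch below models that behavior with illustrative stand-in types; `SourceContextModel`, `Rec`, `Ctx` and the two context classes are hypothetical, not Flink classes. For contrast, it includes a context that keeps the timestamp, which is roughly what an event-time source context does.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative stand-ins (not Flink classes): why the Kafka CreateTime is lost
// when the job runs on processing time.
final class SourceContextModel {
    /** A value plus an optional timestamp, loosely modeled on StreamRecord. */
    static final class Rec<T> {
        final T value;
        final long timestamp;
        final boolean hasTimestamp;

        Rec(T value, long timestamp, boolean hasTimestamp) {
            this.value = value;
            this.timestamp = timestamp;
            this.hasTimestamp = hasTimestamp;
        }
    }

    interface Ctx<T> {
        void collect(T element);
        void collectWithTimestamp(T element, long timestamp);
    }

    /** Mirrors the NonTimestampContext behavior: the timestamp argument is ignored. */
    static final class NonTimestampCtx<T> implements Ctx<T> {
        final List<Rec<T>> out = new ArrayList<>();
        public void collect(T element) { out.add(new Rec<>(element, 0L, false)); }
        public void collectWithTimestamp(T element, long timestamp) { collect(element); }
    }

    /** Hypothetical contrast: a context that attaches the given timestamp. */
    static final class TimestampKeepingCtx<T> implements Ctx<T> {
        final List<Rec<T>> out = new ArrayList<>();
        public void collect(T element) { out.add(new Rec<>(element, 0L, false)); }
        public void collectWithTimestamp(T element, long timestamp) { out.add(new Rec<>(element, timestamp, true)); }
    }

    public static void main(String[] args) {
        NonTimestampCtx<String> processingTime = new NonTimestampCtx<>();
        processingTime.collectWithTimestamp("row", 1543564501136L);
        System.out.println(processingTime.out.get(0).hasTimestamp); // false: CreateTime dropped

        TimestampKeepingCtx<String> eventTimeLike = new TimestampKeepingCtx<>();
        eventTimeLike.collectWithTimestamp("row", 1543564501136L);
        System.out.println(eventTimeLike.out.get(0).timestamp);     // 1543564501136
    }
}
```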

Execution continues into the `collect` function:

```java
@Override
public void collect(T element) {
    synchronized (lock) { // we are here
        output.collect(reuse.replace(element));
    }
}
```

Here the type of `reuse` is: reuse.getClass() = "class org.apache.flink.streaming.runtime.streamrecord.StreamRecord"

Let's first see what `replace` does!

```java
/**
 * Replace the currently stored value by the given new value. This returns a StreamElement
 * with the generic type parameter that matches the new value while keeping the old
 * timestamp.
 *
 * @param element Element to set in this stream value
 * @return Returns the StreamElement with replaced value
 */
@SuppressWarnings("unchecked")
public <X> StreamRecord<X> replace(X element) {
    this.value = (T) element;
    return (StreamRecord<X>) this;
}
```

Simple enough, no explanation needed!
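The key point of `replace` is object reuse. The source context keeps one `StreamRecord` and swaps the value into it for every element, so whatever timestamp fields it holds are carried along unchanged. On this processing-time path they were never set, which is why the `copy` comments below show `0` and `false`. The model below reproduces that behavior. `ReusableRecord` is an illustrative name, not the Flink class. The two-argument `replace` mirrors the `StreamRecord` variant that also sets a timestamp.

```java
// Illustrative model of StreamRecord's object reuse; NOT the Flink class itself.
final class ReusableRecord<T> {
    private T value;
    private long timestamp;       // only meaningful when hasTimestamp is true
    private boolean hasTimestamp;

    ReusableRecord(T value) {
        this.value = value;
    }

    /** Swaps in a new value on the SAME object and keeps the old timestamp fields. */
    @SuppressWarnings("unchecked")
    <X> ReusableRecord<X> replace(X element) {
        this.value = (T) element;
        return (ReusableRecord<X>) this;
    }

    /** Swaps in a new value and also sets a timestamp. */
    @SuppressWarnings("unchecked")
    <X> ReusableRecord<X> replace(X element, long timestamp) {
        this.timestamp = timestamp;
        this.hasTimestamp = true;
        this.value = (T) element;
        return (ReusableRecord<X>) this;
    }

    T getValue() { return value; }
    long getTimestamp() { return timestamp; }
    boolean hasTimestamp() { return hasTimestamp; }

    public static void main(String[] args) {
        ReusableRecord<Object> reuse = new ReusableRecord<>(null);
        ReusableRecord<String> first = reuse.replace("row-1");
        System.out.println(first.hasTimestamp()); // false: nothing ever set a timestamp
        ReusableRecord<String> second = reuse.replace("row-2");
        System.out.println(first == second);      // true: the same object is reused
    }
}
```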

Later, the record goes through a copy operation:

/**

* Creates a copy of this stream record. Uses the copied value as the value for the new

* record, i.e., only copies timestamp fields.

*/

public StreamRecord<T> copy(T valueCopy) {//看到这里了

StreamRecord<T> copy = new StreamRecord<>(valueCopy);//看到这里了

copy.timestamp = this.timestamp;//0

copy.hasTimestamp = this.hasTimestamp;//false

return copy;

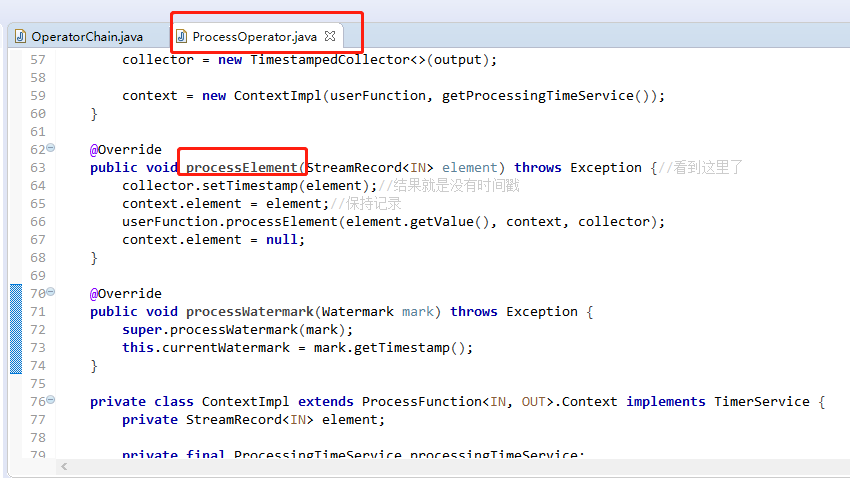

}然后,会执行到 org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:64)

and then continues to:

The userFunction here is:

print userFunction

userFunction = "org.apache.flink.table.runtime.CRowOutputProcessRunner@729150b0"

The current breakpoint stack is:

[1] org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement (CRowOutputProcessRunner.scala:63)

[2] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[3] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[4] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[5] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[6] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[7] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[8] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect (StreamSourceContexts.java:104)

[9] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp (StreamSourceContexts.java:111)

[10] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:398)

[11] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[12] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[13] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[14] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[15] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[16] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[17] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[18] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[19] java.lang.Thread.run (Thread.java:748)

After wading through a stretch of very unpleasant Scala code, we end up here:

[1] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:28)

[2] DataStreamSourceConversion$4328.processElement (null)

[3] org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement (CRowOutputProcessRunner.scala:67)

[4] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[5] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[6] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[7] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[8] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[9] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[10] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect (StreamSourceContexts.java:104)

[11] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp (StreamSourceContexts.java:111)

[12] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:398)

[13] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[14] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[15] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[16] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[17] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[18] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[19] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[20] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[21] java.lang.Thread.run (Thread.java:748)

One step further, we get here:

[1] org.apache.flink.streaming.api.operators.TimestampedCollector.collect (TimestampedCollector.java:51)

[2] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:37)

[3] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:28)

[4] DataStreamSourceConversion$4328.processElement (null)

[5] org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement (CRowOutputProcessRunner.scala:67)

[6] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[7] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[8] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[9] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[10] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[11] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[12] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect (StreamSourceContexts.java:104)

[13] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp (StreamSourceContexts.java:111)

[14] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:398)

[15] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[16] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[17] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[18] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[19] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[20] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[21] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[22] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[23] java.lang.Thread.run (Thread.java:748)

So the breakpoint goes at

stop at org.apache.flink.streaming.api.operators.TimestampedCollector:51

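--- Over the weekend I visited a classmate. Hangzhou's cityscape doesn't feel as nice as Nanjing's... and the roads are frustrating; I'm not used to driving there.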

OK, let's get back on the debugging trail!

Then we arrive here:

[1] org.apache.flink.table.runtime.functions.ScalarFunctions$.concat_ws (ScalarFunctions.scala:62)

[2] org.apache.flink.table.runtime.functions.ScalarFunctions.concat_ws (null)

[3] DataStreamCalcRule$64.processElement (null)

[4] org.apache.flink.table.runtime.CRowProcessRunner.processElement (CRowProcessRunner.scala:66)

[5] org.apache.flink.table.runtime.CRowProcessRunner.processElement (CRowProcessRunner.scala:35)

[6] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[7] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[8] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[9] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[10] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[11] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[12] org.apache.flink.streaming.api.operators.TimestampedCollector.collect (TimestampedCollector.java:51)

[13] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:37)

[14] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:28)

[15] DataStreamSourceConversion$20.processElement (null)

[16] org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement (CRowOutputProcessRunner.scala:67)

[17] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[18] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[19] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[20] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[21] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[22] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[23] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect (StreamSourceContexts.java:104)

[24] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp (StreamSourceContexts.java:111)

[25] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:398)

[26] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[27] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[28] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[29] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[30] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[31] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[32] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[33] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[34] java.lang.Thread.run (Thread.java:748)

Then the concat_ws function is executed:

```scala
import scala.annotation.varargs

// Excerpt: concat_ws lives in object ScalarFunctions (see ScalarFunctions$ in the stack above)
object ScalarFunctions {

  /**
   * Returns the string that results from concatenating the arguments and separator.
   * Returns NULL If the separator is NULL.
   *
   * Note: CONCAT_WS() does not skip empty strings. However, it does skip any NULL values after
   * the separator argument.
   */
  @varargs
  def concat_ws(separator: String, args: String*): String = {
    if (null == separator) {
      return null
    }

    val sb = new StringBuilder
    var i = 0
    var hasValueAppended = false
    while (i < args.length) {
      if (null != args(i)) {
        if (hasValueAppended) {
          sb.append(separator)
        }
        sb.append(args(i))
        hasValueAppended = true
      }
      i = i + 1
    }
    sb.toString
  }
}
```

What isn't clear to me is why the expression in the SELECT is evaluated first, given that the GROUP BY hasn't run yet! I'll set that aside for now and move on.
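To test the NULL versus empty-string behavior without running a Flink job, here is a direct Java port of the Scala `concat_ws` above. It is a sketch for experimentation, not Flink code. The sample arguments reuse fields from the row printed earlier, plus a NULL and an empty string.

```java
// Java port of the Scala concat_ws above, for experimenting with its
// NULL/empty-string semantics outside Flink. Not Flink's own code.
final class ConcatWs {
    private ConcatWs() {}

    static String concatWs(String separator, String... args) {
        if (separator == null) {
            return null; // NULL separator -> NULL result
        }
        StringBuilder sb = new StringBuilder();
        boolean hasValueAppended = false;
        for (String arg : args) {
            if (arg != null) {         // NULL arguments are skipped...
                if (hasValueAppended) {
                    sb.append(separator);
                }
                sb.append(arg);        // ...but empty strings are kept
                hasValueAppended = true;
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(concatWs(",", "pro110", null, "", "ip239")); // pro110,,ip239
    }
}
```

The empty string still produces a separator, while the NULL leaves no trace.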

Then we get here:

[1] org.apache.flink.streaming.runtime.io.StreamRecordWriter.emit (StreamRecordWriter.java:80)

[2] org.apache.flink.streaming.runtime.io.RecordWriterOutput.pushToRecordWriter (RecordWriterOutput.java:107)

[3] org.apache.flink.streaming.runtime.io.RecordWriterOutput.collect (RecordWriterOutput.java:89)

[4] org.apache.flink.streaming.runtime.io.RecordWriterOutput.collect (RecordWriterOutput.java:45)

[5] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[6] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[7] org.apache.flink.streaming.api.operators.TimestampedCollector.collect (TimestampedCollector.java:51)

[8] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:37)

[9] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:28)

[10] DataStreamCalcRule$64.processElement (null)

[11] org.apache.flink.table.runtime.CRowProcessRunner.processElement (CRowProcessRunner.scala:66)

[12] org.apache.flink.table.runtime.CRowProcessRunner.processElement (CRowProcessRunner.scala:35)

[13] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[14] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[15] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[16] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[17] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[18] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[19] org.apache.flink.streaming.api.operators.TimestampedCollector.collect (TimestampedCollector.java:51)

[20] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:37)

[21] org.apache.flink.table.runtime.CRowWrappingCollector.collect (CRowWrappingCollector.scala:28)

[22] DataStreamSourceConversion$20.processElement (null)

[23] org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement (CRowOutputProcessRunner.scala:67)

[24] org.apache.flink.streaming.api.operators.ProcessOperator.processElement (ProcessOperator.java:66)

[25] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator (OperatorChain.java:560)

[26] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:535)

[27] org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect (OperatorChain.java:515)

[28] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:679)

[29] org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect (AbstractStreamOperator.java:657)

[30] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect (StreamSourceContexts.java:104)

[31] org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp (StreamSourceContexts.java:111)

[32] org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp (AbstractFetcher.java:398)

[33] org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord (Kafka010Fetcher.java:89)

[34] org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop (Kafka09Fetcher.java:154)

[35] org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run (FlinkKafkaConsumerBase.java:721)

[36] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:87)

[37] org.apache.flink.streaming.api.operators.StreamSource.run (StreamSource.java:56)

[38] org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run (SourceStreamTask.java:99)

[39] org.apache.flink.streaming.runtime.tasks.StreamTask.invoke (StreamTask.java:306)

[40] org.apache.flink.runtime.taskmanager.Task.run (Task.java:703)

[41] java.lang.Thread.run (Thread.java:748)

To keep debugging, set a breakpoint: stop at org.apache.flink.streaming.runtime.io.StreamRecordWriter:125

This is where grouping (key partitioning) comes in!

The source code:

```java
public void emit(T record) throws IOException, InterruptedException {
    // Ask the channel selector which output channel(s) this record goes to
    for (int targetChannel : channelSelector.selectChannels(record, numChannels)) {
        sendToTarget(record, targetChannel);
    }
}
```

Here the class of channelSelector is: channelSelector.getClass() = "class org.apache.flink.streaming.runtime.partitioner.KeyGroupStreamPartitioner"

The relevant code:

```java
@Override
public int[] selectChannels(
        SerializationDelegate<StreamRecord<T>> record,
        int numberOfOutputChannels) {
    K key;
    try { // we are here
        key = keySelector.getKey(record.getInstance().getValue());
    } catch (Exception e) {
        throw new RuntimeException("Could not extract key from " + record.getInstance().getValue(), e);
    }
    // Key -> key group -> one of the numberOfOutputChannels downstream channels
    returnArray[0] = KeyGroupRangeAssignment.assignKeyToParallelOperator(key, maxParallelism, numberOfOutputChannels);
    return returnArray;
}
```

Keep following it: set a breakpoint with stop in org.apache.flink.runtime.io.network.api.writer.RecordWriter.sendToTarget
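Before going further, here is the key-to-channel mapping used by `selectChannels`. A key is first hashed into one of `maxParallelism` key groups. Contiguous ranges of key groups are then assigned to the parallel instances with `keyGroupId * parallelism / maxParallelism`. In this post, `numberOfOutputChannels` plays the role of the parallelism. In the sketch below, **the hash function is an assumption**: Flink applies its own murmur-based mixing to `key.hashCode()`, so concrete channel numbers from this sketch will not match a real job. `KeyGroupSketch` and its methods are illustrative names, and `maxParallelism = 128` is an assumed value.

```java
// Sketch of key -> key group -> channel assignment, mirroring the shape of
// KeyGroupRangeAssignment. ASSUMPTION: keyGroupHash is a stand-in, NOT Flink's murmur hash.
final class KeyGroupSketch {
    private KeyGroupSketch() {}

    /** Stand-in bit mixing; the result is forced non-negative. */
    static int keyGroupHash(int hashCode) {
        int h = hashCode * 0x9E3779B9;
        h ^= (h >>> 16);
        return h & Integer.MAX_VALUE;
    }

    /** key -> key group in [0, maxParallelism) */
    static int computeKeyGroup(Object key, int maxParallelism) {
        return keyGroupHash(key.hashCode()) % maxParallelism;
    }

    /** key group -> operator (channel) index in [0, parallelism) */
    static int operatorIndexForKeyGroup(int maxParallelism, int parallelism, int keyGroupId) {
        return keyGroupId * parallelism / maxParallelism;
    }

    static int assignKeyToParallelOperator(Object key, int maxParallelism, int parallelism) {
        return operatorIndexForKeyGroup(maxParallelism, parallelism, computeKeyGroup(key, maxParallelism));
    }

    public static void main(String[] args) {
        int maxParallelism = 128; // assumed value for illustration
        int parallelism = 4;
        for (String key : new String[] {"pro110", "pro111", "pro112"}) {
            System.out.println(key + " -> channel " + assignKeyToParallelOperator(key, maxParallelism, parallelism));
        }
    }
}
```

Because whole key groups move together, changing the parallelism only reassigns key-group ranges and never splits the state of a single key.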

Inside `sendToTarget`, the following is then called:

```java
if (flushAlways) {
    targetPartition.flush(targetChannel);
}
```

[1] print targetPartition.getClass()

targetPartition.getClass() = "class org.apache.flink.runtime.io.network.partition.ResultPartition"

which then calls:

```java
@Override
public void flush() { // we are here
    synchronized (buffers) {
        if (buffers.isEmpty()) { // not taken
            return;
        }
        flushRequested = !buffers.isEmpty(); // true
        notifyDataAvailable();
    }
}
```
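Conceptually, `flush` is the producer half of a handoff. The writer marks the queued buffers as ready and signals a reader. In the thread dump that follows, Thread 3247 is a downstream task blocked in `SingleInputGate.getNextBufferOrEvent` inside `Object.wait`, which is the kind of reader being woken. The model below is hypothetical and heavily simplified: `FlushHandoff`, `add` and `take` are illustrative names. `notifyAll` stands in for `notifyDataAvailable()`, whose real path goes through listener callbacks rather than a single monitor.

```java
import java.util.ArrayDeque;

// Hypothetical, heavily simplified model: the writer queues buffers and, on flush,
// wakes a reader that is blocked in wait(). Not Flink's classes.
final class FlushHandoff {
    private final ArrayDeque<String> buffers = new ArrayDeque<>();
    private boolean flushRequested;

    void add(String buffer) {
        synchronized (buffers) {
            buffers.add(buffer);
        }
    }

    void flush() {
        synchronized (buffers) {
            if (buffers.isEmpty()) {
                return; // nothing to hand over
            }
            flushRequested = true;
            buffers.notifyAll(); // stands in for notifyDataAvailable()
        }
    }

    /** Reader side: blocks until a flushed buffer is available. */
    String take() throws InterruptedException {
        synchronized (buffers) {
            while (!flushRequested || buffers.isEmpty()) {
                buffers.wait();
            }
            String buffer = buffers.poll();
            if (buffers.isEmpty()) {
                flushRequested = false;
            }
            return buffer;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        FlushHandoff handoff = new FlushHandoff();
        Thread reader = new Thread(() -> {
            try {
                System.out.println("reader got " + handoff.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "reader");
        reader.start();
        handoff.add("buffer-1");
        handoff.flush();
        reader.join();
    }
}
```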

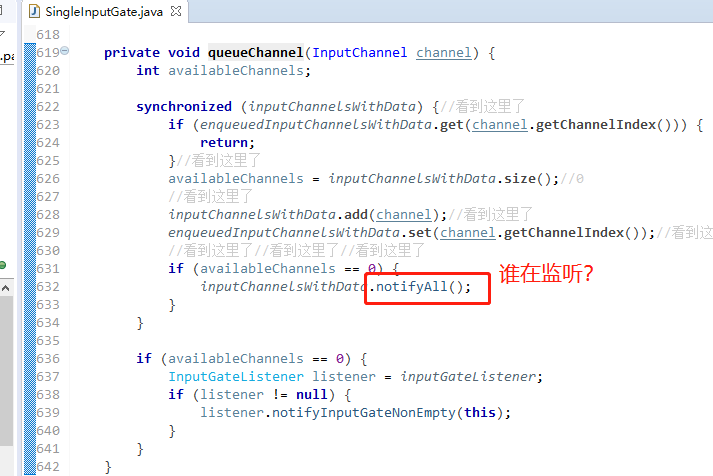

Who is listening for this notification? How do we find out? A thread dump, of course!
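The dump below looks like the output of `jstack -F <pid>`. The `Attaching to process ID ... Debugger attached successfully` header is typical of its forced mode; plain `jstack <pid>` also works when the JVM is responsive. If you can run code inside the JVM itself, `Thread.getAllStackTraces()` gives similar information. A minimal sketch (`ThreadDumps` is an illustrative name):

```java
import java.util.Map;

// In-process alternative to an external thread dump: prints every live thread's stack.
final class ThreadDumps {
    private ThreadDumps() {}

    static String dump() {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<Thread, StackTraceElement[]> e : Thread.getAllStackTraces().entrySet()) {
            Thread t = e.getKey();
            sb.append("Thread ").append(t.getName())
              .append(" (state = ").append(t.getState()).append(")\n");
            for (StackTraceElement frame : e.getValue()) {
                sb.append(" - ").append(frame).append('\n');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(dump());
    }
}
```

Either way, the things to look for are threads parked in `wait()` on network input (the listeners) and the timer threads that fire processing-time windows.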

Attaching to process ID 3188, please wait...

Debugger attached successfully.

Server compiler detected.

JVM version is 25.131-b11

Deadlock Detection:

No deadlocks found.

Thread 23310: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- scala.concurrent.forkjoin.ForkJoinPool.idleAwaitWork(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue, long, long) @bci=129, line=2135 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.scan(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=463, line=2067 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=8, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 22759: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- scala.concurrent.forkjoin.ForkJoinPool.scan(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=525, line=2075 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=8, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 21277: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- scala.concurrent.forkjoin.ForkJoinPool.scan(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=525, line=2075 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=8, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 21189: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- scala.concurrent.forkjoin.ForkJoinPool.scan(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=525, line=2075 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=8, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 3256: (state = BLOCKED)

- org.apache.flink.streaming.runtime.streamrecord.StreamRecord.replace(java.lang.Object) @bci=6, line=107 (Interpreted frame)

- org.apache.flink.streaming.api.operators.TimestampedCollector.collect(java.lang.Object) @bci=9, line=51 (Interpreted frame)

- org.apache.flink.table.runtime.aggregate.TimeWindowPropertyCollector.collect(java.lang.Object) @bci=122, line=65 (Compiled frame [deoptimized])

- org.apache.flink.table.runtime.aggregate.IncrementalAggregateWindowFunction.apply(org.apache.flink.types.Row, org.apache.flink.streaming.api.windowing.windows.Window, java.lang.Iterable, org.apache.flink.util.Collector) @bci=120, line=74 (Compiled frame [deoptimized])

- org.apache.flink.table.runtime.aggregate.IncrementalAggregateTimeWindowFunction.apply(org.apache.flink.types.Row, org.apache.flink.streaming.api.windowing.windows.TimeWindow, java.lang.Iterable, org.apache.flink.util.Collector) @bci=39, line=72 (Compiled frame [deoptimized])

- org.apache.flink.table.runtime.aggregate.IncrementalAggregateTimeWindowFunction.apply(java.lang.Object, org.apache.flink.streaming.api.windowing.windows.Window, java.lang.Iterable, org.apache.flink.util.Collector) @bci=12, line=39 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.operators.windowing.functions.InternalSingleValueWindowFunction.process(java.lang.Object, org.apache.flink.streaming.api.windowing.windows.Window, org.apache.flink.streaming.runtime.operators.windowing.functions.InternalWindowFunction$InternalWindowContext, java.lang.Object, org.apache.flink.util.Collector) @bci=16, line=46 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.operators.windowing.WindowOperator.emitWindowContents(org.apache.flink.streaming.api.windowing.windows.Window, java.lang.Object) @bci=43, line=550 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.operators.windowing.WindowOperator.onProcessingTime(org.apache.flink.streaming.api.operators.InternalTimer) @bci=141, line=505 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.HeapInternalTimerService.onProcessingTime(long) @bci=71, line=266 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.tasks.SystemProcessingTimeService$TriggerTask.run() @bci=25, line=281 (Interpreted frame)

- java.util.concurrent.Executors$RunnableAdapter.call() @bci=4, line=511 (Compiled frame [deoptimized])

- java.util.concurrent.FutureTask.run() @bci=42, line=266 (Interpreted frame)

- java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask) @bci=1, line=180 (Compiled frame [deoptimized])

- java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run() @bci=30, line=293 (Compiled frame [deoptimized])

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3252: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.lang.Object.wait() @bci=2, line=502 (Compiled frame)

- org.apache.flink.streaming.connectors.kafka.internal.Handover.produce(org.apache.kafka.clients.consumer.ConsumerRecords) @bci=30, line=117 (Compiled frame)

- org.apache.flink.streaming.connectors.kafka.internal.KafkaConsumerThread.run() @bci=378, line=265 (Interpreted frame)

Thread 3251: (state = BLOCKED)

- java.lang.Thread.sleep(long) @bci=0 (Compiled frame; information may be imprecise)

- org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase$2.run() @bci=123, line=701 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3250: (state = BLOCKED)

- org.apache.flink.streaming.runtime.tasks.SystemProcessingTimeService$RepeatedTriggerTask.run() @bci=7, line=325 (Interpreted frame)

- java.util.concurrent.Executors$RunnableAdapter.call() @bci=4, line=511 (Compiled frame [deoptimized])

- java.util.concurrent.FutureTask.runAndReset() @bci=47, line=308 (Interpreted frame)

- java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask) @bci=1, line=180 (Interpreted frame)

- java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run() @bci=37, line=294 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3248: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.kafka.common.network.Selector.select(long) @bci=35, line=489 (Compiled frame)

- org.apache.kafka.common.network.Selector.poll(long) @bci=53, line=298 (Compiled frame)

- org.apache.kafka.clients.NetworkClient.poll(long, long) @bci=36, line=349 (Compiled frame)

- org.apache.kafka.clients.producer.internals.Sender.run(long) @bci=415, line=225 (Compiled frame)

- org.apache.kafka.clients.producer.internals.Sender.run() @bci=27, line=126 (Compiled frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3247: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.lang.Object.wait() @bci=2, line=502 (Compiled frame)

- org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate.getNextBufferOrEvent(boolean) @bci=80, line=533 (Compiled frame)

- org.apache.flink.runtime.io.network.partition.consumer.SingleInputGate.getNextBufferOrEvent() @bci=2, line=502 (Compiled frame)

- org.apache.flink.streaming.runtime.io.BarrierTracker.getNextNonBlocked() @bci=4, line=94 (Compiled frame)

- org.apache.flink.streaming.runtime.io.StreamInputProcessor.processInput() @bci=285, line=209 (Compiled frame)

- org.apache.flink.streaming.runtime.tasks.OneInputStreamTask.run() @bci=13, line=103 (Compiled frame)

- org.apache.flink.streaming.runtime.tasks.StreamTask.invoke() @bci=270, line=306 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.Task.run() @bci=787, line=703 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3246: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Interpreted frame)

- java.lang.ref.ReferenceQueue.remove(long) @bci=59, line=143 (Compiled frame)

- java.lang.ref.ReferenceQueue.remove() @bci=2, line=164 (Compiled frame)

- org.apache.flink.core.fs.SafetyNetCloseableRegistry$CloseableReaperThread.run() @bci=11, line=193 (Interpreted frame)

Thread 3245: (state = BLOCKED)

- org.apache.flink.streaming.runtime.io.RecordWriterOutput.pushToRecordWriter(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=36, line=112 (Interpreted frame)

- org.apache.flink.streaming.runtime.io.RecordWriterOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=10, line=89 (Interpreted frame)

- org.apache.flink.streaming.runtime.io.RecordWriterOutput.collect(java.lang.Object) @bci=5, line=45 (Interpreted frame)

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=14, line=679 (Interpreted frame)

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(java.lang.Object) @bci=5, line=657 (Interpreted frame)

- org.apache.flink.streaming.api.operators.TimestampedCollector.collect(java.lang.Object) @bci=12, line=51 (Interpreted frame)

- org.apache.flink.table.runtime.CRowWrappingCollector.collect(org.apache.flink.types.Row) @bci=16, line=37 (Interpreted frame)

- org.apache.flink.table.runtime.CRowWrappingCollector.collect(java.lang.Object) @bci=5, line=28 (Interpreted frame)

- DataStreamCalcRule$64.processElement(java.lang.Object, org.apache.flink.streaming.api.functions.ProcessFunction$Context, org.apache.flink.util.Collector) @bci=935 (Interpreted frame)

- org.apache.flink.table.runtime.CRowProcessRunner.processElement(org.apache.flink.table.runtime.types.CRow, org.apache.flink.streaming.api.functions.ProcessFunction$Context, org.apache.flink.util.Collector) @bci=32, line=66 (Interpreted frame)

- org.apache.flink.table.runtime.CRowProcessRunner.processElement(java.lang.Object, org.apache.flink.streaming.api.functions.ProcessFunction$Context, org.apache.flink.util.Collector) @bci=7, line=35 (Interpreted frame)

- org.apache.flink.streaming.api.operators.ProcessOperator.processElement(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=36, line=66 (Interpreted frame)

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=42, line=560 (Interpreted frame)

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=10, line=535 (Interpreted frame)

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect(java.lang.Object) @bci=5, line=515 (Interpreted frame)

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=14, line=679 (Interpreted frame)

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(java.lang.Object) @bci=5, line=657 (Interpreted frame)

- org.apache.flink.streaming.api.operators.TimestampedCollector.collect(java.lang.Object) @bci=12, line=51 (Interpreted frame)

- org.apache.flink.table.runtime.CRowWrappingCollector.collect(org.apache.flink.types.Row) @bci=16, line=37 (Compiled frame [deoptimized])

- org.apache.flink.table.runtime.CRowWrappingCollector.collect(java.lang.Object) @bci=5, line=28 (Interpreted frame)

- DataStreamSourceConversion$20.processElement(java.lang.Object, org.apache.flink.streaming.api.functions.ProcessFunction$Context, org.apache.flink.util.Collector) @bci=528 (Compiled frame [deoptimized])

- org.apache.flink.table.runtime.CRowOutputProcessRunner.processElement(java.lang.Object, org.apache.flink.streaming.api.functions.ProcessFunction$Context, org.apache.flink.util.Collector) @bci=29, line=67 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.ProcessOperator.processElement(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=36, line=66 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.pushToOperator(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=42, line=560 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=10, line=535 (Compiled frame [deoptimized])

- org.apache.flink.streaming.runtime.tasks.OperatorChain$CopyingChainingOutput.collect(java.lang.Object) @bci=5, line=515 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(org.apache.flink.streaming.runtime.streamrecord.StreamRecord) @bci=14, line=679 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.AbstractStreamOperator$CountingOutput.collect(java.lang.Object) @bci=5, line=657 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect(java.lang.Object) @bci=19, line=104 (Compiled frame [deoptimized])

- org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp(java.lang.Object, long) @bci=2, line=111 (Compiled frame [deoptimized])

- org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordWithTimestamp(java.lang.Object, org.apache.flink.streaming.connectors.kafka.internals.KafkaTopicPartitionState, long, long) @bci=26, line=398 (Compiled frame [deoptimized])

- org.apache.flink.streaming.connectors.kafka.internal.Kafka010Fetcher.emitRecord(java.lang.Object, org.apache.flink.streaming.connectors.kafka.internals.KafkaTopicPartitionState, long, org.apache.kafka.clients.consumer.ConsumerRecord) @bci=9, line=89 (Interpreted frame)

- org.apache.flink.streaming.connectors.kafka.internal.Kafka09Fetcher.runFetchLoop() @bci=175, line=154 (Compiled frame [deoptimized])

- org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run(org.apache.flink.streaming.api.functions.source.SourceFunction$SourceContext) @bci=224, line=721 (Interpreted frame)

- org.apache.flink.streaming.api.operators.StreamSource.run(java.lang.Object, org.apache.flink.streaming.runtime.streamstatus.StreamStatusMaintainer, org.apache.flink.streaming.api.operators.Output) @bci=98, line=87 (Interpreted frame)

- org.apache.flink.streaming.api.operators.StreamSource.run(java.lang.Object, org.apache.flink.streaming.runtime.streamstatus.StreamStatusMaintainer) @bci=7, line=56 (Interpreted frame)

- org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run() @bci=15, line=99 (Interpreted frame)

- org.apache.flink.streaming.runtime.tasks.StreamTask.invoke() @bci=270, line=306 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.Task.run() @bci=787, line=703 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3244: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- java.util.concurrent.locks.LockSupport.parkNanos(java.lang.Object, long) @bci=20, line=215 (Compiled frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(long) @bci=78, line=2078 (Interpreted frame)

- java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take() @bci=124, line=1093 (Compiled frame)

- java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take() @bci=1, line=809 (Compiled frame)

- java.util.concurrent.ThreadPoolExecutor.getTask() @bci=149, line=1067 (Compiled frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=26, line=1127 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3242: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.util.TimerThread.mainLoop() @bci=201, line=552 (Interpreted frame)

- java.util.TimerThread.run() @bci=1, line=505 (Interpreted frame)

Thread 3241: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.util.TimerThread.mainLoop() @bci=201, line=552 (Interpreted frame)

- java.util.TimerThread.run() @bci=1, line=505 (Interpreted frame)

Thread 3240: (state = BLOCKED)

- java.net.URLClassLoader.findClass(java.lang.String) @bci=44, line=381 (Interpreted frame)

- java.lang.ClassLoader.loadClass(java.lang.String, boolean) @bci=70, line=424 (Compiled frame)

- java.lang.ClassLoader.loadClass(java.lang.String, boolean) @bci=38, line=411 (Compiled frame)

- sun.misc.Launcher$AppClassLoader.loadClass(java.lang.String, boolean) @bci=81, line=335 (Compiled frame)

- java.lang.ClassLoader.loadClass(java.lang.String) @bci=3, line=357 (Compiled frame)

- akka.remote.RemoteSystemDaemon.$bang(java.lang.Object, akka.actor.ActorRef) @bci=638, line=205 (Interpreted frame)

- akka.actor.ActorRef.tell(java.lang.Object, akka.actor.ActorRef) @bci=6, line=130 (Compiled frame)

- akka.event.AddressTerminatedTopic$$anonfun$publish$1.apply(akka.actor.ActorRef) @bci=11, line=53 (Interpreted frame)

- akka.event.AddressTerminatedTopic$$anonfun$publish$1.apply(java.lang.Object) @bci=5, line=53 (Interpreted frame)

- scala.collection.immutable.Set$Set3.foreach(scala.Function1) @bci=5, line=163 (Interpreted frame)

- akka.event.AddressTerminatedTopic.publish(akka.actor.AddressTerminated) @bci=19, line=53 (Interpreted frame)

- akka.remote.RemoteWatcher.publishAddressTerminated(akka.actor.Address) @bci=26, line=173 (Interpreted frame)

- akka.remote.RemoteWatcher$$anonfun$reapUnreachable$1.apply(akka.actor.Address) @bci=75, line=167 (Interpreted frame)

- akka.remote.RemoteWatcher$$anonfun$reapUnreachable$1.apply(java.lang.Object) @bci=5, line=163 (Interpreted frame)

- scala.collection.mutable.HashMap$$anon$1$$anonfun$foreach$2.apply(scala.collection.mutable.DefaultEntry) @bci=8, line=134 (Interpreted frame)

- scala.collection.mutable.HashMap$$anon$1$$anonfun$foreach$2.apply(java.lang.Object) @bci=5, line=134 (Interpreted frame)

- scala.collection.mutable.HashTable$class.foreachEntry(scala.collection.mutable.HashTable, scala.Function1) @bci=38, line=236 (Compiled frame)

- scala.collection.mutable.HashMap.foreachEntry(scala.Function1) @bci=2, line=40 (Compiled frame)

- scala.collection.mutable.HashMap$$anon$1.foreach(scala.Function1) @bci=13, line=134 (Compiled frame)

- akka.remote.RemoteWatcher.reapUnreachable() @bci=12, line=163 (Compiled frame)

- akka.remote.RemoteWatcher$$anonfun$receive$1.applyOrElse(java.lang.Object, scala.Function1) @bci=171, line=129 (Interpreted frame)

- akka.actor.Actor$class.aroundReceive(akka.actor.Actor, scala.PartialFunction, java.lang.Object) @bci=8, line=502 (Compiled frame)

- akka.remote.RemoteWatcher.aroundReceive(scala.PartialFunction, java.lang.Object) @bci=3, line=86 (Compiled frame)

- akka.actor.ActorCell.receiveMessage(java.lang.Object) @bci=15, line=526 (Compiled frame)

- akka.actor.ActorCell.invoke(akka.dispatch.Envelope) @bci=59, line=495 (Compiled frame)

- akka.dispatch.Mailbox.processMailbox(int, long) @bci=24, line=257 (Compiled frame)

- akka.dispatch.Mailbox.run() @bci=20, line=224 (Compiled frame)

- akka.dispatch.Mailbox.exec() @bci=1, line=234 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinTask.doExec() @bci=10, line=260 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(scala.concurrent.forkjoin.ForkJoinTask) @bci=10, line=1339 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=11, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 3239: (state = BLOCKED)

- java.lang.Thread.sleep(long) @bci=0 (Compiled frame; information may be imprecise)

- org.apache.flink.shaded.akka.org.jboss.netty.util.HashedWheelTimer$Worker.waitForNextTick() @bci=81, line=445 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.HashedWheelTimer$Worker.run() @bci=43, line=364 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3237: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Interpreted frame)

- java.util.concurrent.locks.LockSupport.park(java.lang.Object) @bci=14, line=175 (Interpreted frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await() @bci=42, line=2039 (Interpreted frame)

- java.util.concurrent.LinkedBlockingQueue.take() @bci=29, line=442 (Interpreted frame)

- org.apache.flink.runtime.io.disk.iomanager.IOManagerAsync$ReaderThread.run() @bci=24, line=355 (Interpreted frame)

Thread 3236: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Interpreted frame)

- java.util.concurrent.locks.LockSupport.park(java.lang.Object) @bci=14, line=175 (Interpreted frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await() @bci=42, line=2039 (Interpreted frame)

- java.util.concurrent.LinkedBlockingQueue.take() @bci=29, line=442 (Interpreted frame)

- org.apache.flink.runtime.io.disk.iomanager.IOManagerAsync$WriterThread.run() @bci=24, line=461 (Interpreted frame)

Thread 3235: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.select(boolean) @bci=62, line=622 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.run() @bci=25, line=310 (Interpreted frame)

- org.apache.flink.shaded.netty4.io.netty.util.concurrent.SingleThreadEventExecutor$2.run() @bci=13, line=111 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3234: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.select(boolean) @bci=62, line=622 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.run() @bci=25, line=310 (Interpreted frame)

- org.apache.flink.shaded.netty4.io.netty.util.concurrent.SingleThreadEventExecutor$2.run() @bci=13, line=111 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3233: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.select(boolean) @bci=62, line=622 (Compiled frame)

- org.apache.flink.shaded.netty4.io.netty.channel.nio.NioEventLoop.run() @bci=25, line=310 (Interpreted frame)

- org.apache.flink.shaded.netty4.io.netty.util.concurrent.SingleThreadEventExecutor$2.run() @bci=13, line=111 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3231: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Compiled frame; information may be imprecise)

- scala.concurrent.forkjoin.ForkJoinPool.scan(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=525, line=2075 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=8, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 3230: (state = IN_NATIVE)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Interpreted frame)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Interpreted frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Interpreted frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Interpreted frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Interpreted frame)

- sun.nio.ch.SelectorImpl.select() @bci=2, line=101 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioServerBoss.select(java.nio.channels.Selector) @bci=1, line=163 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioServerBoss.run() @bci=1, line=42 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3229: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.SelectorUtil.select(java.nio.channels.Selector) @bci=4, line=68 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.select(java.nio.channels.Selector) @bci=1, line=434 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioWorker.run() @bci=1, line=89 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioWorker.run() @bci=1, line=178 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3228: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.SelectorUtil.select(java.nio.channels.Selector) @bci=4, line=68 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.select(java.nio.channels.Selector) @bci=1, line=434 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioWorker.run() @bci=1, line=89 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioWorker.run() @bci=1, line=178 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3227: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.SelectorUtil.select(java.nio.channels.Selector) @bci=4, line=68 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.select(java.nio.channels.Selector) @bci=1, line=434 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioClientBoss.run() @bci=1, line=42 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3226: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.SelectorUtil.select(java.nio.channels.Selector) @bci=4, line=68 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.select(java.nio.channels.Selector) @bci=1, line=434 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioWorker.run() @bci=1, line=89 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioWorker.run() @bci=1, line=178 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3225: (state = BLOCKED)

- sun.nio.ch.EPollArrayWrapper.epollWait(long, int, long, int) @bci=0 (Compiled frame; information may be imprecise)

- sun.nio.ch.EPollArrayWrapper.poll(long) @bci=18, line=269 (Compiled frame)

- sun.nio.ch.EPollSelectorImpl.doSelect(long) @bci=28, line=93 (Compiled frame)

- sun.nio.ch.SelectorImpl.lockAndDoSelect(long) @bci=37, line=86 (Compiled frame)

- sun.nio.ch.SelectorImpl.select(long) @bci=30, line=97 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.SelectorUtil.select(java.nio.channels.Selector) @bci=4, line=68 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.select(java.nio.channels.Selector) @bci=1, line=434 (Compiled frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioSelector.run() @bci=56, line=212 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.AbstractNioWorker.run() @bci=1, line=89 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.channel.socket.nio.NioWorker.run() @bci=1, line=178 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.ThreadRenamingRunnable.run() @bci=55, line=108 (Interpreted frame)

- org.apache.flink.shaded.akka.org.jboss.netty.util.internal.DeadLockProofWorker$1.run() @bci=14, line=42 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor.runWorker(java.util.concurrent.ThreadPoolExecutor$Worker) @bci=95, line=1142 (Interpreted frame)

- java.util.concurrent.ThreadPoolExecutor$Worker.run() @bci=5, line=617 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3224: (state = IN_JAVA)

- akka.util.SubclassifiedIndex$$anonfun$innerFindSubKeys$1.<init>(akka.util.SubclassifiedIndex, java.lang.Object, scala.collection.immutable.Vector) @bci=25, line=186 (Interpreted frame)

- akka.util.SubclassifiedIndex.innerFindSubKeys(java.lang.Object, scala.collection.immutable.Vector) @bci=22, line=186 (Interpreted frame)

- akka.util.SubclassifiedIndex$$anonfun$innerFindSubKeys$1.apply(scala.collection.immutable.Set, akka.util.SubclassifiedIndex$Nonroot) @bci=36, line=188 (Interpreted frame)

- akka.util.SubclassifiedIndex$$anonfun$innerFindSubKeys$1.apply(java.lang.Object, java.lang.Object) @bci=9, line=186 (Interpreted frame)

- scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(java.lang.Object) @bci=16, line=157 (Interpreted frame)

- scala.collection.TraversableOnce$$anonfun$foldLeft$1.apply(java.lang.Object) @bci=2, line=157 (Interpreted frame)

- scala.collection.Iterator$class.foreach(scala.collection.Iterator, scala.Function1) @bci=16, line=891 (Compiled frame)

- scala.collection.AbstractIterator.foreach(scala.Function1) @bci=2, line=1334 (Compiled frame)

- scala.collection.IterableLike$class.foreach(scala.collection.IterableLike, scala.Function1) @bci=7, line=72 (Compiled frame)

- scala.collection.AbstractIterable.foreach(scala.Function1) @bci=2, line=54 (Compiled frame)

- scala.collection.TraversableOnce$class.foldLeft(scala.collection.TraversableOnce, java.lang.Object, scala.Function2) @bci=16, line=157 (Interpreted frame)

- scala.collection.AbstractTraversable.foldLeft(java.lang.Object, scala.Function2) @bci=3, line=104 (Interpreted frame)

- scala.collection.TraversableOnce$class.$div$colon(scala.collection.TraversableOnce, java.lang.Object, scala.Function2) @bci=3, line=151 (Compiled frame)

- scala.collection.AbstractTraversable.$div$colon(java.lang.Object, scala.Function2) @bci=3, line=104 (Compiled frame)

- akka.util.SubclassifiedIndex.innerFindSubKeys(java.lang.Object, scala.collection.immutable.Vector) @bci=25, line=186 (Interpreted frame)

- akka.util.SubclassifiedIndex.findSubKeysExcept(java.lang.Object, scala.collection.immutable.Vector) @bci=6, line=184 (Interpreted frame)

- akka.util.SubclassifiedIndex.integrate(akka.util.SubclassifiedIndex$Nonroot) @bci=127, line=206 (Interpreted frame)

- akka.util.SubclassifiedIndex.innerAddKey(java.lang.Object) @bci=64, line=107 (Interpreted frame)

- akka.util.SubclassifiedIndex.addKey(java.lang.Object) @bci=3, line=93 (Interpreted frame)

- akka.event.SubchannelClassification$class.publish(akka.event.SubchannelClassification, java.lang.Object) @bci=89, line=171 (Interpreted frame)

- akka.event.EventStream.publish(java.lang.Object) @bci=2, line=28 (Interpreted frame)

- akka.remote.EventPublisher.notifyListeners(akka.remote.RemotingLifecycleEvent) @bci=8, line=123 (Interpreted frame)

- akka.remote.EndpointManager$$anonfun$3.applyOrElse(java.lang.Object, scala.Function1) @bci=572, line=597 (Compiled frame)

- akka.actor.Actor$class.aroundReceive(akka.actor.Actor, scala.PartialFunction, java.lang.Object) @bci=8, line=502 (Compiled frame)

- akka.remote.EndpointManager.aroundReceive(scala.PartialFunction, java.lang.Object) @bci=3, line=441 (Compiled frame)

- akka.actor.ActorCell.receiveMessage(java.lang.Object) @bci=15, line=526 (Compiled frame)

- akka.actor.ActorCell.invoke(akka.dispatch.Envelope) @bci=59, line=495 (Compiled frame)

- akka.dispatch.Mailbox.processMailbox(int, long) @bci=24, line=257 (Compiled frame)

- akka.dispatch.Mailbox.run() @bci=20, line=224 (Compiled frame)

- akka.dispatch.Mailbox.exec() @bci=1, line=234 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinTask.doExec() @bci=10, line=260 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(scala.concurrent.forkjoin.ForkJoinTask) @bci=10, line=1339 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=11, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 3223: (state = BLOCKED)

- java.lang.Class.forName0(java.lang.String, boolean, java.lang.ClassLoader, java.lang.Class) @bci=0 (Compiled frame; information may be imprecise)

- java.lang.Class.forName(java.lang.String, boolean, java.lang.ClassLoader) @bci=49, line=348 (Compiled frame)

- akka.util.ClassLoaderObjectInputStream.resolveClass(java.io.ObjectStreamClass) @bci=9, line=18 (Compiled frame)

- java.io.ObjectInputStream.readNonProxyDesc(boolean) @bci=108, line=1826 (Compiled frame)

- java.io.ObjectInputStream.readClassDesc(boolean) @bci=86, line=1713 (Compiled frame)

- java.io.ObjectInputStream.readOrdinaryObject(boolean) @bci=31, line=2003 (Compiled frame)

- java.io.ObjectInputStream.readObject0(boolean) @bci=401, line=1535 (Compiled frame)

- java.io.ObjectInputStream.defaultReadFields(java.lang.Object, java.io.ObjectStreamClass) @bci=150, line=2245 (Compiled frame)

- java.io.ObjectInputStream.readSerialData(java.lang.Object, java.io.ObjectStreamClass) @bci=298, line=2169 (Compiled frame)

- java.io.ObjectInputStream.readOrdinaryObject(boolean) @bci=181, line=2027 (Compiled frame)

- java.io.ObjectInputStream.readObject0(boolean) @bci=401, line=1535 (Compiled frame)

- java.io.ObjectInputStream.readObject() @bci=19, line=422 (Compiled frame)

- akka.serialization.JavaSerializer$$anonfun$1.apply() @bci=4, line=328 (Compiled frame)

- scala.util.DynamicVariable.withValue(java.lang.Object, scala.Function0) @bci=14, line=58 (Compiled frame)

- akka.serialization.JavaSerializer.fromBinary(byte[], scala.Option) @bci=45, line=328 (Interpreted frame)

- akka.serialization.Serialization.akka$serialization$Serialization$$deserializeByteArray(byte[], akka.serialization.Serializer, java.lang.String) @bci=62, line=156 (Compiled frame)

- akka.serialization.Serialization$$anonfun$deserialize$2.apply() @bci=25, line=142 (Compiled frame)

- scala.util.Try$.apply(scala.Function0) @bci=5, line=192 (Compiled frame)

- akka.serialization.Serialization.deserialize(byte[], int, java.lang.String) @bci=14, line=136 (Compiled frame)

- akka.remote.serialization.MessageContainerSerializer.fromBinary(byte[], scala.Option) @bci=43, line=80 (Compiled frame)

- akka.serialization.Serialization.akka$serialization$Serialization$$deserializeByteArray(byte[], akka.serialization.Serializer, java.lang.String) @bci=62, line=156 (Compiled frame)

- akka.serialization.Serialization$$anonfun$deserialize$2.apply() @bci=25, line=142 (Compiled frame)

- scala.util.Try$.apply(scala.Function0) @bci=5, line=192 (Compiled frame)

- akka.serialization.Serialization.deserialize(byte[], int, java.lang.String) @bci=14, line=136 (Compiled frame)

- akka.remote.MessageSerializer$.deserialize(akka.actor.ExtendedActorSystem, akka.remote.WireFormats$SerializedMessage) @bci=40, line=30 (Interpreted frame)

- akka.remote.DefaultMessageDispatcher.payload$lzycompute$1(akka.remote.WireFormats$SerializedMessage, scala.runtime.ObjectRef, scala.runtime.VolatileByteRef) @bci=25, line=64 (Interpreted frame)

- akka.remote.DefaultMessageDispatcher.payload$1(akka.remote.WireFormats$SerializedMessage, scala.runtime.ObjectRef, scala.runtime.VolatileByteRef) @bci=15, line=64 (Compiled frame)

- akka.remote.DefaultMessageDispatcher.dispatch(akka.actor.InternalActorRef, akka.actor.Address, akka.remote.WireFormats$SerializedMessage, akka.actor.ActorRef) @bci=266, line=82 (Interpreted frame)

- akka.remote.EndpointReader$$anonfun$receive$2.applyOrElse(java.lang.Object, scala.Function1) @bci=273, line=982 (Compiled frame)

- akka.actor.Actor$class.aroundReceive(akka.actor.Actor, scala.PartialFunction, java.lang.Object) @bci=8, line=502 (Compiled frame)

- akka.remote.EndpointActor.aroundReceive(scala.PartialFunction, java.lang.Object) @bci=3, line=446 (Compiled frame)

- akka.actor.ActorCell.receiveMessage(java.lang.Object) @bci=15, line=526 (Compiled frame)

- akka.actor.ActorCell.invoke(akka.dispatch.Envelope) @bci=59, line=495 (Compiled frame)

- akka.dispatch.Mailbox.processMailbox(int, long) @bci=24, line=257 (Compiled frame)

- akka.dispatch.Mailbox.run() @bci=20, line=224 (Compiled frame)

- akka.dispatch.Mailbox.exec() @bci=1, line=234 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinTask.doExec() @bci=10, line=260 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(scala.concurrent.forkjoin.ForkJoinTask) @bci=10, line=1339 (Compiled frame)

- scala.concurrent.forkjoin.ForkJoinPool.runWorker(scala.concurrent.forkjoin.ForkJoinPool$WorkQueue) @bci=11, line=1979 (Interpreted frame)

- scala.concurrent.forkjoin.ForkJoinWorkerThread.run() @bci=14, line=107 (Interpreted frame)

Thread 3218: (state = BLOCKED)

- java.lang.Thread.sleep(long) @bci=0 (Compiled frame; information may be imprecise)

- akka.actor.LightArrayRevolverScheduler.waitNanos(long) @bci=36, line=85 (Compiled frame)

- akka.actor.LightArrayRevolverScheduler$$anon$4.nextTick() @bci=50, line=266 (Compiled frame)

- akka.actor.LightArrayRevolverScheduler$$anon$4.run() @bci=1, line=236 (Interpreted frame)

- java.lang.Thread.run() @bci=11, line=748 (Interpreted frame)

Thread 3205: (state = BLOCKED)

Thread 3204: (state = BLOCKED)

Thread 3203: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.lang.ref.ReferenceQueue.remove(long) @bci=59, line=143 (Compiled frame)

- java.lang.ref.ReferenceQueue.remove() @bci=2, line=164 (Compiled frame)

- java.lang.ref.Finalizer$FinalizerThread.run() @bci=36, line=209 (Interpreted frame)

Thread 3202: (state = BLOCKED)

- java.lang.Object.wait(long) @bci=0 (Compiled frame; information may be imprecise)

- java.lang.Object.wait() @bci=2, line=502 (Compiled frame)

- java.lang.ref.Reference.tryHandlePending(boolean) @bci=54, line=191 (Compiled frame)

- java.lang.ref.Reference$ReferenceHandler.run() @bci=1, line=153 (Interpreted frame)

Thread 3189: (state = BLOCKED)

- sun.misc.Unsafe.park(boolean, long) @bci=0 (Interpreted frame)

- java.util.concurrent.locks.LockSupport.park(java.lang.Object) @bci=14, line=175 (Interpreted frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt() @bci=1, line=836 (Interpreted frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedInterruptibly(int) @bci=72, line=997 (Interpreted frame)

- java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireSharedInterruptibly(int) @bci=24, line=1304 (Interpreted frame)

- scala.concurrent.impl.Promise$DefaultPromise.tryAwait(scala.concurrent.duration.Duration) @bci=74, line=206 (Interpreted frame)

- scala.concurrent.impl.Promise$DefaultPromise.ready(scala.concurrent.duration.Duration, scala.concurrent.CanAwait) @bci=2, line=222 (Interpreted frame)

- scala.concurrent.impl.Promise$DefaultPromise.ready(scala.concurrent.duration.Duration, scala.concurrent.CanAwait) @bci=3, line=157 (Interpreted frame)

- scala.concurrent.Await$$anonfun$ready$1.apply() @bci=11, line=169 (Interpreted frame)

- scala.concurrent.Await$$anonfun$ready$1.apply() @bci=1, line=169 (Interpreted frame)

- scala.concurrent.BlockContext$DefaultBlockContext$.blockOn(scala.Function0, scala.concurrent.CanAwait) @bci=1, line=53 (Interpreted frame)

- scala.concurrent.Await$.ready(scala.concurrent.Awaitable, scala.concurrent.duration.Duration) @bci=26, line=169 (Interpreted frame)

- akka.actor.ActorSystemImpl.awaitTermination(scala.concurrent.duration.Duration) @bci=8, line=844 (Interpreted frame)

- akka.actor.ActorSystemImpl.awaitTermination() @bci=7, line=845 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$.runTaskManager(java.lang.String, org.apache.flink.runtime.clusterframework.types.ResourceID, int, org.apache.flink.configuration.Configuration, org.apache.flink.runtime.highavailability.HighAvailabilityServices, java.lang.Class) @bci=355, line=1899 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$$anonfun$1.apply$mcV$sp() @bci=92, line=1964 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$$anonfun$1.apply() @bci=1, line=1942 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$$anonfun$1.apply() @bci=1, line=1942 (Interpreted frame)

- scala.util.Try$.apply(scala.Function0) @bci=5, line=192 (Interpreted frame)

- org.apache.flink.runtime.akka.AkkaUtils$.retryOnBindException(scala.Function0, scala.Function0, long) @bci=10, line=766 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$.runTaskManager(java.lang.String, org.apache.flink.runtime.clusterframework.types.ResourceID, java.util.Iterator, org.apache.flink.configuration.Configuration, org.apache.flink.runtime.highavailability.HighAvailabilityServices, java.lang.Class) @bci=30, line=1942 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager$.selectNetworkInterfaceAndRunTaskManager(org.apache.flink.configuration.Configuration, org.apache.flink.runtime.clusterframework.types.ResourceID, java.lang.Class) @bci=93, line=1713 (Interpreted frame)

- org.apache.flink.runtime.taskmanager.TaskManager.selectNetworkInterfaceAndRunTaskManager(org.apache.flink.configuration.Configuration, org.apache.flink.runtime.clusterframework.types.ResourceID, java.lang.Class) @bci=6 (Interpreted frame)

- org.apache.flink.yarn.YarnTaskManagerRunnerFactory$Runner.call() @bci=12, line=70 (Interpreted frame)

- org.apache.flink.runtime.security.HadoopSecurityContext$$Lambda$8.run() @bci=4 (Interpreted frame)

- java.security.AccessController.doPrivileged(java.security.PrivilegedExceptionAction, java.security.AccessControlContext) @bci=0 (Compiled frame)

- javax.security.auth.Subject.doAs(javax.security.auth.Subject, java.security.PrivilegedExceptionAction) @bci=42, line=422 (Interpreted frame)

- org.apache.hadoop.security.UserGroupInformation.doAs(java.security.PrivilegedExceptionAction) @bci=14, line=1692 (Interpreted frame)

- org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(java.util.concurrent.Callable) @bci=15, line=41 (Interpreted frame)

- org.apache.flink.yarn.YarnTaskManager$.main(java.lang.String[]) @bci=14, line=78 (Interpreted frame)

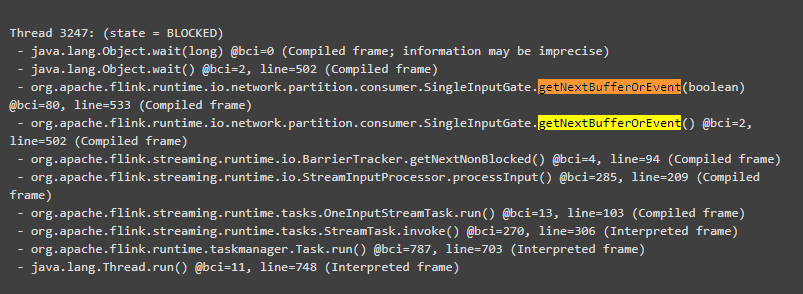

- org.apache.flink.yarn.YarnTaskManager.main(java.lang.String[]) @bci=4 (Interpreted frame)分析这个线程栈,找到了这个!

So next, let's analyze this thread!
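A dump like the one above runs to hundreds of lines, so it helps to pull out only the threads whose stack contains a particular frame, such as the `YarnTaskManager.main` entry point. The code below is a minimal sketch that assumes the `jstack -F` text layout shown above: thread headers start with `Thread `, frame lines start with `- `, and blank lines may appear anywhere. The class `ThreadDumpFilter` and its methods are hypothetical helpers, not part of Flink or the JDK.

```java
import java.util.ArrayList;
import java.util.List;

public class ThreadDumpFilter {

    /** One thread from a jstack -F style dump: its header line plus its stack frames. */
    static final class ThreadBlock {
        final String header;
        final List<String> frames = new ArrayList<>();

        ThreadBlock(String header) {
            this.header = header;
        }
    }

    /** Splits the dump into thread blocks; blank lines and unrecognized lines are skipped. */
    static List<ThreadBlock> parse(String dump) {
        List<ThreadBlock> blocks = new ArrayList<>();
        ThreadBlock current = null;
        for (String raw : dump.split("\\R")) {
            String line = raw.trim();
            if (line.startsWith("Thread ")) {
                // A header such as "Thread 3189: (state = BLOCKED)" starts a new block.
                current = new ThreadBlock(line);
                blocks.add(current);
            } else if (line.startsWith("- ") && current != null) {
                // A frame line; strip the leading "- " marker.
                current.frames.add(line.substring(2));
            }
        }
        return blocks;
    }

    /** Returns the headers of all threads that have at least one frame containing the needle. */
    static List<String> threadsContaining(String dump, String needle) {
        List<String> result = new ArrayList<>();
        for (ThreadBlock block : parse(dump)) {
            for (String frame : block.frames) {
                if (frame.contains(needle)) {
                    result.add(block.header);
                    break;
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // A trimmed excerpt of the dump above, for illustration only.
        String dump = String.join("\n",
                "Thread 3218: (state = BLOCKED)",
                "",
                "- java.lang.Thread.sleep(long) @bci=0 (Compiled frame; information may be imprecise)",
                "",
                "Thread 3189: (state = BLOCKED)",
                "",
                "- akka.actor.ActorSystemImpl.awaitTermination() @bci=7, line=845 (Interpreted frame)",
                "- org.apache.flink.yarn.YarnTaskManager.main(java.lang.String[]) @bci=4 (Interpreted frame)");
        System.out.println(threadsContaining(dump, "YarnTaskManager.main"));
    }
}
```

On the trimmed excerpt, `main` prints `[Thread 3189: (state = BLOCKED)]`. That is the TaskManager's main thread: it is parked in `ActorSystemImpl.awaitTermination()`, waiting for the actor system to shut down. Substring matching keeps the helper simple. A stricter variant could match on the fully qualified method name instead.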
