Lab objective: learn how multicast traffic is carried across an MPLS/VPN backbone.

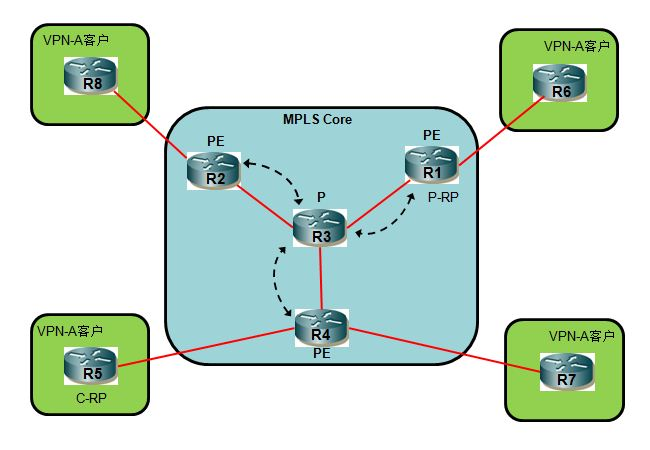

Lab topology:

First, configure the MPLS/VPN backbone and verify connectivity between the VPN customer sites.

Base configuration:

R1:

!

hostname R1

!

ip vrf A

rd 1:100

route-target export 1:100

route-target import 1:100

!

ip cef

no ip domain lookup

!

mpls label range 100 199

mpls label protocol ldp

!

interface Loopback0

ip address 1.1.1.1 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.13

encapsulation dot1Q 13

ip address 13.1.1.1 255.255.255.0

mpls ip

!

interface Ethernet0/0.16

encapsulation dot1Q 16

ip vrf forwarding A

ip address 16.1.1.1 255.255.255.0

!

router ospf 100 vrf A

log-adjacency-changes

redistribute bgp 1 subnets

network 16.1.1.0 0.0.0.255 area 0

!

router ospf 10

router-id 1.1.1.1

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

router bgp 1

bgp router-id 1.1.1.1

no bgp default ipv4-unicast

bgp log-neighbor-changes

neighbor 3.3.3.3 remote-as 1

neighbor 3.3.3.3 update-source Loopback0

!

address-family ipv4

no synchronization

no auto-summary

exit-address-family

!

address-family vpnv4

neighbor 3.3.3.3 activate

neighbor 3.3.3.3 send-community extended

exit-address-family

!

address-family ipv4 vrf A

no synchronization

redistribute ospf 100 vrf A

exit-address-family

!

mpls ldp router-id Loopback0 force

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R2:

!

hostname R2

!

ip vrf A

rd 1:100

route-target export 1:100

route-target import 1:100

!

ip cef

no ip domain lookup

!

mpls label range 200 299

mpls label protocol ldp

!

interface Loopback0

ip address 2.2.2.2 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.23

encapsulation dot1Q 23

ip address 23.1.1.2 255.255.255.0

mpls ip

!

interface Ethernet0/0.28

encapsulation dot1Q 28

ip vrf forwarding A

ip address 28.1.1.2 255.255.255.0

!

router ospf 100 vrf A

log-adjacency-changes

redistribute bgp 1 subnets

network 28.1.1.0 0.0.0.255 area 0

!

router ospf 10

router-id 2.2.2.2

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

router bgp 1

bgp router-id 2.2.2.2

no bgp default ipv4-unicast

bgp log-neighbor-changes

neighbor 3.3.3.3 remote-as 1

neighbor 3.3.3.3 update-source Loopback0

!

address-family ipv4

no synchronization

no auto-summary

exit-address-family

!

address-family vpnv4

neighbor 3.3.3.3 activate

neighbor 3.3.3.3 send-community extended

exit-address-family

!

address-family ipv4 vrf A

no synchronization

redistribute ospf 100 vrf A

exit-address-family

!

mpls ldp router-id Loopback0 force

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R3:

!

hostname R3

!

ip cef

no ip domain lookup

!

mpls label range 300 399

mpls label protocol ldp

!

interface Loopback0

ip address 3.3.3.3 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.13

encapsulation dot1Q 13

ip address 13.1.1.3 255.255.255.0

mpls ip

!

interface Ethernet0/0.23

encapsulation dot1Q 23

ip address 23.1.1.3 255.255.255.0

mpls ip

!

interface Ethernet0/0.34

encapsulation dot1Q 34

ip address 34.1.1.3 255.255.255.0

mpls ip

!

router ospf 10

router-id 3.3.3.3

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

router bgp 1

bgp router-id 3.3.3.3

no bgp default ipv4-unicast

bgp log-neighbor-changes

neighbor rr peer-group

neighbor rr remote-as 1

neighbor rr update-source Loopback0

neighbor 1.1.1.1 peer-group rr

neighbor 2.2.2.2 peer-group rr

neighbor 4.4.4.4 peer-group rr

!

address-family ipv4

no synchronization

no auto-summary

exit-address-family

!

address-family vpnv4

neighbor rr send-community extended

neighbor rr route-reflector-client

neighbor 1.1.1.1 activate

neighbor 2.2.2.2 activate

neighbor 4.4.4.4 activate

exit-address-family

!

mpls ldp router-id Loopback0 force

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R4:

!

hostname R4

!

ip vrf A

rd 1:100

route-target export 1:100

route-target import 1:100

!

ip cef

no ip domain lookup

!

mpls label range 400 499

mpls label protocol ldp

!

interface Loopback0

ip address 4.4.4.4 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.34

encapsulation dot1Q 34

ip address 34.1.1.4 255.255.255.0

mpls ip

!

interface Ethernet0/0.45

encapsulation dot1Q 45

ip vrf forwarding A

ip address 45.1.1.4 255.255.255.0

!

interface Ethernet0/0.47

encapsulation dot1Q 47

ip vrf forwarding A

ip address 47.1.1.4 255.255.255.0

!

router ospf 100 vrf A

log-adjacency-changes

redistribute bgp 1 subnets

network 45.1.1.0 0.0.0.255 area 0

network 47.1.1.0 0.0.0.255 area 0

!

router ospf 10

router-id 4.4.4.4

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

router bgp 1

bgp router-id 4.4.4.4

no bgp default ipv4-unicast

bgp log-neighbor-changes

neighbor 3.3.3.3 remote-as 1

neighbor 3.3.3.3 update-source Loopback0

!

address-family ipv4

no synchronization

no auto-summary

exit-address-family

!

address-family vpnv4

neighbor 3.3.3.3 activate

neighbor 3.3.3.3 send-community extended

exit-address-family

!

address-family ipv4 vrf A

no synchronization

redistribute ospf 100 vrf A

exit-address-family

!

mpls ldp router-id Loopback0 force

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R5:

!

hostname R5

!

ip cef

no ip domain lookup

!

interface Loopback0

ip address 5.5.5.5 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.45

encapsulation dot1Q 45

ip address 45.1.1.5 255.255.255.0

!

router ospf 100

router-id 5.5.5.5

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R6:

!

hostname R6

!

ip cef

no ip domain lookup

!

interface Loopback0

ip address 6.6.6.6 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.16

encapsulation dot1Q 16

ip address 16.1.1.6 255.255.255.0

!

router ospf 100

router-id 6.6.6.6

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R7:

!

hostname R7

!

ip cef

no ip domain lookup

!

interface Loopback0

ip address 7.7.7.7 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.47

encapsulation dot1Q 47

ip address 47.1.1.7 255.255.255.0

!

router ospf 100

router-id 7.7.7.7

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

R8:

!

hostname R8

!

ip cef

no ip domain lookup

!

interface Loopback0

ip address 8.8.8.8 255.255.255.255

!

interface Ethernet0/0

no ip address

!

interface Ethernet0/0.28

encapsulation dot1Q 28

ip address 28.1.1.8 255.255.255.0

!

router ospf 100

router-id 8.8.8.8

log-adjacency-changes

network 0.0.0.0 255.255.255.255 area 0

!

line con 0

logging synchronous

line aux 0

line vty 0 4

login

!

end

Verify the configuration above:

R3#sh ip bgp vpnv4 all

BGP table version is 9, local router ID is 3.3.3.3

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 1:100

*>i5.5.5.5/32 4.4.4.4 11 100 0 ?

*>i6.6.6.6/32 1.1.1.1 11 100 0 ?

*>i7.7.7.7/32 4.4.4.4 11 100 0 ?

*>i8.8.8.8/32 2.2.2.2 11 100 0 ?

*>i16.1.1.0/24 1.1.1.1 0 100 0 ?

*>i28.1.1.0/24 2.2.2.2 0 100 0 ?

*>i45.1.1.0/24 4.4.4.4 0 100 0 ?

*>i47.1.1.0/24 4.4.4.4 0 100 0 ?

Converged!

R5#sh ip route ospf

Codes: L - local, C - connected, S - static, R - RIP, M - mobile, B - BGP

D - EIGRP, EX - EIGRP external, O - OSPF, IA - OSPF inter area

N1 - OSPF NSSA external type 1, N2 - OSPF NSSA external type 2

E1 - OSPF external type 1, E2 - OSPF external type 2

i - IS-IS, su - IS-IS summary, L1 - IS-IS level-1, L2 - IS-IS level-2

ia - IS-IS inter area, * - candidate default, U - per-user static route

o - ODR, P - periodic downloaded static route, + - replicated route

Gateway of last resort is not set

6.0.0.0/32 is subnetted, 1 subnets

O IA 6.6.6.6 [110/21] via 45.1.1.4, 00:25:25, Ethernet0/0.45

7.0.0.0/32 is subnetted, 1 subnets

O 7.7.7.7 [110/21] via 45.1.1.4, 00:27:39, Ethernet0/0.45

8.0.0.0/32 is subnetted, 1 subnets

O IA 8.8.8.8 [110/21] via 45.1.1.4, 00:25:25, Ethernet0/0.45

16.0.0.0/24 is subnetted, 1 subnets

O IA 16.1.1.0 [110/11] via 45.1.1.4, 00:25:25, Ethernet0/0.45

28.0.0.0/24 is subnetted, 1 subnets

O IA 28.1.1.0 [110/11] via 45.1.1.4, 00:25:25, Ethernet0/0.45

47.0.0.0/24 is subnetted, 1 subnets

O 47.1.1.0 [110/20] via 45.1.1.4, 00:27:49, Ethernet0/0.45

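The VPN customer routes have converged!

Note: when an MPLS/VPN service provider offers VPN service, it provides full unicast connectivity between customer sites, but by default the provider backbone does not carry multicast packets from those sites. To get multicast across the backbone, customers typically build GRE tunnels between their sites, an approach with obvious drawbacks.

The provider backbone therefore needs a dedicated mechanism to offer multicast service to customers, one that keeps the multicast domains of different L3 VPN customers isolated within the backbone and scales well. This feature is called mVPN (multicast VPN).

Next, we configure mVPN:

1) Enable multicast at the customer sites in sparse mode (SM) and designate one router as the RP (in this lab the customer RP is 5.5.5.5).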

R5:

!

ip multicast-routing

!

interface Ethernet0/0.45

ip pim sparse-mode

!

ip pim rp-address 5.5.5.5

!

R6:

!

ip multicast-routing

!

interface Ethernet0/0.16

ip pim sparse-mode

!

ip pim rp-address 5.5.5.5

!

R7:

!

ip multicast-routing

!

interface Ethernet0/0.47

ip pim sparse-mode

!

ip pim rp-address 5.5.5.5

!

R8:

!

ip multicast-routing

!

interface Ethernet0/0.28

ip pim sparse-mode

!

ip pim rp-address 5.5.5.5

!

2) Enable multicast in the P network and pick any one router as the RP (this lab uses 1.1.1.1):

R1:

!

ip multicast-routing

!

interface Ethernet0/0.13

ip pim sparse-mode

!

ip pim rp-address 1.1.1.1

!

R2:

!

ip multicast-routing

!

interface Ethernet0/0.23

ip pim sparse-mode

!

ip pim rp-address 1.1.1.1

!

R3:

!

ip multicast-routing

!

interface Ethernet0/0.13

ip pim sparse-mode

!

interface Ethernet0/0.23

ip pim sparse-mode

!

interface Ethernet0/0.34

ip pim sparse-mode

!

ip pim rp-address 1.1.1.1

!

R4:

!

ip multicast-routing

!

interface Ethernet0/0.34

ip pim sparse-mode

!

ip pim rp-address 1.1.1.1

!

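Beyond the configuration above, the P router R3 needs no further configuration in this lab. Enabling multicast in the P network only prepares it to support mVPN; it has no visible effect at this step.

3) On the PEs, enable multicast for the corresponding VRF: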

R1:

!

ip multicast-routing vrf A //enable multicast in the VRF

!

interface Ethernet0/0.16

ip pim sparse-mode

!

ip pim vrf A rp-address 5.5.5.5 //the VRF can be viewed as a virtual customer router, so it also needs the RP configured

!

R2:

!

ip multicast-routing vrf A

!

interface Ethernet0/0.28

ip pim sparse-mode

!

ip pim vrf A rp-address 5.5.5.5

!

R4:

!

ip multicast-routing vrf A

!

interface Ethernet0/0.45

ip pim sparse-mode

!

interface Ethernet0/0.47

ip pim sparse-mode

!

ip pim vrf A rp-address 5.5.5.5

!

With the configuration above in place, check that the RP is known on each router.

R5, R6, R7, R8:

R5#show ip pim rp mapping

PIM Group-to-RP Mappings

Group(s): 224.0.0.0/4, Static

RP: 5.5.5.5 (?)

OK!

R1, R2, R3, R4:

R1#sh ip pim rp mapping

PIM Group-to-RP Mappings

Group(s): 224.0.0.0/4, Static

RP: 1.1.1.1 (?)

The VRF RP on the three PEs R1, R2, and R4:

R1#show ip pim vrf A rp mapping

PIM Group-to-RP Mappings

Group(s): 224.0.0.0/4, Static

RP: 5.5.5.5 (?)

OK!

R1#show ip pim neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

13.1.1.3 Ethernet0/0.13 00:17:57/00:01:29 v2 1 / DR S P G

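At this point customer multicast packets still cannot cross the MPLS/VPN backbone, because no multicast distribution tree can be built across it. There are two cases:

1) The PE connects to the multicast source. When the PE receives multicast packets from the customer, it can send the unicast PIM-SM Register message. However, the PE attached to the RP's site cannot send a PIM Join toward the source (in the VRF, the PE has a PIM neighbor relationship only with the CE).

2) The PE connects to a multicast receiver. After the PE receives the PIM Join, it cannot send a Join toward the RP (in the VRF, the PE has a PIM neighbor relationship only with the CE).

We can test this: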

R6(config)#interface loopback 0

R6(config-if)# ip pim sparse-mode

R6(config-if)# ip igmp join-group 239.1.1.1

R8#ping 239.1.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

On the customer RP, R5, the Register from the multicast source has indeed arrived:

R5#sh ip mroute summary | include 239.1.1.1

(*, 239.1.1.1), 00:01:28/stopped, RP 5.5.5.5, OIF count: 0, flags: SP

(28.1.1.8, 239.1.1.1), 00:01:28/00:01:31, OIF count: 0, flags: P

R1 has received the group Join but clearly cannot send a Join toward the RP; the incoming interface is Null:

R1#show ip mroute vrf A

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.1), 00:04:24/00:03:00, RP 5.5.5.5, flags: S

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/0.16, Forward/Sparse, 00:04:24/00:03:00

(*, 224.0.1.40), 00:16:11/00:02:45, RP 5.5.5.5, flags: SJCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/0.16, Forward/Sparse, 00:15:11/00:03:02
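The Null incoming interface above comes from a failed RPF lookup toward the RP. As an optional check that is not part of the original lab steps, the RPF state for the RP address inside the VRF can be inspected on the PE with the standard IOS `show ip rpf` command. The output was not captured in this lab, so none is shown:

```
! On PE R1: ask which interface and PIM neighbor VRF A would use to reach the customer RP.
! Before the default MDT is configured, the lookup is expected to find no PIM-enabled RPF interface.
R1#show ip rpf vrf A 5.5.5.5
```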

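To complete the PIM Join and Register procedures, the PEs must become PIM neighbors of each other. The traditional way is to build unicast GRE tunnels between them (together with static multicast routes so that the RPF check does not fail). Traffic between PEs then actually travels as unicast, and if the customer sites are not fully meshed with GRE tunnels, multicast traffic hairpins back through the P network over the tunnels and wastes a lot of bandwidth.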
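For comparison only, the traditional GRE approach might look roughly like the following on R1. This is a sketch and not part of the lab: the tunnel number `Tunnel12` and the 172.16.12.0/30 addressing are hypothetical, and a mirror-image configuration would be needed on R2.

```
! Hypothetical manual GRE tunnel from PE R1 to PE R2, placed in VRF A
interface Tunnel12
 ip vrf forwarding A
 ip address 172.16.12.1 255.255.255.252
 ip pim sparse-mode
 tunnel source Loopback0
 tunnel destination 2.2.2.2
!
! Static mroute so the RPF check for sources behind R2 points at the tunnel
ip mroute vrf A 28.1.1.0 255.255.255.0 Tunnel12
```

Every PE pair would need its own tunnel and static mroutes (including one toward the RP's site), which is the scaling problem the automatic tunnel removes.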

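The mVPN solution automatically builds a point-to-multipoint GRE tunnel, formally called the MTI (Multicast Tunnel Interface). The tunnel destination is a multicast group address, and the tunnel source is the local address used for the MP-BGP peering. PE routers form adjacencies over the MTI and exchange PIM control traffic through it.

PEs in the same VRF form adjacencies over the MTI and behave as if they were attached to one shared LAN segment.

4) Set the default MDT group in the VRF on each PE (different VPN customers use different groups, assigned by the provider). This is the most critical step:

Configure on R1, R2, and R4: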

!

ip vrf A

mdt default 233.3.3.3

!

interface Loopback0

ip pim sparse-mode

!

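Note that this loopback must run sparse mode and must also be the source address of the MP-BGP peering. Once this is configured, every PE is both a sender and a receiver for group 233.3.3.3, and the source address it sends from is this loopback address. The following output shows that R1, R2, and R4 are already sending multicast in the P network: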

R4#sh ip mroute summary

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 233.3.3.3), 00:00:13/stopped, RP 1.1.1.1, OIF count: 1, flags: SJCFZ

(4.4.4.4, 233.3.3.3), 00:00:03/00:02:56, OIF count: 0, flags: PFJT

(1.1.1.1, 233.3.3.3), 00:00:03/00:02:56, OIF count: 1, flags: JTZ

(2.2.2.2, 233.3.3.3), 00:00:03/00:02:56, OIF count: 1, flags: JTZ

(*, 224.0.1.40), 00:00:13/00:02:46, RP 1.1.1.1, OIF count: 0, flags: SJPCL
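As an optional check that is not part of the original steps, the following standard IOS show commands can be used on a PE to confirm the default MDT group and the tunnel interface created for the VRF. The tunnel number differs per router, and no output is shown because none was captured in this lab:

```
! Default MDT group of each multicast VRF and the tunnel interface bound to it
R4#show ip pim mdt
! PIM-enabled interfaces inside VRF A, which should include the tunnel
R4#show ip pim vrf A interface
```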

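At this moment the customer sites have not generated any multicast traffic. The PEs join the default MDT immediately anyway, because they need PIM adjacencies with one another: the neighbor relationships come up first so that PIM control messages between sites can cross the backbone. The following command shows the PIM neighbors formed between the PEs: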

R4#sh ip pim vrf A neighbor

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

P - Proxy Capable, S - State Refresh Capable, G - GenID Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

45.1.1.5 Ethernet0/0.45 00:24:01/00:01:24 v2 1 / DR S P G

47.1.1.7 Ethernet0/0.47 00:24:01/00:01:20 v2 1 / DR S P G

2.2.2.2 Tunnel2 00:02:09/00:01:33 v2 1 / S P G

1.1.1.1 Tunnel2 00:03:07/00:01:33 v2 1 / S P G

These adjacencies are formed over the Tunnel interface, which is the automatically created MTI. Next, test multicast connectivity between the customer sites:

R8#ping 239.1.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Reply to request 0 from 16.1.1.6, 120 ms

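All customer multicast crossing the backbone is encapsulated into the MTI and delivered to every PE of that customer; each PE decapsulates it and then forwards the original multicast packet to the destination customer site. P routers do not need to know the customer's multicast addresses, so the multicast routing table on R3 contains no forwarding entry for the customer group 239.1.1.1: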

R3#show ip mroute summary

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 233.3.3.3), 00:21:11/00:02:39, RP 1.1.1.1, OIF count: 2, flags: S

(4.4.4.4, 233.3.3.3), 00:21:03/00:03:16, OIF count: 2, flags: T

(1.1.1.1, 233.3.3.3), 00:21:10/00:03:24, OIF count: 2, flags: T

(2.2.2.2, 233.3.3.3), 00:21:11/00:03:16, OIF count: 2, flags: T

(*, 224.0.1.40), 03:47:02/00:02:45, RP 1.1.1.1, OIF count: 2, flags: SJCL

On the PE, the multicast traffic arrives on the MTI interface:

R1#show ip mroute vrf A

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.1), 00:52:35/00:03:05, RP 5.5.5.5, flags: S

Incoming interface: Tunnel1, RPF nbr 4.4.4.4

Outgoing interface list:

Ethernet0/0.16, Forward/Sparse, 00:42:59/00:03:05

(28.1.1.8, 239.1.1.1), 00:03:36/00:00:29, flags:

Incoming interface: Tunnel1, RPF nbr 2.2.2.2

Outgoing interface list:

Ethernet0/0.16, Forward/Sparse, 00:03:36/00:03:26

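(remaining output omitted)

Looking at the multicast forwarding path in this lab, traffic crossing the MTI is forwarded to all PEs, yet the site behind PE R4 has no receiver for group 239.1.1.1. In other words, PE R4 receives unnecessary multicast flooding. To overcome this, mVPN defines a special MDT group called the data MDT, dedicated to carrying multicast flows that exceed a bandwidth threshold.

5) The data MDT only needs to be configured on the PE attached to the multicast source. The following sets the bandwidth threshold to 1 kbps: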

R2(config)#ip vrf A

R2(config-vrf)#mdt data 234.4.4.4 0.0.0.0 threshold 1

Next, send multicast data from the source in the customer site:

R8#ping

Protocol [ip]:

Target IP address: 239.1.1.1

Repeat count [1]: 1000

Datagram size [100]: 36

Timeout in seconds [2]:

Extended commands [n]:

Sweep range of sizes [n]:

Type escape sequence to abort.

Sending 1000, 36-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Reply to request 0 from 16.1.1.6, 148 ms

Reply to request 1 from 16.1.1.6, 20 ms

(remaining output omitted)

Because the traffic exceeds the data MDT bandwidth threshold, the multicast is carried over the data MDT:

R3# show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 234.4.4.4), 00:02:27/stopped, RP 1.1.1.1, flags: SP

Incoming interface: Ethernet0/0.13, RPF nbr 13.1.1.1

Outgoing interface list: Null

(2.2.2.2, 234.4.4.4), 00:02:27/00:03:23, flags: T

Incoming interface: Ethernet0/0.23, RPF nbr 23.1.1.2

Outgoing interface list:

Ethernet0/0.13, Forward/Sparse, 00:02:27/00:03:24

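(remaining output omitted)

The OIL of the (S,G) entry contains only one interface, so PE R4 no longer receives unnecessary multicast flooding.

The data MDT can be a range of groups (for example 234.4.4.0 0.0.0.255). The PE decides how customer multicast flows map to the individual data MDT groups. This is triggered automatically, and the PE advertises the mapping to the other PEs so that, when they receive an (S,G) Join, they know to join the corresponding data MDT: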

R1#show ip pim vrf A mdt receive detail

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

V - RD & Vector, v - Vector

Joined MDT-data [group : source] uptime/expires for VRF: A

[234.4.4.4 : 0.0.0.0] 00:01:39/00:02:37

(28.1.1.8, 239.1.1.1), 00:14:07/00:03:23/00:02:37, OIF count: 1, flags: TY
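The range form of the data MDT can be sketched as follows. This is an illustration rather than the lab's configuration: it would replace the single-group statement on R2, ACL number 101 is an assumption, and the optional `list` keyword is included only to show that data MDT creation can be limited to selected customer (S,G) flows.

```
! On the PE attached to the source (R2): a pool of 256 data MDT groups
ip vrf A
 mdt data 234.4.4.0 0.0.0.255 threshold 1 list 101
!
! Hypothetical filter: only this customer flow may trigger a data MDT
access-list 101 permit ip host 28.1.1.8 host 239.1.1.1
```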

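Note: a data MDT is triggered only by (S,G) entries in the mVRF, so with bidirectional PIM the default MDT is always used. Each application flow maps to one data MDT group; if the number of concurrent multicast applications exceeds the number of data MDT groups, one data MDT group carries several (S,G) entries: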

R8(config)#interface loopback 0

R8(config-if)#ip pim sparse-mode

R8#ping

Protocol [ip]:

Target IP address: 239.1.1.1

Repeat count [1]: 30

Datagram size [100]: 1200

Timeout in seconds [2]:

Extended commands [n]:

Sweep range of sizes [n]:

Type escape sequence to abort.

Sending 30, 1200-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Reply to request 0 from 16.1.1.6, 52 ms

Reply to request 0 from 16.1.1.6, 52 ms

(remaining output omitted)

R2#show ip pim vrf A mdt send

MDT-data send list for VRF: VPN-A

(source, group) MDT-data group ref_count

(8.8.8.8, 239.1.1.1) 234.4.4.4 2

(28.1.1.8, 239.1.1.1) 234.4.4.4 2

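R2 has mapped two different (S,G) entries to the same data MDT group. To handle this, the following principles apply when designing data MDTs:

1. Configure more data MDT groups than the number of concurrent multicast applications at the customer sites.

2. Configure different data MDT groups on different PEs and avoid overlapping allocations (different PEs connect to different sources and carry different applications).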
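A sketch of non-overlapping allocation across the three PEs of this lab could look like the following. The specific ranges are hypothetical and chosen only so that the blocks do not overlap:

```
! R1: data MDT pool for sources behind R1
ip vrf A
 mdt data 234.4.1.0 0.0.0.255 threshold 1
!
! R2: data MDT pool for sources behind R2
ip vrf A
 mdt data 234.4.2.0 0.0.0.255 threshold 1
!
! R4: data MDT pool for sources behind R4
ip vrf A
 mdt data 234.4.4.0 0.0.0.255 threshold 1
```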

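To overcome the two shortcomings above, mVPN can be implemented with SSM. SSM does not rely on an RP, so there is no single point of failure. It has a further benefit: when different PEs use the same data MDT group, no unnecessary multicast flooding results, because each tree in the P network is a separate (S,G) whose source is the sending PE's own address.

1) Enable SSM on the PE and P routers. This lab has no other applications based on standard PIM-SM, so the RP configuration can simply be removed: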

R1(config)#no ip pim rp-address 1.1.1.1

R1(config)#ip pim ssm range 10

R1(config)#access-list 10 permit 233.3.3.0 0.0.0.255

R2(config)#no ip pim rp-address 1.1.1.1

R2(config)#ip pim ssm range 10

R2(config)#access-list 10 permit 233.3.3.0 0.0.0.255

R4(config)#no ip pim rp-address 1.1.1.1

R4(config)#ip pim ssm range 10

R4(config)#access-list 10 permit 233.3.3.0 0.0.0.255

R3(config)#no ip pim rp-address 1.1.1.1

R3(config)#ip pim ssm range 10

R3(config)#access-list 10 permit 233.3.3.0 0.0.0.255

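2) The PEs need to know the multicast source address each of them uses, because SSM PIM Joins take the (S,G) form. The PEs use MP-BGP to advertise the MDT information (source and group):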

R1(config)#router bgp 1

R1(config-router)# address-family ipv4 mdt

R1(config-router-af)# neighbor 3.3.3.3 activate

R2(config)#router bgp 1

R2(config-router)# address-family ipv4 mdt

R2(config-router-af)# neighbor 3.3.3.3 activate

R4(config)#router bgp 1

R4(config-router)# address-family ipv4 mdt

R4(config-router-af)# neighbor 3.3.3.3 activate

R3(config)#router bgp 1

R3(config-router)# address-family ipv4 mdt

R3(config-router-af)# neighbor 1.1.1.1 activate

R3(config-router-af)# neighbor 2.2.2.2 activate

R3(config-router-af)# neighbor 4.4.4.4 activate

R3(config-router-af)# neighbor rr route-reflector-client

Once the BGP sessions have re-established, each PE receives the MDT information of the others:

R1#show ip bgp ipv4 mdt all

BGP table version is 9, local router ID is 1.1.1.1

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale

Origin codes: i - IGP, e - EGP, ? - incomplete

Network Next Hop Metric LocPrf Weight Path

Route Distinguisher: 1:100 (default for vrf VPN-A)

*> 1.1.1.1/32 0.0.0.0 0 ?

*>i2.2.2.2/32 2.2.2.2 0 100 0 ?

*>i4.4.4.4/32 4.4.4.4 0 100 0 ?

R1#show ip bgp ipv4 mdt all 2.2.2.2

BGP routing table entry for 1:100:2.2.2.2/32, version 8

Paths: (1 available, best #1, table IPv4-MDT-BGP-Table)

Not advertised to any peer

Local

2.2.2.2 (metric 21) from 3.3.3.3 (3.3.3.3)

Origin incomplete, metric 0, localpref 100, valid, internal, best

Originator: 2.2.2.2, Cluster list: 3.3.3.3,

MDT group address: 233.3.3.3
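The same MDT advertisements can also be viewed from the PIM side. The command below is a standard IOS show command, added here as an optional cross-check; its output was not captured in this lab and is therefore not shown:

```
! MDT source/group information learned through the BGP ipv4 mdt address family
R1#show ip pim mdt bgp
```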

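On receiving this information, a PE immediately joins the corresponding SSM (S,G) and forms PIM adjacencies over the MTI. The SSM forwarding entries are visible in the P network: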

R3# show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 233.3.3.3), 00:00:26/00:03:21, flags: sT

Incoming interface: Ethernet0/0.13, RPF nbr 13.1.1.1

Outgoing interface list:

Ethernet0/0.34, Forward/Sparse, 00:00:26/00:03:07

(2.2.2.2, 233.3.3.3), 00:00:55/00:03:21, flags: sT

Incoming interface: Ethernet0/0.23, RPF nbr 23.1.1.2

Outgoing interface list:

Ethernet0/0.34, Forward/Sparse, 00:00:26/00:03:08

Ethernet0/0.13, Forward/Sparse, 00:00:55/00:02:43

(4.4.4.4, 233.3.3.3), 00:01:00/00:03:21, flags: sT

Incoming interface: Ethernet0/0.34, RPF nbr 34.1.1.4

Outgoing interface list:

Ethernet0/0.13, Forward/Sparse, 00:00:53/00:02:45

Ethernet0/0.23, Forward/Sparse, 00:01:00/00:02:39

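(remaining output omitted)

At this point the default MDT is up and the customer sites can exchange multicast. Note, however, that because the RP configuration has been removed, the data MDT must also fall within the SSM group range; otherwise the customer multicast packets mapped to it cannot be forwarded. The following tests the data MDT: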

R2(config)#ip vrf A

R2(config-vrf)#mdt data 233.3.3.99 0.0.0.0 threshold 1

R8#ping

Protocol [ip]:

Target IP address: 239.1.1.1

Repeat count [1]: 100

Datagram size [100]: 1000

Timeout in seconds [2]:

Extended commands [n]:

Sweep range of sizes [n]:

Type escape sequence to abort.

Sending 100, 1000-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:

Reply to request 0 from 16.1.1.6, 48 ms

Reply to request 0 from 16.1.1.6, 72 ms

(remaining output omitted)

Compare the data MDT mapping received by the PE with the one from the previous experiment:

R1#show ip pim vrf A mdt receive

Joined MDT-data [group : source] uptime/expires for VRF: A

[233.3.3.99 : 2.2.2.2] 00:04:26/00:02:57
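The lab keeps the data MDT inside 233.3.3.0/24 so that it is already covered by ACL 10. If a separate block were preferred for data MDTs, a sketch under that assumption could look like the following. The 234.4.4.0/24 block is hypothetical, and the ACL line would have to be added on every P and PE router that has `ip pim ssm range 10`:

```
! On R1, R2, R3, R4: extend the SSM range to cover the data MDT block
access-list 10 permit 234.4.4.0 0.0.0.255
!
! On the source-side PE (R2): draw data MDT groups from that block
ip vrf A
 mdt data 234.4.4.0 0.0.0.255 threshold 1
```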

This completes the lab!

Reposted from: https://blog.51cto.com/hexintj/1310488
