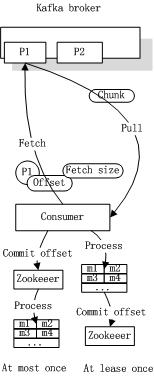

消费者需要自己保留一个offset,从kafka 获取消息时,只拉去当前offset 以后的消息。Kafka 的scala/java 版的client 已经实现了这部分的逻辑,将offset 保存到zookeeper 上

What to do when there is no initial offset in ZooKeeper or if an offset is out of range:

smallest : automatically reset the offset to the smallest offset

largest : automatically reset the offset to the largest offset

anything else: throw exception to the consumer

如果Kafka没有开启Consumer,只有Producer生产了数据到Kafka中,此后开启Consumer。在这种场景下,将auto.offset.reset设置为largest,那么Consumer会读取不到之前Produce的消息,只有新Produce的消息才会被Consumer消费

2. auto.commit.enable(例如true,表示offset自动提交到Zookeeper)

If true, periodically commit to ZooKeeper the offset of messages already fetched by the consumer. This committed offset will be used when the process fails as the position from which the new consumer will begin

3. auto.commit.interval.ms(例如60000,每隔1分钟offset提交到Zookeeper)

The frequency in ms that the consumer offsets are committed to zookeeper.

问题:如果在一个时间间隔内,没有提交offset,岂不是要重复读了?

Select where offsets should be stored (zookeeper or kafka).默认是Zookeeper

The Kafka consumer works by issuing "fetch" requests to the brokers leading the partitions it wants to consume. The consumer specifies its offset in the log with each request and receives back a chunk of log beginning from that position. The consumer thus has significant control over this position and can rewind it to re-consume data if need be.

6. Kafka的可靠性保证(消息消费和Offset提交的时机决定了At most once和At least once语义)

At Most Once:

At Least Once:

Kafka默认实现了At least once语义

3254

3254

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?