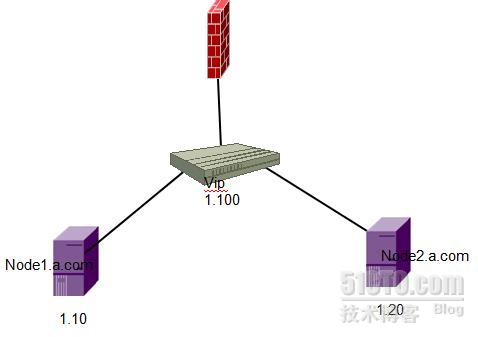

A highly available WWW cluster built with corosync/openais + pacemaker

Topology:

Basic configuration on node1 and node2

[root@localhost ~]# hwclock -s

[root@localhost ~]# date

Thu Oct 18 10:35:55 CST 2012

[root@localhost ~]# vim /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.1.10 node1.a.com node1

192.168.1.20 node2.a.com node2

[root@localhost ~]# vim /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=node1.a.com

[root@localhost ~]# hostname node1.a.com
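The /etc/hosts entries above are typed by hand in vim on each node. A minimal sketch of a scripted alternative follows, assuming a POSIX shell; `add_host_entry` is a hypothetical helper name, not something from the original post. It appends an entry only when the name is not already present, so running it twice does no harm.

```shell
# Append "ip fqdn short" to a hosts file unless the FQDN is already listed.
# add_host_entry is a hypothetical helper; the file path is an argument so
# the function can be tried on a scratch copy before touching /etc/hosts.
add_host_entry() {
    file=$1 ip=$2 fqdn=$3 short=$4
    # -w matches whole words only, -F treats the name as a literal string.
    if ! grep -qwF "$fqdn" "$file" 2>/dev/null; then
        printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
    fi
}

# Intended use on each node (left commented out so nothing is changed here):
# add_host_entry /etc/hosts 192.168.1.10 node1.a.com node1
# add_host_entry /etc/hosts 192.168.1.20 node2.a.com node2
```

Passing the file as a parameter is a deliberate choice: the same function can be exercised against a temporary file first.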

[root@node1 ~]# vim /etc/yum.repos.d/rhel-debuginfo.repo

[rhel-server]

name=Red Hat Enterprise Linux server

baseurl=file:///mnt/cdrom/Server

enabled=1

gpgcheck=1

gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[rhel-cluster]

name=Red Hat Enterprise Linux cluster

baseurl=file:///mnt/cdrom/Cluster

enabled=1

gpgcheck=1

gpgkey=file:///mnt/cdrom/RPM-GPG-KEY-redhat-release

[root@localhost ~]# hwclock -s

[root@localhost ~]# date

Thu Oct 18 10:44:46 CST 2012

[root@localhost ~]# vim /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.1.10 node1.a.com node1

192.168.1.20 node2.a.com node2

[root@localhost ~]# vim /etc/sysconfig/network

NETWORKING=yes

NETWORKING_IPV6=no

HOSTNAME=node2.a.com

[root@localhost ~]# hostname node2.a.com

[root@node1 ~]# scp /etc/yum.repos.d/rhel-debuginfo.repo node2.a.com:/etc/yum.repos.d/

The authenticity of host 'node2.a.com (192.168.1.20)' can't be established.

RSA key fingerprint is c7:11:f0:b8:8b:33:ba:66:9f:c3:d7:a0:5c:67:f0:e1.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2.a.com,192.168.1.20' (RSA) to the list of known hosts.

root@node2.a.com's password:

rhel-debuginfo.repo 100% 317 0.3KB/s 00:00

Generate an SSH key pair on each node so the nodes can communicate without passwords

[root@node1 ~]# ssh-keygen -t rsa

Copy the public key to the other node

[root@node1 .ssh]# ssh-copy-id -i id_rsa.pub node2

[root@node2 ~]# ssh-keygen -t rsa

[root@node2 ~]# cd .ssh/

[root@node2 .ssh]# ssh-copy-id -i id_rsa.pub node1
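Before moving on, it can be worth confirming that the key exchange actually worked in both directions. The sketch below assumes the stock OpenSSH client; `check_passwordless` and the `SSH_CMD` override are hypothetical names introduced only for illustration (the override exists so the function can be tried without a real peer).

```shell
# Return 0 if a command can be run on the host without a password prompt.
# SSH_CMD is a hypothetical override; by default the real ssh client is used.
check_passwordless() {
    host=$1
    # BatchMode=yes makes ssh fail instead of prompting for a password.
    ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true
}

# Intended use (commented out because it needs the real cluster nodes):
# check_passwordless node2 && echo "node1 -> node2 OK"
```

Run it once on each node against the other one; a non-zero exit status means ssh would still have asked for a password.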

Install the packages

[root@node1 ~]# yum localinstall -y *.rpm --nogpgcheck

Installed:

cluster-glue.i386 0:1.0.6-1.6.el5

cluster-glue-libs.i386 0:1.0.6-1.6.el5

corosync.i386 0:1.2.7-1.1.el5

corosynclib.i386 0:1.2.7-1.1.el5

heartbeat.i386 0:3.0.3-2.3.el5

heartbeat-libs.i386 0:3.0.3-2.3.el5

libesmtp.i386 0:1.0.4-5.el5

openais.i386 0:1.1.3-1.6.el5

openaislib.i386 0:1.1.3-1.6.el5

pacemaker.i386 0:1.1.5-1.1.el5

pacemaker-cts.i386 0:1.1.5-1.1.el5

pacemaker-libs.i386 0:1.1.5-1.1.el5

perl-TimeDate.noarch 1:1.16-5.el5

resource-agents.i386 0:1.0.4-1.1.el5

Dependency Installed:

libibverbs.i386 0:1.1.2-4.el5

librdmacm.i386 0:1.0.8-5.el5

libtool-ltdl.i386 0:1.5.22-6.1

lm_sensors.i386 0:2.10.7-4.el5

openhpi-libs.i386 0:2.14.0-5.el5

openib.noarch 0:1.4.1-3.el5

Complete!

[root@node2 ~]# scp node1:/root/*.rpm ./

[root@node2 ~]# yum localinstall -y *.rpm --nogpgcheck

Create the configuration file

[root@node1 ~]# cd /etc/corosync/

[root@node1 corosync]# cp corosync.conf.example corosync.conf

[root@node1 corosync]# vim corosync.conf

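Set bindnetaddr (line 10 of the file) to the network address of the cluster network, and add the service and aisexec sections (lines 34-42):

bindnetaddr: 192.168.1.0

service {

ver: 0

name: pacemaker

}

aisexec {

user: root

group: root

}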
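The bindnetaddr value is the network address of the interface corosync should bind to, rather than the node's own IP: it is the bitwise AND of the address and the netmask. As a worked example, the sketch below computes it for node1's address with the 255.255.255.0 mask used on this network; `network_address` is a hypothetical helper name.

```shell
# Print the network address for a dotted-quad IP and netmask.
# The body runs in a subshell (parentheses), so changing IFS stays local.
network_address() (
    IFS=.
    # Word splitting on "." turns both arguments into eight octets.
    set -- $1 $2
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
)

network_address 192.168.1.10 255.255.255.0   # prints 192.168.1.0
```

Both 192.168.1.10 and 192.168.1.20 give 192.168.1.0, which is why the same corosync.conf can be copied unchanged to node2.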

Create the log directory

[root@node1 corosync]# mkdir /var/log/cluster

[root@node1 corosync]# ssh node2 'mkdir /var/log/cluster'

To prevent other hosts from joining the cluster, authentication is required; generate an authkey

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

Copy the files to node2

[root@node1 corosync]# scp -p authkey corosync.conf node2:/etc/corosync/

authkey 100% 128 0.1KB/s 00:00

corosync.conf 100% 540 0.

Start the corosync service

[root@node1 corosync]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 corosync]# ssh node2 'service corosync start'

Starting Corosync Cluster Engine (corosync): [ OK ]

Verify that the corosync engine started correctly

[root@node1 corosync]# grep -i -e "corosync cluster engine" -e "configuration file" /var/log/messages

Aug 19 19:28:24 localhost smartd[3150]: Opened configuration file /etc/smartd.conf

Aug 19 19:28:24 localhost smartd[3150]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Aug 19 19:34:45 localhost smartd[3364]: Opened configuration file /etc/smartd.conf

Aug 19 19:34:45 localhost smartd[3364]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Aug 19 19:38:06 localhost smartd[3364]: Opened configuration file /etc/smartd.conf

Aug 19 19:38:06 localhost smartd[3364]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 18 10:28:01 localhost smartd[3361]: Opened configuration file /etc/smartd.conf

Oct 18 10:28:01 localhost smartd[3361]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 18 11:31:23 localhost corosync[4300]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 18 11:31:23 localhost corosync[4300]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Check whether the initial membership notifications were sent

[root@node1 corosync]# grep -i totem /var/log/messages

Oct 18 11:31:23 localhost corosync[4300]: [TOTEM ] Initializing transport (UDP/IP).

Oct 18 11:31:23 localhost corosync[4300]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Oct 18 11:31:23 localhost corosync[4300]: [TOTEM ] The network interface [192.168.1.10] is now up.

Oct 18 11:31:24 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:32:34 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:33:50 localhost corosync[4300]: [TOTEM ] Process pause detected for 1087 ms, flushing membership messages.

Oct 18 11:33:50 localhost corosync[4300]: [TOTEM ] A processor failed, forming new configuration.

Oct 18 11:33:50 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:33:53 localhost corosync[4300]: [TOTEM ] Process pause detected for 1759 ms, flushing membership messages.

Oct 18 11:33:53 localhost corosync[4300]: [TOTEM ] A processor failed, forming new configuration.

Oct 18 11:33:57 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:34:30 localhost corosync[4300]: [TOTEM ] A processor failed, forming new configuration.

Oct 18 11:34:34 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:34:35 localhost corosync[4300]: [TOTEM ] Process pause detected for 909 ms, flushing membership messages.

Oct 18 11:34:41 localhost corosync[4300]: [TOTEM ] Process pause detected for 910 ms, flushing membership messages.

Oct 18 11:34:41 localhost corosync[4300]: [TOTEM ] Process pause detected for 1490 ms, flushing membership messages.

Oct 18 11:34:42 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:34:42 localhost corosync[4300]: [TOTEM ] Process pause detected for 1311 ms, flushing membership messages.

Oct 18 11:34:42 localhost corosync[4300]: [TOTEM ] A processor failed, forming new configuration.

Oct 18 11:34:42 localhost corosync[4300]: [TOTEM ] Process pause detected for 947 ms, flushing membership messages.

Oct 18 11:34:43 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 18 11:35:17 localhost corosync[4300]: [TOTEM ] A processor failed, forming new configuration.

Oct 18 11:35:20 localhost corosync[4300]: [TOTEM ] A processor joined or left the membership and a new membership was formed.
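The TOTEM output above contains repeated "A processor failed" and "Process pause detected" messages, which suggests the membership was re-forming several times during start-up. A small sketch for summarising such events is shown below, assuming the messages land in a syslog-style file; `totem_summary` is a hypothetical helper name.

```shell
# Print how often corosync reported membership trouble in a log file.
# totem_summary is a hypothetical helper; pass the log path as $1.
totem_summary() {
    log=$1
    # grep -c prints the number of matching lines; "|| true" keeps a count
    # of zero (exit status 1) from aborting scripts that use "set -e".
    failed=$(grep -c 'TOTEM.*A processor failed' "$log" || true)
    paused=$(grep -c 'TOTEM.*Process pause detected' "$log" || true)
    echo "processor_failed=$failed process_pause=$paused"
}

# Intended use on a cluster node (commented out, needs the real log):
# totem_summary /var/log/messages
```

For the excerpt shown above this would count 5 failures and 7 pauses. On virtual machines, pauses of this kind are often a sign that the guest is short on CPU time, though that is an assumption about this setup rather than something the log proves.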

Check whether pacemaker has started

[root@node1 corosync]# grep -i pcmk_startup /var/log/messages

Oct 18 11:31:23 localhost corosync[4300]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 18 11:31:23 localhost corosync[4300]: [pcmk ] Logging: Initialized pcmk_startup

Oct 18 11:31:23 localhost corosync[4300]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 18 11:31:23 localhost corosync[4300]: [pcmk ] info: pcmk_startup: Service: 9

Oct 18 11:31:23 localhost corosync[4300]: [pcmk ] info: pcmk_startup: Local hostname: node1.a.com

[root@node2 ~]# grep -i pcmk_startup /var/log/messages

Oct 18 11:32:37 localhost corosync[4303]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 18 11:32:37 localhost corosync[4303]: [pcmk ] Logging: Initialized pcmk_startup

Oct 18 11:32:37 localhost corosync[4303]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 18 11:32:37 localhost corosync[4303]: [pcmk ] info: pcmk_startup: Service: 9

Oct 18 11:32:37 localhost corosync[4303]: [pcmk ] info: pcmk_startup: Local hostname: node2.a.com
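The individual grep checks above (engine started, configuration read, pacemaker initialised) can be bundled into one pass. The sketch below is one way to do that, using marker strings taken from the log lines shown in this post; `corosync_started` is a hypothetical helper name.

```shell
# Return 0 only if all three start-up markers are present in the log.
# corosync_started is a hypothetical helper; $1 is the syslog path.
corosync_started() {
    log=$1
    for marker in \
        'Corosync Cluster Engine' \
        'Successfully read main configuration file' \
        'pcmk_startup: CRM: Initialized'
    do
        # -F matches the marker literally, -q suppresses output.
        if ! grep -qF "$marker" "$log"; then
            echo "missing: $marker" >&2
            return 1
        fi
    done
    return 0
}

# Intended use on each node (commented out, needs the real log):
# corosync_started /var/log/messages && echo "corosync and pacemaker are up"
```

Because the function stops at the first missing marker and names it on stderr, it also indicates which of the three steps failed.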

Check the cluster membership status

[root@node1 ~]# crm status

============

Last updated: Thu Oct 18 11:54:19 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

[root@node2 ~]# crm status

============

Last updated: Thu Oct 18 11:54:29 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.a.com node2.a.com ]
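When this check is repeated often, reading the "Online:" line by eye gets tedious. The following sketch parses `crm status` text from standard input, assuming the output format shown above (a single `Online: [ ... ]` line); `online_nodes` and `all_online` are hypothetical helper names.

```shell
# Read `crm status` text on stdin and print the online nodes, one per line.
online_nodes() {
    sed -n 's/^Online: \[ \(.*\) \]$/\1/p' | tr ' ' '\n'
}

# Succeed only if every node named as an argument is listed as online.
all_online() {
    status=$(cat)
    for node in "$@"; do
        # -x requires the whole line to match, -F matches literally.
        printf '%s\n' "$status" | online_nodes | grep -qxF "$node" || return 1
    done
    return 0
}

# Intended use on a cluster node (commented out, needs crm):
# crm status | all_online node1.a.com node2.a.com && echo "both nodes online"
```

Reading from stdin keeps the parsing separate from the cluster tooling, so the functions can be checked against saved output.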

Check the configuration for errors

[root@node1 ~]# crm_verify -L

crm_verify[3639]: 2012/10/18_12:42:05 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[3639]: 2012/10/18_12:42:05 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[3639]: 2012/10/18_12:42:05 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

The check fails because no STONITH resources are defined; the fix used here is to disable STONITH

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit

Configure the IP resource

crm(live)configure# primitive webIP ocf:heartbeat:IPaddr params ip=192.168.1.100

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.1.100"

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit

Return to the top level and check the cluster status

crm(live)configure# end

crm(live)# status

============

Last updated: Thu Oct 18 12:51:05 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

Check the IP address

[root@node1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:36:75:47

inet addr:192.168.1.10 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe36:7547/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:22552 errors:0 dropped:0 overruns:0 frame:0

TX packets:33309 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2738041 (2.6 MiB) TX bytes:3908709 (3.7 MiB)

Base address:0x2000 Memory:c9020000-c9040000

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:36:75:47

inet addr:192.168.1.100 Bcast:192.168.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Base address:0x2000 Memory:c9020000-c9040000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:46 errors:0 dropped:0 overruns:0 frame:0

TX packets:46 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:3398 (3.3 KiB) TX bytes:3398 (3.3 KiB)

Check on node2

[root@node2 ~]# crm status

============

Last updated: Thu Oct 18 12:55:18 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

[root@node2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:C9:A7:CF

inet addr:192.168.1.20 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fec9:a7cf/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:65448 errors:0 dropped:0 overruns:0 frame:0

TX packets:56185 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:11677482 (11.1 MiB) TX bytes:7266921 (6.9 MiB)

Base address:0x2000 Memory:c9020000-c9040000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:8798 errors:0 dropped:0 overruns:0 frame:0

TX packets:8798 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:872483 (852.0 KiB) TX bytes:872483 (852.0 KiB)
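The two ifconfig listings above show that the virtual address 192.168.1.100 exists only on node1 (as eth0:0). A short sketch for checking this without reading the whole listing follows; it assumes the older net-tools output format shown here (`inet addr:...`), and `has_ip` is a hypothetical helper name.

```shell
# Read ifconfig output on stdin; succeed if the given address is configured.
has_ip() {
    # The trailing space stops 192.168.1.10 from matching 192.168.1.100.
    grep -qF "inet addr:$1 "
}

# Intended use on a node (commented out, needs ifconfig):
# ifconfig | has_ip 192.168.1.100 && echo "this node holds the virtual IP"
```

Newer ifconfig versions and `ip addr` print addresses differently, so the match string would need adjusting there.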

Define the web resource

[root@node1 ~]# yum install -y httpd

[root@node2 ~]# yum install -y httpd

[root@node1 ~]# echo "node1.a.com" >/var/www/html/index.html

[root@node2 ~]# echo "node2.a.com" >/var/www/html/index.html

View the parameters of the httpd resource agent

[root@node1 ~]# crm

crm(live)# ra

crm(live)ra# meta lsb:httpd

lsb:httpd

Apache is a World Wide Web server. It is used to serve \

HTML files and CGI.

Operations' defaults (advisory minimum):

start timeout=15

stop timeout=15

status timeout=15

restart timeout=15

force-reload timeout=15

monitor interval=15 timeout=15 start-delay=15
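An `lsb:` resource is driven through its init script, so the cluster relies on that script returning the exit codes defined by the LSB convention: for the `status` action, 0 means running and 3 means not running. The sketch below maps those codes to words; `lsb_state` is a hypothetical helper name, and the command to run is passed as arguments.

```shell
# Run a status command and translate its exit code using the LSB convention.
lsb_state() {
    rc=0
    # "|| rc=$?" records the exit code without tripping "set -e".
    "$@" >/dev/null 2>&1 || rc=$?
    case $rc in
        0) echo running ;;
        3) echo stopped ;;
        *) echo unexpected ;;
    esac
}

# Intended use on a node (commented out, needs the httpd init script):
# lsb_state service httpd status
```

If a script answers `status` with anything other than 0 or 3 in the ordinary running and stopped cases, the cluster's monitor operation may misjudge the resource.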

Define the httpd resource

crm(live)# configure

crm(live)configure# primitive webserver lsb:httpd

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.1.100"

primitive webserver lsb:httpd

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit

Check the status

crm(live)# status

============

Last updated: Thu Oct 18 13:09:19 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

2 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node2.a.com

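httpd has started, but on node2. In a more advanced cluster, as the number of resources grows they are spread across different nodes to balance the load as far as possible. Here the IP address and the web server must be kept on the same node, so define them as a group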

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# group web webIP webserver

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.1.100"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit

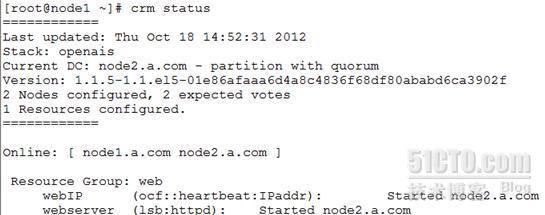

Check the cluster status

crm(live)configure# end

crm(live)# status

============

Last updated: Thu Oct 18 13:17:47 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

Check the state of node1

[root@node1 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:36:75:47

inet addr:192.168.1.10 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe36:7547/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:46796 errors:0 dropped:0 overruns:0 frame:0

TX packets:59188 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:6835722 (6.5 MiB) TX bytes:7309024 (6.9 MiB)

Base address:0x2000 Memory:c9020000-c9040000

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:36:75:47

inet addr:192.168.1.100 Bcast:192.168.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

Base address:0x2000 Memory:c9020000-c9040000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:971 errors:0 dropped:0 overruns:0 frame:0

TX packets:971 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:97053 (94.7 KiB) TX bytes:97053 (94.7 KiB)

[root@node1 ~]# service httpd status

httpd (pid 3864) is running...

Check node2

[root@node2 ~]# service httpd status

httpd is stopped

[root@node2 ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:C9:A7:CF

inet addr:192.168.1.20 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fec9:a7cf/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:90716 errors:0 dropped:0 overruns:0 frame:0

TX packets:79974 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:15007931 (14.3 MiB) TX bytes:11327408 (10.8 MiB)

Base address:0x2000 Memory:c9020000-c9040000

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:13129 errors:0 dropped:0 overruns:0 frame:0

TX packets:13129 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1302381 (1.2 MiB) TX bytes:1302381 (1.2 MiB)

[root@node2 ~]# crm status

============

Last updated: Thu Oct 18 13:23:13 2012

Stack: openais

Current DC: node2.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

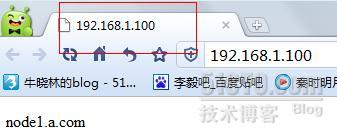

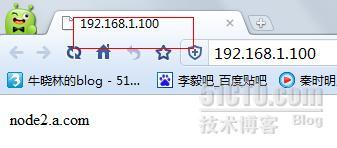

Test access to 192.168.1.100

Now simulate a failure of node1

[root@node1 ~]# service corosync stop

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:...................[ OK ]

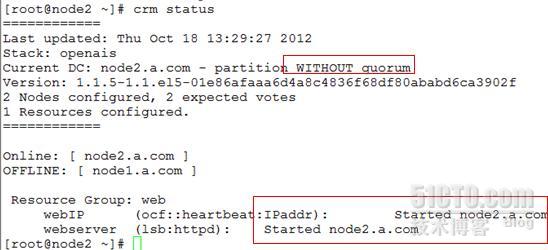

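Check node2

Although node2 is still online, none of the resources are available: with node1 gone, node2 holds only one of the two expected votes, so its partition has no quorum and Pacemaker, by default, stops the resources

Access the web page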
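The reason the surviving node refuses to run resources is plain arithmetic: a partition has quorum only when it holds strictly more than half of the expected votes. A worked sketch of that rule follows; `has_quorum` is a hypothetical helper name, and the vote counts match the "2 expected votes" reported by `crm status`.

```shell
# Succeed if a partition holding $1 votes out of $2 expected votes has
# quorum, i.e. strictly more than half of the expected votes.
has_quorum() {
    [ $(( $1 * 2 )) -gt "$2" ]
}

has_quorum 2 2 && echo "both nodes up: quorum"
has_quorum 1 2 || echo "one node left: no quorum"
```

With two nodes, losing either one always drops the survivor to exactly half the votes, which is why a two-node cluster cannot keep quorum after a single failure.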

Tell the cluster to ignore the loss of quorum

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.1.100"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# commit
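The interactive crm sessions in this walkthrough can also be issued as one-shot commands, which is convenient when rebuilding the same test cluster. The sketch below collects the settings used so far; `run`, `configure_web_cluster`, and the `DRY_RUN` switch are hypothetical names introduced for illustration, and by default the script only prints the commands rather than executing them. Note that it reproduces this post's choices, including disabling STONITH, which the crm_verify output itself warns against for clusters with shared data.

```shell
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 on a
# cluster node to execute them. All helper names are hypothetical.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

configure_web_cluster() {
    vip=$1
    run crm configure property stonith-enabled=false
    run crm configure property no-quorum-policy=ignore
    run crm configure primitive webIP ocf:heartbeat:IPaddr params ip="$vip"
    run crm configure primitive webserver lsb:httpd
    run crm configure group web webIP webserver
    # Re-check the live configuration once everything is defined.
    run crm_verify -L
}

configure_web_cluster 192.168.1.100
```

Printing first and executing second makes it easy to review exactly what would be sent to the cluster before anything changes.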

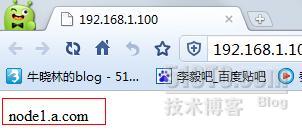

Start the service on node1 again

[root@node1 ~]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

Check node1

Access the web page

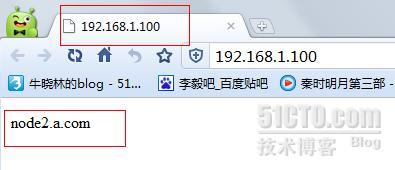

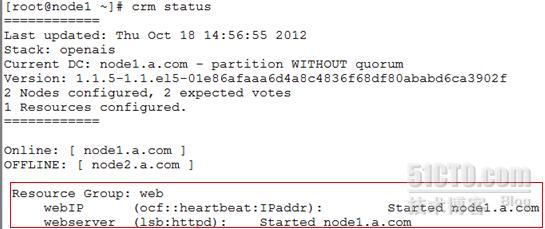

Stop the service on node2

[root@node2 ~]# service corosync stop

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:..........[ OK ]

Test access

Check node1

Reposted from: https://blog.51cto.com/niuxiaolin/1031826
