Introduction

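The Hadoop ecosystem commonly relies on two security authentication mechanisms: Kerberos and LDAP. I have already experienced how tedious Kerberos is. LDAP, on the other hand, I have used in production, so Hive + LDAP + Sentry should be comparatively easy to work with. For the Hive + Sentry configuration, see "Sentry Service for Hive Without kerberos". For the LDAP deployment, see "openldap+phpldapadmin".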

LDAP configuration

/etc/openldap/slapd.conf

include /etc/openldap/schema/corba.schema

include /etc/openldap/schema/core.schema

include /etc/openldap/schema/cosine.schema

include /etc/openldap/schema/duaconf.schema

include /etc/openldap/schema/dyngroup.schema

include /etc/openldap/schema/inetorgperson.schema

include /etc/openldap/schema/java.schema

include /etc/openldap/schema/misc.schema

include /etc/openldap/schema/nis.schema

include /etc/openldap/schema/openldap.schema

include /etc/openldap/schema/ppolicy.schema

include /etc/openldap/schema/collective.schema

allow bind_v2

pidfile /var/run/openldap/slapd.pid

argsfile /var/run/openldap/slapd.args

TLSCACertificatePath /etc/openldap/certs

TLSCertificateFile "\"OpenLDAP Server\""

TLSCertificateKeyFile /etc/openldap/certs/password

database config

access to *

by dn.exact="gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth" manage

by * none

database monitor

access to *

by dn.exact="gidNumber=0+uidNumber=0,cn=peercred,cn=external,cn=auth" read

by dn.exact="cn=Manager,dc=bdbigdata,dc=com" read

by * none

database bdb

suffix "dc=bdbigdata,dc=com"

checkpoint 1024 15

rootdn "cn=Manager,dc=bdbigdata,dc=com"

rootpw bigdata

directory /var/lib/ldap

loglevel 296

index objectClass eq,pres

index ou,cn,mail,surname,givenname eq,pres,sub

index uidNumber,gidNumber,loginShell eq,pres

index uid,memberUid eq,pres,sub

index nisMapName,nisMapEntry eq,pres,sub
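The rootpw line above stores the root password in plain text. slapd also accepts a hashed value, which is normally produced with the slappasswd tool. As a rough illustration of what an {SSHA} value contains, here is a minimal Python sketch. The function names ssha_hash and ssha_verify are made up for this example and are not part of OpenLDAP.

```python
import base64
import hashlib
import os
from typing import Optional


def ssha_hash(password: str, salt: Optional[bytes] = None) -> str:
    """Build an {SSHA} value: base64(SHA1(password + salt) + salt)."""
    if salt is None:
        salt = os.urandom(4)  # random salt, appended after the digest
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    return "{SSHA}" + base64.b64encode(digest + salt).decode("ascii")


def ssha_verify(password: str, hashed: str) -> bool:
    """Re-hash the password with the stored salt and compare digests."""
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # a SHA-1 digest is 20 bytes long
    return hashlib.sha1(password.encode("utf-8") + salt).digest() == digest


if __name__ == "__main__":
    # The printed line could replace "rootpw bigdata" in slapd.conf.
    print("rootpw", ssha_hash("bigdata"))
```

The output changes on every run because the salt is random. In practice slappasswd is the safer choice, since it uses the same code path as slapd itself.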

Hive configuration

/etc/hive/conf/hive-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:oracle:thin:@192.168.1.6:1521/BDODS</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>oracle.jdbc.driver.OracleDriver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>H222</value>

</property>

<property>

<name>datanucleus.readOnlyDatastore</name>

<value>false</value>

</property>

<property>

<name>datanucleus.fixedDatastore</name>

<value>false</value>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>true</value>

</property>

<property>

<name>datanucleus.autoCreateTables</name>

<value>true</value>

</property>

<property>

<name>datanucleus.autoCreateColumns</name>

<value>true</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop2-148:23125,hadoop2-149:23125</value>

</property>

<property>

<name>hive.auto.convert.join</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.warehouse.subdir.inherit.perms</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://Server:9083</value>

</property>

<property>

<name>hive.metastore.client.socket.timeout</name>

<value>36000</value>

</property>

<property>

<name>hive.zookeeper.quorum</name>

<value>hadoop2-152:2181,hadoop2-153:2181,hadoop2-154:2181,hadoop2-181:2181,hadoop2-182:2181</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hive.server2.thrift.min.worker.threads</name>

<value>20</value>

</property>

<property>

<name>hive.server2.thrift.max.worker.threads</name>

<value>100</value>

</property>

<property>

<name>hive.metastore.authorization.storage.checks</name>

<value>true</value>

</property>

<property>

<name>dfs.client.read.shortcircuit</name>

<value>true</value>

</property>

<property>

<name>dfs.domain.socket.path</name>

<value>/var/lib/hadoop-hdfs/dn_socket</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/var/log/hive/</value>

</property>

<property>

<name>hive.exec.compress.intermediate</name>

<value>true</value>

</property>

<property>

<name>hive.exec.compress.output</name>

<value>true</value>

</property>

<property>

<name>mapred.output.compression.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

<property>

<name>hive.exec.parallel</name>

<value>true</value>

</property>

<property>

<name>hive.exec.parallel.thread.number</name>

<value>16</value>

</property>

<property>

<name>hive.mapred.mode</name>

<value>strict</value>

</property>

<property>

<name>hive.exec.dynamic.partition.mode</name>

<value>strict</value>

</property>

<property>

<name>hive.exec.max.dynamic.partitions</name>

<value>300000</value>

</property>

<property>

<name>hive.exec.max.dynamic.partitions.pernode</name>

<value>1000</value>

</property>

<!-- HBase config -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>hadoop2-152:2181,hadoop2-153:2181,hadoop2-154:2181,hadoop2-181:2181,hadoop2-182:2181</value>

</property>

<!-- Sentry Hiveserver2 config-->

<property>

<name>hive.sentry.conf.url</name>

<value>file:///etc/hive/conf/sentry-site.xml</value>

</property>

<property>

<name>hive.server2.session.hook</name>

<value>org.apache.sentry.binding.hive.HiveAuthzBindingSessionHook</value>

</property>

<property>

<name>hive.security.authorization.task.factory</name>

<value>org.apache.sentry.binding.hive.SentryHiveAuthorizationTaskFactoryImpl</value>

</property>

<!-- Sentry Hive metastore config -->

<property>

<name>hive.metastore.filter.hook</name>

<value>org.apache.sentry.binding.metastore.SentryMetaStoreFilterHook</value>

</property>

<property>

<name>hive.metastore.pre.event.listeners</name>

<value>org.apache.sentry.binding.metastore.MetastoreAuthzBinding</value>

</property>

<property>

<name>hive.metastore.event.listeners</name>

<value>org.apache.sentry.binding.metastore.SentryMetastorePostEventListener</value>

</property>

<!-- OpenLDAP -->

<property>

<name>hive.server2.authentication</name>

<value>LDAP</value>

</property>

<property>

<name>hive.server2.authentication.ldap.url</name>

<value>ldap://10.205.54.14</value>

</property>

<property>

<name>hive.server2.authentication.ldap.baseDN</name>

<value>ou=People,dc=bdbigdata,dc=com</value>

</property>

</configuration>
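The three OpenLDAP properties at the end of hive-site.xml are what tie HiveServer2 to the directory. The Python sketch below pulls them out of a fragment of the file and shows the DN that a login would probably bind with. It assumes that, with only baseDN configured, HiveServer2 binds as uid=<user>,<baseDN>; check this against your Hive version. The helper names read_properties and simple_bind_dn are made up for this example.

```python
import xml.etree.ElementTree as ET

# Fragment copied from the OpenLDAP section of hive-site.xml above.
HIVE_SITE_FRAGMENT = """
<configuration>
  <property>
    <name>hive.server2.authentication</name>
    <value>LDAP</value>
  </property>
  <property>
    <name>hive.server2.authentication.ldap.url</name>
    <value>ldap://10.205.54.14</value>
  </property>
  <property>
    <name>hive.server2.authentication.ldap.baseDN</name>
    <value>ou=People,dc=bdbigdata,dc=com</value>
  </property>
</configuration>
"""


def read_properties(xml_text: str) -> dict:
    """Return the name -> value pairs of a Hadoop-style configuration."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value")
            for p in root.findall("property")}


def simple_bind_dn(user: str, base_dn: str) -> str:
    """Assumed bind DN: the login name as uid directly under the base DN."""
    return f"uid={user},{base_dn}"


if __name__ == "__main__":
    props = read_properties(HIVE_SITE_FRAGMENT)
    base_dn = props["hive.server2.authentication.ldap.baseDN"]
    print(props["hive.server2.authentication.ldap.url"])
    print(simple_bind_dn("hive", base_dn))
```

If this assumption holds, the hive user has to exist as an entry directly under ou=People,dc=bdbigdata,dc=com for the login to succeed.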

P.S. Pay attention to the OpenLDAP configuration.

phpLDAPadmin

$servers = new Datastore();

$servers->newServer('ldap_pla');

$servers->setValue('server','name','Bigdata LDAP Server');

$servers->setValue('server','host','10.205.54.14');

$servers->setValue('server','port',389);

$servers->setValue('server','base',array('dc=bdbigdata,dc=com'));

$servers->setValue('login','auth_type','session');

$servers->setValue('login','bind_id','cn=Manager,dc=bdbigdata,dc=com');

$servers->setValue('login','bind_pass','bigdata');

$servers->setValue('server','tls',false);

Setting up LDAP

Adding and modifying users is more convenient through phpLDAPadmin.
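For readers who prefer the command line, a user entry can also be written as LDIF and loaded with ldapadd. The Python sketch below only generates the LDIF text. The helper name user_ldif, the uidNumber and gidNumber values, and the home directory are placeholders, and the choice of the inetOrgPerson and posixAccount object classes is an assumption rather than something taken from the original setup.

```python
def user_ldif(uid: str, uid_number: int, gid_number: int,
              base_dn: str = "ou=People,dc=bdbigdata,dc=com") -> str:
    """Return an LDIF entry for one user directly under base_dn."""
    lines = [
        f"dn: uid={uid},{base_dn}",
        "objectClass: inetOrgPerson",   # requires cn and sn
        "objectClass: posixAccount",    # requires uid, uidNumber, gidNumber, homeDirectory
        f"uid: {uid}",
        f"cn: {uid}",
        f"sn: {uid}",
        f"uidNumber: {uid_number}",
        f"gidNumber: {gid_number}",
        f"homeDirectory: /home/{uid}",  # placeholder path
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # 10001 is an arbitrary example ID, not a value from the original setup.
    print(user_ldif("hive", 10001, 10001), end="")
```

The output could be saved to a file and loaded with something like ldapadd -x -D "cn=Manager,dc=bdbigdata,dc=com" -W -f hive.ldif, where hive.ldif is a hypothetical file name. The password would then be set separately, for example with ldappasswd or in phpLDAPadmin.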

Verifying Hive

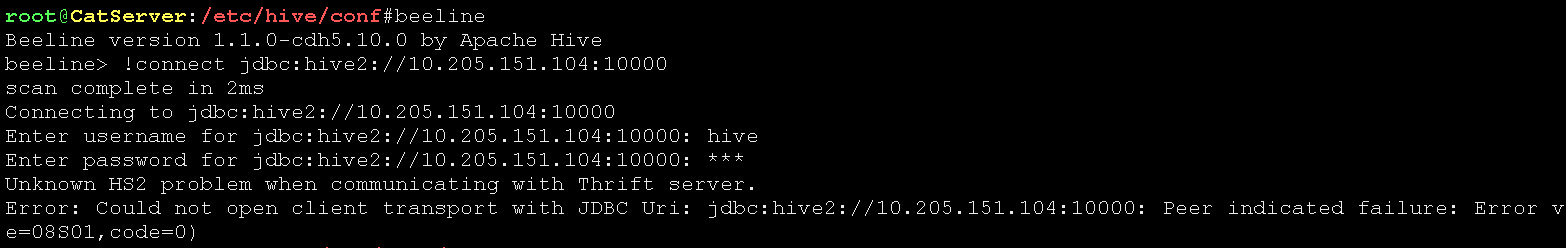

Log in as the hive user and enter a wrong password.

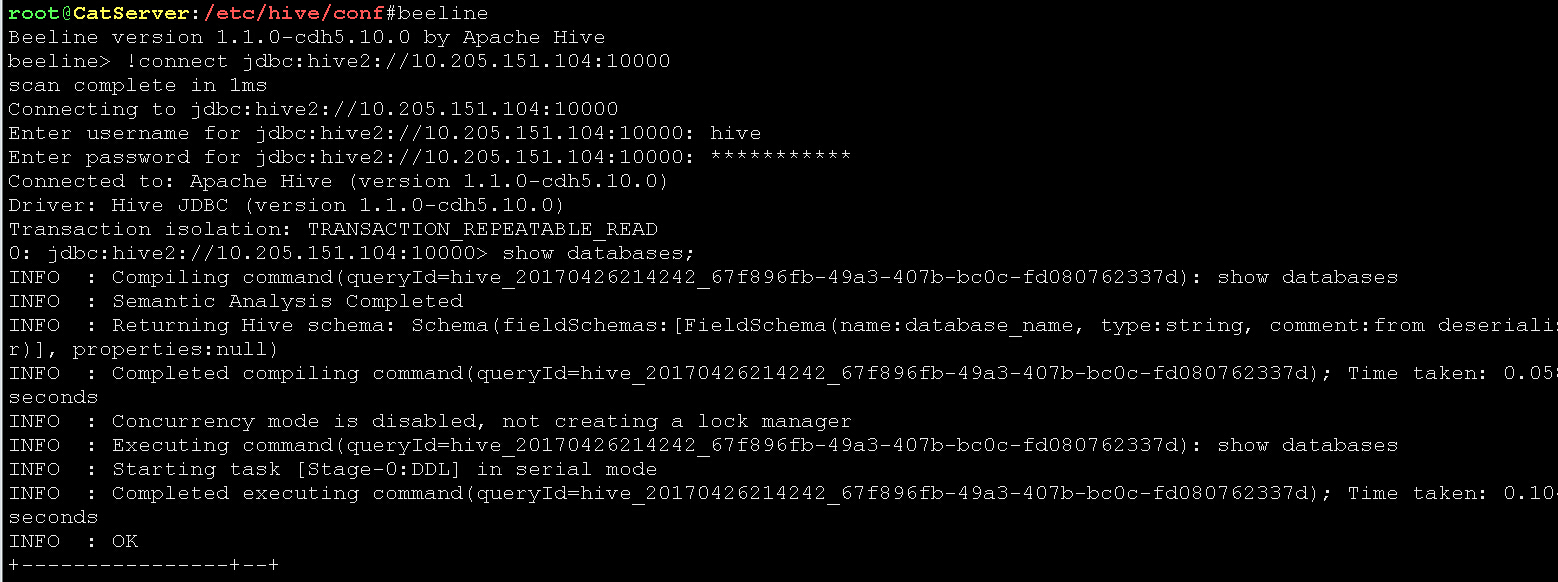

Log in as hive and enter the correct password (the one set in phpLDAPadmin).
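The screenshots are not reproduced as text here. One way to repeat the check is with beeline against the HiveServer2 port configured above (10000). The Python sketch below only assembles the command line. The host name hiveserver2.example.com and the password value are placeholders, and beeline_command is a made-up helper name.

```python
import shlex


def beeline_command(host: str, user: str, password: str,
                    port: int = 10000, database: str = "default") -> list:
    """Build a beeline argument list for an LDAP-authenticated login."""
    url = f"jdbc:hive2://{host}:{port}/{database}"
    # -u is the JDBC URL, -n the user name, -p the password
    return ["beeline", "-u", url, "-n", user, "-p", password]


if __name__ == "__main__":
    # Placeholder host and password; substitute real values before running.
    argv = beeline_command("hiveserver2.example.com", "hive", "secret")
    print(" ".join(shlex.quote(a) for a in argv))
```

With a wrong password the connection attempt should be rejected, and with the password set in phpLDAPadmin it should open a session, matching the two cases described above.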
