1. Hash verification failure when installing a parcel

First, compute the parcel's SHA-1 hash with the following command:

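sha1sum CDH-5.12.0-1.cdh5.12.0.p0.29-el6.parcel

Then compare the value printed by sha1sum with the values in CDH-5.12.0-1.cdh5.12.0.p0.29-el6.parcel.sha1 and manifest.json. If they differ, the downloaded file CDH-5.12.0-1.cdh5.12.0.p0.29-el6.parcel is probably corrupted. Download it again, for example with a download manager such as Xunlei.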
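The same comparison can be scripted. Below is a minimal Python sketch. It assumes that manifest.json lists each parcel under a top-level "parcels" array whose entries carry "parcelName" and "hash" fields (check this against your own manifest.json) and that the .sha1 file begins with the hex digest. The parcel is streamed through hashlib.sha1, so the result should equal what sha1sum prints. The function names are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def sha1_of_file(path, chunk_size=1 << 20):
    """Return the SHA-1 hex digest of a file (the same value `sha1sum` prints)."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        # Read in chunks so a multi-GB parcel is never loaded into memory at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hash_from_sha1_file(path):
    """Assumes the .sha1 file starts with the hex digest; anything after whitespace is ignored."""
    parts = Path(path).read_text().split()
    return parts[0].lower() if parts else None


def hash_from_manifest(path, parcel_name):
    """Assumes a top-level "parcels" list whose entries have "parcelName" and "hash"."""
    manifest = json.loads(Path(path).read_text())
    for entry in manifest.get("parcels", []):
        if entry.get("parcelName") == parcel_name:
            return entry.get("hash", "").lower()
    return None  # parcel not listed in this manifest


def verify_parcel(parcel_path, sha1_path, manifest_path):
    """Compare the parcel's actual SHA-1 with both published values."""
    actual = sha1_of_file(parcel_path)
    return {
        "actual": actual,
        "sha1_file_ok": actual == hash_from_sha1_file(sha1_path),
        "manifest_ok": actual == hash_from_manifest(manifest_path, Path(parcel_path).name),
    }
```

For example, verify_parcel("CDH-5.12.0-1.cdh5.12.0.p0.29-el6.parcel", "CDH-5.12.0-1.cdh5.12.0.p0.29-el6.parcel.sha1", "manifest.json") returns the computed hash along with two booleans. If either boolean is False, download the parcel again.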

2. When adding hosts with Cloudera Manager, mysql-libs-* conflicts with the installed MySQL

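If a newer version of MySQL is already installed on the node, it may conflict with mysql-libs. MySQL provides an official workaround: install the mysql-libs compatibility package. For example: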

[root@test1 soft]# tar xvf mysql-5.7.19-1.el6.x86_64.rpm-bundle.tar

mysql-community-libs-5.7.19-1.el6.x86_64.rpm

mysql-community-libs-compat-5.7.19-1.el6.x86_64.rpm # compatibility package for mysql-libs

mysql-community-devel-5.7.19-1.el6.x86_64.rpm

mysql-community-embedded-5.7.19-1.el6.x86_64.rpm

mysql-community-common-5.7.19-1.el6.x86_64.rpm

mysql-community-server-5.7.19-1.el6.x86_64.rpm

mysql-community-test-5.7.19-1.el6.x86_64.rpm

mysql-community-embedded-devel-5.7.19-1.el6.x86_64.rpm

mysql-community-client-5.7.19-1.el6.x86_64.rpm

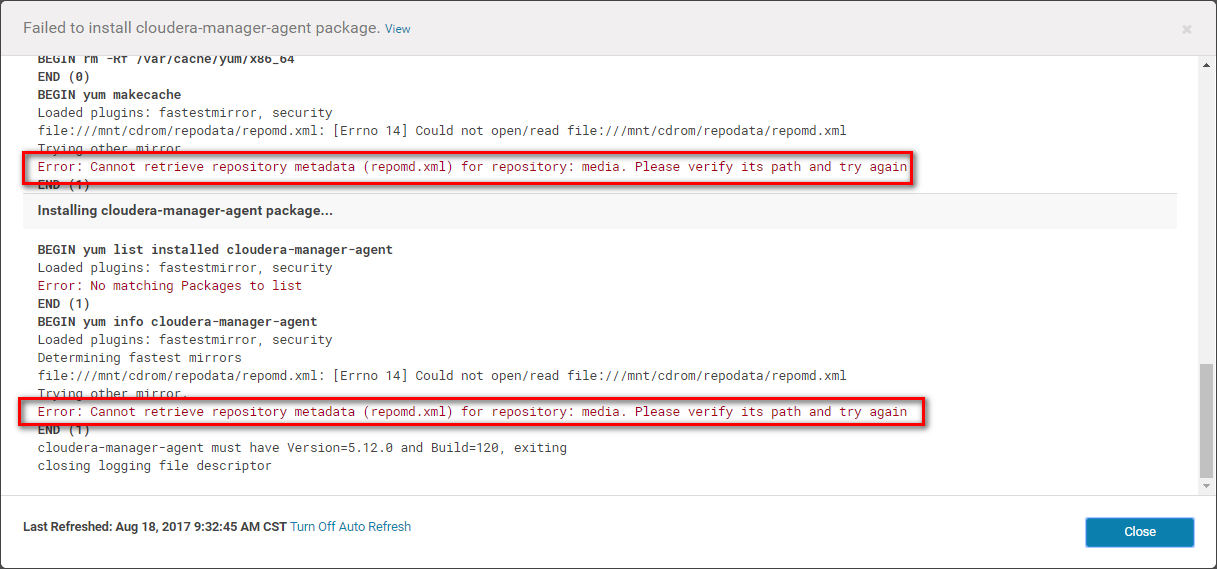

[root@test1 soft]#

3. The centos***.iso installation media is not installed

Solution: mount the operating system ISO and use it as a local yum repository.
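The original gives no commands for this step, so what follows is a hedged sketch. After the ISO is loop-mounted (for example `mount -o loop <iso> /mnt/cdrom`, where the mount point is an assumption), a .repo file under /etc/yum.repos.d/ with a file:// baseurl pointing at the mount is usually enough. The Python helper below only renders that stanza, using the same key=value layout as the cloudera-manager.repo file in item 8. The repo id "local-iso" and the path /mnt/cdrom are hypothetical.

```python
def render_repo(repo_id, name, baseurl, gpgcheck=False, enabled=True):
    """Render one yum .repo stanza: an [id] header followed by key=value lines."""
    lines = [
        f"[{repo_id}]",
        f"name={name}",
        f"baseurl={baseurl}",
        f"enabled={int(enabled)}",
        f"gpgcheck={int(gpgcheck)}",
    ]
    return "\n".join(lines) + "\n"


def local_iso_repo(mount_point="/mnt/cdrom", repo_id="local-iso"):
    """Hypothetical defaults: the ISO is assumed to be loop-mounted at mount_point."""
    # "file://" plus an absolute path gives three slashes, e.g. file:///mnt/cdrom
    return render_repo(repo_id, "Local OS ISO", "file://" + mount_point.rstrip("/"))
```

You could write the result with Path("/etc/yum.repos.d/local-iso.repo").write_text(local_iso_repo()) and then run yum clean all followed by yum repolist. The repository should appear in the list, just as cloudera-manager does in item 8.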

4. How do I check the versions of the components available in CDH?

cat manifest.json

5. The Validations page recommends changing swappiness

When installing CDH 5.4.0, the Validations page showed the following message:

Cloudera recommends setting /proc/sys/vm/swappiness to at most 10. Current setting is 60. Use the sysctl command to change this setting at runtime and edit /etc/sysctl.conf for this setting to be saved after a reboot. You may continue with installation, but you may run into issues with Cloudera Manager reporting that your hosts are unhealthy because they are swapping. The following hosts are affected: test1; test2

About swappiness:

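Linux does not wait until all physical memory is used up before it starts using the swap partition. When swap gets used is controlled by the swappiness parameter. You can check its value as follows: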

[root@rhce ~]# cat /proc/sys/vm/swappiness

60

The output shows that the default value is 60. Note that:

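With swappiness=0, the system uses physical memory as much as possible before touching swap space. With swappiness=100, it uses the swap partition aggressively and moves data out of memory into swap promptly. To make the OS rely on physical memory as much as possible and reduce swap usage, which improves performance, lower this value (the Validations message recommends at most 10). First change it at runtime, then make the change persistent so it survives a reboot: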

[root@test1 ~]# sysctl vm.swappiness=10 # runtime change (lost after a reboot)

vm.swappiness = 10

[root@test1 ~]# cat /proc/sys/vm/swappiness # verify

10

[root@test1 ~]# echo 'vm.swappiness=10' >> /etc/sysctl.conf # persistent change

[root@test1 ~]# tail -1 /etc/sysctl.conf # verify

vm.swappiness=10

[root@test1 ~]#
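Both halves of the fix can be checked at once: the live value and the value restored at boot. Here is a minimal Python sketch. It reads the same /proc file as above and parses sysctl.conf text. The limit of 10 comes from the Validations message, and the function names are hypothetical. One detail matters here: if sysctl.conf already contained a vm.swappiness line, the appended line only takes effect because sysctl applies lines in order, so the last one wins.

```python
import re
from pathlib import Path

RECOMMENDED_MAX = 10  # from the Cloudera Validations message quoted above


def runtime_swappiness(path="/proc/sys/vm/swappiness"):
    """Current value, equivalent to `cat /proc/sys/vm/swappiness`."""
    return int(Path(path).read_text().strip())


def persistent_swappiness(conf_text):
    """Return the vm.swappiness value sysctl.conf text would apply, or None if unset.

    Comment lines ('#' or ';') are skipped; if the key appears more than once,
    the last occurrence wins because the lines are applied in order.
    """
    value = None
    for line in conf_text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue
        m = re.fullmatch(r"vm\.swappiness\s*=\s*(\d+)", line)
        if m:
            value = int(m.group(1))
    return value


def swappiness_ok(runtime, persistent, limit=RECOMMENDED_MAX):
    """True only if both the live value and the value restored at boot are within the limit."""
    return runtime <= limit and persistent is not None and persistent <= limit
```

Usage: swappiness_ok(runtime_swappiness(), persistent_swappiness(Path("/etc/sysctl.conf").read_text())) should return True on each host listed in the Validations message.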

6. Distributed None

Installing Selected Parcels

The selected parcels are being downloaded and installed on all the hosts in the cluster.

Fix for Distributed None: in /etc/hosts, keep the following two default entries:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 # => keep

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6 # => keep

7. Clock off

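After the Hadoop cluster was installed, HDFS reported "clock off", although a check found no significant time difference from the NTP server. The problem was eventually fixed by editing /etc/ntp.conf so that only the local NTP server remains in the server entries.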
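Here is a hedged Python sketch of that ntp.conf edit. It comments out every server line except the one for the local NTP server and leaves all other directives untouched. If no entry for the local server exists yet, it appends one with iburst (a standard ntp.conf option that speeds up the first synchronization). The server address is whatever your local NTP server is; the values in the test are hypothetical.

```python
def keep_only_server(conf_text, local_server):
    """Comment out every `server` line in ntp.conf text except the one for local_server.

    Other directives (driftfile, restrict, ...) and existing comments are kept as-is.
    """
    out = []
    kept = False
    for line in conf_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "server":
            if len(fields) > 1 and fields[1] == local_server:
                out.append(line)  # the local server stays active
                kept = True
            else:
                out.append("#" + line)  # disable public/other servers
            continue
        out.append(line)
    if not kept:
        # No existing entry for the local server: add one.
        out.append(f"server {local_server} iburst")
    return "\n".join(out) + "\n"
```

After writing the result back to /etc/ntp.conf, restart ntpd (service ntpd restart on RHEL/CentOS 6). Then run ntpq -p, which should list only the local server as a peer.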

8. Offline Cloudera Manager repository not recognized

After configuring the repo file, running yum repolist to check the CM repository failed with this error:

namenode1:/var/www/html# yum repolist

Loaded plugins: product-id, search-disabled-repos, security, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

http://10.188.37.36/test/cm5/redhat/6/x86_64/5.12/repodata/repomd.xml: [Errno 14] PYCURL ERROR 22 - "The requested URL returned error: 403 Forbidden"

Trying other mirror.

To address this issue please refer to the below knowledge base article

https://access.redhat.com/solutions/69319

If above article doesn't help to resolve this issue please open a ticket with Red Hat Support.

repo id repo name status

Packages Red Hat Enterprise Linux local 3,854

cloudera-manager Cloudera Manager 7

repolist: 3,861

namenode1:/var/www/html#

Checking shows that the file test/cm5/redhat/6/x86_64/5.12/repodata/repomd.xml exists and has 777 permissions:

namenode1:/var/www/html# ll

lrwxrwxrwx 1 root root 10 Aug 30 14:54 test -> /data/soft

namenode1:/var/www/html# ll test/cm5/redhat/6/x86_64/5.12/repodata/repomd.xml

-rwxrwxrwx 1 root root 951 Aug 9 2017 test/cm5/redhat/6/x86_64/5.12/repodata/repomd.xml

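namenode1:/var/www/html#

However, test is a symbolic link whose real directory is /data/soft. Remove the symlink, copy the installation files into /var/www/html, and point baseurl at the copied directory: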

namenode1:/var/www/html# ll

total 4

drwxrwxrwx 3 root root 4096 Aug 30 14:51 soft

namenode1:/var/www/html# vi /etc/yum.repos.d/cloudera-manager.repo

[cloudera-manager]

# Packages for Cloudera Manager, Version 5, on RedHat or CentOS 6 x86_64

name=Cloudera Manager

baseurl=http://10.188.37.36/soft/cm5/redhat/6/x86_64/5.12/

gpgcheck=0

"/etc/yum.repos.d/cloudera-manager.repo" 5L, 184C written

namenode1:/var/www/html# yum repolist

Loaded plugins: product-id, search-disabled-repos, security, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

cloudera-manager | 951 B 00:00

repo id repo name status

Packages Red Hat Enterprise Linux local 3,854

cloudera-manager Cloudera Manager 7

repolist: 3,861

namenode1:/var/www/html#
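Stray links like test -> /data/soft are easy to miss in an ll listing. Below is a minimal Python sketch that walks a web root and reports every symlink whose real target lies outside it, which is the situation the copy fixed here. The original does not say why Apache refused to serve through the link. SELinux labels or directory permissions on /data are common suspects, but that is an assumption, not something the transcript shows.

```python
import os


def symlinks_leaving_root(doc_root):
    """List (link, real_target) pairs under doc_root whose target resolves outside doc_root."""
    root = os.path.realpath(doc_root)
    found = []
    # os.walk does not descend into symlinked directories by default (followlinks=False),
    # but it still lists them in dirnames, so they are inspected here.
    for dirpath, dirnames, filenames in os.walk(doc_root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                target = os.path.realpath(path)
                if os.path.commonpath([root, target]) != root:
                    found.append((path, target))
    return sorted(found)
```

On the host above, symlinks_leaving_root("/var/www/html") would have reported the test link before the copy and nothing afterwards.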

9. Safe mode will be turned off automatically once the thresholds have been reached.

org.apache.hadoop.ipc.RetriableException: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/tmp/.cloudera_health_monitoring_canary_files/.canary_file_2018_09_11-19_09_36. Name node is in safe mode.

The reported blocks 0 has reached the threshold 0.9990 of total blocks 0. The number of live datanodes 0 needs an additional 1 live datanodes to reach the minimum number 1.

Safe mode will be turned off automatically once the thresholds have been reached.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1497)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2767)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2654)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:599)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:112)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:401)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2211)

Caused by: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/tmp/.cloudera_health_monitoring_canary_files/.canary_file_2018_09_11-19_09_36. Name node is in safe mode.

The reported blocks 0 has reached the threshold 0.9990 of total blocks 0. The number of live datanodes 0 needs an additional 1 live datanodes to reach the minimum number 1.

Safe mode will be turned off automatically once the thresholds have been reached.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1493)

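... 14 more

Solution: leave safe mode manually. As the transcript shows, the command is refused for root and must run as the HDFS superuser (hdfs):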

data1:/usr/share/java# hadoop dfsadmin -safemode leave

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

safemode: Access denied for user root. Superuser privilege is required

data1:/usr/share/java# su hdfs

data1:/usr/share/java# hadoop dfsadmin -safemode leave

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

Safe mode is OFF in data1/10.188.37.36:8020

Safe mode is OFF in data2/10.188.37.37:8020
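The deprecation warning points to the hdfs command. Running as the HDFS superuser, the current form would be sudo -u hdfs hdfs dfsadmin -safemode leave (assuming sudo is available), and hdfs dfsadmin -safemode get reports the state without changing it. The log also shows 0 live DataNodes, so confirming that the DataNodes are up is worthwhile. If the check is scripted, a small parser for those "Safe mode is ON/OFF in ..." lines can confirm that every NameNode has left safe mode. This is a Python sketch with hypothetical function names:

```python
import re

SAFEMODE_LINE = re.compile(r"Safe mode is (ON|OFF) in (\S+)")


def parse_safemode(output):
    """Map each NameNode (as printed, e.g. "data1/10.188.37.36:8020") to True if still in safe mode."""
    return {m.group(2): m.group(1) == "ON" for m in SAFEMODE_LINE.finditer(output)}


def all_left_safemode(output):
    """True only if at least one NameNode was reported and none is still ON."""
    states = parse_safemode(output)
    return bool(states) and not any(states.values())
```

An "Access denied" message, like the first attempt above, contains no state lines, so all_left_safemode returns False for it instead of a false success.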

10. Bad : The Hive Metastore canary failed to drop the table it created.

2018-09-11 17:53:27,908 WARN org.apache.hadoop.hive.metastore.ObjectStore: [pool-8-thread-2]: Failed to get database cloudera_manager_metastore_canary_test_db_hive_hivemetastore_4f54e642ae7c4edef5f4c84fbea4a7a2, returning NoSuchObjectException

MetaException(message:You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'OPTION SQL_SELECT_LIMIT=DEFAULT' at line 1)

at org.apache.hadoop.hive.metastore.ObjectStore$GetHelper.run(ObjectStore.java:2640)

at org.apache.hadoop.hive.metastore.ObjectStore.getDatabaseInternal(ObjectStore.java:635)

at org.apache.hadoop.hive.metastore.ObjectStore.getDatabase(ObjectStore.java:619)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:103)

at com.sun.proxy.$Proxy7.getDatabase(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.drop_database_core(HiveMetaStore.java:1083)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.drop_database(HiveMetaStore.java:1229)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:140)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:99)

at com.sun.proxy.$Proxy16.drop_database(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$drop_database.getResult(ThriftHiveMetastore.java:9138)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$drop_database.getResult(ThriftHiveMetastore.java:9122)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2018-09-11 17:53:27,909 ERROR org.apache.hadoop.hive.metastore.RetryingHMSHandler: [pool-8-thread-2]: NoSuchObjectException(message:cloudera_manager_metastore_canary_test_db_hive_hivemetastore_4f54e642ae7c4edef5f4c84fbea4a7a2: You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'OPTION SQL_SELECT_LIMIT=DEFAULT' at line 1)

at org.apache.hadoop.hive.metastore.ObjectStore.getDatabase(ObjectStore.java:629)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:103)

at com.sun.proxy.$Proxy7.getDatabase(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.drop_database_core(HiveMetaStore.java:1083)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.drop_database(HiveMetaStore.java:1229)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:140)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:99)

at com.sun.proxy.$Proxy16.drop_database(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$drop_database.getResult(ThriftHiveMetastore.java:9138)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$drop_database.getResult(ThriftHiveMetastore.java:9122)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Key information:

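You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'OPTION SQL_SELECT_LIMIT=DEFAULT' at line 1

The MySQL JDBC driver jar has to match the MySQL server version. Older Connector/J releases send the 'SET OPTION' syntax, which MySQL 5.6 and later no longer accept. First check the MySQL server version: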

data1:/root# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1823

Server version: 5.7.19 MySQL Community Server (GPL)

Copyright (c) 2000, 2017, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

Then check the version of the MySQL JDBC jar:

data1:/root# ll /usr/share/java/

total 13604

drwxr-xr-x. 2 root root 4096 Oct 19 2016 gcj-endorsed

-rw-r--r--. 1 root root 10143547 Oct 19 2016 libgcj-4.4.4.jar

lrwxrwxrwx. 1 root root 16 Jun 15 19:01 libgcj-4.4.7.jar -> libgcj-4.4.4.jar

lrwxrwxrwx 1 root root 31 Sep 11 19:00 mysql-connector-java.jar -> mysql-connector-java-5.1.17.jar

data1:/root#

Upgrade the MySQL JDBC jar to mysql-connector-java-5.1.38.jar and repoint the symlink:

mysql-connector-java.jar -> mysql-connector-java-5.1.38.jar

Restart Hive. This resolves the problem.
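The fix comes down to reading which driver the generic mysql-connector-java.jar symlink points to and repointing it. Here is a hedged Python sketch of both steps. The original only states that 5.1.38 worked with MySQL 5.7.19 and gives no minimum version, so any threshold you compare against (for example (5, 1, 38)) is an assumption. The helper names are hypothetical.

```python
import os
import re

JAR_VERSION = re.compile(r"mysql-connector-java-(\d+(?:\.\d+)*)\.jar$")


def connector_version(jar_name):
    """Extract (5, 1, 17) from 'mysql-connector-java-5.1.17.jar'; None if the name doesn't match."""
    m = JAR_VERSION.search(os.path.basename(jar_name))
    return tuple(int(p) for p in m.group(1).split(".")) if m else None


def linked_connector_version(link="/usr/share/java/mysql-connector-java.jar"):
    """Version of the jar the generic symlink currently points to."""
    return connector_version(os.readlink(link))


def repoint_link(link, new_target):
    """Point `link` at `new_target` by renaming a fresh symlink over the old one.

    The end result matches `ln -sfn new_target link`, but the link never
    disappears in between because os.replace is an atomic rename.
    """
    tmp = link + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)
    os.symlink(new_target, tmp)
    os.replace(tmp, link)
```

For example, linked_connector_version() returned (5, 1, 17) on data1 before the fix. After running repoint_link("/usr/share/java/mysql-connector-java.jar", "mysql-connector-java-5.1.38.jar") it would return (5, 1, 38). Hive still needs a restart afterwards.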

11. Spark2 : Deploy Client Configuration failed : Completed only 6/8 steps. First failure: Client configuration (id=17) on host data17 (id=7) exited with 1 and expected 0.

Check the installed JDK versions:

data1:/root# rpm -aq| grep jdk

jdk-1.7.0_80-fcs.x86_64

jdk1.8-1.8.0_181-fcs.x86_64

data1:/root# rpm -e --nodeps jdk-1.7.0_80-fcs.x86_64

With the old JDK 1.7 removed and only JDK 1.8 left, deploy the client configuration again. This resolves the problem.
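On a larger cluster, leftover JDKs can be spotted from rpm -qa output before deploying. Here is a minimal Python sketch. It assumes Oracle JDK rpm names that contain a 1.x.y_update version, as in the listing above, and the function names are hypothetical. It only reports candidates. Removal is left manual, since rpm -e --nodeps skips dependency checks.

```python
import re

JDK_VERSION = re.compile(r"(\d+)\.(\d+)\.(\d+)_(\d+)")


def jdk_version(pkg):
    """Parse e.g. (1, 7, 0, 80) out of an Oracle JDK rpm name such as jdk-1.7.0_80-fcs.x86_64."""
    m = JDK_VERSION.search(pkg)
    return tuple(int(g) for g in m.groups()) if m else None


def older_jdks(rpm_qa_output):
    """Given `rpm -qa | grep jdk` output, return every JDK package except the newest one."""
    pkgs = [p.strip() for p in rpm_qa_output.splitlines() if p.strip()]
    versioned = [(jdk_version(p), p) for p in pkgs if jdk_version(p)]
    if len(versioned) < 2:
        return []  # zero or one JDK: nothing to clean up
    newest = max(versioned)[1]
    return sorted(p for _, p in versioned if p != newest)
```

Given the two packages listed above, older_jdks reports only jdk-1.7.0_80-fcs.x86_64, which is the package removed in this fix.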
