Main data structures

    struct fib_table {
        struct hlist_node tb_hlist;
        u32 tb_id;
        int tb_default;
        int (*tb_lookup)(struct fib_table *tb, const struct flowi *flp, struct fib_result *res);
        int (*tb_insert)(struct fib_table *, struct fib_config *);
        int (*tb_delete)(struct fib_table *, struct fib_config *);
        int (*tb_dump)(struct fib_table *table, struct sk_buff *skb,
                       struct netlink_callback *cb);
        int (*tb_flush)(struct fib_table *table);
        void (*tb_select_default)(struct fib_table *table,
                                  const struct flowi *flp, struct fib_result *res);

        unsigned char tb_data[0];
    };

One fib_table structure is created for each routing table instance. It consists mainly of a routing table identifier and a set of function pointers used to manage that table.

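tb_id: the routing table identifier. The predefined values are listed in the rt_class_t enumeration in include/linux/rtnetlink.h, for example RT_TABLE_LOCAL.

tb_lookup: called by the fib_lookup function.

tb_insert: called by inet_rtm_newroute and ip_rt_ioctl to handle the user-space commands ip route add/change/replace/prepend/append/test and route add. Similarly, tb_delete is called by inet_rtm_delroute and ip_rt_ioctl to remove a route from the table. Both are also called by fib_magic.

tb_dump: dumps the contents of the routing table.

tb_flush: removes the fib_info structures that have the RTNH_F_DEAD flag set.

tb_select_default: selects a default route.

tb_data: marks the end of the structure. This is useful when the structure is allocated as the leading part of a larger block, because code can then reach the private data that immediately follows the structure through this field.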
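The trailing-array idiom behind tb_data can be sketched in user space as follows. This is only an illustration: `demo_table`, `demo_private`, and the helper functions are hypothetical names, and the C99 flexible array member stands in for the kernel's `tb_data[0]`.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical user-space stand-in for struct fib_table. */
struct demo_table {
    unsigned int  id;
    unsigned char data[];   /* flexible array member, plays the role of tb_data[0] */
};

/* Hypothetical private state stored directly behind the header. */
struct demo_private {
    int zones;
};

/* Allocate the header and its private area as one zeroed block. */
static struct demo_table *demo_table_alloc(unsigned int id)
{
    struct demo_table *tb = calloc(1, sizeof(*tb) + sizeof(struct demo_private));

    if (tb)
        tb->id = id;
    return tb;
}

/* Recover the private area from the table pointer. */
static struct demo_private *demo_table_priv(struct demo_table *tb)
{
    assert(tb != NULL);
    return (struct demo_private *)tb->data;
}
```

A single allocation keeps the generic header and the implementation-specific data together, so one `free` releases both.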

    struct fn_zone {
        struct fn_zone *fz_next;    /* Next not empty zone */
        struct hlist_head *fz_hash; /* Hash table pointer */
        int fz_nent;                /* Number of entries */

        int fz_divisor;             /* Hash divisor */
        u32 fz_hashmask;            /* (fz_divisor - 1) */
    #define FZ_HASHMASK(fz) ((fz)->fz_hashmask)

        int fz_order;               /* Zone order */
        __be32 fz_mask;
    #define FZ_MASK(fz) ((fz)->fz_mask)
    };

A zone is the set of routes that share the same netmask length. The routes in a routing table are organized by zone.

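fz_next: links the active zones (those with at least one route) together. The head of this list is stored in fn_zone_list, a field of the fn_hash data structure.

fz_hash: points to the hash table that stores the routes of this zone.

fz_nent: the number of routes in the zone (that is, the number of fib_node instances in the zone's hash table). This value can be used to check whether the hash table needs to be resized.

fz_divisor: the size of the hash table fz_hash.

fz_hashmask: this is fz_divisor - 1. Keeping it in a field lets the code reduce a value modulo fz_divisor with a cheap bitwise AND instead of an expensive modulo operation, which requires fz_divisor to be a power of two.

fz_order: the number of (contiguous) bits set in the netmask fz_mask. The code also refers to it as prefixlen.

fz_mask: the netmask built from fz_order.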
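Two of the fields above are derived values, and the arithmetic behind them can be sketched as below. The function names are hypothetical, and the masks are kept in host byte order for readability, whereas the kernel stores fz_mask as a `__be32`.

```c
#include <assert.h>
#include <stdint.h>

/* Bucket index via modulo; works for any non-zero divisor. */
static uint32_t demo_bucket_mod(uint32_t hash, uint32_t divisor)
{
    assert(divisor != 0);
    return hash % divisor;
}

/* Bucket index via AND with (divisor - 1); valid only for powers of two. */
static uint32_t demo_bucket_mask(uint32_t hash, uint32_t divisor)
{
    assert(divisor != 0 && (divisor & (divisor - 1)) == 0);
    return hash & (divisor - 1);
}

/* Netmask with `order` leading one bits (0..32), host byte order. */
static uint32_t demo_mask_from_order(unsigned int order)
{
    assert(order <= 32);
    /* Shifting a 32-bit value by 32 is undefined in C, so 0 is handled apart. */
    return order ? (uint32_t)(~UINT32_C(0) << (32 - order)) : 0;
}

/* Network prefix of an address for a given prefix length. */
static uint32_t demo_prefix_key(uint32_t addr, unsigned int order)
{
    return addr & demo_mask_from_order(order);
}
```

For a power-of-two divisor both bucket functions return the same index, which is why the mask can replace the modulo.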

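    struct fib_node {
        struct hlist_node fn_hash;
        struct list_head fn_alias;
        __be32 fn_key;
        struct fib_alias fn_embedded_alias;
    };

Each unique destination network among the kernel's routes is represented by one fib_node instance. Routes to the same destination network that differ in other configuration parameters share a single fib_node instance.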

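fn_hash: fib_node elements are organized in a hash table. This field links together all the elements that collide in the same bucket.

fn_alias: each fib_node is associated with a list of one or more fib_alias structures. fn_alias is the head of that list.

fn_key: the network prefix of the route. This field is the search key for routing table lookups.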
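How zones, masks, and keys fit together in a lookup can be sketched roughly as follows. The sketch assumes the zones are visited from the longest prefix to the shortest, and it replaces each zone's hash table with a plain array; `demo_zone` and `demo_lookup` are hypothetical names, not kernel functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified zone: all routes share one prefix length. */
struct demo_zone {
    unsigned int    order;  /* prefix length, like fz_order */
    uint32_t        mask;   /* host-order netmask, like fz_mask */
    const uint32_t *keys;   /* network prefixes, like fn_key values */
    size_t          nkeys;
};

/*
 * Walk the zones in the order given and return the prefix length of the
 * first zone that holds (dst & mask), or -1 when no zone matches.
 * The caller passes the zones sorted by decreasing prefix length, so the
 * first hit is the longest matching prefix.
 */
static int demo_lookup(const struct demo_zone *zones, size_t nzones, uint32_t dst)
{
    assert(zones != NULL || nzones == 0);

    for (size_t z = 0; z < nzones; z++) {
        uint32_t key = dst & zones[z].mask;

        for (size_t i = 0; i < zones[z].nkeys; i++)
            if (zones[z].keys[i] == key)
                return (int)zones[z].order;
    }
    return -1;
}
```

A zone of order 0 with the single key 0 acts as the default route, since every address masked with 0 yields 0.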

    struct fib_alias {
        struct list_head fa_list;
        struct fib_info *fa_info;
        u8 fa_tos;
        u8 fa_type;
        u8 fa_scope;
        u8 fa_state;
    #ifdef CONFIG_IP_FIB_TRIE
        struct rcu_head rcu;
    #endif
    };

fib_alias instances distinguish routes that share the same destination network but differ in other configuration parameters.

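fa_list: links all the fib_alias instances associated with the same fib_node structure.

fa_info: points to a fib_info instance, which stores the information on how to process packets that match the route.

fa_tos: the Type of Service field of the route. A value of zero means that no TOS has been configured, so any value matches during a routing lookup. fa_tos lets the user specify a TOS condition on an individual route, whereas the r_tos field of the fib_rule structure is the TOS condition the user specifies on a policy rule.

fa_state: a bitmap of flags. FA_S_ACCESSED is set whenever a lookup accesses the fib_alias instance. The flag is useful when a fib_node changes: it is used to decide whether the routing cache should be flushed. If the fib_node has been accessed, cache entries may have been derived from it, so a change to the route requires those entries to be cleared, which triggers a flush.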

    struct fib_info {
        struct hlist_node fib_hash;
        struct hlist_node fib_lhash;
        struct net *fib_net;
        int fib_treeref;
        atomic_t fib_clntref;
        int fib_dead;
        unsigned fib_flags;
        int fib_protocol;
        __be32 fib_prefsrc;
        u32 fib_priority;
        u32 fib_metrics[RTAX_MAX];
    #define fib_mtu fib_metrics[RTAX_MTU-1]
    #define fib_window fib_metrics[RTAX_WINDOW-1]
    #define fib_rtt fib_metrics[RTAX_RTT-1]
    #define fib_advmss fib_metrics[RTAX_ADVMSS-1]
        int fib_nhs;
    #ifdef CONFIG_IP_ROUTE_MULTIPATH
        int fib_power;
    #endif
        struct fib_nh fib_nh[0];
    #define fib_dev fib_nh[0].nh_dev
    };

fib_hash, fib_lhash: used to insert the fib_info structure into hash tables.

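fib_treeref, fib_clntref: reference counts. fib_treeref is the number of fib_node structures holding a reference to this fib_info instance. fib_clntref is the number of references held as a result of successful routing lookups.

fib_dead: flag indicating that the route is being removed. When it is set to 1, it warns that the structure is about to be deleted and must no longer be used.

fib_flags: RTNH_F_DEAD. This flag is set when all the fib_nh structures associated with a multipath route have RTNH_F_DEAD set.

fib_protocol: the protocol that installed the route.

fib_prefsrc: the preferred source IP address.

fib_priority: the priority of the route. The smaller the value, the higher the priority. It can be configured with the metric/priority/preference keywords of the IPROUTE2 package. When it is not set explicitly, the kernel initializes it to the default value 0.

fib_metrics[RTAX_MAX]: when a route is configured, the ip route command allows a set of metrics to be specified. fib_metrics is the vector that stores them. Metrics that are not set explicitly are initialized to 0.

fib_power: this field is part of the fib_info structure only when the kernel is compiled with multipath support.

fib_nhs: the number of next hops. It can be greater than 1 only when the kernel supports multipath.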

    struct fib_nh {
        struct net_device *nh_dev;
        struct hlist_node nh_hash;
        struct fib_info *nh_parent;
        unsigned nh_flags;
        unsigned char nh_scope;
    #ifdef CONFIG_IP_ROUTE_MULTIPATH
        int nh_weight;
        int nh_power;
    #endif
    #ifdef CONFIG_NET_CLS_ROUTE
        __u32 nh_tclassid;
    #endif
        int nh_oif;
        __be32 nh_gw;
    };

For each next hop the kernel needs to keep track of more information than just an IP address.

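nh_dev: the net_device structure associated with the device identified by nh_oif. Both the device identifier and the pointer to the net_device are needed (in different contexts), so both are stored in fib_nh even though either one can be derived from the other.

nh_hash: used to insert the fib_nh structure into a hash table.

nh_parent: points to the fib_info structure that contains this fib_nh instance.

nh_flags: a set of RTNH_F_XXX flags.

nh_scope: the scope of the route used to reach the next hop. In most cases it is RT_SCOPE_LINK. The field is initialized by fib_check_nh.

nh_power, nh_weight: nh_power is initialized by the kernel. nh_weight is set by the user with the weight keyword.

nh_tclassid: its value is set with the realms keyword.

nh_oif: the egress device identifier. It is set with the oif and dev keywords.

nh_gw: the IP address of the next-hop gateway, provided by the via keyword.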

    struct fib_rule
    {
        struct list_head list;
        atomic_t refcnt;
        int ifindex;
        char ifname[IFNAMSIZ];
        u32 mark;
        u32 mark_mask;
        u32 pref;
        u32 flags;
        u32 table;
        u8 action;
        u32 target;
        struct fib_rule * ctarget;
        struct rcu_head rcu;
        struct net * fr_net;
    };

Policy routing rules are configured with the ip rule command.

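list: links the fib_rule structures into a global list that contains all fib_rule instances.

refcnt: reference count. fib_lookup increments it, which explains why fib_res_put must always be called after each successful routing lookup.

pref: the priority of the routing rule.

table: the routing table identifier, in the range 0 to 255. When the user does not specify one, IPROUTE2 applies the following defaults: RT_TABLE_MAIN when the command adds a rule, RT_TABLE_UNSPEC otherwise.

r_ifindex, r_ifname (ifindex and ifname in the structure shown above): r_ifname is the name of the device the policy applies to.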

    struct fib_result {
        unsigned char prefixlen;
        unsigned char nh_sel;
        unsigned char type;
        unsigned char scope;
        struct fib_info *fi;
    #ifdef CONFIG_IP_MULTIPLE_TABLES
        struct fib_rule *r;
    #endif
    };

prefixlen: the prefix length of the matching route.

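nh_sel: multipath routes are defined with multiple next hops. This field identifies the next hop that was selected.

type, scope: these two fields are initialized to the fa_type and fa_scope values of the matching fib_alias instance.

fi: the fib_info instance associated with the matching fib_alias instance.

r: this field is initialized by fib_lookup.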

    struct rtable
    {
        union
        {
            struct dst_entry dst;
        } u;

        /* Cache lookup keys */
        struct flowi fl;

        struct in_device *idev;

        int rt_genid;
        unsigned rt_flags;
        __u16 rt_type;

        __be32 rt_dst; /* Path destination */
        __be32 rt_src; /* Path source */
        int rt_iif;

        /* Info on neighbour */
        __be32 rt_gateway;

        /* Miscellaneous cached information */
        __be32 rt_spec_dst; /* RFC1122 specific destination */
        struct inet_peer *peer; /* long-living peer info */
    };

The rtable data structure stores the entries of the routing cache. To dump the contents of the cache, read /proc/net/rt_cache or issue the command ip route list cache.

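u: embeds a dst_entry structure into rtable. Its rt_next field links the rtable instances that fall into the same hash bucket.

idev: points to the IP configuration block of the egress device. Note that for routes to ingress packets delivered locally, the egress device is set to the loopback device.

rt_flags: flag values.

rt_type: indirectly defines the action to take when a routing lookup matches.

rt_dst, rt_src: the destination and source addresses.

rt_iif: the ingress device identifier. Its value is taken from the net_device structure of the ingress device. For locally generated traffic, it is set to the ifindex of the egress device.

rt_gateway: when the destination host is directly connected, rt_gateway equals the destination address. When the destination is reached through a gateway, rt_gateway is set to the next-hop gateway identified by the route.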

    struct dst_entry
    {
        struct rcu_head rcu_head;
        struct dst_entry *child;
        struct net_device *dev;
        short error;
        short
        int flags;
    #define DST_HOST 1      // used by TCP
    #define DST_NOXFRM 2    // IPsec
    #define DST_NOPOLICY 4
    #define DST_NOHASH 8
        unsigned long expires;  // expiry timestamp

        unsigned short header_len;  /* more space at head required */
        unsigned short trailer_len; /* space to reserve at tail */

        unsigned int rate_tokens;
        unsigned long rate_last;    /* rate limiting for ICMP */

        struct dst_entry *path;

        struct neighbour *neighbour;
        struct hh_cache *hh;
    #ifdef CONFIG_XFRM
        struct xfrm_state *xfrm;
    #else
        void *__pad1;
    #endif
        int (*input)(struct sk_buff*);  // handlers for ingress and egress packets, respectively
        int (*output)(struct sk_buff*);

        struct dst_ops *ops;    // VFT used to manipulate dst_entry structures

        u32 metrics[RTAX_MAX];  // metrics vector

    #ifdef CONFIG_NET_CLS_ROUTE
        __u32 tclassid;         // tag for the routing-table-based classifier
    #else
        __u32 __pad2;
    #endif


        /*
         * Align __refcnt to a 64 bytes alignment
         * (L1_CACHE_SIZE would be too much)
         */
    #ifdef CONFIG_64BIT
        long __pad_to_align_refcnt[2];
    #else
        long __pad_to_align_refcnt[1];
    #endif
        /*
         * __refcnt wants to be on a different cache line from
         * input/output/ops or performance tanks badly
         */
        atomic_t __refcnt;  /* client references */
        int __use;
        unsigned long lastuse;
        union {
            struct dst_entry *next;
            struct rtable *rt_next;
            struct rt6_info *rt6_next;
            struct dn_route *dn_next;
        };
    };

dst_entry stores the protocol-independent information of a cached route. L3 protocols keep their additional private information in separate structures.

    struct dst_ops {
        unsigned short family;  // address family
        __be16 protocol;        // protocol identifier
        unsigned gc_thresh;     // threshold used by the garbage collection algorithm

        int (*gc)(struct dst_ops *ops);
        struct dst_entry * (*check)(struct dst_entry *, __u32 cookie);
        void (*destroy)(struct dst_entry *);
        void (*ifdown)(struct dst_entry *,
                       struct net_device *dev, int how);
        struct dst_entry * (*negative_advice)(struct dst_entry *);
        void (*link_failure)(struct sk_buff *);
        void (*update_pmtu)(struct dst_entry *dst, u32 mtu);
        int (*local_out)(struct sk_buff *skb);

        atomic_t entries;
        struct kmem_cache *kmem_cachep; // memory pool for allocating routing cache elements
    };

    struct flowi {
        int oif;    // egress and ingress device identifiers
        int iif;
        __u32 mark;

        union {
            struct {
                __be32 daddr;
                __be32 saddr;
                __u8 tos;
                __u8 scope;
            } ip4_u;

            struct {
                struct in6_addr daddr;
                struct in6_addr saddr;
                __be32 flowlabel;
            } ip6_u;

            struct {
                __le16 daddr;
                __le16 saddr;
                __u8 scope;
            } dn_u;
        } nl_u;     // the members of this union hold the L3 parameter values
    #define fld_dst nl_u.dn_u.daddr
    #define fld_src nl_u.dn_u.saddr
    #define fld_scope nl_u.dn_u.scope
    #define fl6_dst nl_u.ip6_u.daddr
    #define fl6_src nl_u.ip6_u.saddr
    #define fl6_flowlabel nl_u.ip6_u.flowlabel
    #define fl4_dst nl_u.ip4_u.daddr
    #define fl4_src nl_u.ip4_u.saddr
    #define fl4_tos nl_u.ip4_u.tos
    #define fl4_scope nl_u.ip4_u.scope

        __u8 proto; // L4 protocol
        __u8 flags;
    #define FLOWI_FLAG_ANYSRC 0x01
        union {
            struct {
                __be16 sport;
                __be16 dport;
            } ports;

            struct {
                __u8 type;
                __u8 code;
            } icmpt;

            struct {
                __le16 sport;
                __le16 dport;
            } dnports;

            __be32 spi;

            struct {
                __u8 type;
            } mht;
        } uli_u;    // the members of this union hold the L4 parameter values
    #define fl_ip_sport uli_u.ports.sport
    #define fl_ip_dport uli_u.ports.dport
    #define fl_icmp_type uli_u.icmpt.type
    #define fl_icmp_code uli_u.icmpt.code
    #define fl_ipsec_spi uli_u.spi
    #define fl_mh_type uli_u.mht.type
        __u32 secid;    /* used by xfrm; see secid.txt */
    } __attribute__((__aligned__(BITS_PER_LONG/8)));

The flowi data structure classifies traffic by a combination of fields such as the ingress and egress devices and parameters from the L3 and L4 protocol headers. It is typically used as the search key for routing lookups, as the traffic selector for IPsec policies, and for other advanced purposes.

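Kernel options

- Options that are always supported and only need to be configured or enabled by the user, for example through /proc.

- Options that can be added or removed when the kernel is rebuilt. The CONFIG_WAN_ROUTER option and the options under the CONFIG_IP_ROUTE_MULTIPATH_CACHED menu can be compiled into the main kernel.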

Route scope and address scope

    /* rtm_scope

       Really it is not scope, .
       NOWHERE are reserved for not existing destinations,

       Intermediate values are also possible f.e. interior routes
       could be assigned a value between UNIVERSE and LINK.
    */

    enum rt_scope_t
    {
        RT_SCOPE_UNIVERSE=0,
    /* User defined values */
        RT_SCOPE_SITE=200,
        RT_SCOPE_LINK=253,
        RT_SCOPE_HOST=254,
        RT_SCOPE_NOWHERE=255
    };

The scope of a route is stored in the fa_scope field of the fib_alias data structure.

The nh_scope of a next hop is derived from the scope of the configured route: in general, given a route and its next hop, nh_scope is set to the scope of the route that leads to that next hop. Some special cases follow different rules.

Primary and secondary IP addresses

    #define for_primary_ifa(in_dev) { struct in_ifaddr *ifa; \
      for (ifa = (in_dev)->ifa_list; ifa && !(ifa->ifa_flags&IFA_F_SECONDARY); ifa = ifa->ifa_next)

    #define for_ifa(in_dev) { struct in_ifaddr *ifa; \
      for (ifa = (in_dev)->ifa_list; ifa; ifa = ifa->ifa_next)


    #define endfor_ifa(in_dev) }

Routing subsystem initialization

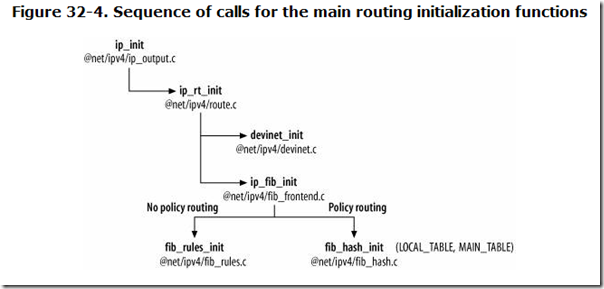

    void __init ip_init(void)
    {
        ip_rt_init();
        inet_initpeers();

    #if defined(CONFIG_IP_MULTICAST) && defined(CONFIG_PROC_FS)
        igmp_mc_proc_init();
    #endif
    }

    int __init ip_rt_init(void)
    {
        int rc = 0;

    #ifdef CONFIG_NET_CLS_ROUTE
        ip_rt_acct = __alloc_percpu(256 * sizeof(struct ip_rt_acct), __alignof__(struct ip_rt_acct));
        if (!ip_rt_acct)
            panic("IP: failed to allocate ip_rt_acct\n");
    #endif

        ipv4_dst_ops.kmem_cachep =
            kmem_cache_create("ip_dst_cache", sizeof(struct rtable), 0,
                              SLAB_HWCACHE_ALIGN|SLAB_PANIC, NULL);

        ipv4_dst_blackhole_ops.kmem_cachep = ipv4_dst_ops.kmem_cachep;

        rt_hash_table = (struct rt_hash_bucket *)
            alloc_large_system_hash("IP route cache",
                        sizeof(struct rt_hash_bucket),
                        rhash_entries,
                        (totalram_pages >= 128 * 1024) ?
                        15 : 17,
                        0,
                        &rt_hash_log,
                        &rt_hash_mask,
                        rhash_entries ? 0 : 512 * 1024);
        memset(rt_hash_table, 0, (rt_hash_mask + 1) * sizeof(struct rt_hash_bucket));
        rt_hash_lock_init();

        ipv4_dst_ops.gc_thresh = (rt_hash_mask + 1);
        ip_rt_max_size = (rt_hash_mask + 1) * 16;

        devinet_init();
        ip_fib_init();

        /* All the timers, started at system startup tend
           to synchronize. Perturb it a bit.
         */
        INIT_DELAYED_WORK_DEFERRABLE(&expires_work, rt_worker_func);
        expires_ljiffies = jiffies;
        schedule_delayed_work(&expires_work,
            net_random() % ip_rt_gc_interval + ip_rt_gc_interval);

        if (register_pernet_subsys(&rt_secret_timer_ops))
            printk(KERN_ERR "Unable to setup rt_secret_timer\n");

        if (ip_rt_proc_init())
            printk(KERN_ERR "Unable to create route proc files\n");
    #ifdef CONFIG_XFRM  /* IPsec initialization */
        xfrm_init();
        xfrm4_init(ip_rt_max_size);
    #endif
        rtnl_register(PF_INET, RTM_GETROUTE, inet_rtm_getroute, NULL);

    #ifdef CONFIG_SYSCTL
        register_pernet_subsys(&sysctl_route_ops);
    #endif
        return rc;
    }

    void __init devinet_init(void)
    {
        register_pernet_subsys(&devinet_ops);

        register_gifconf(PF_INET, inet_gifconf);
        register_netdevice_notifier(&ip_netdev_notifier);

        rtnl_register(PF_INET, RTM_NEWADDR, inet_rtm_newaddr, NULL);
        rtnl_register(PF_INET, RTM_DELADDR, inet_rtm_deladdr, NULL);
        rtnl_register(PF_INET, RTM_GETADDR, NULL, inet_dump_ifaddr);
    }

    /* Initializes the default routing tables and registers handlers
       with two notification chains. */
    void __init ip_fib_init(void)
    {
        rtnl_register(PF_INET, RTM_NEWROUTE, inet_rtm_newroute, NULL);
        rtnl_register(PF_INET, RTM_DELROUTE, inet_rtm_delroute, NULL);
        rtnl_register(PF_INET, RTM_GETROUTE, NULL, inet_dump_fib);

        register_pernet_subsys(&fib_net_ops);
        register_netdevice_notifier(&fib_netdev_notifier);
        register_inetaddr_notifier(&fib_inetaddr_notifier);

        fib_hash_init();
    }

    void __init fib_hash_init(void)
    {
        fn_alias_kmem = kmem_cache_create("ip_fib_alias",
                                          sizeof(struct fib_alias),
                                          0, SLAB_PANIC, NULL);

        trie_leaf_kmem = kmem_cache_create("ip_fib_trie",
                                           max(sizeof(struct leaf),
                                               sizeof(struct leaf_info)),
                                           0, SLAB_PANIC, NULL);
    }

    int __net_init fib4_rules_init(struct net *net)
    {
        int err;
        struct fib_rules_ops *ops;

        ops = kmemdup(&fib4_rules_ops_template, sizeof(*ops), GFP_KERNEL);
        if (ops == NULL)
            return -ENOMEM;
        INIT_LIST_HEAD(&ops->rules_list);
        ops->fro_net = net;

        fib_rules_register(ops);

        err = fib_default_rules_init(ops);
        if (err < 0)
            goto fail;
        net->ipv4.rules_ops = ops;
        return 0;

    fail:
        /* also cleans all rules already added */
        fib_rules_unregister(ops);
        kfree(ops);
        return err;
    }

External events

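The routing subsystem sits at the center of the network stack, so it needs to know when changes in the network affect the routing tables and the routing cache. Changes in network topology are handled by optional routing protocols running in user space. Changes to the local host's configuration, on the other hand, must be made known to the kernel.

The routing subsystem is particularly interested in two kinds of events:

- Changes in the state of a network device

- Changes in the IP configuration of a network device

To be notified of both, the routing subsystem registers with the netdev_chain and inetaddr_chain notification chains, respectively.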

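IP configuration changes

Whenever the IP configuration of a device changes, the routing subsystem receives a notification and runs fib_inetaddr_event to handle it.

- NETDEV_UP: a new IP address has been configured on a local device. The handler must add the necessary routes to the local_table routing table. The function that does this is fib_add_ifaddr.

- NETDEV_DOWN: an IP address has been removed from a local device. The handler must remove the routes for that address that were added by the earlier NETDEV_UP event. The function that does this is fib_del_ifaddr.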

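Device state changes

The routing subsystem registers three different handlers with the netdev_chain notification chain to handle changes in device state:

- fib_netdev_event updates the routing tables

- fib_rules_event updates the policy database when policy routing is in use

- ip_netdev_event updates the IP configuration of the device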

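Routing cache

The routing cache reduces the time spent on routing table lookups. Its core is the protocol-independent destination cache (DST). Although policy routing effectively creates multiple routing tables, all of them share a single routing cache. The cache's main job is to store the information that lets the routing subsystem find a packet's destination, and to offer that information to higher-layer protocols through a set of functions. It also provides functions for cleaning the cache. The information it stores applies to the cached routes of every L3 protocol, so any data structure that represents a cached route contains it.

Routing cache initialization

The capacity of the cache depends on the amount of physical memory available on the host.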

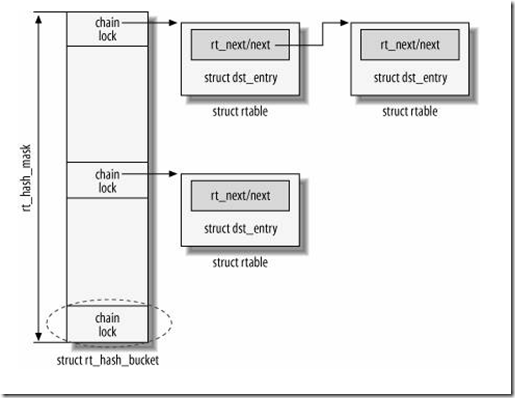

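rt_hash_table: the routing cache, implemented as a hash table.

rt_hash_mask, rt_hash_log: describe the size of rt_hash_table. The table has rt_hash_mask + 1 buckets, and rt_hash_log is the base-2 logarithm of that size. The latter is handy whenever a value has to be shifted by that number of bits.

rt_hash_rnd: a new random value is generated and stored here each time the routing cache is flushed with rt_run_flush. It guards against DoS attacks: it is an input to the algorithm that distributes elements in the cache, which makes the distribution non-deterministic.

Hash table organization

(The actual layout differs slightly from the figure above.)

When an entry is inserted, deleted, or looked up, the routing subsystem selects a bucket from a combination of the source IP address, the destination IP address, the TOS field, and the ingress or egress device. The ingress device ID is used when routing ingress traffic, and the egress device ID is used for locally generated egress traffic. In the lookup code shown in this article, the hash itself is computed from the two addresses, the device index, and the generation ID, while TOS is compared when walking the chain.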
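Bucket selection of this kind can be sketched as below. The mixing function is a generic stand-in chosen for the example and is not the kernel's hash, and `demo_mix` and `demo_bucket` are hypothetical names. The point is only that a per-flush seed enters the hash and that the final index is masked with the table mask.

```c
#include <assert.h>
#include <stdint.h>

/* Stand-in 32-bit mixer (multiply and xor-shift); not the kernel's hash. */
static uint32_t demo_mix(uint32_t x)
{
    x ^= x >> 16;
    x *= UINT32_C(0x45d9f3b);
    x ^= x >> 16;
    return x;
}

/*
 * Pick a bucket from the addresses, the device index, and a seed.
 * hash_mask must be (number of buckets - 1) with a power-of-two bucket count.
 */
static uint32_t demo_bucket(uint32_t daddr, uint32_t saddr, uint32_t ifindex,
                            uint32_t seed, uint32_t hash_mask)
{
    uint32_t h = seed;

    assert((hash_mask & (hash_mask + 1)) == 0);
    h = demo_mix(h ^ daddr);
    h = demo_mix(h ^ saddr);
    h = demo_mix(h ^ ifindex);
    return h & hash_mask;
}
```

Because the seed is mixed in first, an attacker who does not know it cannot easily pick addresses that all land in one bucket.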

Allocating cache entries

    void * dst_alloc(struct dst_ops * ops)
    {
        struct dst_entry * dst;

        if (ops->gc && atomic_read(&ops->entries) > ops->gc_thresh) {
            if (ops->gc(ops))
                return NULL;
        }
        dst = kmem_cache_zalloc(ops->kmem_cachep, GFP_ATOMIC);
        if (!dst)
            return NULL;
        atomic_set(&dst->__refcnt, 0);
        dst->ops = ops;
        dst->lastuse = jiffies;
        dst->path = dst;
        dst->input = dst->output = dst_discard;
    #if RT_CACHE_DEBUG >= 2
        atomic_inc(&dst_total);
    #endif
        atomic_inc(&ops->entries);
        return dst;
    }

Adding elements to the cache

    static int rt_intern_hash(unsigned hash, struct rtable *rt,
                              struct rtable **rp, struct sk_buff *skb)
    {
        struct rtable *rth, **rthp;
        unsigned long now;
        struct rtable *cand, **candp;
        u32 min_score;
        int chain_length;
        int attempts = !in_softirq();

    restart:
        chain_length = 0;
        min_score = ~(u32)0;
        cand = NULL;
        candp = NULL;
        now = jiffies;

        if (!rt_caching(dev_net(rt->u.dst.dev))) {
            /*
             * If we're not caching, just tell the caller we
             * were successful and don't touch the route. The
             * caller hold the sole reference to the cache entry, and
             * it will be released when the caller is done with it.
             * If we drop it here, the callers have no way to resolve routes
             * when we're not caching. Instead, just point *rp at rt, so
             * the caller gets a single use out of the route
             * Note that we do rt_free on this new route entry, so that
             * once its refcount hits zero, we are still able to reap it
             * (Thanks Alexey)
             * Note also the rt_free uses call_rcu. We don't actually
             * need rcu protection here, this is just our path to get
             * on the route gc list.
             */

            if (rt->rt_type == RTN_UNICAST || rt->fl.iif == 0) {
                int err = arp_bind_neighbour(&rt->u.dst);
                if (err) {
                    if (net_ratelimit())
                        printk(KERN_WARNING
                            "Neighbour table failure & not caching routes.\n");
                    rt_drop(rt);
                    return err;
                }
            }

            rt_free(rt);
            goto skip_hashing;
        }

        rthp = &rt_hash_table[hash].chain;

        spin_lock_bh(rt_hash_lock_addr(hash));
        while ((rth = *rthp) != NULL) {
            if (rt_is_expired(rth)) {
                *rthp = rth->u.dst.rt_next;
                rt_free(rth);
                continue;
            }
            if (compare_keys(&rth->fl, &rt->fl) && compare_netns(rth, rt)) {
                /* Put it first */
                *rthp = rth->u.dst.rt_next;
                /*
                 * Since lookup is lockfree, the deletion
                 * must be visible to another weakly ordered CPU before
                 * the insertion at the start of the hash chain.
                 */
                rcu_assign_pointer(rth->u.dst.rt_next,
                                   rt_hash_table[hash].chain);
                /*
                 * Since lookup is lockfree, the update writes
                 * must be ordered for consistency on SMP.
                 */
                rcu_assign_pointer(rt_hash_table[hash].chain, rth);

                dst_use(&rth->u.dst, now);
                spin_unlock_bh(rt_hash_lock_addr(hash));

                rt_drop(rt);
                if (rp)
                    *rp = rth;
                else
                    skb_dst_set(skb, &rth->u.dst);
                return 0;
            }

            if (!atomic_read(&rth->u.dst.__refcnt)) {
                u32 score = rt_score(rth);

                if (score <= min_score) {
                    cand = rth;
                    candp = rthp;
                    min_score = score;
                }
            }

            chain_length++;

            rthp = &rth->u.dst.rt_next;
        }

        if (cand) {
            /* ip_rt_gc_elasticity used to be average length of chain
             * length, when exceeded gc becomes really aggressive.
             *
             * The second limit is less certain. At the moment it allows
             * only 2 entries per bucket. We will see.
             */
            if (chain_length > ip_rt_gc_elasticity) {
                *candp = cand->u.dst.rt_next;
                rt_free(cand);
            }
        } else {
            if (chain_length > rt_chain_length_max) {
                struct net *net = dev_net(rt->u.dst.dev);
                int num = ++net->ipv4.current_rt_cache_rebuild_count;
                if (!rt_caching(dev_net(rt->u.dst.dev))) {
                    printk(KERN_WARNING "%s: %d rebuilds is over limit, route caching disabled\n",
                        rt->u.dst.dev->name, num);
                }
                rt_emergency_hash_rebuild(dev_net(rt->u.dst.dev));
            }
        }

        /* Try to bind route to arp only if it is output
           route or unicast forwarding path.
         */
        if (rt->rt_type == RTN_UNICAST || rt->fl.iif == 0) {
            int err = arp_bind_neighbour(&rt->u.dst);
            if (err) {
                spin_unlock_bh(rt_hash_lock_addr(hash));

                if (err != -ENOBUFS) {
                    rt_drop(rt);
                    return err;
                }

                /* Neighbour tables are full and nothing
                   can be released. Try to shrink route cache,
                   it is most likely it holds some neighbour records.
                 */
                if (attempts-- > 0) {
                    int saved_elasticity = ip_rt_gc_elasticity;
                    int saved_int = ip_rt_gc_min_interval;
                    ip_rt_gc_elasticity = 1;
                    ip_rt_gc_min_interval = 0;
                    rt_garbage_collect(&ipv4_dst_ops);
                    ip_rt_gc_min_interval = saved_int;
                    ip_rt_gc_elasticity = saved_elasticity;
                    goto restart;
                }

                if (net_ratelimit())
                    printk(KERN_WARNING "Neighbour table overflow.\n");
                rt_drop(rt);
                return -ENOBUFS;
            }
        }

        rt->u.dst.rt_next = rt_hash_table[hash].chain;

    #if RT_CACHE_DEBUG >= 2
        if (rt->u.dst.rt_next) {
            struct rtable *trt;
            printk(KERN_DEBUG "rt_cache @%02x: %pI4",
                   hash, &rt->rt_dst);
            for (trt = rt->u.dst.rt_next; trt; trt = trt->u.dst.rt_next)
                printk(" . %pI4", &trt->rt_dst);
            printk("\n");
        }
    #endif
        /*
         * Since lookup is lockfree, we must make sure
         * previous writes to rt are comitted to memory
         * before making rt visible to other CPUS.
         */
        rcu_assign_pointer(rt_hash_table[hash].chain, rt);

        spin_unlock_bh(rt_hash_lock_addr(hash));

    skip_hashing:
        if (rp)
            *rp = rt;
        else
            skb_dst_set(skb, &rt->u.dst);
        return 0;
    }

Cache lookup

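ip_route_input: used for routing lookups on ingress traffic, which may be delivered locally or forwarded. It decides how to handle ordinary packets (deliver locally, forward, drop, and so on), and other subsystems also use it to decide how to handle their ingress traffic.

ip_route_output_key: used for routing lookups on egress traffic, which is generated locally and may be delivered locally or transmitted.

Function names that end in _slow emphasize the difference in speed between a cache lookup and a routing table lookup. The two lookup paths are also known as the fast path and the slow path.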

    int ip_route_input(struct sk_buff *skb, __be32 daddr, __be32 saddr,
                       u8 tos, struct net_device *dev)
    {
        struct rtable * rth;
        unsigned hash;
        int iif = dev->ifindex;
        struct net *net;

        net = dev_net(dev);

        if (!rt_caching(net))
            goto skip_cache;

        tos &= IPTOS_RT_MASK;
        hash = rt_hash(daddr, saddr, iif, rt_genid(net));

        rcu_read_lock();
        for (rth = rcu_dereference(rt_hash_table[hash].chain); rth;
             rth = rcu_dereference(rth->u.dst.rt_next)) {
            if (((rth->fl.fl4_dst ^ daddr) |
                 (rth->fl.fl4_src ^ saddr) |
                 (rth->fl.iif ^ iif) |
                 rth->fl.oif |
                 (rth->fl.fl4_tos ^ tos)) == 0 &&
                rth->fl.mark == skb->mark &&
                net_eq(dev_net(rth->u.dst.dev), net) &&
                !rt_is_expired(rth)) {
                dst_use(&rth->u.dst, jiffies);
                RT_CACHE_STAT_INC(in_hit);
                rcu_read_unlock();
                skb_dst_set(skb, &rth->u.dst);
                return 0;
            }
            RT_CACHE_STAT_INC(in_hlist_search);
        }
        rcu_read_unlock();

    skip_cache:
        /* Multicast recognition logic is moved from route cache to here.
           The problem was that too many Ethernet cards have broken/missing
           hardware multicast filters :-( As result the host on multicasting
           network acquires a lot of useless route cache entries, sort of
           SDR messages from all the world. Now we try to get rid of them.
           Really, provided software IP multicast filter is organized
           reasonably (at least, hashed), it does not result in a slowdown
           comparing with route cache reject entries.
           Note, that multicast routers are not affected, because
           route cache entry is created eventually.
         */
        if (ipv4_is_multicast(daddr)) {
            struct in_device *in_dev;

            rcu_read_lock();
            if ((in_dev = __in_dev_get_rcu(dev)) != NULL) {
                int our = ip_check_mc(in_dev, daddr, saddr,
                                      ip_hdr(skb)->protocol);
                if (our
    #ifdef CONFIG_IP_MROUTE
                    || (!ipv4_is_local_multicast(daddr) &&
                        IN_DEV_MFORWARD(in_dev))
    #endif
                    ) {
                    rcu_read_unlock();
                    return ip_route_input_mc(skb, daddr, saddr,
                                             tos, dev, our);
                }
            }
            rcu_read_unlock();
            return -EINVAL;
        }
        return ip_route_input_slow(skb, daddr, saddr, tos, dev);
    }

    int __ip_route_output_key(struct net *net, struct rtable **rp,
                              const struct flowi *flp)
    {
        unsigned hash;
        struct rtable *rth;

        if (!rt_caching(net))
            goto slow_output;

        hash = rt_hash(flp->fl4_dst, flp->fl4_src, flp->oif, rt_genid(net));

        rcu_read_lock_bh();
        for (rth = rcu_dereference(rt_hash_table[hash].chain); rth;
             rth = rcu_dereference(rth->u.dst.rt_next)) {
            if (rth->fl.fl4_dst == flp->fl4_dst &&
                rth->fl.fl4_src == flp->fl4_src &&
                rth->fl.iif == 0 &&
                rth->fl.oif == flp->oif &&
                rth->fl.mark == flp->mark &&
                !((rth->fl.fl4_tos ^ flp->fl4_tos) &
                  (IPTOS_RT_MASK | RTO_ONLINK)) &&
                net_eq(dev_net(rth->u.dst.dev), net) &&
                !rt_is_expired(rth)) {
                dst_use(&rth->u.dst, jiffies);
                RT_CACHE_STAT_INC(out_hit);
                rcu_read_unlock_bh();
                *rp = rth;
                return 0;
            }
            RT_CACHE_STAT_INC(out_hlist_search);
        }
        rcu_read_unlock_bh();

    slow_output:
        return ip_route_output_slow(net, rp, flp);
    }

Interface between DST and the calling protocols

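The main data structure DST exposes to higher layers is dst_entry. Protocol-specific structures such as rtable are merely wrappers around it. IP owns its routing cache, but other protocols often keep references to its elements. All those references point to dst_entry, not to the protocol's wrapper structure rtable. sk_buff buffers likewise hold a reference to a dst_entry rather than to an rtable, and that reference stores the result of the routing lookup.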
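The wrapper relationship can be sketched in user space as follows. `demo_dst` and `demo_rtable` are hypothetical stand-ins for dst_entry and rtable. Generic code holds only the inner pointer, and protocol code recovers its wrapper from it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the protocol-independent part (dst_entry). */
struct demo_dst {
    int refcnt;
};

/* Hypothetical stand-in for the IPv4 wrapper (rtable). */
struct demo_rtable {
    struct demo_dst dst;       /* embedded generic part */
    unsigned int    gateway;   /* protocol-specific data */
};

/* What generic code stores: a pointer to the embedded part. */
static struct demo_dst *demo_to_dst(struct demo_rtable *rt)
{
    assert(rt != NULL);
    return &rt->dst;
}

/* What IPv4 code does to get its wrapper back from the generic pointer. */
static struct demo_rtable *demo_to_rtable(struct demo_dst *dst)
{
    assert(dst != NULL);
    return (struct demo_rtable *)((char *)dst - offsetof(struct demo_rtable, dst));
}
```

Using `offsetof` keeps the conversion correct even if the embedded member is not the first field of the wrapper.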

    static struct dst_ops ipv4_dst_ops = {
        .family = AF_INET,
        .protocol = cpu_to_be16(ETH_P_IP),
        .gc = rt_garbage_collect,
        .check = ipv4_dst_check,
        .destroy = ipv4_dst_destroy,
        .ifdown = ipv4_dst_ifdown,
        .negative_advice = ipv4_negative_advice,
        .link_failure = ipv4_link_failure,
        .update_pmtu = ip_rt_update_pmtu,
        .local_out = __ip_local_out,
        .entries = ATOMIC_INIT(0),
    };

Whenever the DST subsystem receives an event notification, or a request is made through one of the DST interface functions, the protocol associated with the affected dst_entry instance is notified by invoking the appropriate function of the dst_ops VFT that the dst_entry points to.

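gc: performs garbage collection. It runs when the subsystem allocates a new cache entry with dst_alloc and that function finds that memory is short (in the dst_alloc code shown earlier, when the number of entries exceeds gc_thresh).

check: a cached route whose dst_entry is marked dead is normally no longer used, but this does not necessarily hold when IPsec is in use. This function checks whether an obsolete dst_entry is still usable.

destroy: called by dst_destroy, which DST uses to delete a dst_entry structure. It informs the calling protocol of the deletion so that the protocol can do any necessary cleanup first.

ifdown: called by dst_ifdown, which the DST subsystem itself invokes when a device is shut down or unregistered. It is called once for each affected cached route.

negative_advice: called by the DST function dst_negative_advice, which is used to tell DST that something is wrong with a dst_entry instance.

link_failure: called by the DST function dst_link_failure, which is invoked when a packet transmission problem occurs because the destination is unreachable.

update_pmtu: updates the PMTU of a cached route. It is usually called while processing a received ICMP Fragmentation Needed message.

get_mss: returns the TCP maximum segment size that can be used on this route.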
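The dispatch through a table of function pointers described above can be sketched like this. The structures and functions are hypothetical and simplified: for instance, the hook here takes the entry itself, whereas the link_failure member shown earlier takes an sk_buff.

```c
#include <assert.h>
#include <stddef.h>

struct demo_dst;

/* Hypothetical ops table with one optional hook. */
struct demo_dst_ops {
    void (*link_failure)(struct demo_dst *dst);
};

struct demo_dst {
    const struct demo_dst_ops *ops;  /* table supplied by the owning protocol */
    int failures;
};

/* Generic entry point: forwards to the protocol hook when one is set. */
static void demo_dst_link_failure(struct demo_dst *dst)
{
    if (dst && dst->ops && dst->ops->link_failure)
        dst->ops->link_failure(dst);
}

/* Protocol-specific handler, here just counting the failures. */
static void demo_ipv4_link_failure(struct demo_dst *dst)
{
    assert(dst != NULL);
    dst->failures++;
}

static const struct demo_dst_ops demo_ipv4_ops = {
    .link_failure = demo_ipv4_link_failure,
};
```

Checking the pointer before the call lets a protocol leave any hook it does not need unset.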

External events

    static int dst_dev_event(struct notifier_block *this, unsigned long event, void *ptr)
    {
        struct net_device *dev = ptr;
        struct dst_entry *dst, *last = NULL;

        switch (event) {
        case NETDEV_UNREGISTER:
        case NETDEV_DOWN:
            mutex_lock(&dst_gc_mutex);
            for (dst = dst_busy_list; dst; dst = dst->next) {
                last = dst;
                dst_ifdown(dst, dev, event != NETDEV_DOWN);
            }

            spin_lock_bh(&dst_garbage.lock);
            dst = dst_garbage.list;
            dst_garbage.list = NULL;
            spin_unlock_bh(&dst_garbage.lock);

            if (last)
                last->next = dst;
            else
                dst_busy_list = dst;
            for (; dst; dst = dst->next) {
                dst_ifdown(dst, dev, event != NETDEV_DOWN);
            }
            mutex_unlock(&dst_gc_mutex);
            break;
        }
        return NOTIFY_DONE;
    }

Flushing the routing cache

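The routing cache is flushed when any of the following happens:

- A device comes up or goes down. Addresses that used to be reachable through a given device may no longer be reachable, or may become reachable through another device that offers a better route.

- An IP address is added to or removed from a device.

- The global forwarding state or the forwarding state of a device changes. If forwarding is disabled, all the cached routes that were used to forward traffic must be removed.

- A route is deleted.

- An administrative flush is requested through the /proc interface.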

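Garbage collection

- Freeing memory when a shortage is detected. This actually consists of two tasks, one synchronous and one asynchronous. The synchronous task is triggered at irregular times by specific conditions. The asynchronous task runs more or less regularly when a timer expires.

- Cleaning up dst_entry structures that the kernel has asked to delete but that cannot be removed immediately because something still references them.

Synchronous cleanup

- When a new entry is to be added to the routing cache but memory is found to be short.

- When a new entry is to be added to the routing cache but the total number of entries would exceed the gc_thresh threshold.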

    static int rt_garbage_collect(struct dst_ops *ops)
    {
        static unsigned long expire = RT_GC_TIMEOUT;
        static unsigned long last_gc;
        static int rover;
        static int equilibrium;
        struct rtable *rth, **rthp;
        unsigned long now = jiffies;
        int goal;

        /*
         * Garbage collection is pretty expensive,
         * do not make it too frequently.
         */

        RT_CACHE_STAT_INC(gc_total);

        if (now - last_gc < ip_rt_gc_min_interval &&
            atomic_read(&ipv4_dst_ops.entries) < ip_rt_max_size) {
            RT_CACHE_STAT_INC(gc_ignored);
            goto out;
        }

        /* Calculate number of entries, which we want to expire now. */
        goal = atomic_read(&ipv4_dst_ops.entries) -
            (ip_rt_gc_elasticity << rt_hash_log);
        if (goal <= 0) {
            if (equilibrium < ipv4_dst_ops.gc_thresh)
                equilibrium = ipv4_dst_ops.gc_thresh;
            goal = atomic_read(&ipv4_dst_ops.entries) - equilibrium;
            if (goal > 0) {
                equilibrium += min_t(unsigned int, goal >> 1, rt_hash_mask + 1);
                goal = atomic_read(&ipv4_dst_ops.entries) - equilibrium;
            }
        } else {
            /* We are in dangerous area. Try to reduce cache really
             * aggressively.
             */
            goal = max_t(unsigned int, goal >> 1, rt_hash_mask + 1);
            equilibrium = atomic_read(&ipv4_dst_ops.entries) - goal;
        }

        if (now - last_gc >= ip_rt_gc_min_interval)
            last_gc = now;

        if (goal <= 0) {
            equilibrium += goal;
            goto work_done;
        }

        do {
            int i, k;

            for (i = rt_hash_mask, k = rover; i >= 0; i--) {
                unsigned long tmo = expire;

                k = (k + 1) & rt_hash_mask;
                rthp = &rt_hash_table[k].chain;
                spin_lock_bh(rt_hash_lock_addr(k));
                while ((rth = *rthp) != NULL) {
                    if (!rt_is_expired(rth) &&
                        !rt_may_expire(rth, tmo, expire)) {
                        tmo >>= 1;
                        rthp = &rth->u.dst.rt_next;
                        continue;
                    }
                    *rthp = rth->u.dst.rt_next;
                    rt_free(rth);
                    goal--;
                }
                spin_unlock_bh(rt_hash_lock_addr(k));
                if (goal <= 0)
                    break;
            }
            rover = k;

            if (goal <= 0)
                goto work_done;

            /* Goal is not achieved. We stop process if:

               - if expire reduced to zero. Otherwise, expire is halfed.
               - if table is not full.
               - if we are called from interrupt.
               - jiffies check is just fallback/debug loop breaker.
                 We will not spin here for long time in any case.
             */

            RT_CACHE_STAT_INC(gc_goal_miss);

            if (expire == 0)
                break;

            expire >>= 1;
    #if RT_CACHE_DEBUG >= 2
            printk(KERN_DEBUG "expire>> %u %d %d %d\n", expire,
                   atomic_read(&ipv4_dst_ops.entries), goal, i);
    #endif

            if (atomic_read(&ipv4_dst_ops.entries) < ip_rt_max_size)
                goto out;
        } while (!in_softirq() && time_before_eq(jiffies, now));

        if (atomic_read(&ipv4_dst_ops.entries) < ip_rt_max_size)
            goto out;
        if (net_ratelimit())
            printk(KERN_WARNING "dst cache overflow\n");
        RT_CACHE_STAT_INC(gc_dst_overflow);
        return 1;

    work_done:
        expire += ip_rt_gc_min_interval;
        if (expire > ip_rt_gc_timeout ||
            atomic_read(&ipv4_dst_ops.entries) < ipv4_dst_ops.gc_thresh)
            expire = ip_rt_gc_timeout;
    #if RT_CACHE_DEBUG >= 2
        printk(KERN_DEBUG "expire++ %u %d %d %d\n", expire,
               atomic_read(&ipv4_dst_ops.entries), goal, rover);
    #endif
    out:    return 0;
    }

Expiration criteria

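By default, routing cache entries never expire, because dst_entry->expires is 0. When an event occurs that should make a cache entry expire, dst_set_expires sets the entry's dst_entry->expires timestamp to a non-zero value, after which the entry can expire.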

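Variables that tune and control garbage collection

dst_garbage_list: a list of dst_entry structures waiting to be deleted. The timer handler processes this list when the dst_gc_timer timer expires. Only entries that are due for deletion but still have a reference count __refcnt greater than 0 are placed on the list, since they cannot be deleted directly. New entries are inserted at the head of the list.

dst_gc_timer_expires, dst_gc_timer_inc: dst_gc_timer_expires is the number of seconds the timer waits before expiring, and its value ranges between DST_GC_MIN and DST_GC_MAX. When the timer handler dst_run_gc runs but cannot empty the dst_garbage_list list, it increases this wait by dst_gc_timer_inc units. dst_gc_timer_inc itself must also lie between DST_GC_MIN and DST_GC_MAX.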
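The back-off of the timer interval can be sketched as follows. The bounds are hypothetical values chosen for the example, not the kernel's DST_GC_MIN and DST_GC_MAX, and `demo_next_wait` models only the rule stated above: grow the wait by the increment when the list could not be emptied, and keep it within the bounds.

```c
#include <assert.h>

#define DEMO_GC_MIN 1UL     /* hypothetical lower bound for this sketch */
#define DEMO_GC_MAX 120UL   /* hypothetical upper bound for this sketch */

/*
 * Compute the next timer wait. The wait grows by `inc` when the garbage
 * list was not emptied, and the result is clamped to [MIN, MAX].
 */
static unsigned long demo_next_wait(unsigned long wait, unsigned long inc,
                                    int list_emptied)
{
    assert(inc >= DEMO_GC_MIN && inc <= DEMO_GC_MAX);

    if (!list_emptied)
        wait += inc;
    if (wait < DEMO_GC_MIN)
        wait = DEMO_GC_MIN;
    if (wait > DEMO_GC_MAX)
        wait = DEMO_GC_MAX;
    return wait;
}
```

Backing off this way avoids rescanning a list whose entries are still referenced more often than is useful.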
