-- Query 1: last_value with the default window frame (partition start up to the current row).
SELECT *
FROM (
    SELECT id
          ,last_value(name, TRUE)    OVER (PARTITION BY id ORDER BY up_time) AS name
          ,last_value(age, TRUE)     OVER (PARTITION BY id ORDER BY up_time) AS age
          ,last_value(address, TRUE) OVER (PARTITION BY id ORDER BY up_time) AS address
          ,last_value(ct_time, TRUE) OVER (PARTITION BY id ORDER BY up_time) AS ct_time
          ,up_time
          -- rank = 1 marks the row with the latest up_time for each id
          ,row_number() OVER (PARTITION BY id ORDER BY up_time DESC) AS rank
    FROM (
        -- Sample data: id 1 receives four partial updates, id 2 a single row
        SELECT 1 AS id, 'a' AS name, 'a' AS age, NULL AS address, 202301 AS ct_time, 202301 AS up_time
        UNION ALL
        SELECT 1 AS id, 'b' AS name, 'b' AS age, NULL AS address, NULL AS ct_time, 202302 AS up_time
        UNION ALL
        SELECT 1 AS id, NULL AS name, 'c' AS age, NULL AS address, NULL AS ct_time, 202303 AS up_time
        UNION ALL
        SELECT 1 AS id, 'd' AS name, NULL AS age, NULL AS address, NULL AS ct_time, 202304 AS up_time
        UNION ALL
        SELECT 2 AS id, 'a' AS name, 'a' AS age, NULL AS address, 202301 AS ct_time, 202301 AS up_time
    ) t
) merged  -- alias added because some engines require one for a subquery in FROM
WHERE rank = 1
;

-- Query 2: the same merge, with the window frame spelled out so every row sees its whole partition.
SELECT *
FROM (
    SELECT id
          ,last_value(name, TRUE)    OVER (PARTITION BY id ORDER BY up_time ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS name
          ,last_value(age, TRUE)     OVER (PARTITION BY id ORDER BY up_time ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS age
          ,last_value(address, TRUE) OVER (PARTITION BY id ORDER BY up_time ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS address
          ,last_value(ct_time, TRUE) OVER (PARTITION BY id ORDER BY up_time ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS ct_time
          ,up_time
          -- rank = 1 marks the row with the latest up_time for each id
          ,row_number() OVER (PARTITION BY id ORDER BY up_time DESC) AS rank
    FROM (
        -- Sample data: id 1 receives four partial updates, id 2 a single row
        SELECT 1 AS id, 'a' AS name, 'a' AS age, NULL AS address, 202301 AS ct_time, 202301 AS up_time
        UNION ALL
        SELECT 1 AS id, 'b' AS name, 'b' AS age, NULL AS address, NULL AS ct_time, 202302 AS up_time
        UNION ALL
        SELECT 1 AS id, NULL AS name, 'c' AS age, NULL AS address, NULL AS ct_time, 202303 AS up_time
        UNION ALL
        SELECT 1 AS id, 'd' AS name, NULL AS age, NULL AS address, NULL AS ct_time, 202304 AS up_time
        UNION ALL
        SELECT 2 AS id, 'a' AS name, 'a' AS age, NULL AS address, 202301 AS ct_time, 202301 AS up_time
    ) t
) merged  -- alias added because some engines require one for a subquery in FROM
WHERE rank = 1
;

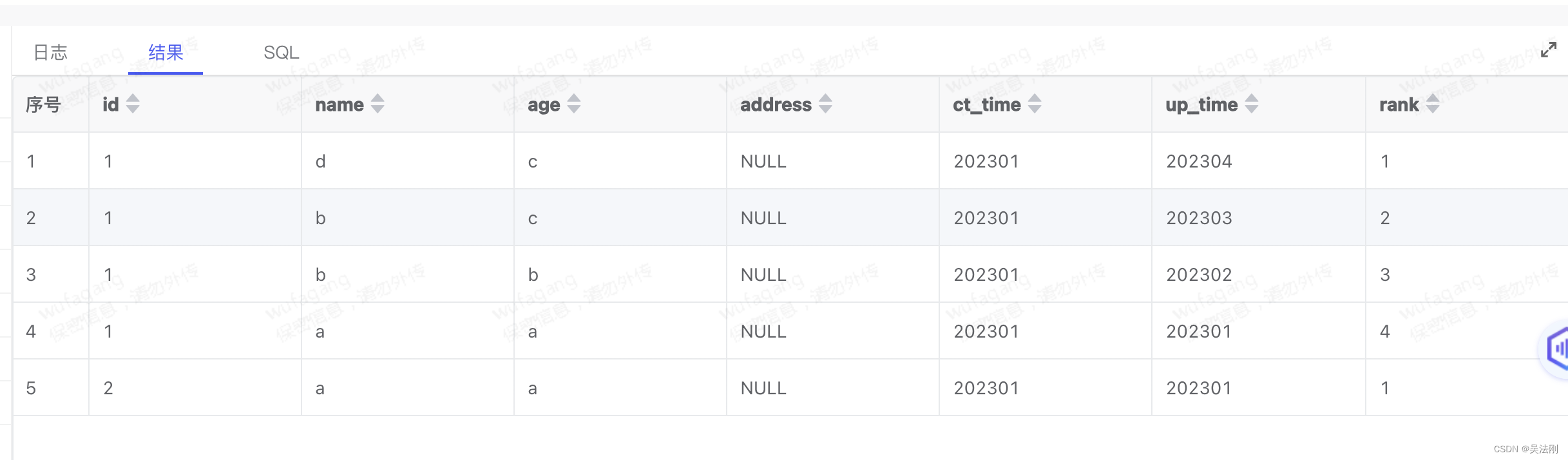

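In the SQL above, last_value is applied to each column, partitioned by the primary key id and ordered by up_time, to pick up that column's most recent value; the second argument, TRUE, tells it to skip NULLs. The row_number window function then ranks the rows of each id by up_time in descending order, and keeping only the first row yields one merged record per id. The two queries differ only in the window frame: the first relies on the default frame, which ends at the current row, while the second spells out ROWS BETWEEN unbounded preceding and unbounded following. Because the row that is kept is the latest one for its id, its frame covers every row of that id either way, so for this sample data both queries return the same result.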
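To make the merge semantics concrete outside SQL, here is a minimal Python sketch of the same idea, using the sample rows from the queries. The function name `merge_latest_non_null` and the `COLUMNS` list are hypothetical names introduced for illustration; this is not how any SQL engine evaluates window functions, only a restatement of "last non-NULL value per column per id".

```python
from typing import Any, Dict, List

# Columns merged with "last non-null wins"; id and up_time are handled separately.
COLUMNS = ["name", "age", "address", "ct_time"]


def merge_latest_non_null(rows: List[Dict[str, Any]]) -> Dict[int, Dict[str, Any]]:
    """Return one merged record per id, built by replaying rows in up_time order."""
    merged: Dict[int, Dict[str, Any]] = {}
    # Ascending up_time mirrors ORDER BY up_time in the window definition.
    for row in sorted(rows, key=lambda r: r["up_time"]):
        target = merged.setdefault(
            row["id"], {"id": row["id"], **{col: None for col in COLUMNS}}
        )
        for col in COLUMNS:
            # Skipping None plays the role of the TRUE (ignore nulls) argument.
            if row[col] is not None:
                target[col] = row[col]
        # The surviving row is the latest one, so up_time is simply overwritten.
        target["up_time"] = row["up_time"]
    return merged


# Same sample data as the UNION ALL branches in the queries.
rows = [
    {"id": 1, "name": "a", "age": "a", "address": None, "ct_time": 202301, "up_time": 202301},
    {"id": 1, "name": "b", "age": "b", "address": None, "ct_time": None, "up_time": 202302},
    {"id": 1, "name": None, "age": "c", "address": None, "ct_time": None, "up_time": 202303},
    {"id": 1, "name": "d", "age": None, "address": None, "ct_time": None, "up_time": 202304},
    {"id": 2, "name": "a", "age": "a", "address": None, "ct_time": 202301, "up_time": 202301},
]

result = merge_latest_non_null(rows)
for record in result.values():
    print(record)
```

For id 1 the merged record takes name from the 202304 update, age from the 202303 update, and ct_time from the original 202301 row, while address stays empty because no row ever supplied it. The sketch assumes up_time is unique within an id; with ties, the winner depends on input order, much as the SQL result would be nondeterministic.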
