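Part 1. Preparing the environment

You need three hosts. I use three virtual machines running CentOS 7, with the IPs 192.168.0.100, 192.168.0.101, and 192.168.0.102.

1. Change the hostname on each of the three hosts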

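On the master (192.168.0.100):

vi /etc/hostname

master01

On the first node (192.168.0.101):

vi /etc/hostname

node01

On the second node (192.168.0.102):

vi /etc/hostname

node02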

2. Edit /etc/hosts on all three hosts

vi /etc/hosts

192.168.0.100 master01

192.168.0.101 node01

192.168.0.102 node02
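Editing /etc/hosts by hand on three machines is easy to get wrong. The following is a minimal sketch, assuming bash and awk are available, that appends a mapping only when the name is not already present. The helper name `add_host_entry` is made up for this illustration and is not part of any tool.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless an uncommented line already maps NAME.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Look for NAME as a whole field after the address column.
  if ! awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on each host (as root):
#   add_host_entry /etc/hosts 192.168.0.100 master01
#   add_host_entry /etc/hosts 192.168.0.101 node01
#   add_host_entry /etc/hosts 192.168.0.102 node02
```

Because the function checks before appending, running it a second time leaves the file unchanged.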

Update the installed packages

yum update

Install the dependencies

yum install -y conntrack ipvsadm ipset jq sysstat curl iptables libseccomp

3. Disable the firewall and related services

systemctl stop firewalld && systemctl disable firewalld

Reset iptables

iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT

Turn off swap

swapoff -a

Turn it off permanently

vi /etc/fstab

# Comment out the line that mentions swap
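If you prefer not to edit /etc/fstab by hand on every host, the same change can be scripted. This is a sketch under the assumption that swap entries carry the word `swap` as a whitespace-separated field, which is how the stock CentOS 7 fstab writes them; `comment_out_swap` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# comment_out_swap FILE  (hypothetical helper)
# Prefixes "#" to every line that is not already a comment and that
# contains "swap" as a whitespace-delimited field. A backup is kept in FILE.bak.
comment_out_swap() {
  local file=$1
  sed -i.bak '/^[[:space:]]*#/!s/^\(.*[[:space:]]swap[[:space:]].*\)$/#\1/' "$file"
}

# Usage (as root):
#   comment_out_swap /etc/fstab
```

Lines that are already commented are skipped, so the function can be run repeatedly without stacking `#` characters.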

# Disable SELinux

setenforce 0

vi /etc/sysconfig/selinux

# In that file, set SELINUX to disabled: SELINUX=disabled
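The edit above can also be done non-interactively. The sketch below assumes the file has a line starting with `SELINUX=`; `set_selinux_disabled` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# set_selinux_disabled FILE  (hypothetical helper)
# Rewrites the SELINUX= line to SELINUX=disabled and leaves SELINUXTYPE= alone.
# A backup is kept in FILE.bak.
set_selinux_disabled() {
  local file=$1
  sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Usage (as root):
#   set_selinux_disabled /etc/selinux/config
```

On a stock CentOS 7 install, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. `sed -i` replaces a symlink with a regular file instead of editing its target, so the usage line points at /etc/selinux/config directly.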

# Synchronize the clocks

# Install ntpdate

yum install ntpdate -y

# Add a scheduled job

crontab -e

Insert the following line:

0-59/10 * * * * /usr/sbin/ntpdate us.pool.ntp.org | logger -t NTP

# Synchronize once by hand first

ntpdate us.pool.ntp.org

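4. Install Docker

If Docker is already installed, check whether the version is the same on all hosts. If it is not, uninstall it and install again.

Uninstall the existing version

yum remove -y docker* container-selinux

Delete the existing containers and images:

sudo rm -rf /var/lib/docker

Install the dependencies

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Alibaba Cloud mirror repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker CE

sudo yum install docker-ce

Enable Docker at boot

sudo systemctl enable docker

Start the Docker service

sudo systemctl start docker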

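5. Set up passwordless SSH login (all three hosts)

[root@master ~]# ssh-keygen -t rsa

// Generate the key pair; press Enter at every prompt

Copy the public key to the other hosts

ssh-copy-id node01

ssh-copy-id node02

Copy the hosts file to the other hosts; skip this if you already edited it on each host

scp /etc/hosts node01:/etc

scp /etc/hosts node02:/etc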

6. Enable IP forwarding and iptables bridging (all three hosts). Two methods follow; the second is recommended

[root@master ~]# vim /etc/sysctl.d/k8s.conf

// Enable iptables bridging

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@master ~]# echo net.ipv4.ip_forward = 1 >> /etc/sysctl.conf

// Enable IP forwarding

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

[root@master ~]# sysctl -p

// Reload the settings

Or (recommended)

# Write the configuration file

$ cat <<EOF > /etc/sysctl.d/kubernetes.conf
vm.swappiness=0
vm.overcommit_memory = 1
vm.panic_on_oom=0
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
fs.inotify.max_user_watches=89100
EOF

# Apply the configuration file

$ sysctl -p /etc/sysctl.d/kubernetes.conf

If the command above fails, the br_netfilter kernel module is probably not loaded. Load it, then apply the file again:

[root@master ~]# modprobe br_netfilter

Copy the IP forwarding and iptables bridging settings to the other hosts

[root@master ~]# scp /etc/sysctl.d/kubernetes.conf node01:/etc/sysctl.d/

[root@master ~]# scp /etc/sysctl.d/kubernetes.conf node02:/etc/sysctl.d/

[root@master ~]# scp /etc/sysctl.conf node02:/etc/

[root@master ~]# scp /etc/sysctl.conf node01:/etc/

Remember to run the following commands on node01 and node02 as well

[root@master ~]# sysctl -p /etc/sysctl.d/kubernetes.conf

[root@master ~]# sysctl -p
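To confirm that the copied settings are actually live on each host, you can compare the file with the running kernel. The sketch below reads a value out of a sysctl-style file; `conf_value` is a hypothetical helper name, and the comparison loop in the comment assumes the file path used in this guide.

```shell
#!/usr/bin/env bash
# conf_value FILE KEY  (hypothetical helper)
# Prints the value assigned to KEY in a sysctl-style "key = value" file.
# Comment lines are ignored; if KEY appears more than once, the last one wins.
conf_value() {
  local file=$1 key=$2
  awk -F= -v k="$key" '
    /^[[:space:]]*#/ { next }
    {
      name = $1; gsub(/[[:space:]]/, "", name)
      if (name == k) { val = $2; gsub(/[[:space:]]/, "", val); print val }
    }' "$file" | tail -n 1
}

# Usage on each host:
#   for key in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables; do
#     [ "$(sysctl -n "$key")" = "$(conf_value /etc/sysctl.d/kubernetes.conf "$key")" ] \
#       || echo "mismatch: $key"
#   done
```

A mismatch on the `net.bridge.*` keys usually means br_netfilter is not loaded on that host.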

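Part 2. Installing and deploying k8s

· kubeadm: the command used to deploy the cluster

· kubelet: the component that must run on every machine in the cluster; it manages the lifecycle of pods and containers

· kubectl: the cluster management tool

(1) Configure the yum repository for Kubernetes (all three hosts)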

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

After the repo file is written, check that the repository is usable

[root@master ~]# yum repolist

Build the local cache (all three hosts)

[root@master ~]# yum makecache fast

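(2) Install the required packages on each node

1. Install the packages on all three hosts (change the version number as needed)

On the master:

[root@master ~]# yum -y install kubeadm-1.15.0-0 kubelet-1.15.0-0 kubectl-1.15.0-0

On each node:

[root@node01 ~]# yum -y install kubeadm-1.15.0-0 kubelet-1.15.0-0

2. Enable kubelet at boot on all three hosts

systemctl enable kubelet

3. Create a working directory on every node; files are copied into it later

mkdir -p /home/glory/working

cd /home/glory/working/

# From here on, the steps set up the API server and all run on the master

4. Generate the configuration file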

kubeadm config print init-defaults ClusterConfiguration > kubeadm.conf

5. Edit the following items in kubeadm.conf:

imageRepository

kubernetesVersion

vi kubeadm.conf

# Change imageRepository: k8s.gcr.io

# to registry.cn-hangzhou.aliyuncs.com/google_containers

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

# Change the generated kubernetesVersion (v1.13.0 in this example)

# to kubernetesVersion: v1.15.0

kubernetesVersion: v1.15.0
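The two edits can also be applied with sed instead of vi. This is a sketch, assuming both keys appear at the start of a line as they do in the generated file; `set_kubeadm_image_settings` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# set_kubeadm_image_settings FILE REPO VERSION  (hypothetical helper)
# Rewrites the top-level imageRepository and kubernetesVersion lines in a
# kubeadm config file. A backup is kept in FILE.bak.
set_kubeadm_image_settings() {
  local file=$1 repo=$2 version=$3
  sed -i.bak \
    -e "s|^imageRepository:.*|imageRepository: ${repo}|" \
    -e "s|^kubernetesVersion:.*|kubernetesVersion: ${version}|" \
    "$file"
}

# Usage (in /home/glory/working on the master):
#   set_kubeadm_image_settings kubeadm.conf \
#     registry.cn-hangzhou.aliyuncs.com/google_containers v1.15.0
```

The `|` delimiter is used so that the slashes in the registry path need no escaping.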

Also set the API server address in kubeadm.conf. This address is used repeatedly in later steps; it is the address the API server advertises.

localAPIEndpoint:
  advertiseAddress: 192.168.0.100
  bindPort: 6443

Note: 192.168.0.100 is the IP address of the master host.

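Configure the cluster subnets

networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

Here 10.244.0.0/16 and 10.96.0.0/12 are the subnets for pods and services inside k8s. It is best to keep these values, because the flannel network configured later uses 10.244.0.0/16. The generated file may not contain a podSubnet line; if so, add one. YAML is strict about formatting, so do not add or drop spaces in the indentation.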
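Since the pod subnet has to agree with the `Network` value in the flannel manifest created later in this guide, a quick comparison can catch a mismatch before anything is deployed. This is a sketch that only does text matching on the two files; the function names are made up for the illustration.

```shell
#!/usr/bin/env bash
# All three functions are hypothetical helpers, not part of kubeadm or flannel.

# Print the podSubnet value from a kubeadm config file.
kubeadm_pod_subnet() {
  sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*//p' "$1" | head -n 1
}

# Print the "Network" value from the net-conf.json section of kube-flannel.yml.
flannel_network() {
  sed -n 's/^[[:space:]]*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Succeed only if both values are present and identical.
check_pod_subnet() {
  local a b
  a=$(kubeadm_pod_subnet "$1")
  b=$(flannel_network "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage (once both files exist in /home/glory/working):
#   check_pod_subnet kubeadm.conf kube-flannel.yml || echo "pod subnet mismatch"
```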

6. List the images that need to be pulled

$ kubeadm config images list --config kubeadm.conf

registry.cn-beijing.aliyuncs.com/imcto/kube-apiserver:v1.15.0
registry.cn-beijing.aliyuncs.com/imcto/kube-controller-manager:v1.13.1
registry.cn-beijing.aliyuncs.com/imcto/kube-scheduler:v1.13.1
registry.cn-beijing.aliyuncs.com/imcto/kube-proxy:v1.13.1
registry.cn-beijing.aliyuncs.com/imcto/pause:3.1
registry.cn-beijing.aliyuncs.com/imcto/etcd:3.2.24
registry.cn-beijing.aliyuncs.com/imcto/coredns:1.2.6

The listing above is sample output. The registry and version tags in your own output follow the imageRepository and kubernetesVersion set in kubeadm.conf.

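7. Pull the images

kubeadm config images pull --config ./kubeadm.conf

8. Initialize and start the cluster

Initialize

sudo kubeadm init --config ./kubeadm.conf

If it reports errors, fix what each message points to, then run the command again.

Typical [ERROR] items are too few CPUs, swap still enabled, or the bridge-nf-call-iptables parameter, which must be set to 1.

# How to set bridge-nf-call-iptables to 1 (load br_netfilter first so that the /proc entry exists)

modprobe br_netfilter

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

On success, write down the kubeadm join command with its token at the end of the output. You need it later to join the nodes, so copy it out.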

9. For more kubeadm configuration parameters, print the defaults (optional)

kubeadm config print init-defaults

10. A successful start prints a lot of output; what matters is the end

Copy the join command printed at the end. It looks like this (the address, token, and hash in your output will differ):

kubeadm join 172.24.207.115:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:cf7ed04f59030a449f2f50c1db6640cbce33770e70aee7af2b7824167a97bad4

kubeadm join 192.168.0.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:89a4e7ac6f2a4722dfe17b70fa6ab06da4a6672ea7017857ef79d50826942b38
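If you save the join command to a file, the individual values can be pulled back out when needed. The sketch below is plain text processing; `join_arg` is a hypothetical helper name. If the command is lost, `kubeadm token create --print-join-command` on the master prints a fresh one.

```shell
#!/usr/bin/env bash
# join_arg FLAG  (hypothetical helper)
# Reads a saved "kubeadm join ..." command on stdin and prints the value that
# follows FLAG. Backslash line continuations are flattened first.
join_arg() {
  local flag=$1
  tr -s '\\\n' '  ' |
    awk -v f="$flag" '{ for (i = 1; i < NF; i++) if ($i == f) { print $(i + 1); exit } }'
}

# Usage, assuming the command was saved to join.txt (file name is an example):
#   join_arg --token < join.txt
#   join_arg --discovery-token-ca-cert-hash < join.txt
```

Bootstrap tokens expire after 24 hours by default, so a node added later will need a newly created token rather than the saved one.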

11. Follow the instructions printed by kubeadm init and run the following commands

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

12. Enable and start the system service

# Enable kubelet at boot

$ sudo systemctl enable kubelet

# Start the k8s service

$ sudo systemctl start kubelet

13. Verify. The master shows NotReady at this point, which is expected: initialization succeeded, but no pod network has been deployed yet

kubectl get nodes

NAME     STATUS     ROLES    AGE   VERSION
master   NotReady   master   12m   v1.13.1

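14. Check the current state of the k8s cluster

$ kubectl get cs

NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health": "true"}

So far there is only a master, no nodes, and the master is NotReady, so the nodes still have to be joined to the cluster the master manages. Before joining them, configure the cluster's internal network. This guide uses flannel.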

15. Deploy flannel, the cluster's internal network

cd /home/glory/working

wget https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

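# Note: if the download fails, create kube-flannel.yml yourself with the content below

## YAML is strict about formatting, so to be safe, save the content as a .txt file first and then rename it to .yml

## I suggest skipping the download and copying my version directly, then renaming it; otherwise the images may fail to download from the default registry

vi kube-flannel.txt

mv kube-flannel.txt kube-flannel.yml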

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.11.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

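Note: in mainland China, replace quay.io in the image fields with quay-mirror.qiniu.com, the Qiniu mirror. If that mirror is down,

see this post listing mirror sources: https://www.cnblogs.com/ants/archive/2020/04/09/12663724.html

If you forgot to change the file, applied it as in step 16, and the image could not be pulled, pull it manually. If you copied my file above, go straight to step 16.

docker pull quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64

# If you did not change the file above, retag the image back to quay.io

docker tag quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64

###### Update 2021/3/15: the Qiniu flannel mirror is down as well, so the flannel pods will stay stuck pulling the image when you run get pods later. I suggest finding a flannel image on Alibaba Cloud yourself ####

Here is one. The file above does not need to change; pull the image from a different registry by hand and retag it to the name the file expects.

# If you look for an image on Alibaba Cloud yourself, remember the version tag: the copied address has no tag by default, and pulling without it fails with errors such as "page not found"

docker pull registry.cn-hangzhou.aliyuncs.com/mygcrio/flannel:v0.11.0-amd64

docker tag registry.cn-hangzhou.aliyuncs.com/mygcrio/flannel:v0.11.0-amd64 quay-mirror.qiniu.com/coreos/flannel:v0.11.0-amd64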
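An alternative to retagging is to point the manifest itself at the registry that works. This is a different route from the pull-and-tag commands above, sketched here with sed; `rewrite_image_repo` is a hypothetical helper name, and it assumes the new repository path contains no `|`, `&`, or backslash characters.

```shell
#!/usr/bin/env bash
# rewrite_image_repo FILE OLD_REPO NEW_REPO  (hypothetical helper)
# Replaces "image: OLD_REPO:<tag>" with "image: NEW_REPO:<tag>" on every line
# of FILE, keeping the tag. A backup is kept in FILE.bak.
rewrite_image_repo() {
  local file=$1 old=$2 new=$3 old_re
  # Escape regex metacharacters so the dots in the registry name match literally.
  old_re=$(printf '%s' "$old" | sed 's/[][\.*^$]/\\&/g')
  sed -i.bak "s|image: ${old_re}:|image: ${new}:|g" "$file"
}

# Usage (in /home/glory/working on the master, before applying the file):
#   rewrite_image_repo kube-flannel.yml \
#     quay-mirror.qiniu.com/coreos/flannel \
#     registry.cn-hangzhou.aliyuncs.com/mygcrio/flannel
```

With the manifest rewritten, each host pulls the image directly and no retagging is needed.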

16. Start the flannel service

kubectl apply -f kube-flannel.yml

This takes a while because the images have to be downloaded.

17.master准备成功

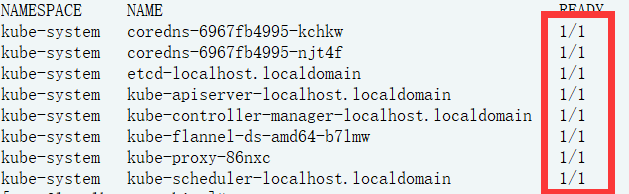

notready变为ready就好了

查看镜像下载情况

kubectl get pods --all-namespaces -o wide

root@master01:/home/itcast/working# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready master 6m58s v1.13.1

全部ready之后进行后续步骤

18. Configure the node hosts of the k8s cluster

19. Start the k8s background service

# Enable kubelet at boot

$ sudo systemctl enable kubelet

# Start the k8s service

$ sudo systemctl start kubelet

20. Copy /etc/kubernetes/admin.conf from the master to node1 and node2

# Copy admin.conf to node1

sudo scp /etc/kubernetes/admin.conf root@192.168.0.101:/home/glory/

# Copy admin.conf to node2

sudo scp /etc/kubernetes/admin.conf root@192.168.0.102:/home/glory/

21. Run the following commands on each node

mkdir -p $HOME/.kube

sudo cp -i /home/glory/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

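22. Join each node to the cluster

On each node, run the kubeadm join command you copied from the kubeadm init output in step 10. The line below is only a sample from a different cluster; the address, token, and hash must be the ones printed by your own master.

kubeadm join 172.17.93.196:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:4c38f97d88b8ab34fae182b3387e260b12807511094a13d9884337169a750eba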

23. Apply the flannel network on both node hosts

Copy kube-flannel.yml from the master to the two nodes.

# Copy kube-flannel.yml to node1

sudo scp /home/glory/working/kube-flannel.yml root@192.168.0.101:/home/glory/

# Copy kube-flannel.yml to node2

sudo scp /home/glory/working/kube-flannel.yml root@192.168.0.102:/home/glory/

Start the flannel network on each node

root@node1:~$ kubectl apply -f /home/glory/kube-flannel.yml

root@node2:~$ kubectl apply -f /home/glory/kube-flannel.yml

24. Check whether the nodes have joined the k8s cluster (it takes a while before they become Ready)

kubectl get pods --all-namespaces -o wide

glory@node2:~$ kubectl get nodes

NAME     STATUS   ROLES    AGE     VERSION
master   Ready    master   35m     v1.13.1
node1    Ready    <none>   2m23s   v1.13.1
node2    Ready    <none>   40s     v1.13.1
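Rather than rerunning the command and reading the table by eye, the status column can be filtered. The sketch below works on the text output of `kubectl get nodes`; `not_ready_nodes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper)
# Reads `kubectl get nodes` output on stdin and prints the name of every node
# whose STATUS column is not exactly "Ready". The header line is skipped.
# Combined states such as "Ready,SchedulingDisabled" are reported as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage: poll every 10 seconds until the list is empty.
#   while [ -n "$(kubectl get nodes | not_ready_nodes)" ]; do
#     sleep 10
#   done
```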

References:

https://blog.51cto.com/14320361/2463790?source=drh
