My environment:

Three virtual machines:

sparkproject1 192.168.124.110

sparkproject2 192.168.124.111

sparkproject3 192.168.124.112

Configure the hosts file on both Linux (vi /etc/hosts) and Windows (located in C:\Windows\System32\drivers\etc):

# Copyright (c) 1993-2009 Microsoft Corp.

#

# This is a sample HOSTS file used by Microsoft TCP/IP for Windows.

#

# This file contains the mappings of IP addresses to host names. Each

# entry should be kept on an individual line. The IP address should

# be placed in the first column followed by the corresponding host name.

# The IP address and the host name should be separated by at least one

# space.

#

# Additionally, comments (such as these) may be inserted on individual

# lines or following the machine name denoted by a '#' symbol.

#

# For example:

#

# 102.54.94.97 rhino.acme.com # source server

# 38.25.63.10 x.acme.com # x client host

# localhost name resolution is handled within DNS itself.

# 127.0.0.1 localhost

# ::1 localhost

192.168.124.110 sparkproject1

192.168.124.111 sparkproject2

192.168.124.112 sparkproject3
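The three mappings above can also be added from a script rather than by hand. The following is a minimal sketch, not part of the original setup: `add_host_entry` is a hypothetical helper name, and it only appends a line when the host name is not already mapped in the target file.

```shell
#!/bin/sh
# add_host_entry IP NAME [FILE]
# Append "IP NAME" to FILE (default: /etc/hosts) unless NAME is already
# mapped on a non-comment line. The helper name is illustrative only.
add_host_entry() {
    ip="$1"
    name="$2"
    file="${3:-/etc/hosts}"
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break         # stop at a trailing comment
                if ($i == n) found = 1
            }
        }
        END { exit !found }
    ' "$file"; then
        return 0                             # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Running `add_host_entry 192.168.124.110 sparkproject1` as root on each Linux machine (and likewise for the other two hosts) would add the entry once; passing a scratch file as the third argument lets you try it without touching /etc/hosts.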

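This assumes I have already set up a cluster on these three machines (virtual machines), including but not limited to Hadoop, Hive, Kafka, Flume, ES, ZooKeeper, and so on. This post only records how I start and stop that existing cluster.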

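Starting/stopping HDFS:

Start HDFS

./sbin/start-dfs.sh

Stop HDFS

./sbin/stop-dfs.sh

If you have configured the environment variables, you can run these scripts directly without first entering the Hadoop installation directory.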
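For reference, the environment variables in question usually look like the lines below, placed in /etc/profile or ~/.bashrc. This is only a sketch: the install path /usr/local/hadoop is an assumption, since the post does not state where Hadoop is installed, so substitute your own directory.

```shell
# Assumed install location; replace with your actual Hadoop directory.
export HADOOP_HOME=/usr/local/hadoop
# Put both the client commands (bin) and the start/stop scripts (sbin) on PATH.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

After reloading the profile (for example with `source /etc/profile`), `start-dfs.sh` and `stop-dfs.sh` can be invoked from any directory.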

Result of running the start command:

sparkproject1

[root@sparkproject1 ~]# jps

26103 NameNode

26279 SecondaryNameNode

26384 Jps

[root@sparkproject1 ~]#

sparkproject2

[root@sparkproject2 ~]# jps

4829 Jps

4762 DataNode

[root@sparkproject2 ~]#

sparkproject3

[root@sparkproject3 ~]# jps

1308 Jps

1241 DataNode

[root@sparkproject3 ~]#

Result of running stop-dfs.sh:

sparkproject1

[root@sparkproject1 ~]# stop-dfs.sh

18/10/23 00:26:28 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Stopping namenodes on [sparkproject1]

sparkproject1: stopping namenode

sparkproject3: stopping datanode

sparkproject2: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

18/10/23 00:26:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@sparkproject1 ~]# jps

26729 Jps

[root@sparkproject1 ~]#

The DataNodes on sparkproject2 and sparkproject3 are all stopped as well.
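Logging in to each machine to run jps gets tedious, so the check can be scripted. The sketch below is an illustration rather than something from the original setup: `has_daemon` and `check_cluster` are hypothetical helper names, and `check_cluster` assumes passwordless ssh as root to all three hosts and that jps is on the remote PATH.

```shell
#!/bin/sh
# Succeed if the jps output on stdin lists a JVM whose name is exactly "$1".
has_daemon() {
    awk -v d="$1" '$2 == d { found = 1 } END { exit !found }'
}

# Print which HDFS daemons are running on each node.
# Assumption: passwordless ssh as root, and jps available on the remote PATH.
check_cluster() {
    for host in sparkproject1 sparkproject2 sparkproject3; do
        out=$(ssh "root@$host" jps 2>/dev/null)
        for daemon in NameNode SecondaryNameNode DataNode; do
            if printf '%s\n' "$out" | has_daemon "$daemon"; then
                echo "$host: $daemon running"
            fi
        done
    done
}
```

Calling `check_cluster` after start-dfs.sh should list the NameNode and DataNodes on their configured hosts; after stop-dfs.sh it should print nothing.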

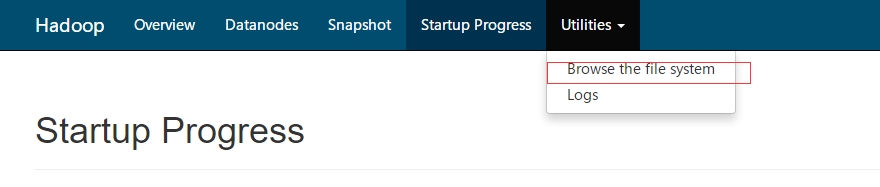

Web UI login:

http://sparkproject1:50070

However, sparkproject1 is my master node. If I now run start-all.sh on sparkproject2 instead, the result is as follows:

sparkproject1:

[root@sparkproject1 local]# jps

27022 Jps

26954 NameNode

You have new mail in /var/spool/mail/root

[root@sparkproject1 local]#

sparkproject2:

[root@sparkproject2 ~]# jps

5022 DataNode

5147 SecondaryNameNode

5255 Jps

[root@sparkproject2 ~]#

sparkproject3:

[root@sparkproject3 ~]# jps

1462 Jps

1395 DataNode

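[root@sparkproject3 ~]#

The difference from the first case is that the SecondaryNameNode started on sparkproject2. (The stop-dfs.sh log above lists the secondary namenode address as 0.0.0.0, which suggests it is launched on whichever machine runs the script.) The NameNode and DataNodes are fixed by configuration, so they do not change.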

And it can only
