The main tuning methods (tuners) for metaheuristic algorithms include CRS-Tuning, F-Race, and REVAC. The pseudocode for each method is given below.

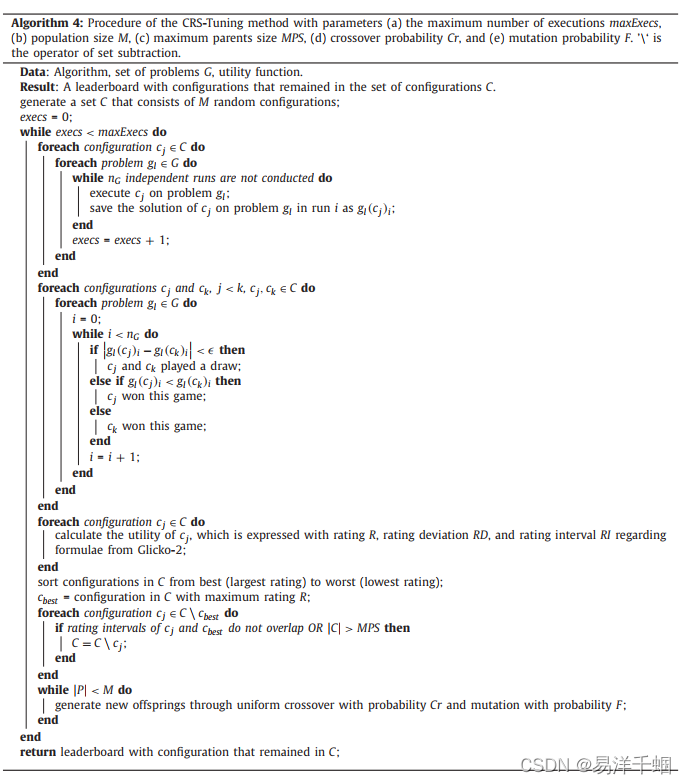

1. CRS-Tuning

Tuning using the Chess Rating System (CRS-Tuning) is a novel tuning method based on our recently presented method for comparing and ranking evolutionary algorithms, the Chess Rating System for Evolutionary Algorithms (CRS4EAs) [47]. An experiment using the CRS4EAs method is conducted as a tournament in which m algorithms participate as chess players, solving NG optimisation problems over nG independent runs. All m ∗ NG ∗ nG solutions are then compared pairwise. One comparison counts as one game, and its result is saved as a win, a loss, or a draw (the score Sj against the jth player equals 1, 0, or 0.5, respectively). The winner of a game is the algorithm whose solution was closer to the optimum of the considered problem. However, if the difference between the two solutions is smaller than a predefined threshold, the solutions are considered equal and the players declare a draw. In total, NG ∗ nG ∗ m ∗ (m − 1)/2 games are played (comparisons performed) throughout a tournament, and the results of all these games together provide information about the performance of each player.

Each player on the leaderboard is described by a rating R, a rating deviation RD, and a rating interval RI, which are calculated using the formulae from the Glicko-2 chess rating system [20]. The rating shows the absolute power of a player, and the rating deviation indicates how reliable the player's rating is: if the rating deviation is small, the rating is considered reliable; otherwise it is unreliable. A new player starts with a rating of 1500 and a rating deviation of 350. These two values are updated after each tournament. From the rating R and the rating deviation RD, a rating interval RI is formed according to the statistical 68-95-99.7 rule. With a probability of 95%, the player's rating R belongs to the interval [R − 2RD, R + 2RD]. Whenever this choice of interval is too liberal, a more conservative 99.7% RI can be used, in which case there is a 99.7% probability that the player's rating belongs to the interval [R − 3RD, R + 3RD]. Choosing the interval is equivalent to selecting the significance level α in a statistical test of significance: the standard values for α are 0.05 and 0.01, and it is up to the researcher to decide whether a more conservative (α = 0.01) or a more liberal (α = 0.05) comparison is wanted. If the rating intervals of two algorithms do not overlap, the performances of these two algorithms are considered significantly different.

With each tournament played, the rating deviation RD gets smaller, but its value should clearly stay greater than 0. An algorithm with RD = 0 would be significantly better than all algorithms with lower ratings and significantly worse than all algorithms with higher ratings, and a comparison in which every algorithm is significantly different from all the others would be unreasonable and meaningless. Similarly, a very large RD value would imply that an algorithm performs the same as other algorithms with much better or much worse ratings. Hence, when applying the Glicko-2 rating system, the rating deviation RD has lower and upper limits. These limits are usually determined according to the situation, but we suggest that RDmax should not be greater than 350 and RDmin not less than 30 [47]. In our previous experiment, RDmin = 50 proved to be an appropriate value.
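The tournament bookkeeping and the interval comparison described above can be illustrated with a small Python sketch. It is not the authors' implementation: the function names, the `errors` data layout, the `eps` parameter name, and the choice to pair run r of one player with run r of the other on the same problem (which reproduces the stated game count NG ∗ nG ∗ m ∗ (m − 1)/2) are all assumptions made for illustration. The Glicko-2 rating update itself is not shown.

```python
from itertools import combinations


def game_score(err_a, err_b, eps):
    """Score of player A against player B in one game: 1 win, 0.5 draw, 0 loss.

    err_a and err_b are the distances of each solution from the known optimum.
    eps is the predefined draw threshold (hypothetical parameter name).
    """
    if abs(err_a - err_b) < eps:
        return 0.5
    return 1.0 if err_a < err_b else 0.0


def play_tournament(errors, eps):
    """Return a list of (player_a, player_b, score_of_a) game records.

    errors[player][problem][run] holds the error of one solution.
    Assumption: run r of A is compared with run r of B on the same problem.
    """
    games = []
    for a, b in combinations(sorted(errors), 2):
        for p, runs_a in enumerate(errors[a]):
            for r, err_a in enumerate(runs_a):
                games.append((a, b, game_score(err_a, errors[b][p][r], eps)))
    return games


def rating_interval(rating, rd, k=2):
    """k=2 gives the 95% interval, k=3 the more conservative 99.7% interval."""
    return (rating - k * rd, rating + k * rd)


def significantly_different(r1, rd1, r2, rd2, k=2):
    """True when the two rating intervals do not overlap."""
    lo1, hi1 = rating_interval(r1, rd1, k)
    lo2, hi2 = rating_interval(r2, rd2, k)
    return hi1 < lo2 or hi2 < lo1


if __name__ == "__main__":
    # Made-up errors for m = 3 players, NG = 2 problems, nG = 2 runs.
    errors = {
        "A": [[0.10, 0.20], [0.00, 0.50]],
        "B": [[0.10, 0.30], [0.20, 0.50]],
        "C": [[0.40, 0.25], [0.10, 0.90]],
    }
    games = play_tournament(errors, eps=1e-3)
    print(len(games))  # NG * nG * m * (m - 1) / 2 games
    print(significantly_different(1500, 50, 1800, 50))
```

With k = 2 the players rated 1500 and 1800 (both with RD = 50) have intervals [1400, 1600] and [1700, 1900], so they count as significantly different; with k = 3 the intervals touch at 1650 and this sketch treats them as overlapping.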

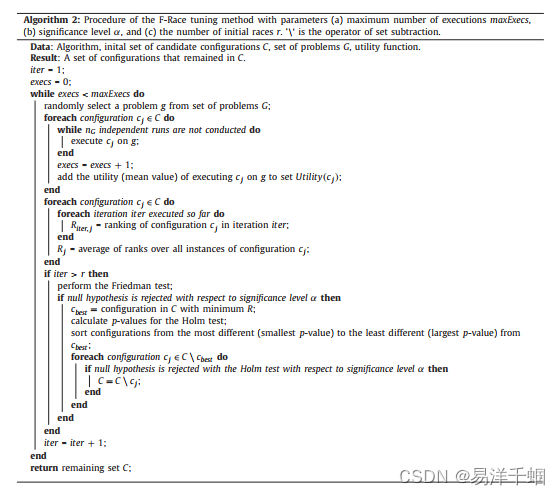

2. F-Race

Birattari et al. presented F-Race as a racing method that empirically evaluates a set of configurations and discards the bad ones as soon as statistically sufficient evidence is gathered against them. This makes the whole process more effective and less time-consuming than the Brute Force method. In the F-Race method, statistically sufficient evidence is provided by the Friedman test. Three parameter values have to be set before the F-Race begins: the maximum number of executions (maxExecs), the significance level (α), and the number of initial races (r). These values are chosen according to the tuning problem that needs to be solved. The whole procedure of the F-Race method is shown in Algorithm 2.
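The racing idea can be sketched in plain Python as follows. This is a simplified illustration, not the procedure from Algorithm 2: the names `race`, `evaluate`, and `CHI2_CRIT_005` are hypothetical, the critical values are hard-coded for α = 0.05 and at most five configurations, the Friedman statistic omits the tie correction, and when the test rejects the null hypothesis the sketch simply drops the single worst-ranked configuration instead of running the pairwise post-tests used in the original F-Race.

```python
# Chi-square critical values for alpha = 0.05, indexed by degrees of freedom.
CHI2_CRIT_005 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}


def rank_row(costs):
    """Ranks for one instance (1 = lowest cost); tied costs share the mean rank."""
    order = sorted(range(len(costs)), key=lambda i: costs[i])
    ranks = [0.0] * len(costs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and costs[order[j + 1]] == costs[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks


def friedman_statistic(blocks):
    """Friedman chi-square statistic for blocks[instance][config] (no tie correction)."""
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for c, rank in enumerate(rank_row(row)):
            rank_sums[c] += rank
    stat = 12.0 * sum(s * s for s in rank_sums) / (n * k * (k + 1)) - 3.0 * n * (k + 1)
    return stat, rank_sums


def race(configs, evaluate, instances, r, max_execs, crit=CHI2_CRIT_005):
    """Race configurations; evaluate(config, instance) returns a cost to minimise."""
    alive = list(configs)
    results = {c: [] for c in alive}
    execs = 0
    for step, inst in enumerate(instances, start=1):
        if len(alive) == 1 or execs + len(alive) > max_execs:
            break
        for c in alive:
            results[c].append(evaluate(c, inst))
            execs += 1
        if step < r:
            continue  # no elimination during the first r races
        blocks = [[results[c][i] for c in alive] for i in range(step)]
        stat, rank_sums = friedman_statistic(blocks)
        if stat > crit[len(alive) - 1]:
            # Simplification: discard only the configuration with the worst rank sum.
            worst = alive[rank_sums.index(max(rank_sums))]
            alive.remove(worst)
    return alive, execs


if __name__ == "__main__":
    # Toy problem: the cost is the distance of the parameter value from 0.1.
    survivors, used = race(
        configs=[0.1, 0.5, 0.9],
        evaluate=lambda c, inst: abs(c - 0.1),
        instances=range(10),
        r=3,
        max_execs=100,
    )
    print(survivors, used)
```

Because surviving configurations are only evaluated on new instances, the budget in `max_execs` is spent mostly on the promising candidates, which is the source of the saving over brute-force evaluation.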

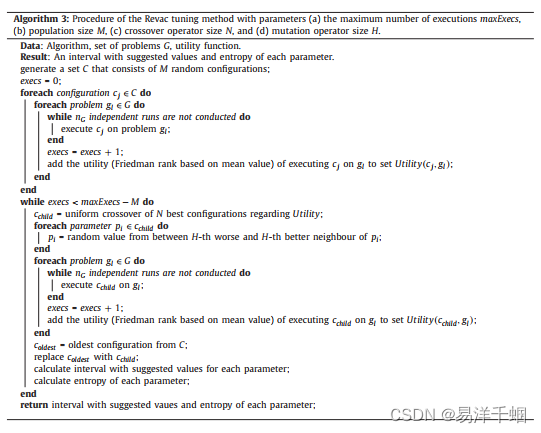

3. REVAC

For details, see the paper "Parameter tuning with Chess Rating System (CRS-Tuning) for meta-heuristic algorithms".

References for further study are listed below.

References

- [1] T. Bartz-Beielstein, C.W. Lasarczyk, M. Preuß, Sequential parameter optimization, IEEE Congress Evol. Comput. 1 (2005) 773–780.
- [2] M. Birattari, T. Stützle, L. Paquete, K. Varrentrapp, A racing algorithm for configuring metaheuristics, Genetic Evol. Comput. Conf. 2 (2002) 11–18.
- [3] R.A. Bradley, M.E. Terry, Rank analysis of incomplete block designs: I. the method of paired comparisons, in: Biometrika, 1952, pp. 324–345.
- [4] J. Brest, S. Greiner, B. Bošković, M. Mernik, V. Žumer, Self-adapting control parameters in differential evolution: A comparative study on numerical benchmark problems, IEEE Trans. Evol. Comput. 10 (6) (2006) 646–657.
- [5] J. Brest, M. Maučec, Self-adaptive differential evolution algorithm using population size reduction and three strategies, Soft Comput. 15 (11) (2011) 2157–2174.
- [6] M. Črepinšek, S.-H. Liu, M. Mernik, Exploration and exploitation in evolutionary algorithms: A survey, ACM Comput. Surveys (CSUR) 45 (3) (2013) 35:1–33.
- [7] M. Črepinšek, S.-H. Liu, M. Mernik, Replication and comparison of computational experiments in applied evolutionary computing: Common pitfalls and guidelines to avoid them, Appl. Soft Comput. 19 (2014) 161–170.
- [8] C. Dai, Y. Wang, A new decomposition based evolutionary algorithm with uniform designs for many-objective optimization, Appl. Soft Comput. 30 (2015) 238–248.
- [9] S. Das, P.N. Suganthan, Problem definitions and evaluation criteria for CEC 2011 competition on testing evolutionary algorithms on real world optimization problems, Technical report, Jadavpur University, Kolkata, and Nanyang Technological University, Singapore, 2010.
- [10] S. Das, P.N. Suganthan, Differential evolution: A survey of the state-of-the-art, IEEE Trans. Evol. Comput. 15 (1) (2011) 4–31.
- [11] J. Derrac, S. García, S. Hui, P.N. Suganthan, F. Herrera, Analyzing convergence performance of evolutionary algorithms: A statistical approach, Inf. Sci. 289 (2014) 41–58.
- [12] EARS, Evolutionary algorithms rating system (GitHub), https://github.com/matejxxx/EARS.
- [13] A.E. Eiben, R. Hinterding, Z. Michalewicz, Parameter control in evolutionary algorithms, IEEE Trans. Evol. Comput. 3 (2) (1999) 124–141.
- [14] A.E. Eiben, M. Jelasity, A critical note on experimental research methodology in evolutionary computation, Proc. World Congress Comput. Intell. 1 (2002) 582–587.
- [15] A.E. Eiben, S.K. Smit, Parameter tuning for configuring and analyzing evolutionary algorithms, Swarm Evol. Comput. 1 (1) (2011) 19–31.
- [16] M. Friedman, A comparison of alternative tests of significance for the problem of m rankings, Annal. Math. Stat. 11 (1) (1940) 86–92.
- [17] S. García, A. Fernández, J. Luengo, F. Herrera, A study of statistical techniques and performance measures for genetics-based machine learning: Accuracy and interpretability, Soft Comput. 13 (10) (2009) 959–977.
- [18] S. García, A. Fernández, J. Luengo, F. Herrera, Advanced nonparametric tests for multiple comparisons in the design of experiments in computational intelligence and data mining: experimental analysis of power, Inf. Sci. 180 (10) (2010) 2044–2064.
- [19] J. Gill, The insignificance of null hypothesis significance testing, Political Res. Q. 52 (3) (1999) 647–674.
- [20] M.E. Glickman, Dynamic paired comparison models with stochastic variances, J. Appl. Stat. 28 (6) (2001) 673–689.
- [21] N. Hansen, A. Auger, S. Finck, R. Ros, Real-parameter black-box optimization benchmarking 2010: experimental setup, Technical report RR-6828, Institut National de Recherche en Informatique et en Automatique (INRIA), 2010.
- [22] S. Holm, A simple sequentially rejective multiple test procedure, in: Scandinavian Journal of Statistics, 1979, pp. 65–70.
- [23] J.N. Hooker, Testing heuristics: we have it all wrong, J. Heuristics 1 (1) (1995) 33–42.
- [24] F. Hutter, H.H. Hoos, T. Stützle, Automatic algorithm configuration based on local search, Association Adv. Artif. Intell. 7 (2007) 1152–1157.
- [25] D. Karaboga, B. Basturk, On the performance of artificial bee colony (ABC) algorithm, Appl. Soft Comput. 8 (1) (2008) 687–697.
- [26] G. Karafotias, M. Hoogendoorn, A.E. Eiben, Parameter control in evolutionary algorithms: trends and challenges, IEEE Trans. Evol. Comput. 19 (2) (2015) 167–187.
- [27] Y. Lee, J.J. Filliben, R.J. Micheals, P.J. Phillips, Sensitivity analysis for biometric systems: A methodology based on orthogonal experiment designs, Comput. Vision Image Understanding 117 (5) (2013) 532–550.
- [28] T.R. Levine, R. Weber, C. Hullett, H.S. Park, L.L.M. Lindsey, A critical assessment of null hypothesis significance testing in quantitative communication research, Human Commun. Res. 34 (2) (2008) 171–187.
- [29] T. Liao, D. Molina, T. Stützle, Performance evaluation of automatically tuned continuous optimizers on different benchmark sets, Appl. Soft Comput. 27 (2015) 490–503.
- [30] S.-H. Liu, M. Mernik, B.R. Bryant, To explore or to exploit: An entropy-driven approach for evolutionary algorithms, Int. J. Knowl.-Based Intell. Eng. Syst. 13 (3) (2009) 185–206.
- [31] S.-H. Liu, M. Mernik, D. Hrnčič, M. Črepinšek, A parameter control method of evolutionary algorithms using exploration and exploitation measures with a practical application for fitting Sovova's mass transfer model, Appl. Soft Comput. 13 (9) (2013) 3792–3805.
- [32] M. Mernik, S.-H. Liu, D. Karaboga, M. Črepinšek, On clarifying misconceptions when comparing variants of the artificial bee colony algorithm by offering a new implementation, Inf. Sci. 291 (2015) 115–127.
- [33] E. Montero, M.-C. Riff, Towards a method for automatic algorithm configuration: A design evaluation using tuners, in: Parallel Problem Solving from Nature, 2014, pp. 90–99.
- [34] E. Montero, M.-C. Riff, B. Neveu, A beginner's guide to tuning methods, Appl. Soft Comput. 17 (2014) 39–51.
- [35] V. Nannen, A.E. Eiben, Efficient relevance estimation and value calibration of evolutionary algorithm parameters, in: IEEE Congress on Evolutionary Computation, 2007, pp. 103–110.
- [36] V. Nannen, A.E. Eiben, Relevance estimation and value calibration of evolutionary algorithm parameters, Int. Joint Conf. Artif. Intell. 7 (2007) 975–980.
- [37] V. Nannen, S.K. Smit, A.E. Eiben, Costs and benefits of tuning parameters of evolutionary algorithms, in: Parallel Problem Solving from Nature, 2008, pp. 528–538.
- [38] E. Rashedi, H. Nezamabadi-Pour, S. Saryazdi, GSA: A gravitational search algorithm, Inf. Sci. 179 (13) (2009) 2232–2248.
- [39] M. Shams, E. Rashedi, A. Hakimi, Clustered-gravitational search algorithm and its application in parameter optimization of a low noise amplifier, Appl. Math. Comput. 258 (2015) 436–453.
- [40] S.K. Smit, Parameter Tuning and Scientific Testing in Evolutionary Algorithms, Vrije Universiteit, Amsterdam, 2012.
- [41] S.K. Smit, A.E. Eiben, Comparing parameter tuning methods for evolutionary algorithms, in: IEEE Congress on Evolutionary Computation, 2009, pp. 399–406.
- [42] S.K. Smit, A.E. Eiben, Beating the world champion evolutionary algorithm via Revac tuning, in: IEEE Congress on Evolutionary Computation, 2010, pp. 1–8.
- [43] S.K. Smit, A.E. Eiben, Parameter tuning of evolutionary algorithms: Generalist vs. specialist, in: Applications of Evolutionary Computation, 2010, pp. 542–551.
- [44] S.K. Smit, A.E. Eiben, Z. Szlávik, An MOEA-based method to tune EA parameters on multiple objective functions, in: International Conference on Evolutionary Computation, 2010, pp. 261–268.
- [45] R. Storn, K. Price, Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces, J. Global Optimization 11 (4) (1997) 341–359.
- [46] R.K. Ursem, Diversity-guided evolutionary algorithms, in: Parallel Problem Solving from Nature, 2002, pp. 462–471.
- [47] N. Veček, M. Mernik, M. Črepinšek, A chess rating system for evolutionary algorithms: A new method for the comparison and ranking of evolutionary algorithms, Inf. Sci. 277 (2014) 656–679.
- [48] D.H. Wolpert, W.G. Macready, No free lunch theorems for optimization, IEEE Trans. Evol. Comput. 1 (1) (1997) 67–82.
- [49] B. Yuan, M. Gallagher, Statistical racing techniques for improved empirical evaluation of evolutionary algorithms, in: Parallel Problem Solving from Nature, 2004, pp. 172–181.
- [50] J. Zhang, A.C. Sanderson, JADE: Adaptive differential evolution with optional external archive, IEEE Trans. Evol. Comput. 13 (5) (2009) 945–958.

Source: N. Veček et al., Information Sciences 372 (2016) 446–469.
