sqlserver数据库服务异常停止分析

背景

2019年11月13日早上9:37分钟,我们的运维监控系统告警提示某项目数据库服务1433端口无法访问,我们赶紧登陆服务器查看详情,发现数据库服务以及数据库agent服务都已停止,重新手工启动对应的服务恢复生产。接下来我们就需赶紧分析引起这个问题的原因以及如何去修复;

服务器环境情况如下:

Microsoft SQL Server 2017 (RTM-CU14) (KB4484710) - 14.0.3076.1 (X64) Mar 12 2019 19:29:19 Copyright (C) 2017 Microsoft Corporation Enterprise Edition: Core-based Licensing (64-bit) on Windows Server 2012 R2 Datacenter 6.3 (Build 9600: ) (Hypervisor)

硬件配置:8CPU , 32G memory,数据盘SSD云盘550GiB (18300 IOPS)

分析过程

第一步 查询系统日志

a.有个eventid 1000的错误

-

-

1000

2

100

0x80000000000000

8587

Application

****************

-

sqlservr.exe

2017.140.3076.1

5c88918c

ntdll.dll

6.3.9600.19304

5c7f684f

c0000374

00000000000f1cd0

1b8c

01d564b93362c212

E:\Program Files\Microsoft SQL Server\MSSQL14.MSSQLSERVER\MSSQL\Binn\sqlservr.exe

C:\Windows\SYSTEM32\ntdll.dll

2db02342-05b6-11ea-80ba-a469ceaa5caa

b.有个eventid 19019的错误;

-

-

19019

2

2

0x80000000000000

8589

Application

*******************

-

MSSQLSERVER 服务意外终止.

B9370000190000001000000069005A006100630075006900650062006400780031007300370069005A000000070000006D00610073007400650072000000

我是觉的这些信息不是非常明了,只是告知MSSQLSERVER服务确实在问题时间点意外终止;

c.夹在两个错误日志中间,注意到一个eventid 1001

-

-

1001

4

0

0x80000000000000

8588

Application

**********************

-

0

APPCRASH

不可用

0

sqlservr.exe

2017.140.3076.1

5c88918c

StackHash_f18b

6.3.9600.19304

5c7f684f

c0000374

PCH_99_FROM_ntdll+0x0000000000090D0A

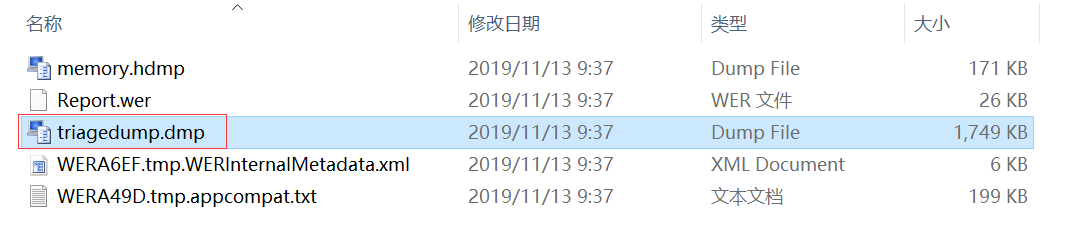

C:\Users\MSSQLSERVER\AppData\Local\Temp\WERA49D.tmp.appcompat.txt C:\Users\MSSQLSERVER\AppData\Local\Temp\WERA6EF.tmp.WERInternalMetadata.xml C:\ProgramData\Microsoft\Windows\WER\ReportQueue\AppCrash_sqlservr.exe_4b5e12dc9b4c86bd34896c996afe289e6149ec98_cac3d1cb_cab_4d0da6ee\triagedump.dmp WERGenerationLog.txt

C:\ProgramData\Microsoft\Windows\WER\ReportQueue\AppCrash_sqlservr.exe_4b5e12dc9b4c86bd34896c996afe289e6149ec98_cac3d1cb_cab_4d0da6ee

0

2db02342-05b6-11ea-80ba-a469ceaa5caa

4

发现系统已打出了dump文件了,直接把上述地址的文件,拷到本地来分析。

通过windbg查看如下:

# Child-SP RetAddr Call Site00000000e1`14c192b8 00007ff8`6012b61e ntdll!NtWaitForMultipleObjects+0xa01000000e1`14c192c0 00007ff8`6012b17c ntdll!RtlReportExceptionEx+0x45202000000e1`14c19890 00007ff8`60151d26 ntdll!RtlReportException+0xbc03000000e1`14c19920 00007ff8`600e1e26 ntdll!RtlReportCriticalFailure$filt$0+0x3304000000e1`14c19950 00007ff8`600f030e ntdll!_C_specific_handler+0x9605000000e1`14c199c0 00007ff8`600f349d ntdll!_GSHandlerCheck_SEH+0x7606000000e1`14c199f0 00007ff8`600b48d7 ntdll!RtlpExecuteHandlerForException+0xd07000000e1`14c19a20 00007ff8`600b3afd ntdll!RtlDispatchException+0x19708000000e1`14c1a0f0 00007ff8`60151cd0 ntdll!RtlRaiseException+0x18d09000000e1`14c1a8b0 00007ff8`60154f12 ntdll!RtlReportCriticalFailure+0x8c0a000000e1`14c1a9c0 00007ff8`60155b10 ntdll!RtlpHeapHandleError+0x120b000000e1`14c1a9f0 00007ff8`6010a5ff ntdll!RtlpLogHeapFailure+0xa40c000000e1`14c1aa20 00007ff8`40d269d8 ntdll!RtlFreeHeap+0x74f2f0d000000e1`14c1aac0 00007ff8`40d27692 msvcr120!free+0x1c0e000000e1`14c1aaf0 00007ff8`31aa748e msvcr120!realloc+0x2e0f000000e1`14c1ab20 00000000`00000000 psqlodbc35w+0x5748e

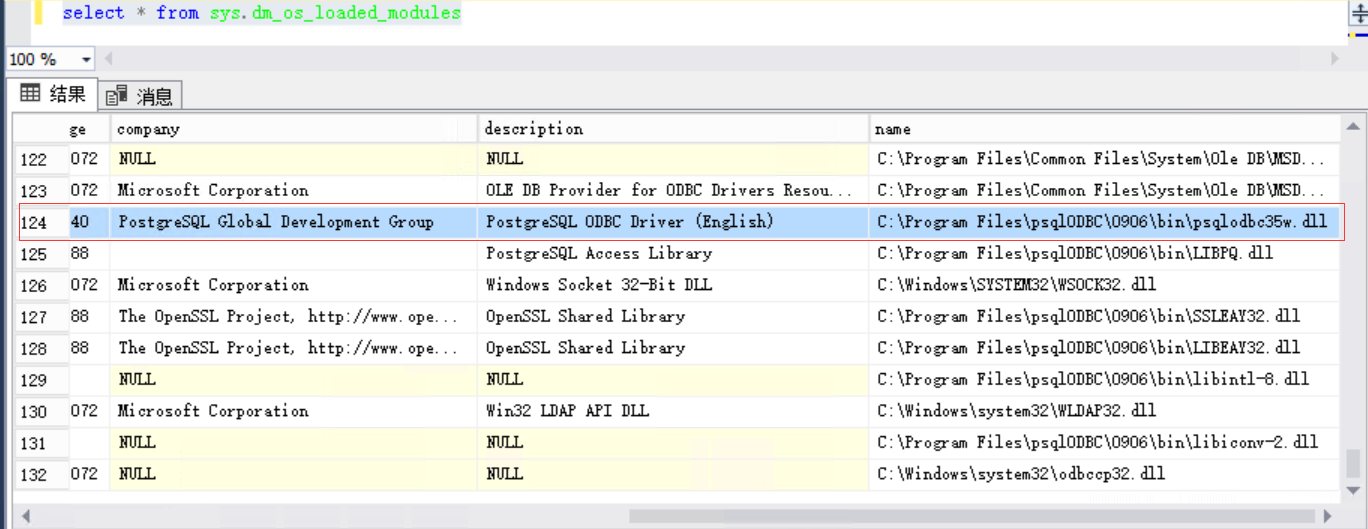

从分析结果来看像是第三方功能引起,接下来就要去数据库去验证猜测

第二步 数据库验证猜测

select * from sys.dm_os_loaded_modules

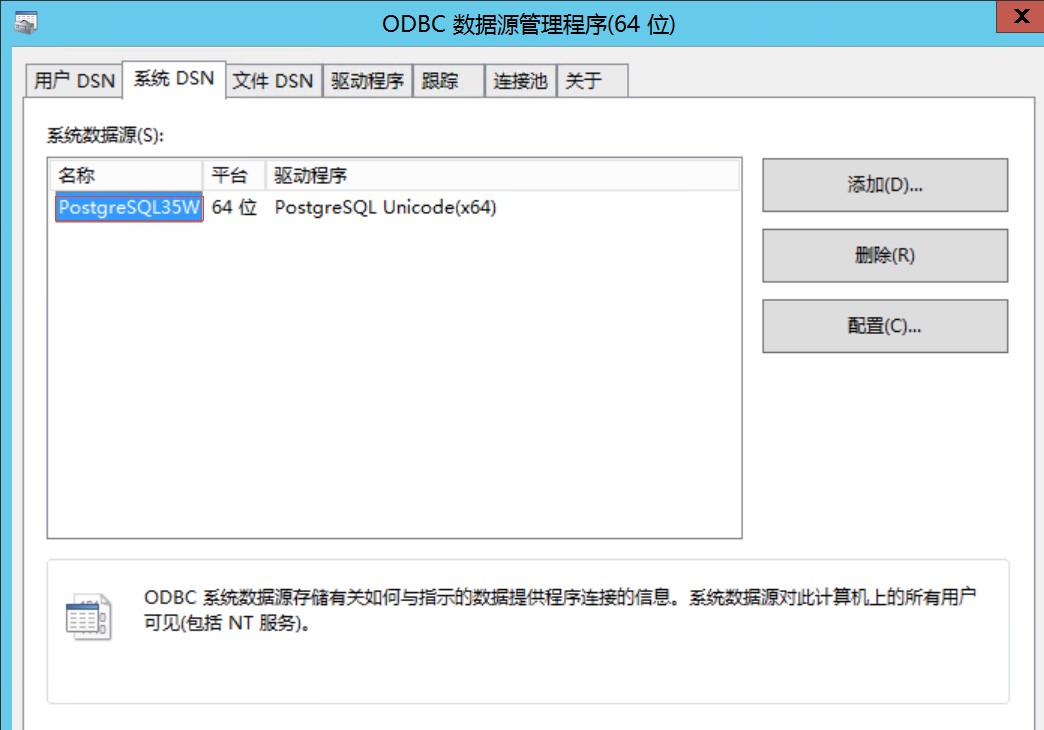

ODBC中设置如下:

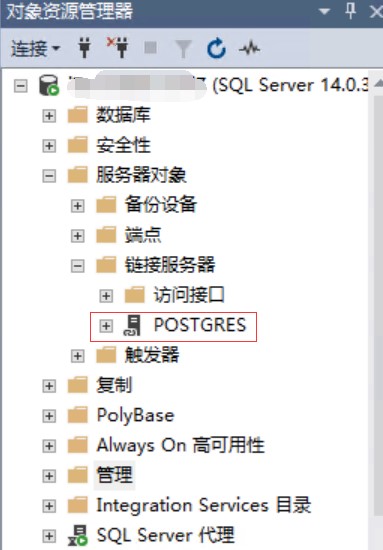

数据库中有个dblink如下:

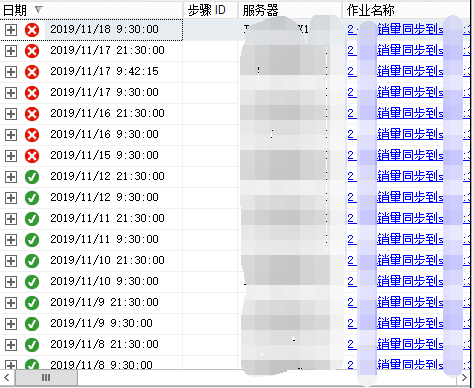

跟对应项目负责人员了解到,最近他们有个临时需求使用job定时在9:30通过dblink与postgres做数据同步,基本时间点也能对上了,那问题基本已明确是第三方的dblink调用造成sql server 异常停止;

那么接下的问题就是分析9:30调用的job的代码,就是运行一个存储过程,存储过程的详细代码如下:

--同步并清洗**销量数据

--1、分别将通过**link获取到的**和**销量数据同步到sfa_t_pflh_sales和sfa_t_drf_sales中

--2、将同步过来的销量数据进行清洗,并按客户需求保存数据到sfa_t_KaSales

CREATE PROCEDURE [dbo].[sfa_p_KaSalesLYNC]

AS

--同步****销量数据

INSERT INTO sfa_t_pflh_sales

SELECT *

FROM OPENQUERY

(POSTGRES,

'select * from "tn_bflh_sales" where "tn_tenant_id" = ''****''and tn_product_code<>''合计''AND tn_dealstatus=0 '

);

--更新*****销量销量数据同步状态tn_dealstatus=1代表已同步

UPDATE OPENQUERY

(POSTGRES, 'select * from "tn_bflh_sales"')

SET tn_dealstatus = 1

WHERE tn_tenant_id = '****'

AND tn_dealstatus = 0;

--同步***销量数据

INSERT INTO sfa_t_drf_sales

SELECT *

FROM OPENQUERY

(POSTGRES, 'select * from "tn_drf_salses" where "tn_tenant_id" = ''****'' AND tn_dealstatus=0 ');

--更新****销量销量数据同步状态tn_dealstatus=1代表已同步

UPDATE OPENQUERY

(POSTGRES, 'select * from "tn_drf_salses"')

SET tn_dealstatus = 1

WHERE tn_tenant_id = '****'

AND tn_dealstatus = 0;

--同步***link作业计划日志

TRUNCATE TABLE sfa_ka_tasklog;

INSERT INTO sfa_ka_tasklog

SELECT *

FROM OPENQUERY

(POSTGRES,

'SELECT t.tn_task_id,t.tn_ka_id,tn_ka_code,tn_dealers_account,t.tn_task_date,i.tn_status,i.tn_task_steps,i.tn_reak FROM tn_ka_task t LEFT JOIN tn_ka_task_info i on t.tn_task_id=i.tn_task_id

where t.tn_tenant_id=''*****'''

)

ORDER BY tn_task_date,tn_task_steps

DELETE FROM sfa_t_KaSales WHERE DATEDIFF(DAY, tn_date, GETDATE()) = 1--清洗数据前,先删除之前清洗好的前一天销量

INSERT INTO sfa_t_KaSales

--清洗******前一天销量数据

SELECT tn_tenant_id,'****'kaname,

tn_dealer_number,

case WHEN tn_supplier_code = '******' THEN

'***'

WHEN tn_supplier_code = '*****' THEN

'***'

END quyu,

tn_supplier_code,

tn_supplier_name,

tn_end_date tn_date,

tn_product_code,

tn_product_desc producname,

tn_store_code,

tn_store_name,

MAX(tn_sale_quantity) AS tn_day_sales,

CAST(MAX(tn_sale_amount) AS NVARCHAR(20)) AS tn_sale_amount

FROM sfa_t_pflh_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 0

--AND tn_dealstatus = 0

GROUP BY tn_tenant_id,

tn_dealer_number,

tn_supplier_code,

tn_supplier_name,

tn_end_date,

tn_product_code,

tn_product_desc,

tn_store_code,

tn_store_name

UNION

--清洗****前一天销量数据

SELECT tn_tenant_id,'****'kaname,

tn_dealer_number,

CASE

WHEN tn_dealer_number = '*****' THEN

'***'

WHEN tn_dealer_number = '****' THEN

'***'

WHEN tn_dealer_number = '****' THEN

'***'

END quyu,

CASE

WHEN tn_dealer_number = '****' THEN

'****'

WHEN tn_dealer_number = '*****' THEN

'****'

WHEN tn_dealer_number = '****' THEN

'***'

END tn_supplier_code,

'******' tn_supplier_name,

DATEADD(DAY, -1, tn_date) tn_date,

tn_product_code,

p.producname,

'' tn_store_code,

tn_store_name,

MAX(tn_day_sales) tn_day_sales,

'' tn_sale_amount

FROM dbo.sfa_t_drf_sales s

LEFT JOIN dbo.sfa_t_KaProduct p

ON s.tn_product_code = p.productcode

AND p.KaName = 'drf'

WHERE DATEDIFF(DAY, s.tn_create_date, GETDATE()) = 0

--AND s.tn_dealstatus = 0

GROUP BY tn_tenant_id,

tn_dealer_number,

tn_product_code,

tn_store_name,

p.producname,

DATEADD(DAY, -1, tn_date);

--为保持数据清洗速度,各***系统销量临时表只保留15天数据

DELETE FROM sfa_t_pflh_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 15

DELETE FROM sfa_t_drf_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 15

GO

/*--同步并清洗**销量数据

--1、分别将通过**link获取到的**和**销量数据同步到sfa_t_pflh_sales和sfa_t_drf_sales中

--2、将同步过来的销量数据进行清洗,并按客户需求保存数据到sfa_t_KaSales

*/

CREATE PROCEDURE [dbo].[sfa_p_KaSalesLYNC]

AS

/*--同步****销量数据*/

INSERT INTO sfa_t_pflh_sales

SELECT *

FROM OPENQUERY

(POSTGRES,

'select * from "tn_bflh_sales" where "tn_tenant_id" = ''****''and tn_product_code<>''合计''AND tn_dealstatus=0 '

);

/*--更新*****销量销量数据同步状态tn_dealstatus=1代表已同步*/

UPDATE OPENQUERY

(POSTGRES, 'select * from "tn_bflh_sales"')

SET tn_dealstatus = 1

WHERE tn_tenant_id = '****'

AND tn_dealstatus = 0;

/*--同步***销量数据*/

INSERT INTO sfa_t_drf_sales

SELECT *

FROM OPENQUERY

(POSTGRES, 'select * from "tn_drf_salses" where "tn_tenant_id" = ''****'' AND tn_dealstatus=0 ');

/*--更新****销量销量数据同步状态tn_dealstatus=1代表已同步*/

UPDATE OPENQUERY

(POSTGRES, 'select * from "tn_drf_salses"')

SET tn_dealstatus = 1

WHERE tn_tenant_id = '****'

AND tn_dealstatus = 0;

/*--同步***link作业计划日志*/

TRUNCATE TABLE sfa_ka_tasklog;

INSERT INTO sfa_ka_tasklog

SELECT *

FROM OPENQUERY

(POSTGRES,

'SELECT t.tn_task_id,t.tn_ka_id,tn_ka_code,tn_dealers_account,t.tn_task_date,i.tn_status,i.tn_task_steps,i.tn_reak FROM tn_ka_task t LEFT JOIN tn_ka_task_info i on t.tn_task_id=i.tn_task_id

where t.tn_tenant_id=''*****'''

)

ORDER BY tn_task_date,tn_task_steps

DELETE FROM sfa_t_KaSales WHERE DATEDIFF(DAY, tn_date, GETDATE()) = 1 /*--清洗数据前,先删除之前清洗好的前一天销量*/

INSERT INTO sfa_t_KaSales

/*--清洗******前一天销量数据*/

SELECT tn_tenant_id,'****'kaname,

tn_dealer_number,

case WHEN tn_supplier_code = '******' THEN

'***'

WHEN tn_supplier_code = '*****' THEN

'***'

END quyu,

tn_supplier_code,

tn_supplier_name,

tn_end_date tn_date,

tn_product_code,

tn_product_desc producname,

tn_store_code,

tn_store_name,

MAX(tn_sale_quantity) AS tn_day_sales,

CAST(MAX(tn_sale_amount) AS NVARCHAR(20)) AS tn_sale_amount

FROM sfa_t_pflh_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 0

/* --AND tn_dealstatus = 0 */

GROUP BY tn_tenant_id,

tn_dealer_number,

tn_supplier_code,

tn_supplier_name,

tn_end_date,

tn_product_code,

tn_product_desc,

tn_store_code,

tn_store_name

UNION

/*--清洗****前一天销量数据*/

SELECT tn_tenant_id,'****'kaname,

tn_dealer_number,

CASE

WHEN tn_dealer_number = '*****' THEN

'***'

WHEN tn_dealer_number = '****' THEN

'***'

WHEN tn_dealer_number = '****' THEN

'***'

END quyu,

CASE

WHEN tn_dealer_number = '****' THEN

'****'

WHEN tn_dealer_number = '*****' THEN

'****'

WHEN tn_dealer_number = '****' THEN

'***'

END tn_supplier_code,

'******' tn_supplier_name,

DATEADD(DAY, -1, tn_date) tn_date,

tn_product_code,

p.producname,

'' tn_store_code,

tn_store_name,

MAX(tn_day_sales) tn_day_sales,

'' tn_sale_amount

FROM dbo.sfa_t_drf_sales s

LEFT JOIN dbo.sfa_t_KaProduct p

ON s.tn_product_code = p.productcode

AND p.KaName = 'drf'

WHERE DATEDIFF(DAY, s.tn_create_date, GETDATE()) = 0

/* --AND s.tn_dealstatus = 0 */

GROUP BY tn_tenant_id,

tn_dealer_number,

tn_product_code,

tn_store_name,

p.producname,

DATEADD(DAY, -1, tn_date);

/*--为保持数据清洗速度,各***系统销量临时表只保留15天数据 */

DELETE FROM sfa_t_pflh_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 15

DELETE FROM sfa_t_drf_sales

WHERE DATEDIFF(DAY, tn_create_date, GETDATE()) = 15

GO

后记

后续通过观察,发现该job某些时间段是可以正常执行,某些时间段是会失败;

直接报错信息如下:

Msg 109, Level 20, State 0, Line 6

A transport-level error has occurred when receiving results from the server. (provider: Shared Memory Provider, error: 0 - The pipe has been ended.)

微软官方有个hotfix针对这种情况,可惜下载执行提示已不适应当前系统了;

该job使用dblink的内存与sql server设置的最大内存以及是否锁定内存页都没有直接关系,我们甚至把max memroy 值从28G调小至10G,该现象还是会出现,观察该job执行的时候,其中有一段脚本reads超15万,查询有类似的案例如下:

后续跟该项目负责人沟通之后,他们评估认为直接停用该job,该问题是没有再重现了。但我认为如他们要实现需求还可以尝试:

替换现有的ODBC的驱动;

在job中代码做些优化;

或换其他模式如SSIS等;

结论

从这个案例来看,直接使用DBlink做数据对接,且每次数据量超5万以上不是一个首选方案;

参考

5742

5742

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?