1. Introduction

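This article scrapes Lianjia's second-hand housing listings. After reading it, you should be able to scrape Lianjia's other sections yourself, such as the rental and new-home listings.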

Without further ado, open the Lianjia home page and click Beijing.

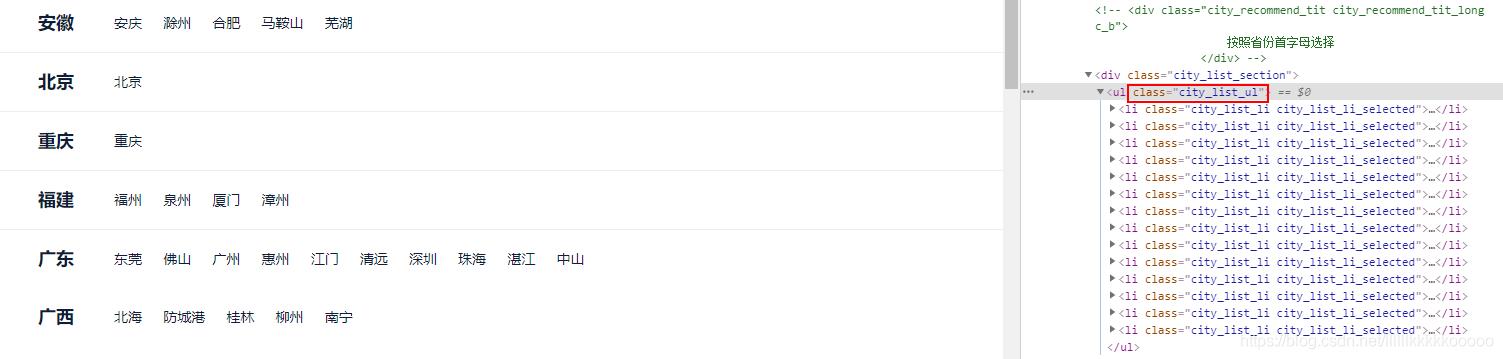

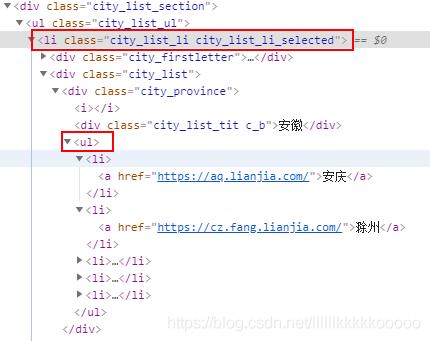

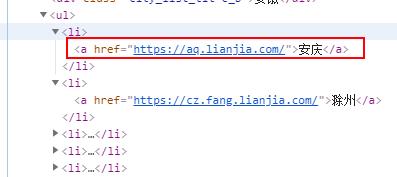

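This brings up the page below, which lists the cities of every province in the country. Clicking a city redirects to a page localized to that city.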

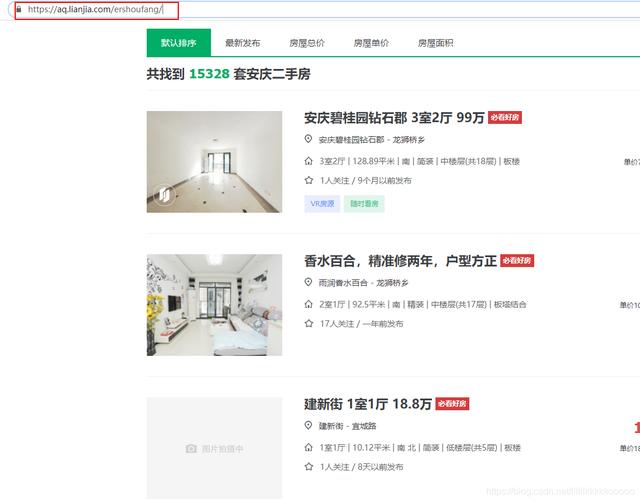

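Click "二手房" (second-hand housing) to reach the second-hand housing page. The address is simply the previous URL with /ershoufang/ appended, so the spider can build it by concatenation as well.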

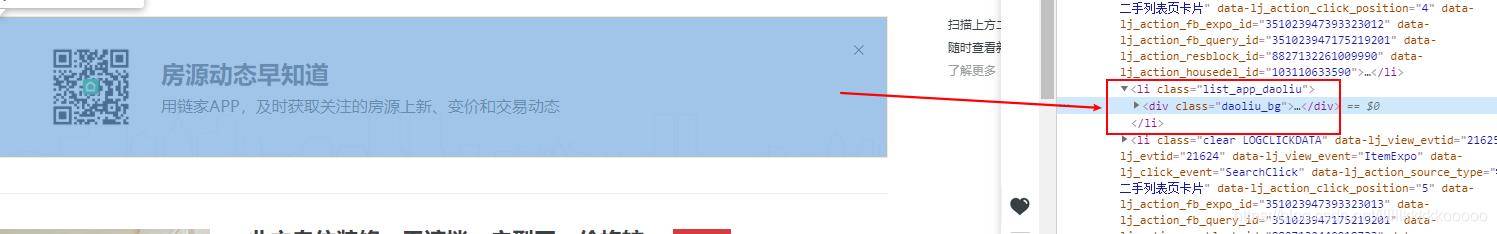
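The URL rule above can be sketched with the standard library. The helper name `ershoufang_url` is hypothetical, and the `bj.lianjia.com` address is only an assumed example of what a city link looks like. `urljoin` is used so that a trailing slash on the city URL does not produce a double slash.

```python
from urllib.parse import urljoin


def ershoufang_url(city_url: str) -> str:
    """Append the second-hand housing path to a city home-page URL."""
    # urljoin replaces the last path segment unless the base ends with "/",
    # so normalise the base first.
    base = city_url if city_url.endswith("/") else city_url + "/"
    return urljoin(base, "ershoufang/")


# Assumed example city URL, with and without a trailing slash
print(ershoufang_url("https://bj.lianjia.com"))
print(ershoufang_url("https://bj.lianjia.com/"))
```

Both calls yield the same address, which is the point of normalising the base before joining.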

Note, however, that entries like the one below are not listings we want and must be excluded.

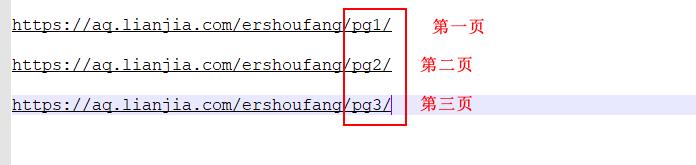
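One way to express this exclusion is a small predicate over an `<li>` element's `class` attribute. The sketch below assumes the unwanted entries carry the class `list_app_daoliu`, which is the value the spider code in this article checks for. The helper name `is_ad_entry` and the second sample class string are made up for illustration.

```python
from typing import Optional


def is_ad_entry(class_attr: Optional[str]) -> bool:
    """Return True when a class attribute marks an entry that should be skipped."""
    # A class attribute may hold several space-separated names, so compare
    # tokens instead of the whole string.
    return "list_app_daoliu" in (class_attr or "").split()


print(is_ad_entry("list_app_daoliu"))
print(is_ad_entry("clear some-listing"))  # made-up class names for a normal listing
print(is_ad_entry(None))                  # an <li> without a class attribute
```

Comparing tokens rather than the full string keeps the check working if the site adds a second class name to the same element.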

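For multi-page crawling the pattern is shown below: each page is reached by appending pg and the page number to the listing URL.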

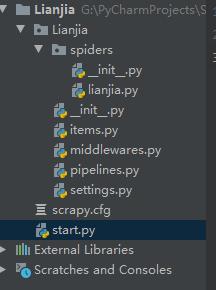
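The pagination rule can be sketched as a small generator of page URLs. This is a minimal sketch, assuming the `pg` plus page-number suffix used by the spider in this article; the helper name `page_urls` and the example address are hypothetical.

```python
from typing import List


def page_urls(listing_url: str, pages: int = 3) -> List[str]:
    """Build the URLs of the first `pages` result pages for a listing URL."""
    # Normalise to exactly one trailing slash before appending "pgN".
    base = listing_url.rstrip("/") + "/"
    return [f"{base}pg{n}" for n in range(1, pages + 1)]


# Assumed example listing URL
for url in page_urls("https://bj.lianjia.com/ershoufang/"):
    print(url)
```

The default of three pages mirrors the `range(3)` loop in the spider; a larger value crawls deeper into each city's listings.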

2. Basic environment setup

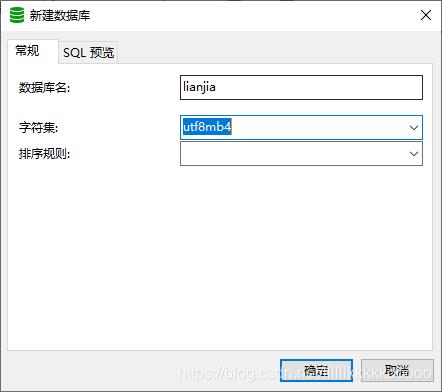

Create the database

Table creation statement

    CREATE TABLE `lianjia` (
      `id` int(11) NOT NULL AUTO_INCREMENT,
      `city` varchar(100) DEFAULT NULL,
      `money` varchar(100) DEFAULT NULL,
      `address` varchar(100) DEFAULT NULL,
      `house_pattern` varchar(100) DEFAULT NULL,
      `house_size` varchar(100) DEFAULT NULL,
      `house_degree` varchar(100) DEFAULT NULL,
      `house_floor` varchar(100) DEFAULT NULL,
      `price` varchar(50) DEFAULT NULL,
      PRIMARY KEY (`id`)
    ) ENGINE=InnoDB AUTO_INCREMENT=212 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

Create the Scrapy project

start.py

    from scrapy import cmdline

    # Equivalent to running "scrapy crawl lianjia" on the command line
    cmdline.execute("scrapy crawl lianjia".split())

3. Annotated code walkthrough

lianjia.py

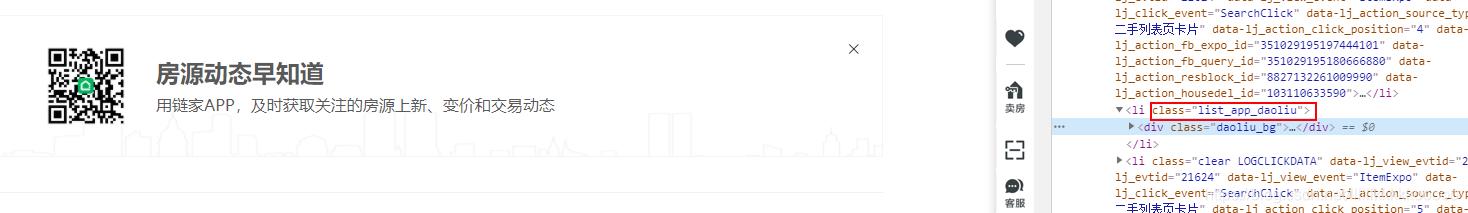

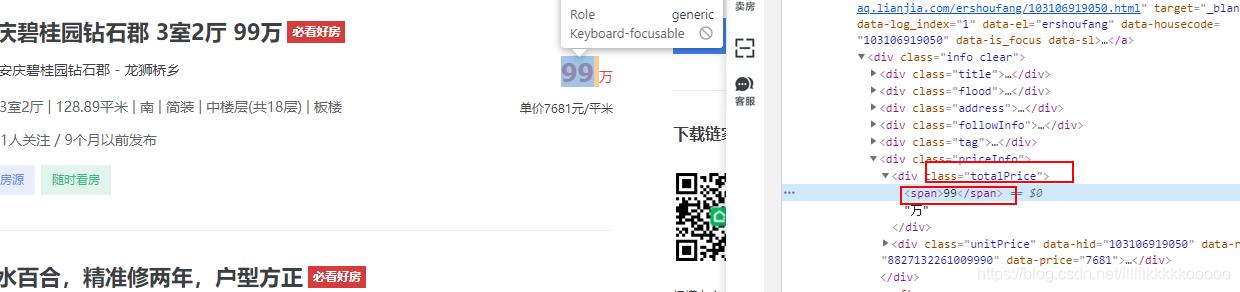

    # -*- coding: utf-8 -*-
    import time

    import scrapy

    from Lianjia.items import LianjiaItem


    class LianjiaSpider(scrapy.Spider):
        name = 'lianjia'
        allowed_domains = ['lianjia.com']
        # Page that lists the URLs of every province and city
        start_urls = ['https://www.lianjia.com/city/']

        def parse(self, response):
            # See Figure 1: find the ul whose class is city_list_ul, then take all li tags under it
            ul = response.xpath("//ul[@class='city_list_ul']/li")
            # Iterate over ul; each li is one province
            for li in ul:
                # See Figure 2: get the li tags of all cities in this province
                data_ul = li.xpath(".//ul/li")
                # Iterate over the cities
                for li_data in data_ul:
                    # See Figure 3: get each city's URL and name
                    city = li_data.xpath(".//a/text()").get()
                    # Build the second-hand housing link; rstrip avoids a double slash
                    page_url = li_data.xpath(".//a/@href").get().rstrip("/") + "/ershoufang/"
                    # Crawl multiple pages
                    for i in range(3):
                        url = page_url + "pg" + str(i + 1)
                        print(url)
                        yield scrapy.Request(url=url, callback=self.pageData, meta={"info": city})

        def pageData(self, response):
            print("=" * 50)
            # Get the city name passed along with the request
            city = response.meta.get("info")
            # See Figure 4: find the ul whose class is sellListContent, then take all li tags under it
            detail_li = response.xpath("//ul[@class='sellListContent']/li")
            # Iterate over the listings
            for page_li in detail_li:
                # See Figure 5: check the class value to skip the extra ad entries
                if page_li.xpath("@class").get() == "list_app_daoliu":
                    continue
                # See Figure 6: total price of the house; "万" is the unit (10,000 yuan)
                money = page_li.xpath(".//div[@class='totalPrice']/span/text()").get()
                money = str(money) + "万"
                # See Figure 7: address
                address = page_li.xpath(".//div[@class='positionInfo']/a/text()").get()
                # See Figure 8: get all of the house data and split it on "|"
                house_data = page_li.xpath(".//div[@class='houseInfo']/text()").get().split("|")
                # Layout
                house_pattern = house_data[0]
                # Floor area
                house_size = house_data[1].strip()
                # Level of decoration
                house_degree = house_data[3].strip()
                # Floor
                house_floor = house_data[4].strip()
                # Unit price, see Figure 9; strip the "单价" (unit price) label
                price = page_li.xpath(".//div[@class='unitPrice']/span/text()").get().replace("单价", "")
                # Note: time.sleep blocks Scrapy's event loop; the DOWNLOAD_DELAY
                # setting is the usual non-blocking way to throttle requests
                time.sleep(0.5)
                item = LianjiaItem(city=city, money=money, address=address,
                                   house_pattern=house_pattern, house_size=house_size,
                                   house_degree=house_degree, house_floor=house_floor,
                                   price=price)
                yield item

3. Supporting screenshots

Figure 1

Figure 2

Figure 3

Figure 4

Figure 5

Figure 6

Figure 7

Figure 8

Figure 9
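The spider's `pageData` callback splits the `houseInfo` text on `|` and reads fixed positions, which raises an error whenever a listing has fewer fields or no text at all. A more defensive variant might look like the sketch below. The helper name `parse_house_info` is hypothetical and the sample string is invented for illustration; the field positions (0, 1, 3, 4) are the ones used in the spider.

```python
from typing import Dict, Optional


def parse_house_info(text: Optional[str]) -> Dict[str, Optional[str]]:
    """Split a houseInfo string on "|" and pick the positions the spider uses."""
    parts = [p.strip() for p in (text or "").split("|")]

    def pick(index: int) -> Optional[str]:
        # Return None instead of raising IndexError when a field is missing or empty.
        return parts[index] if index < len(parts) and parts[index] else None

    return {
        "house_pattern": pick(0),  # layout
        "house_size": pick(1),     # floor area
        "house_degree": pick(3),   # level of decoration
        "house_floor": pick(4),    # floor
    }


sample = "2室1厅 | 74.5平米 | 南 北 | 精装 | 中楼层(共6层)"  # invented sample text
print(parse_house_info(sample))
print(parse_house_info(None))
```

Returning `None` for missing fields lets the pipeline store a NULL, which the table definition already allows, instead of aborting the whole page.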

4. Complete code

lianjia.py

    # -*- coding: utf-8 -*-
    import time

    import scrapy

    from Lianjia.items import LianjiaItem


    class LianjiaSpider(scrapy.Spider):
        name = 'lianjia'
        allowed_domains = ['lianjia.com']
        start_urls = ['https://www.lianjia.com/city/']

        def parse(self, response):
            ul = response.xpath("//ul[@class='city_list_ul']/li")
            for li in ul:
                data_ul = li.xpath(".//ul/li")
                for li_data in data_ul:
                    city = li_data.xpath(".//a/text()").get()
                    # rstrip avoids a double slash when the href ends with "/"
                    page_url = li_data.xpath(".//a/@href").get().rstrip("/") + "/ershoufang/"
                    for i in range(3):
                        url = page_url + "pg" + str(i + 1)
                        print(url)
                        yield scrapy.Request(url=url, callback=self.pageData, meta={"info": city})

        def pageData(self, response):
            print("=" * 50)
            city = response.meta.get("info")
            detail_li = response.xpath("//ul[@class='sellListContent']/li")
            for page_li in detail_li:
                if page_li.xpath("@class").get() == "list_app_daoliu":
                    continue
                money = page_li.xpath(".//div[@class='totalPrice']/span/text()").get()
                money = str(money) + "万"
                address = page_li.xpath(".//div[@class='positionInfo']/a/text()").get()
                # Get all of the house data and split it on "|"
                house_data = page_li.xpath(".//div[@class='houseInfo']/text()").get().split("|")
                # Layout
                house_pattern = house_data[0]
                # Floor area
                house_size = house_data[1].strip()
                # Level of decoration
                house_degree = house_data[3].strip()
                # Floor
                house_floor = house_data[4].strip()
                # Unit price
                price = page_li.xpath(".//div[@class='unitPrice']/span/text()").get().replace("单价", "")
                time.sleep(0.5)
                item = LianjiaItem(city=city, money=money, address=address,
                                   house_pattern=house_pattern, house_size=house_size,
                                   house_degree=house_degree, house_floor=house_floor,
                                   price=price)
                yield item

items.py

    # -*- coding: utf-8 -*-
    import scrapy


    class LianjiaItem(scrapy.Item):
        # City
        city = scrapy.Field()
        # Total price
        money = scrapy.Field()
        # Address
        address = scrapy.Field()
        # Layout
        house_pattern = scrapy.Field()
        # Floor area
        house_size = scrapy.Field()
        # Level of decoration
        house_degree = scrapy.Field()
        # Floor
        house_floor = scrapy.Field()
        # Unit price
        price = scrapy.Field()

pipelines.py

    import pymysql


    class LianjiaPipeline:
        def __init__(self):
            dbparams = {
                'host': '127.0.0.1',
                'port': 3306,
                'user': 'root',         # database user
                'password': 'root',     # database password
                'database': 'lianjia',  # database name
                'charset': 'utf8mb4',   # matches the table's utf8mb4 charset
            }
            # Open the database connection
            self.conn = pymysql.connect(**dbparams)
            self.cursor = self.conn.cursor()
            self._sql = None

        def process_item(self, item, spider):
            # Run the insert with the item's fields as parameters
            self.cursor.execute(self.sql, (
                item['city'], item['money'], item['address'],
                item['house_pattern'], item['house_size'], item['house_degree'],
                item['house_floor'], item['price'],
            ))
            self.conn.commit()  # commit the row
            return item

        def close_spider(self, spider):
            # Release the database resources when the spider finishes
            self.cursor.close()
            self.conn.close()

        @property
        def sql(self):
            if not self._sql:
                # Insert statement; id is null so AUTO_INCREMENT assigns it
                self._sql = """
                    insert into lianjia(id,city,money,address,house_pattern,house_size,house_degree,house_floor,price)
                    values(null,%s,%s,%s,%s,%s,%s,%s,%s)
                    """
            return self._sql

settings.py

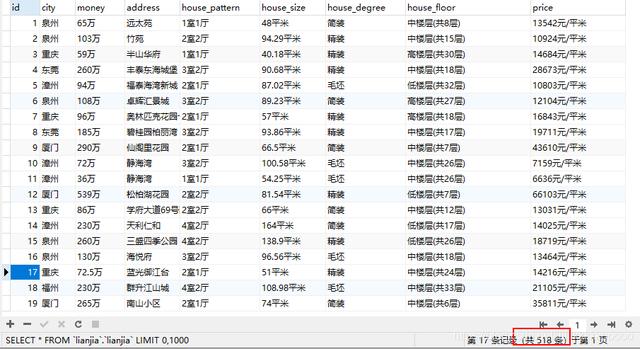

    # -*- coding: utf-8 -*-

    BOT_NAME = 'Lianjia'

    SPIDER_MODULES = ['Lianjia.spiders']
    NEWSPIDER_MODULE = 'Lianjia.spiders'

    LOG_LEVEL = "ERROR"

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'Lianjia (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    #CONCURRENT_REQUESTS = 32

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    # Disable Telnet Console (enabled by default)
    #TELNETCONSOLE_ENABLED = False

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.135 Safari/537.36 Edg/84.0.522.63"
    }

    # Enable or disable spider middlewares
    # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    #SPIDER_MIDDLEWARES = {
    #    'Lianjia.middlewares.LianjiaSpiderMiddleware': 543,
    #}

    # Enable or disable downloader middlewares
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
    #DOWNLOADER_MIDDLEWARES = {
    #    'Lianjia.middlewares.LianjiaDownloaderMiddleware': 543,
    #}

    # Enable or disable extensions
    # See https://docs.scrapy.org/en/latest/topics/extensions.html
    #EXTENSIONS = {
    #    'scrapy.extensions.telnet.TelnetConsole': None,
    #}

    # Configure item pipelines
    # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'Lianjia.pipelines.LianjiaPipeline': 300,
    }

    # Enable and configure the AutoThrottle extension (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/autothrottle.html
    #AUTOTHROTTLE_ENABLED = True
    # The initial download delay
    #AUTOTHROTTLE_START_DELAY = 5
    # The maximum download delay to be set in case of high latencies
    #AUTOTHROTTLE_MAX_DELAY = 60
    # The average number of requests Scrapy should be sending in parallel to
    # each remote server
    #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
    # Enable showing throttling stats for every response received:
    #AUTOTHROTTLE_DEBUG = False

    # Enable and configure HTTP caching (disabled by default)
    # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
    #HTTPCACHE_ENABLED = True
    #HTTPCACHE_EXPIRATION_SECS = 0
    #HTTPCACHE_DIR = 'httpcache'
    #HTTPCACHE_IGNORE_HTTP_CODES = []
    #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

5. Results

The full dataset is far larger than 518 records. I stopped the crawl after a short while, since this is only a demonstration.

