I plan to maintain a resource repository on imbalanced learning; a quick search turned up no existing project of this kind.

Github Repository:

https://github.com/ZhiningLiu1998/awesome-imbalanced-learning

This repository collects all the "awesome" resources related to class-imbalanced learning, including:

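- Recent / classic / influential research papers in the field, together with their implementations (where available)

- Software packages / libraries / open-source code repositories that help people quickly deploy existing methods

- Tutorials / lectures / talks / other multimedia resources that help people quickly get to know the field

- Other helpful resources, such as practical tools and paper/resource lists for specific subfields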

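Resources in the list can be added, modified (e.g., to fix broken links or outdated information), or removed (if several people feel they are not "awesome" enough). The :accept: symbol can be used to make a recommendation, together with the reason for it.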

Visits, contributions, and stars are all welcome.

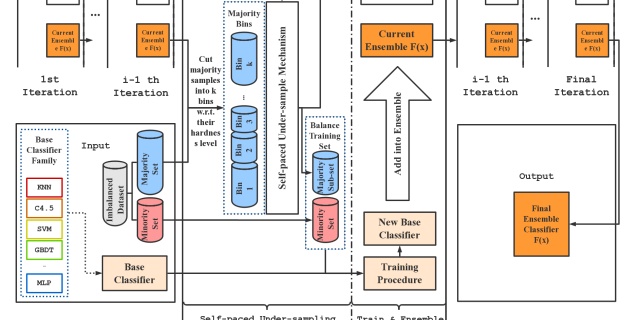

As usual, a plug for my own work: "Self-paced Ensemble for Highly Imbalanced Massive Data Classification" [arXiv][Github].

Zhining Liu (刘芷宁): Self-paced Ensemble: an efficient, general, and robust framework for imbalanced learning (zhuanlan.zhihu.com)

The list is reproduced below. This copy will not be updated; see GitHub for the latest version.

Awesome Imbalanced Learning

A curated list of awesome imbalanced learning papers, codes, frameworks and libraries.

Inspired by awesome-machine-learning. Contributions are welcome!

Items marked with :accept: are personally recommended (important/high-quality papers or libraries).

Table of Contents

- Awesome Imbalanced Learning
  - Table of Contents
  - Libraries
    - Python
    - R
    - Java
    - Scala
    - Julia
  - Papers
    - Surveys
    - Data resampling
    - Cost-sensitive Learning
    - Ensemble Learning
    - Deep Learning
    - Anomaly Detection
  - Other Resources

Libraries

Python

- imbalanced-learn [Github][Documentation][Paper] - imbalanced-learn is a Python package offering a number of re-sampling techniques commonly used on datasets showing strong between-class imbalance. It is compatible with scikit-learn and is part of the scikit-learn-contrib projects.

:accept: written in Python, easy to use.
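imbalanced-learn packages the two simplest resampling strategies listed under Data resampling as `RandomOverSampler` and `RandomUnderSampler`, both used through `fit_resample(X, y)`. To show what they do without requiring the package, here is a minimal plain-Python sketch. The function names `random_over_sample` and `random_under_sample` are hypothetical, and balancing every class to the largest (or smallest) class size is an assumption that only roughly mirrors the library's default behaviour.

```python
import random
from collections import Counter


def random_over_sample(X, y, seed=0):
    """Hypothetical ROS helper: duplicate randomly chosen samples of every
    smaller class until each class is as large as the largest one."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, n in counts.items():
        idx = [i for i, lab in enumerate(y) if lab == label]
        for i in rng.choices(idx, k=target - n):  # sample WITH replacement
            X_out.append(X[i])
            y_out.append(label)
    return X_out, y_out


def random_under_sample(X, y, seed=0):
    """Hypothetical RUS helper: keep a random subset of every larger class so
    that each class is as small as the smallest one."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = min(counts.values())
    X_out, y_out = [], []
    for label in counts:
        idx = [i for i, lab in enumerate(y) if lab == label]
        for i in rng.sample(idx, target):  # sample WITHOUT replacement
            X_out.append(X[i])
            y_out.append(label)
    return X_out, y_out


X = [[float(i)] for i in range(10)]
y = [0] * 8 + [1] * 2
print(Counter(random_over_sample(X, y)[1]))   # Counter({0: 8, 1: 8})
print(Counter(random_under_sample(X, y)[1]))  # Counter({0: 2, 1: 2})
```

Over-sampling keeps every original example but repeats minority points (risking over-fitting to them), while under-sampling discards majority information; the more elaborate methods below try to soften these two trade-offs.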

R

- smote_variants [Documentation][Github] - A collection of 85 minority over-sampling techniques for imbalanced learning with multi-class oversampling and model selection features (supports R and Julia).

- caret [Documentation][Github] - Contains the implementation of Random under/over-sampling.

- ROSE [Documentation] - Contains the implementation of ROSE (Random Over-Sampling Examples).

- DMwR [Documentation] - Contains the implementation of SMOTE (Synthetic Minority Over-sampling TEchnique).

Java

- KEEL [Github][Paper] - KEEL provides a simple GUI based on data flow for designing experiments with different datasets and computational intelligence algorithms (with special attention to evolutionary algorithms) in order to assess the behavior of the algorithms. The tool includes many widely used imbalanced learning techniques such as (evolutionary) over/under-sampling, cost-sensitive learning, algorithm modification, and ensemble learning methods.

:accept: wide variety of classical classification, regression, and preprocessing algorithms included.

Scala

- undersampling [Documentation][Github] - A Scala library for under-sampling methods and their ensemble variants in imbalanced classification.

Julia

- smote_variants [Documentation][Github] - A collection of 85 minority over-sampling techniques for imbalanced learning with multi-class oversampling and model selection features (supports R and Julia).

Papers

Surveys

- Learning from imbalanced data (2009, 4700+ citations) - Highly cited, classic survey paper. It systematically reviews the popular solutions, evaluation metrics, and challenging problems for future research in this area (as of 2009).

:accept: classic work.

- Learning from imbalanced data: open challenges and future directions (2016, 400+ citations) - This paper concentrates on the open issues and challenges in imbalanced learning, such as extreme class imbalance, dealing with imbalance in online/stream learning, multi-class imbalanced learning, and semi/un-supervised imbalanced learning.

- Learning from class-imbalanced data: Review of methods and applications (2017, 400+ citations) - A recent exhaustive survey of imbalanced learning methods and applications covering a total of 527 papers. It provides several detailed taxonomies of existing methods as well as the recent trends in this research area.

:accept: a systematic survey with detailed taxonomies of existing methods.

Data resampling

- Over-sampling

- ROS [Code] - Random Over-sampling

- SMOTE [Code] (2002, 9800+ citations) - Synthetic Minority Over-sampling TEchnique

:accept: classic work.

- Borderline-SMOTE [Code] (2005, 1400+ citations) - Borderline-Synthetic Minority Over-sampling TEchnique

- ADASYN [Code] (2008, 1100+ citations) - ADAptive SYNthetic Sampling

- SPIDER [Code (Java)] (2008, 150+ citations) - Selective Preprocessing of Imbalanced Data

- Safe-Level-SMOTE [Code (Java)] (2009, 370+ citations) - Safe Level Synthetic Minority Over-sampling TEchnique

- SVM-SMOTE [Code] (2009, 120+ citations) - SMOTE based on Support Vectors of SVM

- SMOTE-IPF (2015, 180+ citations) - SMOTE with Iterative-Partitioning Filter

- Under-sampling

- RUS [Code] - Random Under-sampling

- CNN [Code] (1968, 2100+ citations) - Condensed Nearest Neighbor

- ENN [Code] (1972, 1500+ citations) - Edited Nearest Neighbor

- TomekLink [Code] (1976, 870+ citations) - Tomek's modification of Condensed Nearest Neighbor

- NCR [Code] (2001, 500+ citations) - Neighborhood Cleaning Rule

- NearMiss-1 & 2 & 3 [Code] (2003, 420+ citations) - Several kNN approaches to unbalanced data distributions.

- CNN with TomekLink [Code (Java)] (2004, 2000+ citations) - Condensed Nearest Neighbor + TomekLink

- OSS [Code] (2007, 2100+ citations) - One Side Selection

- EUS (2009, 290+ citations) - Evolutionary Under-sampling

- IHT [Code] (2014, 130+ citations) - Instance Hardness Threshold

- Hybrid-sampling

- SMOTE-Tomek & SMOTE-ENN (2004, 2000+ citations) [Code (SMOTE-Tomek)] [Code (SMOTE-ENN)] - Synthetic Minority Over-sampling TEchnique + Tomek's modification of Condensed Nearest Neighbor/Edited Nearest Neighbor

:accept: extensive experimental evaluation involving 10 different over/under-sampling methods.

- SMOTE-RSB (2012, 210+ citations) - Hybrid Preprocessing using SMOTE and Rough Sets Theory
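Many of the over-sampling methods above build on SMOTE's core step: pick a minority sample, pick one of its k nearest minority-class neighbours, and create a synthetic point at a random position on the segment between them. Below is a minimal sketch of that step, assuming numeric features and Euclidean distance. `smote` is a hypothetical helper (not imbalanced-learn's `SMOTE` class), and it picks base samples at random rather than generating a fixed number of points per minority sample as the original paper does.

```python
import math
import random


def smote(X_min, n_synthetic, k=5, seed=0):
    """Hypothetical SMOTE sketch: interpolate between a random minority sample
    and one of its k nearest minority neighbours. Needs >= 2 minority samples."""
    rng = random.Random(seed)
    k = min(k, len(X_min) - 1)  # a point cannot have more neighbours than others
    # Precompute the k nearest minority neighbours of every minority sample.
    neighbours = []
    for i, x in enumerate(X_min):
        others = sorted((j for j in range(len(X_min)) if j != i),
                        key=lambda j: math.dist(x, X_min[j]))
        neighbours.append(others[:k])
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(X_min))          # base minority sample
        nn = X_min[rng.choice(neighbours[i])]  # one of its k neighbours
        gap = rng.random()                     # uniform in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(X_min[i], nn)])
    return synthetic


X_min = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new = smote(X_min, n_synthetic=3, k=2)
print(len(new))  # 3
```

Because new points only appear between existing minority neighbours, SMOTE avoids the exact duplicates of random over-sampling; variants such as Borderline-SMOTE and ADASYN change which base samples are chosen, not this interpolation step.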

Cost-sensitive Learning

- CSC4.5 [Code (Java)] (2002, 420+ citations) - An instance-weighting method to induce cost-sensitive trees

- CSSVM [Code (Java)] (2008, 710+ citations) - Cost-sensitive SVMs for highly imbalanced classification

- CSNN [Code (Java)] (2005, 950+ citations) - Training cost-sensitive neural networks with methods addressing the class imbalance problem.
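CSC4.5 and similar cost-sensitive methods turn per-class misclassification costs into per-example weights that a standard learner can consume. A minimal sketch, assuming the normalisation w(j) = C(j) · N / Σ_i C(i) · N_i, where C(j) is the cost of misclassifying class j and N_i is the size of class i. We believe this matches the instance-weighting scheme described for CSC4.5 but treat it as an assumption; `instance_weights` and the cost values are hypothetical.

```python
from collections import Counter


def instance_weights(y, cost):
    """Hypothetical helper: weight each example by its class's misclassification
    cost, rescaled so the weights still sum to the number of examples N."""
    counts = Counter(y)
    n = len(y)
    total_cost = sum(cost[c] * counts[c] for c in counts)
    class_w = {c: cost[c] * n / total_cost for c in counts}
    return [class_w[label] for label in y]


y = [0] * 80 + [1] * 20
cost = {0: 1.0, 1: 4.0}   # hypothetical: missing a positive costs 4x more
w = instance_weights(y, cost)
print(w[0], w[-1])        # 0.625 2.5
print(sum(w))             # 100.0
```

Because the costs here (1 and 4) are inversely proportional to the class sizes (80 and 20), each class ends up with the same total weight (50), which is the usual "balanced" class weighting.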

Ensemble Learning

- Boosting-based

- AdaBoost [Code] (1995, 18700+ citations) - Adaptive Boosting with C4.5

- DataBoost (2004, 570+ citations) - Boosting with Data Generation for Imbalanced Data

- SMOTEBoost [Code] (2003, 1100+ citations) - Synthetic Minority Over-sampling TEchnique Boosting

:accept: classic work.

- MSMOTEBoost (2011, 1300+ citations) - Modified Synthetic Minority Over-sampling TEchnique Boosting

- RAMOBoost [Code] (2010, 140+ citations) - Ranked Minority Over-sampling in Boosting

- RUSBoost [Code] (2009, 850+ citations) - Random Under-Sampling Boosting

:accept: classic work.

- AdaBoostNC (2012, 350+ citations) - Adaptive Boosting with Negative Correlation Learning

- EUSBoost (2013, 210+ citations) - Evolutionary Under-sampling in Boosting

- Bagging-based

- Bagging [Code] (1996, 23100+ citations) - Bagging predictors

- OverBagging & UnderOverBagging & SMOTEBagging & MSMOTEBagging [Code (SMOTEBagging)] (2009, 290+ citations) - Random Over-sampling / Random Hybrid Resampling / SMOTE / Modified SMOTE with Bagging

- UnderBagging [Code] (2003, 170+ citations) - Random Under-sampling with Bagging

- Other forms of ensemble

- EasyEnsemble & BalanceCascade [Code (EasyEnsemble)] [Code (BalanceCascade)] (2008, 1300+ citations) - Parallel ensemble training with RUS (EasyEnsemble) / cascade ensemble training with RUS that iteratively drops well-classified examples (BalanceCascade)

:accept: simple but effective solution.

- Self-paced Ensemble [Code] (ICDE 2020) - Training Effective Ensemble on Imbalanced Data by Self-paced Harmonizing Classification Hardness

:accept: high performance & computational efficiency.
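The idea shared by UnderBagging and EasyEnsemble is to train each ensemble member on all minority examples plus an equally sized random subset of the majority class, so every member sees balanced data while the ensemble as a whole still uses most of the majority examples. The sketch below is simplified and assumes binary labels. EasyEnsemble trains an AdaBoost classifier on each subset, whereas here a toy nearest-centroid learner and a plain majority vote stand in, and all function names are hypothetical.

```python
import math
import random
from collections import Counter


def fit_centroid(X, y):
    """Hypothetical toy base learner: one centroid per class."""
    cents = {}
    for label in set(y):
        pts = [x for x, lab in zip(X, y) if lab == label]
        cents[label] = [sum(col) / len(pts) for col in zip(*pts)]
    return cents


def predict_centroid(cents, x):
    return min(cents, key=lambda c: math.dist(x, cents[c]))


def fit_under_bagging(X, y, n_estimators=10, seed=0):
    """Each member sees all minority samples plus a random, equally sized
    subset of the majority class (random under-sampling per member)."""
    rng = random.Random(seed)
    counts = Counter(y)
    minority = min(counts, key=counts.get)
    min_idx = [i for i, lab in enumerate(y) if lab == minority]
    maj_idx = [i for i, lab in enumerate(y) if lab != minority]
    models = []
    for _ in range(n_estimators):
        idx = min_idx + rng.sample(maj_idx, len(min_idx))
        models.append(fit_centroid([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_ensemble(models, x):
    """Plain majority vote over the ensemble members."""
    votes = Counter(predict_centroid(m, x) for m in models)
    return votes.most_common(1)[0][0]


X = [[float(i % 3)] for i in range(30)] + [[5.0], [6.0], [5.5]]
y = [0] * 30 + [1] * 3
models = fit_under_bagging(X, y, n_estimators=5)
print(predict_ensemble(models, [0.5]), predict_ensemble(models, [5.8]))  # 0 1
```

Use an odd `n_estimators` to avoid tied votes; on a tie, `most_common` returns whichever label was counted first.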

Deep Learning

- Surveys

- A systematic study of the class imbalance problem in convolutional neural networks (2018, 330+ citations)

- Survey on deep learning with class imbalance (2019, 50+ citations)

:accept: a recent comprehensive survey of the class imbalance problem in deep learning.

- Hard example mining

- Training region-based object detectors with online hard example mining (CVPR 2016, 840+ citations) - In the later phase of NN training, only do gradient back-propagation for "hard examples" (i.e., with large loss value)

- Loss function engineering

- Training deep neural networks on imbalanced data sets (IJCNN 2016, 110+ citations) - Mean (square) false error that can equally capture classification errors from both the majority class and the minority class.

- Focal loss for dense object detection [Code (Unofficial)] (ICCV 2017, 2600+ citations) - A uniform loss function that focuses training on a sparse set of hard examples to prevent the vast number of easy negatives from overwhelming the detector during training.

:accept: elegant solution, high influence.

- Deep imbalanced attribute classification using visual attention aggregation [Code] (ECCV 2018, 30+ citations)

- Imbalanced deep learning by minority class incremental rectification (TPAMI 2018, 60+ citations) - Class Rectification Loss for minimizing the dominant effect of majority classes by discovering sparsely sampled boundaries of minority classes in an iterative batch-wise learning process.

- Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss [Code] (NIPS 2019, 10+ citations) - A theoretically-principled label-distribution-aware margin (LDAM) loss motivated by minimizing a margin-based generalization bound.

- Gradient harmonized single-stage detector [Code] (AAAI 2019, 40+ citations) - Compared to Focal Loss, which only down-weights "easy" negative examples, GHM also down-weights "very hard" examples as they are likely to be outliers.

:accept: interesting idea: harmonizing the contribution of examples on the basis of their gradient distribution.

- Meta-learning

- Learning to model the tail (NIPS 2017, 70+ citations) - Transfer meta-knowledge from the data-rich classes in the head of the distribution to the data-poor classes in the tail.

- Learning to reweight examples for robust deep learning [Code] (ICML 2018, 150+ citations) - Implicitly learn a weight function to reweight the samples in gradient updates of DNN.

:accept: representative work to solve the class imbalance problem through meta-learning.

- Meta-weight-net: Learning an explicit mapping for sample weighting [Code] (NIPS 2019) - Explicitly learn a weight function (with an MLP as the function approximator) to reweight the samples in gradient updates of DNN.

- Learning to Balance: Bayesian Meta-Learning for Imbalanced and Out-of-distribution Tasks [Code] (ICLR 2020)

- Representation Learning

- Learning deep representation for imbalanced classification (CVPR 2016, 220+ citations)

- Supervised Class Distribution Learning for GANs-Based Imbalanced Classification (ICDM 2019)

- Two-phase training

- Brain tumor segmentation with deep neural networks (2017, 1200+ citations) - Pre-training on balanced dataset, fine-tuning the last output layer before softmax on the original, imbalanced data.
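Focal loss rescales the cross-entropy term by (1 - p_t)^γ, where p_t is the predicted probability of the true class, so well-classified (mostly easy negative) examples contribute little and training concentrates on hard ones: FL(p_t) = -α_t (1 - p_t)^γ log(p_t). Below is a minimal single-example sketch of the binary form, using γ = 2 and α = 0.25 (the values the focal loss paper reports working well for detection). The function names are hypothetical, and a real implementation would operate on batched tensors.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class; y: label in {0, 1}.
    With gamma=0 and alpha=0.5 this equals 0.5 * binary cross-entropy.
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing factor
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p, y):
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


# An easy, well-classified negative versus a hard, misclassified positive.
easy = focal_loss(0.05, 0)   # p_t = 0.95
hard = focal_loss(0.10, 1)   # p_t = 0.10
print(easy / cross_entropy(0.05, 0))  # ~0.0019 = 0.75 * (1 - 0.95)^2
print(hard / cross_entropy(0.10, 1))  # ~0.2025 = 0.25 * (1 - 0.10)^2
```

Relative to plain cross-entropy, the easy negative's loss shrinks by a factor of roughly 500, while the hard positive's shrinks only by about 5, which is the re-focusing effect described for focal loss above.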

Anomaly Detection

- Surveys

- Anomaly detection: A survey (2009, 7300+ citations)

- A survey of network anomaly detection techniques (2017, 210+ citations)

- Classification-based

- One-class SVMs for document classification (2001, 1300+ citations)

- One-class Collaborative Filtering (2008, 830+ citations)

- Isolation Forest (2008, 1000+ citations)

- Anomaly Detection using One-Class Neural Networks (2018, 70+ citations)

- Anomaly Detection with Robust Deep Autoencoders (KDD 2017, 170+ citations)

Other Resources

- Paper-list-on-Imbalanced-Time-series-Classification-with-Deep-Learning

- acm_imbalanced_learning - slides and code for the ACM Imbalanced Learning talk on 27th April 2016 in Austin, TX.

- imbalanced-algorithms - Python-based implementations of algorithms for learning on imbalanced data.

- imbalanced-dataset-sampler - A (PyTorch) imbalanced dataset sampler for oversampling low-frequency classes and undersampling high-frequency ones.

- class_imbalance - Jupyter Notebook presentation for class imbalance in binary classification.
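The imbalanced-dataset-sampler idea amounts to drawing examples with probability inversely proportional to their class frequency, which over-samples rare classes and under-samples frequent ones in every epoch. As far as we know, the package does this by passing per-example weights 1 / (class count) to PyTorch's `torch.utils.data.WeightedRandomSampler`; the sketch below reproduces the idea with Python's `random.choices` so it has no PyTorch dependency, and `inverse_frequency_sample` is a hypothetical name.

```python
import random
from collections import Counter


def inverse_frequency_sample(y, num_samples, seed=0):
    """Hypothetical helper: draw indices with replacement, weighting each index
    by 1 / (size of its class) so every class is drawn about equally often."""
    rng = random.Random(seed)
    counts = Counter(y)
    weights = [1.0 / counts[label] for label in y]
    return rng.choices(range(len(y)), weights=weights, k=num_samples)


y = [0] * 900 + [1] * 90 + [2] * 10
idx = inverse_frequency_sample(y, num_samples=3000)
print(Counter(y[i] for i in idx))  # each class drawn roughly 1000 times
```

Each class carries a total weight of 1, so with three classes each is drawn about a third of the time; the 10 examples of class 2 are therefore repeated many times, with the same over-fitting risk as random over-sampling.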
