Preface

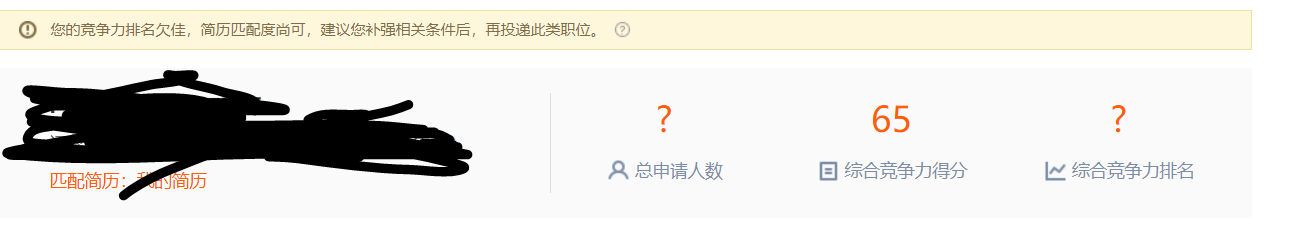

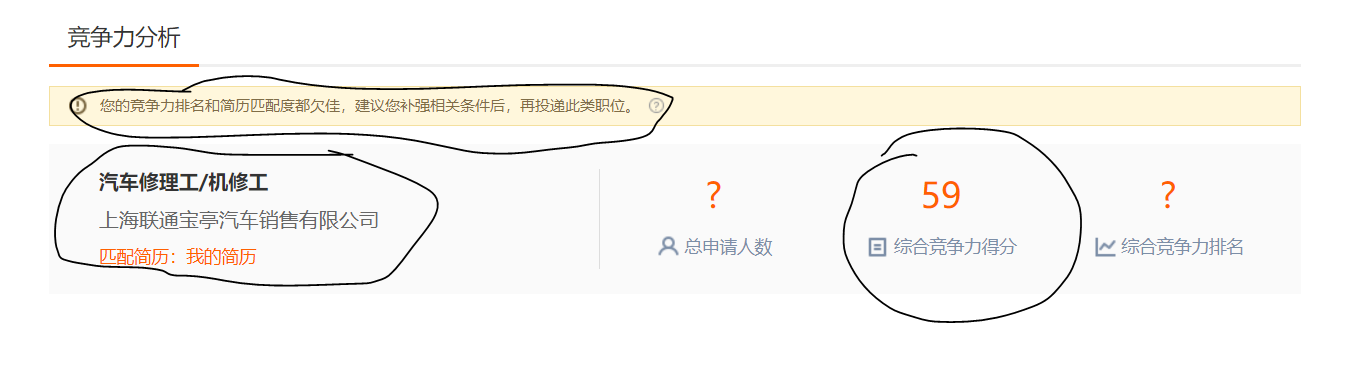

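While submitting résumés on 51job (前程无忧), I noticed its competitiveness analysis feature. Without paying, you can see a match evaluation and an overall competitiveness score for each posting, which is a useful reference when deciding where to apply.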

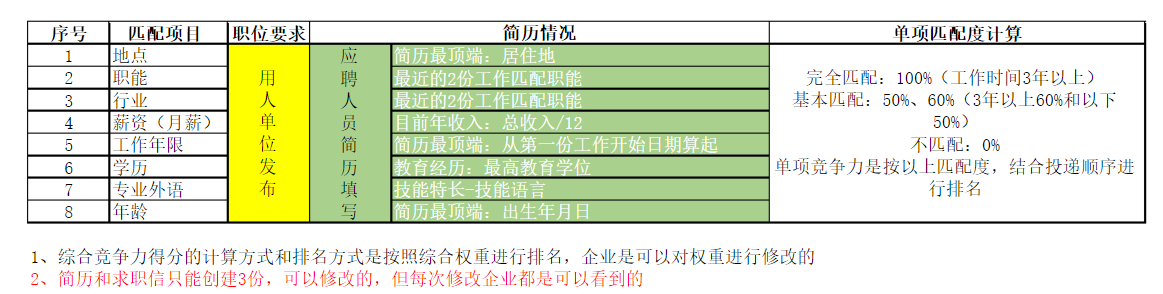

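Approach

The higher the overall competitiveness score the better, and likewise the more favorable the match evaluation the better.

The plan is to scrape every search result for a job keyword, fetch the overall competitiveness score and match evaluation for each posting, then filter on score and evaluation and automatically apply to the suitable ones.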

Logging in to obtain cookies

    import json
    import os
    import re
    from time import sleep

    import requests
    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    chrome_options = Options()
    # chrome_options.add_argument('--headless')  # keep the window visible for login

    # Selenium 3 style arguments; the raw string keeps the backslashes of the Windows path literal.
    driver = webdriver.Chrome(chrome_options=chrome_options, executable_path=r'D:\python\chromedriver.exe')
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36"}

    # Open the job search page first so that cookies can later be added for this domain.
    driver.get("https://search.51job.com/list/020000,000000,0000,00,9,99,%2520,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=")

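WebDriver can only write cookies for the domain of the page it currently has open, so the browser first navigates to the job search page.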

    def get_cookie():
        """Log in with an SMS code and save the session cookies to cookie.json."""
        driver.get("https://login.51job.com/login.php?loginway=1&lang=c&url=")
        sleep(2)
        phone = input("Enter phone number: ")
        driver.find_element_by_id("loginname").send_keys(phone)
        driver.find_element_by_id("btn7").click()  # request the SMS code
        sleep(1)
        code = input("Enter SMS code: ")
        driver.find_element_by_id("phonecode").send_keys(code)
        driver.find_element_by_id("login_btn").click()
        sleep(2)
        cookies = driver.get_cookies()
        with open("cookie.json", "w") as f:
            f.write(json.dumps(cookies))

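The main program checks whether the cookie file exists; if it does not, it runs get_cookie() to write the cookies to a file. It is best not to use headless mode when logging in, because a slider CAPTCHA occasionally appears.

51job sends at most three SMS codes per day, so the cookies are saved and reused for later logins.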
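
The load-or-login logic described above can be sketched as a small helper. This is a minimal sketch rather than the author's code: `load_or_create_cookies` and its `login` parameter are hypothetical names, and `login` stands in for any function that performs the SMS login and returns a list of cookie dicts.

```python
import json
import os


def load_or_create_cookies(path, login):
    """Return cached cookies from `path`, calling `login()` only when needed.

    `login` is a hypothetical callable that performs the SMS login and
    returns a list of cookie dicts (the shape of driver.get_cookies()).
    """
    if os.path.exists(path):
        # Cache hit: no SMS code is spent.
        with open(path, "r", encoding="utf-8") as f:
            return json.load(f)
    # Cache miss: log in once and persist the result for the next run.
    cookies = login()
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cookies, f)
    return cookies
```

Because the file is consulted first, a second run does not trigger another SMS code, which matters given the daily limit.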

    def get_job():
        """Search for a job keyword and return the results URL and page source."""
        driver.get("https://search.51job.com/list/020000,000000,0000,00,9,99,%2520,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=")
        sleep(2)
        job = input("Enter job keyword: ")
        driver.find_element_by_id("kwdselectid").send_keys(job)
        driver.find_element_by_xpath('//button[@class="p_but"]').click()
        sleep(2)  # give the results page time to load
        url = driver.current_url
        page = driver.page_source
        return url, page

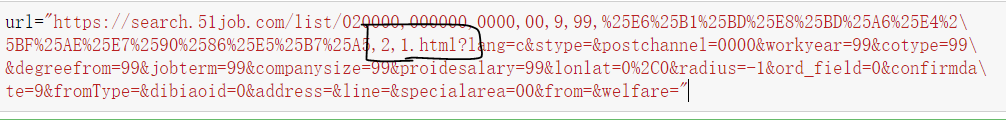

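Run the search on the job search page to get the results; the function needs to return both the page source and the URL.

Looking at the pagination structure, the number immediately before .html in the URL is the page number, and the total number of pages can be read from the "共XX页" ("XX pages in total") text.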

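    def get_pages(url, page):
        """Return the URL of every results page, given the first page."""
        tree = etree.HTML(page)
        href = []
        # Pager text such as "共12页"; the first number in it is the page count.
        x = tree.xpath('//span[@class="td"]/text()')[0]
        total_page = int(re.findall(r"(\d+)", x)[0])
        for i in range(1, total_page + 1):
            # Replace the page number directly before ".html".
            href.append(re.sub(r"\d+\.html", f"{i}.html", url, count=1))
        return href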

This yields the URLs of all result pages.
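
To make the URL rewriting concrete, here is a minimal standalone sketch that works on plain strings, with no browser or HTML parsing. `build_page_urls` is a hypothetical helper, and the shortened URL below is an illustrative sample, not a real search result.

```python
import re


def build_page_urls(first_url, total_text):
    """Build one URL per results page from the first page's URL.

    `total_text` is the pager text that contains the page count, e.g. "共12页".
    """
    total = int(re.search(r"\d+", total_text).group())
    # Only the number directly before ".html" is the page index.
    return [
        re.sub(r"\d+(?=\.html)", str(page), first_url, count=1)
        for page in range(1, total + 1)
    ]


urls = build_page_urls(
    "https://search.51job.com/list/020000,000000,0000,00,9,99,python,2,1.html?lang=c",
    "共3页",
)
for u in urls:
    print(u)
```

The lookahead `(?=\.html)` keeps the other comma-separated numbers in the path untouched, and `\d+` also handles a starting URL whose page number has more than one digit.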

    def get_job_code(url):
        """Return the job IDs linked from one results page."""
        # Uses the module-level `session` and `headers`.
        r = session.get(url, headers=headers)
        tree = etree.HTML(r.text)
        divs = tree.xpath('//div[@class="el"]/p/span/a/@href')
        job = str(divs)
        # The job ID is the number right before ".html" in each posting link.
        job_id = re.findall(r"/(\d+)\.html", job)
        return job_id

This extracts the job IDs.
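
The ID extraction can be tried on its own without fetching any page. The following is a minimal sketch: `extract_job_ids` is a hypothetical helper, and the sample links are made up for illustration. Unlike the function above, it also drops repeated IDs.

```python
import re


def extract_job_ids(hrefs):
    """Return the numeric job IDs found in posting links, without duplicates."""
    ids = []
    for href in hrefs:
        match = re.search(r"/(\d+)\.html", href)
        # Links that do not end in "<digits>.html" are skipped.
        if match and match.group(1) not in ids:
            ids.append(match.group(1))
    return ids


sample_links = [
    "https://jobs.51job.com/shanghai/112233445.html?s=01&t=0",
    "https://jobs.51job.com/shanghai/112233445.html?s=01&t=0",
    "https://jobs.51job.com/shanghai/998877665.html?s=01&t=0",
]
print(extract_job_ids(sample_links))
```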

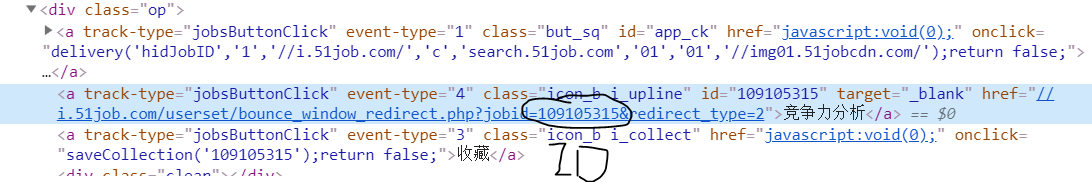

Substituting a job ID into the request URL leads to the competitiveness analysis page.

    def get_info(job_id):
        """Scrape the competitiveness analysis page of one job into a dict."""
        href = f"https://i.51job.com/userset/bounce_window_redirect.php?jobid={job_id}&redirect_type=2"
        r = session.get(href, headers=headers)
        r.encoding = r.apparent_encoding
        tree = etree.HTML(r.text)
        # Match evaluation text.
        pingjia = tree.xpath('//div[@class="warn w1"]//text()')[0].strip()
        # Non-empty text nodes: job title first, then company.
        gongsi = [i.strip() for i in tree.xpath('//div[@class="lf"]//text()') if i.strip()]
        # Non-empty text nodes of the score list.
        fenshu = [i.strip() for i in tree.xpath('//ul[@class="rt"]//text()') if i.strip()]
        url = f"https://jobs.51job.com/shanghai/{job_id}.html?s=03&t=0"
        # fenshu[3] is the score label (later queried as 综合竞争力得分) and fenshu[2] its value.
        return {"公司": gongsi[1], "职位": gongsi[0], "匹配度": pingjia, fenshu[3]: fenshu[2], "链接": url, "_id": job_id}

This scrapes the competitiveness analysis page and returns a dict.

Main program

    if not os.path.exists("cookie.json"):
        get_cookie()
    with open("cookie.json", "r") as f:
        cookies = json.loads(f.read())

Check for the cookie file and load the cookies from it; if the file does not exist, get_cookie() runs first and saves the cookies to the file.

    session = requests.Session()
    for cookie in cookies:
        # The browser is already on the 51job search page, so the cookie domain matches.
        driver.add_cookie(cookie)
        session.cookies.set(cookie['name'], cookie['value'])
    url, page = get_job()
    driver.close()

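Writing the cookies into both the requests session and the WebDriver logs both of them in.

Once the first results page and its URL have been fetched, the WebDriver can be closed.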
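
Since cookies are tied to a domain, it can help to see which saved cookies actually apply to a given host before copying them anywhere. The following is a minimal sketch under that assumption: `cookies_for_domain` is a hypothetical helper and the cookie values are invented. It uses simple suffix matching, not the full cookie matching rules that browsers implement.

```python
def cookies_for_domain(cookies, domain):
    """Return {name: value} for cookies whose domain covers `domain`.

    `cookies` is a list of dicts in the shape of Selenium's get_cookies().
    Cookies without a "domain" entry are skipped.
    """
    selected = {}
    for cookie in cookies:
        cookie_domain = cookie.get("domain", "").lstrip(".")
        if not cookie_domain:
            continue
        # A cookie applies to its own domain and to any subdomain of it.
        if domain == cookie_domain or domain.endswith("." + cookie_domain):
            selected[cookie["name"]] = cookie["value"]
    return selected


sample = [
    {"name": "a", "value": "1", "domain": ".51job.com"},
    {"name": "b", "value": "2", "domain": "login.51job.com"},
    {"name": "c", "value": "3", "domain": ".example.com"},
]
print(cookies_for_domain(sample, "search.51job.com"))
```

The resulting plain dict can be passed to `session.cookies.update(...)` on a `requests.Session`, as an alternative to setting each cookie in a loop.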

    code = []
    for i in get_pages(url, page):
        code.extend(get_job_code(i))

The job IDs collected from each page are appended to a list.

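    import pymongo

    client = pymongo.MongoClient("localhost", 27017)
    db = client["job_he"]
    job_info = db["job_info"]
    for i in code:
        try:
            # Skip jobs that are already stored, so they are not requested again.
            if not job_info.find_one({"_id": i}):
                info = get_info(i)
                sleep(1)  # throttle requests
                job_info.insert_one(info)
                print(info, "inserted")
        except Exception as e:
            # Report the job ID that failed and keep going.
            print(i, "failed:", e)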

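The crawl runs at a deliberately slow pace and stores the results in MongoDB, using the job ID as the document _id. Checking whether the ID already exists before fetching gives simple deduplication and cuts down on requests.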
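
The check-then-fetch pattern can be shown without a database. In this minimal sketch a plain dict stands in for the MongoDB collection; `crawl_new`, `seen`, `fetch`, and `delay` are hypothetical names introduced only for illustration.

```python
import time


def crawl_new(job_ids, seen, fetch, delay=1.0):
    """Fetch details only for job IDs that are not stored yet.

    `seen` is a dict keyed by job ID (a stand-in for the collection) and
    `fetch` is any callable that returns the details for one job ID.
    Returns the list of IDs that were newly added.
    """
    added = []
    for job_id in job_ids:
        if job_id in seen:
            continue  # already stored, so no request is made
        try:
            info = fetch(job_id)
        except Exception as exc:
            print(f"skip {job_id}: {exc}")
            continue
        seen[job_id] = info
        added.append(job_id)
        time.sleep(delay)  # throttle between requests
    return added
```

Because the existence check comes before the fetch, rerunning the crawl after an interruption only requests the postings that are still missing.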

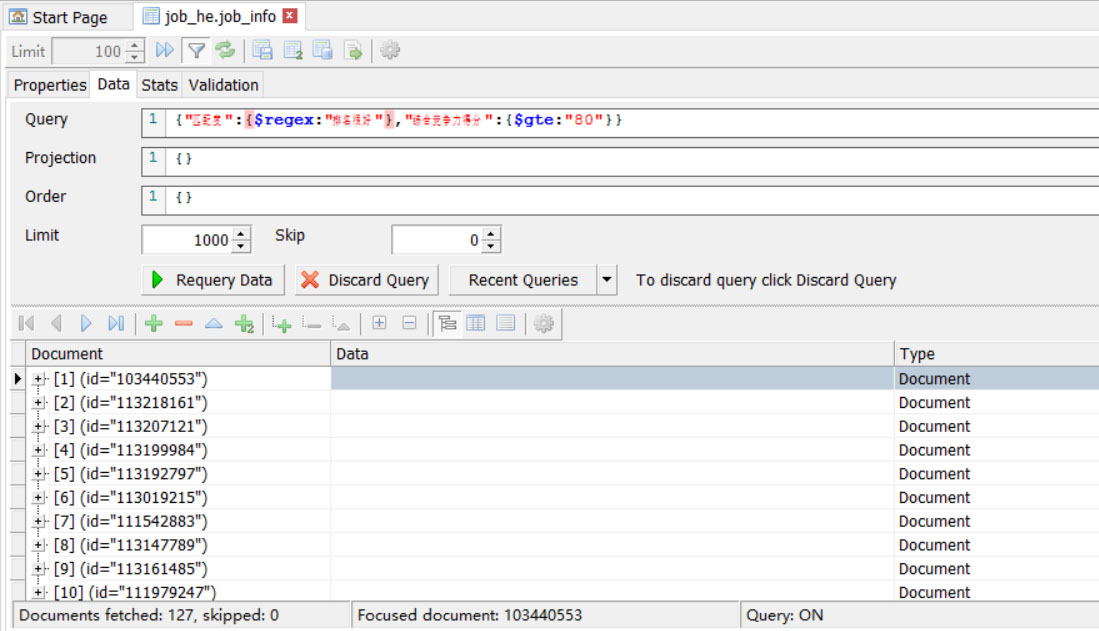

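By the time I had finished dinner, the crawler had collected 8,000 postings. Filtering turned up 127 with a good match, so batch applying could begin.

Applying means clicking the apply button while logged in, which is done with WebDriver.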

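    # Operator names must be quoted strings in Python.
    # Scores are stored as strings, so "$gte" compares them as strings.
    query = {"匹配度": {"$regex": "排名很好"}, "综合竞争力得分": {"$gte": "80"}}
    for i in job_info.find(query):
        print(i)
        try:
            # `driver` must be an open, logged-in WebDriver (the one used for searching was closed above).
            driver.get(i["链接"])
            driver.find_element_by_id("app_ck").click()
            sleep(2)
        except Exception as e:
            print(i["_id"], "apply failed:", e)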

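Log in with the cookies and apply in a simple for loop. The postings come from a MongoDB query that filters the match evaluation with a regex and the competitiveness score with a comparison, returning every matching result.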
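
One caveat with this filter is that a score stored as a string is compared as a string, so "100" sorts before "80". A minimal sketch of the same filter done in Python with a numeric comparison follows; `is_good_match` is a hypothetical helper, and the field names and the "排名很好" phrase are taken from the query above.

```python
def is_good_match(record, min_score=80, phrase="排名很好"):
    """Return True if the record has a good evaluation and a high enough score."""
    try:
        # Convert the stored string to a number before comparing.
        score = int(record.get("综合竞争力得分"))
    except (TypeError, ValueError):
        return False  # missing or non-numeric score
    return phrase in record.get("匹配度", "") and score >= min_score
```

Applied to the documents returned by `job_info.find()`, this keeps a posting scored "100" and rejects one scored "9", which a string comparison would get the wrong way round.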

The applications went through.

Full code

    import json
    import os
    import re
    from time import sleep

    import requests
    from lxml import etree
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    chrome_options = Options()
    # chrome_options.add_argument('--headless')  # keep the window visible for login

    # Selenium 3 style arguments; the raw string keeps the backslashes of the Windows path literal.
    driver = webdriver.Chrome(chrome_options=chrome_options, executable_path=r'D:\python\chromedriver.exe')
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36"}

    # Open the job search page first so that cookies can later be added for this domain.
    driver.get("https://search.51job.com/list/020000,000000,0000,00,9,99,%2520,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=")

    def get_cookie():
        """Log in with an SMS code and save the session cookies to cookie.json."""
        driver.get("https://login.51job.com/login.php?loginway=1&lang=c&url=")
        sleep(2)
        phone = input("Enter phone number: ")
        driver.find_element_by_id("loginname").send_keys(phone)
        driver.find_element_by_id("btn7").click()  # request the SMS code
        sleep(1)
        code = input("Enter SMS code: ")
        driver.find_element_by_id("phonecode").send_keys(code)
        driver.find_element_by_id("login_btn").click()
        sleep(2)
        cookies = driver.get_cookies()
        with open("cookie.json", "w") as f:
            f.write(json.dumps(cookies))

    def get_job():
        """Search for a job keyword and return the results URL and page source."""
        driver.get("https://search.51job.com/list/020000,000000,0000,00,9,99,%2520,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=")
        sleep(2)
        job = input("Enter job keyword: ")
        driver.find_element_by_id("kwdselectid").send_keys(job)
        driver.find_element_by_xpath('//button[@class="p_but"]').click()
        sleep(2)  # give the results page time to load
        url = driver.current_url
        page = driver.page_source
        return url, page

    def close_driver():
        driver.close()

    def get_pages(url, page):
        """Return the URL of every results page, given the first page."""
        tree = etree.HTML(page)
        href = []
        # Pager text such as "共12页"; the first number in it is the page count.
        x = tree.xpath('//span[@class="td"]/text()')[0]
        total_page = int(re.findall(r"(\d+)", x)[0])
        for i in range(1, total_page + 1):
            # Replace the page number directly before ".html".
            href.append(re.sub(r"\d+\.html", f"{i}.html", url, count=1))
        return href

    def get_job_code(url):
        """Return the job IDs linked from one results page."""
        # Uses the module-level `session` and `headers`.
        r = session.get(url, headers=headers)
        tree = etree.HTML(r.text)
        divs = tree.xpath('//div[@class="el"]/p/span/a/@href')
        job = str(divs)
        # The job ID is the number right before ".html" in each posting link.
        job_id = re.findall(r"/(\d+)\.html", job)
        return job_id

    def get_info(job_id):
        """Scrape the competitiveness analysis page of one job into a dict."""
        href = f"https://i.51job.com/userset/bounce_window_redirect.php?jobid={job_id}&redirect_type=2"
        r = session.get(href, headers=headers)
        r.encoding = r.apparent_encoding
        tree = etree.HTML(r.text)
        # Match evaluation text.
        pingjia = tree.xpath('//div[@class="warn w1"]//text()')[0].strip()
        # Non-empty text nodes: job title first, then company.
        gongsi = [i.strip() for i in tree.xpath('//div[@class="lf"]//text()') if i.strip()]
        # Non-empty text nodes of the score list.
        fenshu = [i.strip() for i in tree.xpath('//ul[@class="rt"]//text()') if i.strip()]
        url = f"https://jobs.51job.com/shanghai/{job_id}.html?s=03&t=0"
        # fenshu[3] is the score label and fenshu[2] its value.
        return {"公司": gongsi[1], "职位": gongsi[0], "匹配度": pingjia, fenshu[3]: fenshu[2], "链接": url, "_id": job_id}

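    # Log in only when no saved cookies exist, then load them.
    if not os.path.exists("cookie.json"):
        get_cookie()
    with open("cookie.json", "r") as f:
        cookies = json.loads(f.read())

    # Copy the cookies into both the browser and the requests session.
    session = requests.Session()
    for cookie in cookies:
        driver.add_cookie(cookie)
        session.cookies.set(cookie['name'], cookie['value'])
    url, page = get_job()
    driver.close()

    # Collect the job IDs from every results page.
    code = []
    for i in get_pages(url, page):
        code.extend(get_job_code(i))

    import pymongo

    client = pymongo.MongoClient("localhost", 27017)
    db = client["job_he"]
    job_info = db["job_info"]
    for i in code:
        try:
            # Skip jobs that are already stored, so they are not requested again.
            if not job_info.find_one({"_id": i}):
                info = get_info(i)
                sleep(1)  # throttle requests
                job_info.insert_one(info)
                print(info)
                print("inserted")
        except Exception as e:
            # Report the job ID that failed and keep going.
            print(i, "failed:", e)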

Summary

That is all for this article. I hope it is a useful reference for your study or work. Thank you for supporting 脚本之家.
