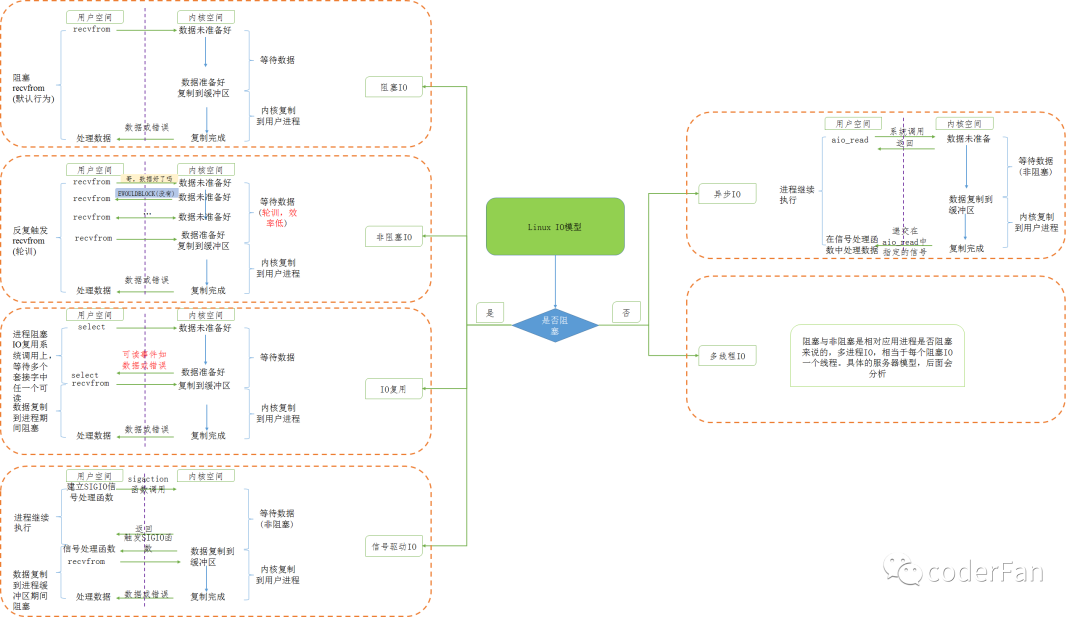

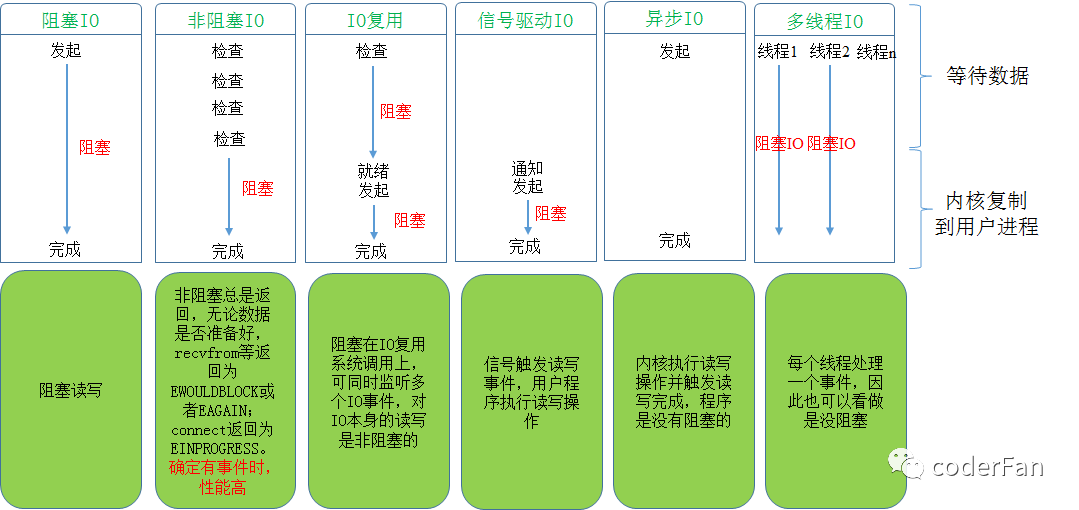

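Linux I/O models

First, let's be clear about one thing: which two phases make up an input operation on a socket?

Waiting for the data to be ready in the kernel (waiting for the data to arrive from the network and be copied into a kernel buffer)

Copying the data from the kernel buffer into the application process's buffer

If either phase blocks, we treat the model as synchronous I/O.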
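
To make the two phases concrete, here is a minimal sketch using a non-blocking socket. The helper names `set_nonblocking` and `try_read` and the return code `-2` are my own for illustration and do not come from any library. When the kernel has no data yet (phase 1 is not finished), `recv` fails with `EAGAIN` instead of blocking; once data is there, the call performs the copy into the user buffer (phase 2).

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Switch fd to non-blocking mode so that waiting for data (phase 1)
 * never puts the caller to sleep. Returns 0 on success, -1 on error. */
int set_nonblocking(int fd) {
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0) return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* Try to read once.
 * Returns the number of bytes copied into buf (phase 2 completed),
 * 0 on EOF, -2 if the kernel has no data yet (phase 1 not finished),
 * or -1 on any other error. The -2 code is a convention of this sketch. */
ssize_t try_read(int fd, char *buf, size_t len) {
    assert(buf != NULL && len > 0);
    ssize_t n = recv(fd, buf, len, 0);
    if (n < 0 && (errno == EAGAIN || errno == EWOULDBLOCK)) return -2;
    return n;
}
```

Note that even in non-blocking mode the copy in phase 2 still happens synchronously inside `recv`, which is why non-blocking I/O and I/O multiplexing are still counted as synchronous I/O.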

Don't feel like reading diagrams? Then let's look at a table instead.

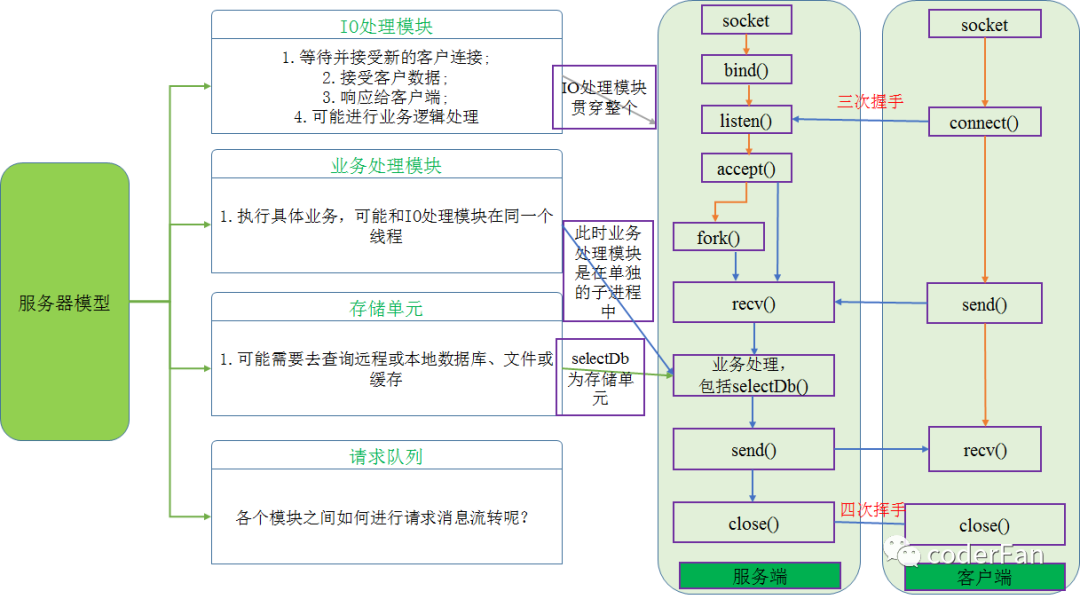

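Server design paradigms

Basic model

Whatever model a server uses, its basic components stay the same. The difference lies in how cleverly and efficiently they are combined.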

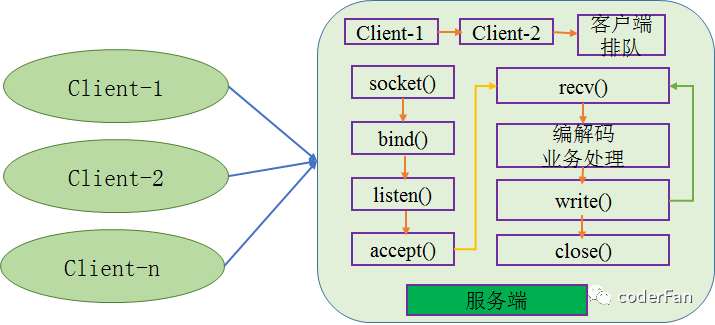

Traditional server design models

Iterative

Clients have to queue up, so this model is clearly not suitable for a busy server.

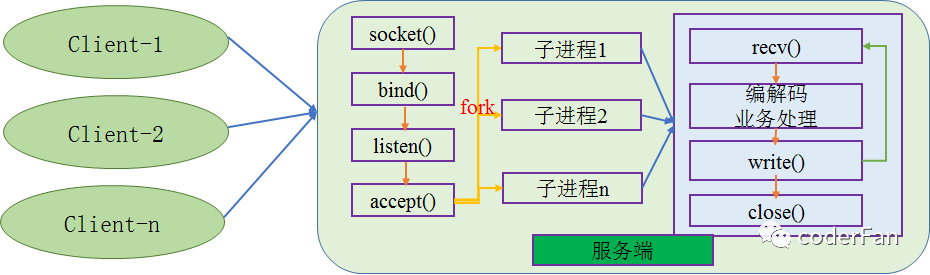

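One process per client

A process is forked for every client. The biggest problem is that this consumes a lot of resources when the number of clients is large.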
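
As a rough sketch of the idea (not the original listing, which did not survive), the function below forks one child for an already accepted connection. The names `serve_in_child` and `echo_until_eof` are hypothetical, and echoing bytes back simply stands in for real request handling.

```c
#include <assert.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Echo bytes back until the peer closes; a stand-in for real request handling. */
void echo_until_eof(int connfd) {
    char buf[512];
    ssize_t n;
    while ((n = read(connfd, buf, sizeof buf)) > 0) {
        ssize_t off = 0;
        while (off < n) {                       /* handle short writes */
            ssize_t w = write(connfd, buf + off, (size_t)(n - off));
            if (w <= 0) return;
            off += w;
        }
    }
}

/* Fork one child to serve connfd.
 * listenfd may be -1 if there is no listening socket to close in the child.
 * Returns the child's pid in the parent, or -1 if fork failed. */
pid_t serve_in_child(int listenfd, int connfd) {
    assert(connfd >= 0);
    pid_t pid = fork();
    if (pid == 0) {
        /* Child: it only needs the connected socket. */
        if (listenfd >= 0) close(listenfd);
        echo_until_eof(connfd);
        close(connfd);
        _exit(0);
    }
    /* Parent: drop its copy so the connection closes when the child is done. */
    if (pid > 0) close(connfd);
    return pid;
}
```

In a real server the parent would call this right after `accept` returns, and it would also need to reap finished children (for example with `waitpid` in a `SIGCHLD` handler) to avoid zombies.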

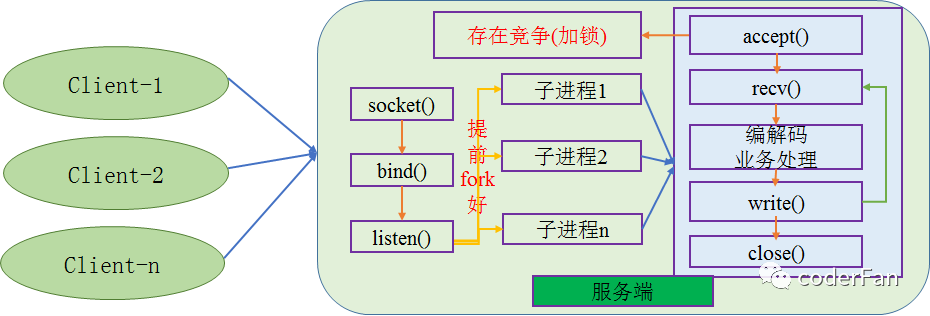

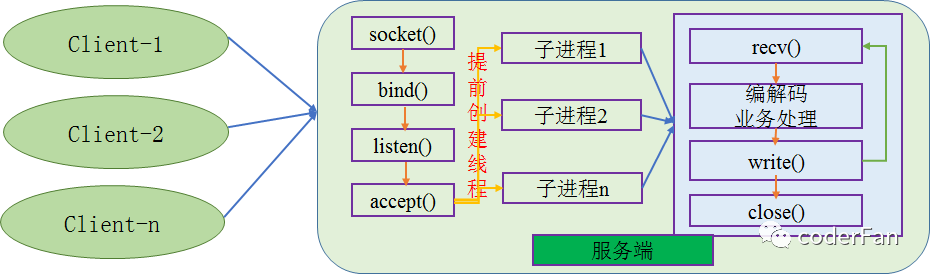

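One process per client (prefork model)

Introducing a pool avoids the cost of calling fork at the moment a client arrives. However, the number of child processes has to be estimated at startup, and because the model is still multi-process it consumes a lot of resources, so the achievable concurrency is limited.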

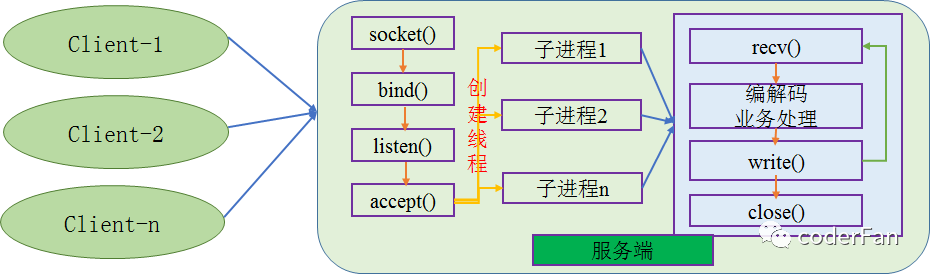

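One thread per client

Compared with the multi-process model, if the server host supports threads, we can use threads in place of processes. Threads consume fewer resources than processes and are good enough for typical workloads. But if a web server has to handle more than ten thousand concurrent connections, it may create 10,000 threads at the same time, and whether your server can bear that is another matter.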

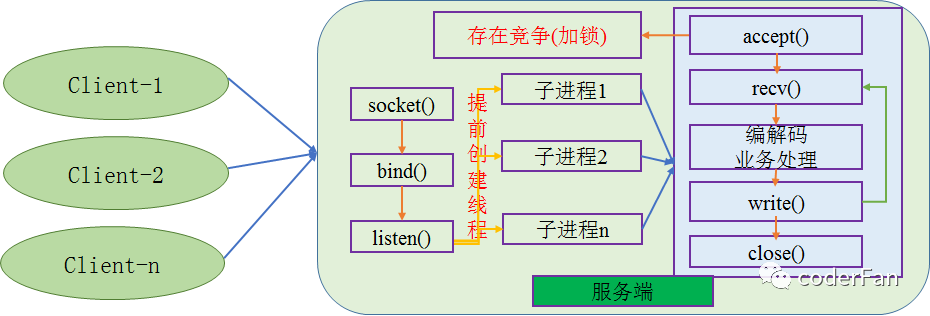

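One thread per client, with a pre-created thread pool

The main thread does all the accepting

This pattern avoids thread-safety concerns around accept. In practice, a single acceptor is enough.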

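Event-driven (reactor only)

The reactor design pattern handles service requests that are delivered concurrently to an application by one or more clients. Each service in the application may consist of several methods and is represented by a separate event handler, which is responsible for dispatching service-specific requests. Dispatching of event handlers is performed by an initiation dispatcher, which manages the registered event handlers. Demultiplexing of service requests is performed by a synchronous event demultiplexer. The pattern is also known as Dispatcher or Notifier. At its core is the operating system's I/O multiplexing interface (on Linux, the epoll_* family).

The basic flow is:

The main thread registers a read event for the socket in the epoll kernel event table.

The main thread calls epoll_wait and waits for data to become readable on the socket.

When the socket is readable, epoll_wait notifies the main thread, which puts the socket-readable event into the request queue.

A worker thread sleeping on the request queue is woken up. It reads the data from the socket, handles the client request, and then registers a write-ready event for the socket in the epoll kernel event table.

The main thread calls epoll_wait and waits for the socket to become writable.

When the socket is writable, epoll_wait notifies the main thread, which puts the socket-writable event into the request queue.

A worker thread sleeping on the request queue is woken up and writes the result of handling the client request to the socket.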

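Pros and cons

Pros

Responsive: the reactor is not blocked by any single synchronous operation;

Scalable: CPU resources can be put to use easily by increasing the number of reactor instances (e.g. multi reactor);

Reusable: the reactor itself is independent of the specific event-handling logic, which makes it easy to reuse.

Cons

When a reactor is shared, a long-running read or write delays that reactor's response to other events. In that case thread-per-connection may be worth considering.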

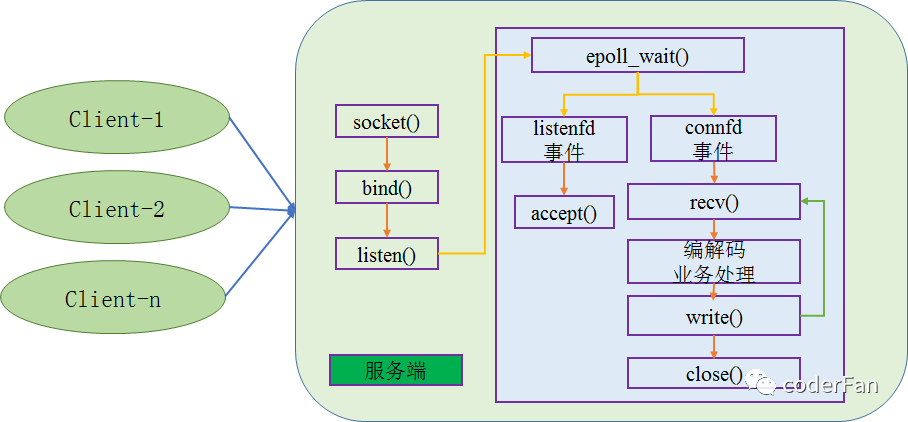

Single reactor, single thread

Thanks to the high performance of epoll, this is enough for typical workloads. Redis uses the single-threaded version of the reactor.
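
A single-threaded reactor can be sketched in a few dozen lines on top of epoll. This is only an illustration under my own assumptions: `reactor_t`, `handler_t`, `reactor_add`, `reactor_poll_once` and the sample callback `count_bytes` are hypothetical names, not Redis's API, and only read events are handled.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/epoll.h>
#include <sys/types.h>
#include <unistd.h>

typedef void (*event_cb)(int fd, void *arg);

/* One registered handler: an fd plus the callback to run when it is readable. */
typedef struct {
    int fd;
    event_cb on_readable;
    void *arg;
} handler_t;

typedef struct {
    int epfd;   /* the epoll instance acting as the event demultiplexer */
} reactor_t;

int reactor_init(reactor_t *r) {
    r->epfd = epoll_create1(0);
    return r->epfd < 0 ? -1 : 0;
}

/* Register interest in read events. h must outlive the registration. */
int reactor_add(reactor_t *r, handler_t *h) {
    assert(h != NULL && h->on_readable != NULL);
    struct epoll_event ev = {0};
    ev.events = EPOLLIN;
    ev.data.ptr = h;
    return epoll_ctl(r->epfd, EPOLL_CTL_ADD, h->fd, &ev);
}

/* Wait up to timeout_ms, then run the callback of every ready handler
 * on the calling thread. Returns the number of handlers run, -1 on error. */
int reactor_poll_once(reactor_t *r, int timeout_ms) {
    struct epoll_event events[64];
    int n = epoll_wait(r->epfd, events, 64, timeout_ms);
    for (int i = 0; i < n; i++) {
        handler_t *h = events[i].data.ptr;
        h->on_readable(h->fd, h->arg);
    }
    return n;
}

/* Sample callback: read what is available and add the byte count to *arg. */
void count_bytes(int fd, void *arg) {
    char buf[256];
    ssize_t n = read(fd, buf, sizeof buf);
    if (n > 0) *(int *)arg += (int)n;
}
```

A server's main loop would call `reactor_poll_once` repeatedly, with the listening socket registered under a callback that accepts connections and registers each new socket in turn. Because every callback runs on the same thread, a slow callback stalls all other connections.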

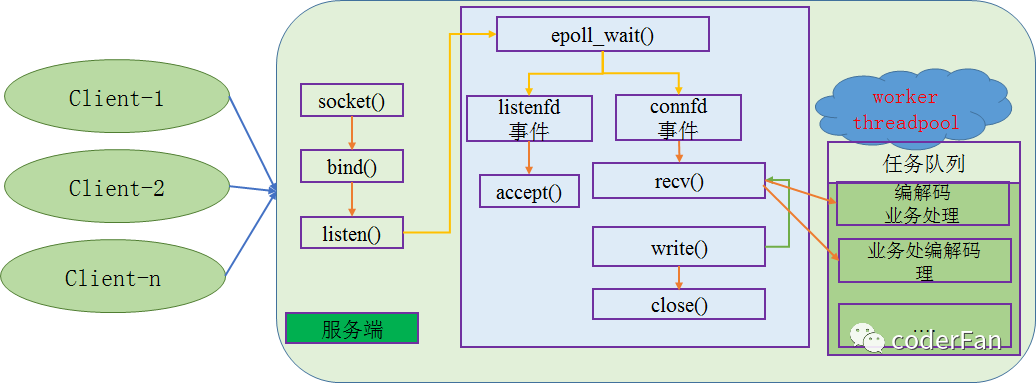

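Single reactor + worker thread pool

With a single-threaded reactor, business logic also runs on the I/O thread, so any time-consuming operation hurts concurrency. We therefore hand time-consuming operations to a worker thread pool so that they run asynchronously.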

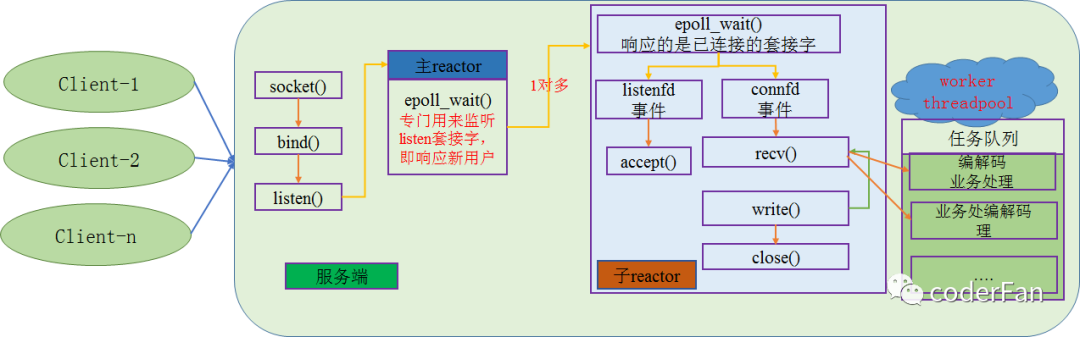

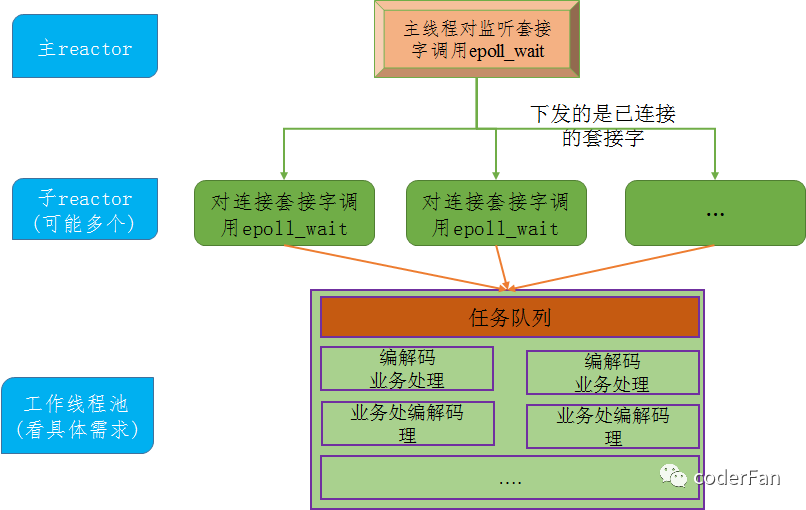

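multi reactor-1

In the analysis above we found that the single-threaded reactor is overworked. It plays both father (accepting new clients, i.e. handling events on the listening socket listenfd) and mother (handling data sent by clients and sending replies, i.e. handling the connected sockets). So why not simply split it up? Wouldn't that let it handle more concurrency?

A simplified version looks like this: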

| |

The spawn_thread function

| |

The event_loop_create function

| |

The main reactor blocks on the listening socket.

| |

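The main reactor blocks on the listening socket. When a new client arrives, it calls the accept API to create a connected socket and hands that socket to a sub-reactor according to some load-balancing algorithm.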

| |
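
The text does not say which load-balancing algorithm is used, so the sketch below assumes the simplest one, round-robin. All names (`sub_reactor_t`, `reactor_pool_t`, `pool_pick`) are hypothetical, and a sub-reactor is reduced to an id and a connection counter.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in for a sub-reactor; a real one would own an epoll fd and a thread. */
typedef struct {
    int id;
    int conn_count;   /* connections handed to this sub-reactor so far */
} sub_reactor_t;

typedef struct {
    sub_reactor_t *subs;
    size_t count;
    size_t next;      /* index of the sub-reactor that gets the next connection */
} reactor_pool_t;

/* Round-robin selection: each call returns the next sub-reactor in turn. */
sub_reactor_t *pool_pick(reactor_pool_t *p) {
    assert(p != NULL && p->count > 0);
    sub_reactor_t *s = &p->subs[p->next];
    p->next = (p->next + 1) % p->count;
    s->conn_count++;
    return s;
}
```

After `accept` returns, the main reactor would call `pool_pick` and pass the connected socket to the chosen sub-reactor. How the socket is handed over (for example a queue plus a wakeup through a pipe or eventfd) is a separate design decision that this sketch leaves out.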

Continuing from the above, let's look at the connection_create function.

| |

One thing to note: the business-logic thread pool in this model is optional and depends on the scenario.

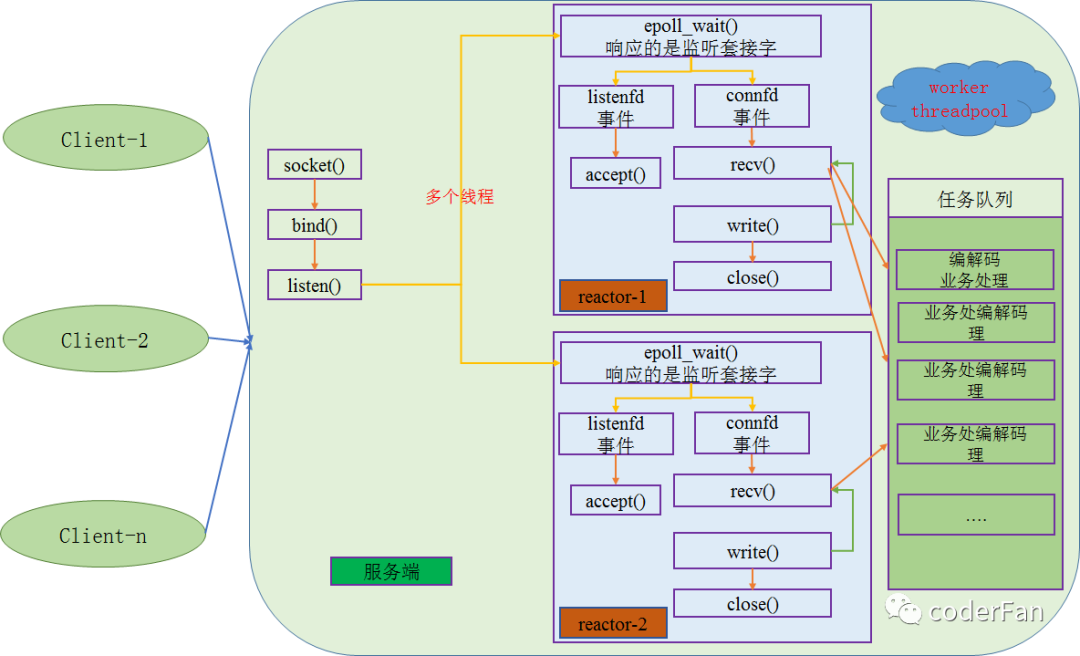

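multi reactor-2

Looking at multi reactor-1, what it does is pull the handling of listening-socket events out into a reactor of its own. Could we leave it in instead, and simply hand it to the thread pool like everything else?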

| |

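This approach is quite feasible, and I personally recommend it because it is convenient. You may worry about the thread safety of accept and the epoll functions, but the good news is that they are thread-safe.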

| |

Note, however, that epoll_wait may trigger the thundering herd effect.

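multi reactor-3

This version is not very interesting, because it only makes sense when the main reactor (the thread that handles the listening socket) cannot keep up. Good grief, how much concurrency would that take? My guess is that only something like Tomcat might need it, so I won't go into details here.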

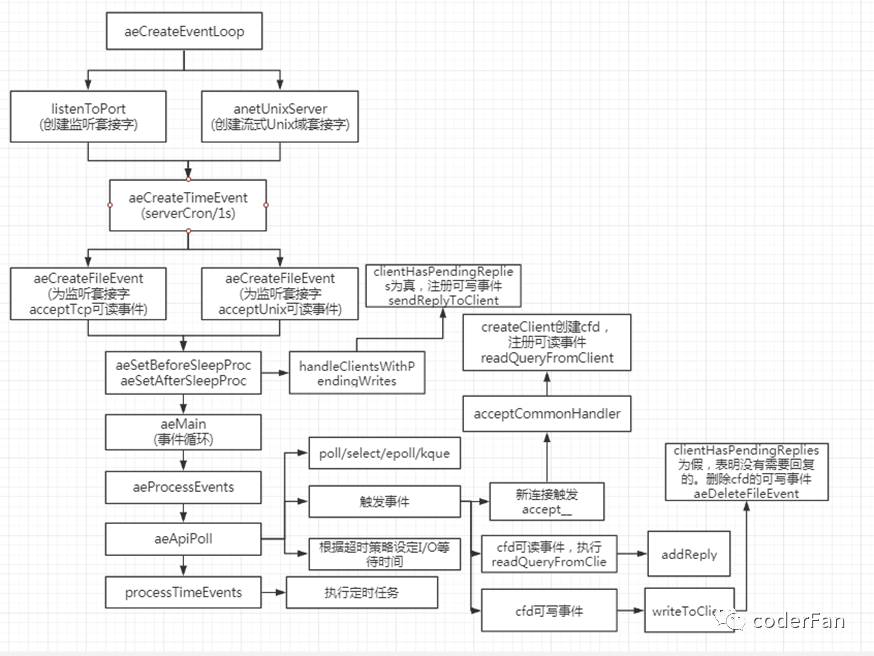

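Redis's network model

Redis uses a single-threaded reactor. In single-machine benchmarks its QPS can reach 100,000, so don't underestimate the single-threaded reactor. Choose carefully when picking a model: more complex does not mean better, and the one that fits is the best.

A network library mainly cares about three kinds of events: file events, timer events, and signals. In Redis, file events and timer events are added to the I/O multiplexer and managed together, while signals are handled asynchronously by signal handler functions.

Timer events: what Redis actually supports are periodic task events. After one runs it is not deleted but re-inserted into the linked list.

Timers are managed in a linked list, and new timer tasks are inserted at the head of the list.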

| |

Timer events are processed as follows:

| |
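
Since the original listings are missing here, the following is a minimal sketch of a linked-list timer with head insertion and periodic re-arming. It is not Redis's code: `timer_list`, `timer_add`, `timer_process` and `TIMER_NOMORE` are hypothetical names, the current time is passed in as a parameter to keep the sketch deterministic, and a periodic timer is re-armed in place rather than unlinked and re-inserted.

```c
#include <assert.h>
#include <stdlib.h>

#define TIMER_NOMORE (-1)   /* callback return value: do not reschedule */

/* The callback returns the delay in ms until it should fire again,
 * or TIMER_NOMORE for a one-shot timer. */
typedef long long (*timer_cb)(void *arg);

typedef struct timer_node {
    long long when_ms;          /* absolute time at which the timer is due */
    timer_cb cb;
    void *arg;
    struct timer_node *next;
} timer_node;

typedef struct {
    timer_node *head;
} timer_list;

/* Insert a new timer at the head of the list: O(1), list stays unsorted. */
timer_node *timer_add(timer_list *tl, long long now_ms, long long delay_ms,
                      timer_cb cb, void *arg) {
    assert(cb != NULL);
    timer_node *t = malloc(sizeof *t);
    if (t == NULL) return NULL;
    t->when_ms = now_ms + delay_ms;
    t->cb = cb;
    t->arg = arg;
    t->next = tl->head;
    tl->head = t;
    return t;
}

/* Walk the whole list and fire every timer that is due at now_ms.
 * Returns the number of callbacks run. */
int timer_process(timer_list *tl, long long now_ms) {
    int fired = 0;
    timer_node **pp = &tl->head;
    while (*pp != NULL) {
        timer_node *t = *pp;
        if (t->when_ms > now_ms) { pp = &t->next; continue; }
        long long again = t->cb(t->arg);
        fired++;
        if (again == TIMER_NOMORE) {
            *pp = t->next;                 /* one-shot: unlink and free */
            free(t);
        } else {
            t->when_ms = now_ms + again;   /* periodic: re-arm */
            pp = &t->next;
        }
    }
    return fired;
}

/* Sample callbacks: both count invocations through arg. */
long long tick_periodic(void *arg) { ++*(int *)arg; return 100; }
long long tick_once(void *arg)     { ++*(int *)arg; return TIMER_NOMORE; }
```

With an unsorted list, insertion is constant time but each processing pass has to scan every timer. That trade-off is reasonable only while the number of timers stays small.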

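Signals: the signal handler sigShutdownHandler is registered in initServer. The handler mainly sets shutdown_asap to 1. If the flag was already 1, the process exits directly; otherwise the cleanup work is carried out by prepareForShutdown, called from serverCron.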

| |

The sigShutdownHandler function itself:

| |

References

UNIX Network Programming, Volume 1

Linux高性能服务器编程 (Linux High-Performance Server Programming)

Scalable IO in Java
