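The goal of this article is to learn OpenStack, and the best way to learn OpenStack is to install it by hand, step by step, rather than mindlessly running packstack or devstack. Here I install it manually on a Mac. Networking is the most complex part of OpenStack, so we start with the most basic network setup.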

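When the installation is done, the Mac should be able to ping the VM's address directly. We start by connecting the two at Layer 2, so we need to build a Layer 2 network from the Mac to the VM.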

MAC -----linux-bridge----ovs-bridge ---VM

Two bridges sit between the Mac and the VM: a Linux bridge and an OVS bridge. On the Mac, VirtualBox runs the Linux host, and CentOS is installed on it as the OpenStack host.

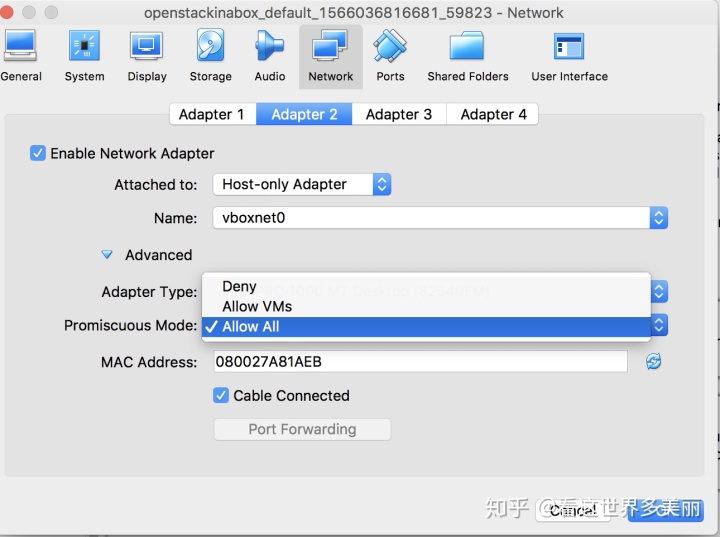

Only VirtualBox's host-only interface is used, so the VMs in OpenStack can communicate only with the Mac.

- Environment preparation

VirtualBox and Vagrant must be installed on the Mac; the installation steps are omitted here.

#vagrant plugin list

vagrant-host

2. Configure the Vagrantfile and start the Linux host.

For more on using Vagrant, see:

https://linuxacademy.com/blog/linux/vagrant-cheat-sheet-get-started-with-vagrant/

# cat Vagrantfile

Vagrant.configure("2") do |config|

config.vm.box = "centos/7"

config.vm.hostname = "rocky"

config.vm.network "private_network", ip: "192.168.33.10"

config.vm.network "public_network"

end

# vagrant up

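Because I did not specify which interface "public_network" should bridge to, vagrant up asks which of the Mac's network interfaces to bridge. I chose wifi0, so eth2 gets an address on the same subnet as the Mac.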

#vagrant ssh default

Last login: Sat Aug 17 10:14:21 2019 from 10.0.2.2

[vagrant@rocky ~]$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:c9:c7:04 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 brd 10.0.2.255 scope global noprefixroute dynamic eth0

valid_lft 86299sec preferred_lft 86299sec

inet6 fe80::5054:ff:fec9:c704/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:a8:1a:eb brd ff:ff:ff:ff:ff:ff

inet 192.168.33.10/24 brd 192.168.33.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fea8:1aeb/64 scope link

valid_lft forever preferred_lft forever

4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 08:00:27:aa:21:cc brd ff:ff:ff:ff:ff:ff

inet 192.168.130.173/24 brd 192.168.130.255 scope global noprefixroute dynamic eth2

valid_lft 43160sec preferred_lft 43160sec

inet6 fe80::a00:27ff:feaa:21cc/64 scope link

valid_lft forever preferred_lft forever

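[vagrant@rocky ~]$

As you can see, the VM rocky has three network interfaces besides lo:

eth0: the NAT interface behind the host's en0. The VM reaches the Internet through the Mac's en0 via eth0. Its address defaults to 10.0.2.15/24.

eth1: attached to the host-only network vboxnet0, with address 192.168.33.10/24. On the Mac side, vboxnet0 has MAC address 0a:00:27:00:00:00 and IP 192.168.33.1 by default, as the VBoxManage output below shows.

eth2: bridged to the Mac's en0: Wi-Fi (AirPort). Its IP is on the same subnet as the Mac's en0, so the two can reach each other.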
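The rest of this article repeatedly extracts eth2's address with `ifconfig eth2 | grep 'inet ' | awk '{print $2}'`. As a rough illustration of what that pipeline does, here is a minimal Python sketch that parses `ip a` output in the format shown above and returns an interface's first IPv4 address. The function name `ipv4_of` and the `SAMPLE` text are illustrative only. On the host you could feed it the output of `ip -4 addr show eth2`.

```python
import re

# Interface header lines look like: "4: eth2: <BROADCAST,...> mtu 1500 ..."
HEADER = re.compile(r"^\d+:\s+([^:@\s]+)")
# IPv4 address lines look like: "inet 192.168.130.173/24 brd ... eth2"
INET = re.compile(r"^\s*inet\s+(\d+\.\d+\.\d+\.\d+)/(\d+)")


def ipv4_of(ip_a_output, ifname):
    """Return (address, prefix_length) of the first IPv4 address on ifname, or None."""
    current = None
    for line in ip_a_output.splitlines():
        header = HEADER.match(line)
        if header:
            current = header.group(1)  # remember which interface block we are in
            continue
        inet = INET.match(line)  # "inet6" lines do not match: "inet" must be followed by whitespace
        if inet and current == ifname:
            return inet.group(1), int(inet.group(2))
    return None


SAMPLE = """\
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 08:00:27:a8:1a:eb brd ff:ff:ff:ff:ff:ff
    inet 192.168.33.10/24 brd 192.168.33.255 scope global noprefixroute eth1
4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 08:00:27:aa:21:cc brd ff:ff:ff:ff:ff:ff
    inet 192.168.130.173/24 brd 192.168.130.255 scope global noprefixroute dynamic eth2
    inet6 fe80::a00:27ff:feaa:21cc/64 scope link
"""

print(ipv4_of(SAMPLE, "eth2"))  # ('192.168.130.173', 24)
```

Unlike the grep/awk pipeline, this keeps the prefix length, which matters later when an address is added to vboxnet0.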

# VBoxManage list hostonlyifs

Name: vboxnet0

GUID: 786f6276-656e-4074-8000-0a0027000000

DHCP: Disabled

IPAddress: 192.168.33.1

NetworkMask: 255.255.255.0

IPV6Address:

IPV6NetworkMaskPrefixLength: 0

HardwareAddress: 0a:00:27:00:00:00

MediumType: Ethernet

Wireless: No

Status: Up

VBoxNetworkName: HostInterfaceNetworking-vboxnet0

Next, shut down rocky, because we need to change its network adapter settings.

# vagrant halt

==> default: Attempting graceful shutdown of VM...

After making the changes, start it again:

# vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

# vagrant ssh default

Last login: Sat Aug 17 10:15:41 2019 from 10.0.2.2

[vagrant@rocky ~]$

#3 Install OpenStack. The release we use is Rocky.

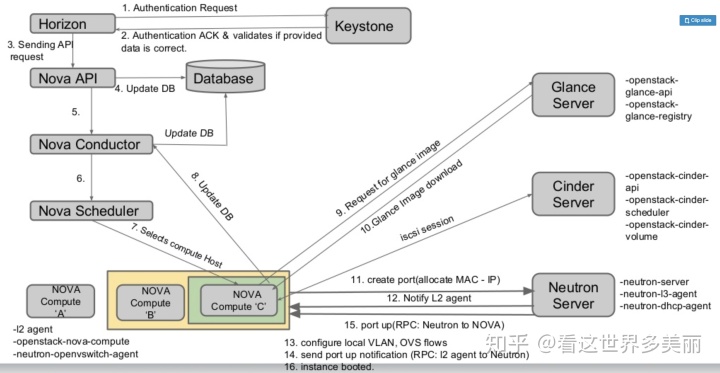

简要说下几个必须安装的组件。

基础服务,

mariadb:配置保存

memcached:加速

rabbitmq:消息通讯

openstack几大件

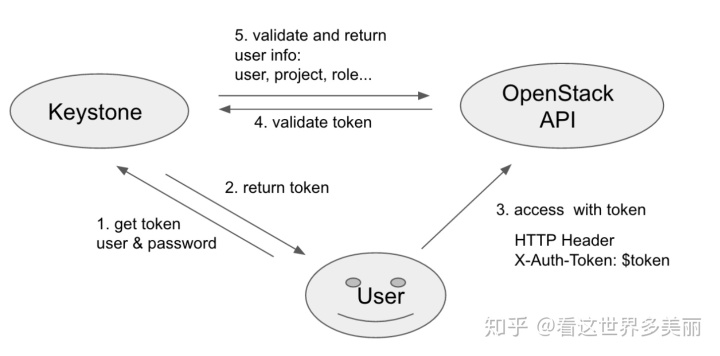

- Keystone , 提供token,验证token

- glance, 安装的镜像服务

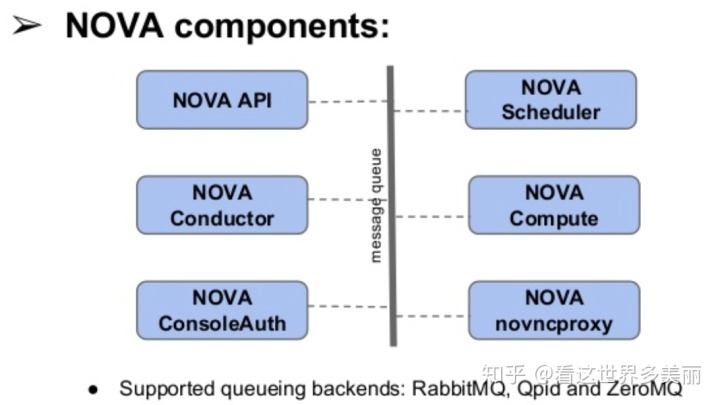

- nova, 计算服务,负责VM的生命周期管理。

NOVA API: 用户接口

NOVAS SCHEDULER: 通过FILTER为VM指定使用的计算节点

NOVA-Conductor:提供数据库访问支持

NOVA-ConsoleAuth/novncproxy:提供VNC形式的console访问支持

- cinder, 块存储服务

- neutron,网络服务,分配port,vlan等

- horizon, dashboard 界面,可以不装

#3.1

Update the yum repositories

sudo su -

yum -y update

yum -y install centos-release-openstack-rocky

sed -i -e "s/enabled=1/enabled=0/g" /etc/yum.repos.d/CentOS-OpenStack-rocky.repo

yum -y update

Install MariaDB, edit the my.cnf configuration file, start the service, check that it is running, and enable it at boot:

yum --enablerepo=centos-openstack-rocky -y install mariadb-server

cp /etc/my.cnf /etc/my.cnf.ori

sed '/^\[mysqld\]/a character-set-server=utf8' /etc/my.cnf.ori > /etc/my.cnf

systemctl restart mariadb

if systemctl status mariadb | grep running; then echo 'ok'; fi;

systemctl enable mariadb

Then initialize the database with mysql_secure_installation and set the root password; for the other prompts either y or n is fine. You can log in with mysql -u root -p to check. Next, open the firewall for MySQL. If firewalld is already disabled, the firewall-cmd steps are not needed.

mysql_secure_installation

firewall-cmd --add-service=mysql --permanent

firewall-cmd --reload

Install RabbitMQ and memcached, then add a RabbitMQ user and grant it permissions:

yum --enablerepo=centos-openstack-rocky -y install rabbitmq-server memcached

systemctl restart rabbitmq-server memcached

systemctl enable rabbitmq-server memcached

rabbitmqctl add_user openstack password

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

firewall-cmd --add-port={11211/tcp,5672/tcp} --permanent

firewall-cmd --reload

# That covers the basic services. Next we install the identity component, Keystone.

#4.1 First, add a keystone account to the MySQL database

echo "

create database keystone;

grant all privileges on keystone.* to keystone@'localhost' identified by 'password';

grant all privileges on keystone.* to keystone@'%' identified by 'password';

flush privileges;

exit

" > keystone.sql

mysql -e "source keystone.sql" -p
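The same create-database-and-grant script is repeated later for Glance and Nova. Here is a small Python sketch that generates it. It makes one rule explicit: the user in the GRANT must be the same user that appears in the service's `connection = mysql+pymysql://user:password@host/db` line. `grant_sql` is a hypothetical helper, and `password` is the placeholder password used throughout this article.

```python
def grant_sql(databases, user, password):
    """Build a create/grant script giving `user` full rights on each database (MariaDB syntax)."""
    lines = []
    for db in databases:
        lines.append(f"create database {db};")
        for host in ("localhost", "%"):  # local socket logins and logins from any host
            lines.append(
                f"grant all privileges on {db}.* to {user}@'{host}' "
                f"identified by '{password}';"
            )
    lines.append("flush privileges;")
    return "\n".join(lines) + "\n"


keystone_sql = grant_sql(["keystone"], "keystone", "password")
# nova.conf connects to all of Nova's databases as the single user "nova"
nova_sql = grant_sql(["nova", "nova_api", "nova_placement", "nova_cell0"], "nova", "password")
print(keystone_sql)
```

The output can be written to a file and loaded the same way as above, with `mysql -e "source file.sql" -p`.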

Install Keystone, the OpenStack client, the HTTP server, and related packages:

yum --enablerepo=centos-openstack-rocky,epel -y install openstack-keystone openstack-utils python-openstackclient httpd mod_wsgi

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

sed -i '/^$/d' /etc/keystone/keystone.conf

sed -i '/^#/d' /etc/keystone/keystone.conf

sed -i "/^\[cache\]/a memcache_servers = $ip:11211" /etc/keystone/keystone.conf

if grep -q '^\[database\]' /etc/keystone/keystone.conf; then sed -i "/^\[database\]/a connection = mysql+pymysql://keystone:password@$ip/keystone" /etc/keystone/keystone.conf;

else printf "[database]\nconnection = mysql+pymysql://keystone:password@$ip/keystone\n" >> /etc/keystone/keystone.conf;

fi

if grep -q '^\[token\]' /etc/keystone/keystone.conf; then sed -i "/^\[token\]/a provider = fernet" /etc/keystone/keystone.conf;

else printf "[token]\nprovider = fernet\n" >> /etc/keystone/keystone.conf;

fi
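The sed/grep edits above append lines right after a section header. For comparison, here is a minimal Python sketch of the same idea using the standard-library `configparser`. It sets an option without duplicating it and creates the section if it is missing. It assumes the file has already been stripped of comments, as the `sed '/^#/d'` step does, and contains no repeated keys, so oslo.config files that rely on multi-valued options would not survive a round trip through it. `set_option` is an illustrative name.

```python
import configparser
import io


def set_option(conf_text, section, key, value):
    """Return conf_text with [section] key = value set, adding the section if needed."""
    # interpolation=None keeps '$' and '%' literal; oslo.config does its own substitution
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # preserve option-name case
    parser.read_string(conf_text)
    if section != parser.default_section and not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, key, value)  # overwrites an existing value instead of duplicating it
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


ip = "192.168.130.173"  # eth2 address from the ip a output above
conf = "[DEFAULT]\n[cache]\n[database]\n[token]\n"
conf = set_option(conf, "cache", "memcache_servers", f"{ip}:11211")
conf = set_option(conf, "database", "connection", f"mysql+pymysql://keystone:password@{ip}/keystone")
conf = set_option(conf, "token", "provider", "fernet")
print(conf)
```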

su -s /bin/bash keystone -c "keystone-manage db_sync"

# initialize keys

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

export controller=$ip

keystone-manage bootstrap --bootstrap-password adminpassword \
--bootstrap-admin-url http://$controller:5000/v3/ \
--bootstrap-internal-url http://$controller:5000/v3/ \
--bootstrap-public-url http://$controller:5000/v3/ \
--bootstrap-region-id RegionOne

setsebool -P httpd_use_openstack on

setsebool -P httpd_can_network_connect on

setsebool -P httpd_can_network_connect_db on

firewall-cmd --add-port=5000/tcp --permanent

firewall-cmd --reload

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

systemctl enable httpd

systemctl start httpd

# Create and load the environment variables

echo "

export ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=adminpassword

export OS_AUTH_URL=http://$ip:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

" > ~/keystonerc

chmod 600 ~/keystonerc

source ~/keystonerc
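The variables in `~/keystonerc` are what `openstack` uses to obtain a token from Keystone. As a rough sketch, not the client's actual code, this builds the Identity API v3 password-authentication request those variables map to. It is a JSON body POSTed to `$OS_AUTH_URL/auth/tokens`, and the token comes back in the `X-Subject-Token` response header. Only the request is built here; nothing is sent. `token_request` is an illustrative name.

```python
import json
import os


def token_request(env=None):
    """Build (url, json_body) for a Keystone v3 password-auth, project-scoped token request."""
    env = os.environ if env is None else env
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": env["OS_USERNAME"],
                        "domain": {"name": env["OS_USER_DOMAIN_NAME"]},
                        "password": env["OS_PASSWORD"],
                    }
                },
            },
            "scope": {
                "project": {
                    "name": env["OS_PROJECT_NAME"],
                    "domain": {"name": env["OS_PROJECT_DOMAIN_NAME"]},
                }
            },
        }
    }
    url = env["OS_AUTH_URL"].rstrip("/") + "/auth/tokens"
    return url, json.dumps(body)


# Values as written to ~/keystonerc above (IP taken from the ip a output)
admin_env = {
    "OS_AUTH_URL": "http://192.168.130.173:5000/v3",
    "OS_USERNAME": "admin",
    "OS_PASSWORD": "adminpassword",
    "OS_PROJECT_NAME": "admin",
    "OS_USER_DOMAIN_NAME": "default",
    "OS_PROJECT_DOMAIN_NAME": "default",
}
url, body = token_request(admin_env)
print(url)  # http://192.168.130.173:5000/v3/auth/tokens
```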

echo "source ~/keystonerc" >> ~/.bash_profile

#4.2 Install Glance

Steps:

- Create the OpenStack service user glance

- Give the user glance the admin role in the project service

- Create the glance service, of type image

- Create glance's service endpoints: public, internal, and admin
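Every service registers the same three endpoint interfaces. Here is a small Python sketch that generates those command lines, assuming the URL is the same for all three interfaces as in this single-host setup. It also shows why a URL containing `%(tenant_id)s`, like Nova's below, needs quoting: `shlex.quote` wraps it in single quotes because `(` is special to the shell. `endpoint_commands` is a hypothetical helper, not part of python-openstackclient.

```python
import shlex

INTERFACES = ("public", "internal", "admin")


def endpoint_commands(service_type, url, region="RegionOne"):
    """Return the three 'openstack endpoint create' commands (public/internal/admin)."""
    return [
        " ".join([
            "openstack", "endpoint", "create", "--region", shlex.quote(region),
            shlex.quote(service_type), iface, shlex.quote(url),
        ])
        for iface in INTERFACES
    ]


controller = "192.168.130.173"
for cmd in endpoint_commands("image", f"http://{controller}:9292"):
    print(cmd)
# A URL with shell metacharacters such as "(" comes back wrapped in single quotes
for cmd in endpoint_commands("compute", f"http://{controller}:8774/v2.1/%(tenant_id)s"):
    print(cmd)
```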

# create service project

openstack project create --domain default --description "Service Project" service

openstack user create --domain default --project service --password servicepassword glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image service" image

export controller=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

openstack endpoint create --region RegionOne image public http://$controller:9292

openstack endpoint create --region RegionOne image internal http://$controller:9292

openstack endpoint create --region RegionOne image admin http://$controller:9292

# Create the glance database

echo "

create database glance;

grant all privileges on glance.* to glance@'localhost' identified by 'password';

grant all privileges on glance.* to glance@'%' identified by 'password';

flush privileges;

exit

" > glance.sql

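mysql -e "source glance.sql" -p

# Install Glance and create its configuration files. The configuration covers the listening address, the image storage location, the database connection, and Keystone authentication.

yum --enablerepo=centos-openstack-rocky,epel -y install openstack-glance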

mv /etc/glance/glance-api.conf /etc/glance/glance-api.conf.org

mv /etc/glance/glance-registry.conf /etc/glance/glance-registry.conf.org

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

echo "

# create new

[DEFAULT]

bind_host = 0.0.0.0

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[database]

# MariaDB connection info

connection = mysql+pymysql://glance:password@$ip/glance

# keystone auth info

[keystone_authtoken]

www_authenticate_uri = http://$ip:5000

auth_url = http://$ip:5000

memcached_servers = $ip:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = servicepassword

[paste_deploy]

flavor = keystone

" > /etc/glance/glance-api.conf

echo "

# create new

[DEFAULT]

bind_host = 0.0.0.0

[database]

# MariaDB connection info

connection = mysql+pymysql://glance:password@$ip/glance

# keystone auth info

[keystone_authtoken]

www_authenticate_uri = http://$ip:5000

auth_url = http://$ip:5000

memcached_servers = $ip:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = servicepassword

[paste_deploy]

flavor = keystone

" > /etc/glance/glance-registry.conf

chmod 640 /etc/glance/glance-api.conf /etc/glance/glance-registry.conf

chown root:glance /etc/glance/glance-api.conf /etc/glance/glance-registry.conf

su -s /bin/bash glance -c "glance-manage db_sync"

systemctl start openstack-glance-api openstack-glance-registry

systemctl enable openstack-glance-api openstack-glance-registry

setsebool -P glance_api_can_network on

firewall-cmd --add-port={9191/tcp,9292/tcp} --permanent

firewall-cmd --reload

# With the Glance service installed, we can upload the images we need into it. Here we add a very small Linux image, cirros.

cd /var/lib/glance/images

curl -O http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

openstack image create "cirros" --file /var/lib/glance/images/cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public
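The upload declares `--disk-format qcow2`. If the download were truncated or turned out to be an HTML error page, Glance would likely still accept it as declared, and the VM would only fail later at boot. A quick pre-upload check is to look at the file header: qcow2 images start with the magic bytes `QFI\xfb`, followed by a big-endian 32-bit version number. This is a minimal sketch of that check. `qemu-img info`, from the qemu-img package, reports the same information more thoroughly.

```python
import struct

QCOW2_MAGIC = b"QFI\xfb"  # every qcow2 file begins with these four bytes


def qcow2_version(header):
    """Return the qcow2 version from the first 8 bytes of a file, or None if it is not qcow2."""
    if len(header) < 8 or header[:4] != QCOW2_MAGIC:
        return None
    return struct.unpack(">I", header[4:8])[0]  # big-endian 32-bit version field


def file_qcow2_version(path):
    """Read the header of the image at path and return its qcow2 version (or None)."""
    with open(path, "rb") as f:
        return qcow2_version(f.read(8))


# On the host, for example:
# file_qcow2_version("/var/lib/glance/images/cirros-0.3.4-x86_64-disk.img")
```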


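# Next we install the most important part, the compute node. Here it runs on the same host; in a real deployment it should run on separate nodes, because that is where all the VMs are deployed.

# Installation steps

- Install the hypervisor

- Create Nova's databases, accounts, roles, and endpoints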

yum -y install qemu-kvm libvirt virt-install bridge-utils

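#qemu provides the emulated hardware

#kvm provides hardware acceleration

#libvirt is a unified API for managing different hypervisors

#virt-install is a command-line tool built on libvirt

#bridge-utils provides tools (brctl) for inspecting Linux bridges, not OVS

#You can check with lsmod | grep kvm whether KVM is available. Because I am installing on a Mac, there is no KVM support, so we use plain QEMU, which is slower.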
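`lsmod | grep kvm` shows only whether the KVM modules are loaded. Another common check is whether the CPU exposes hardware virtualization at all, through the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below maps that flag to the `[libvirt] virt_type` value Nova needs. It is an illustration, not what Nova itself does. Without either flag, `qemu` is the workable choice, as this article later finds out. A present flag alone does not guarantee that `/dev/kvm` is usable.

```python
def hw_virt_flags(cpuinfo_text):
    """Return the hardware-virtualization flags (vmx = Intel VT-x, svm = AMD-V) listed in cpuinfo."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, flags = line.partition(":")
            found |= {"vmx", "svm"} & set(flags.split())
    return found


def suggested_virt_type(cpuinfo_text):
    """Suggest a nova [libvirt] virt_type: 'kvm' when VT-x/AMD-V is visible, otherwise 'qemu'."""
    return "kvm" if hw_virt_flags(cpuinfo_text) else "qemu"


# On the host you would pass open("/proc/cpuinfo").read(); here is a made-up guest sample
sample = "processor\t: 0\nflags\t\t: fpu vme de pse tsc msr pae sse2 hypervisor\n"
print(suggested_virt_type(sample))  # qemu
```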

systemctl start libvirtd

systemctl enable libvirtd

In the project service of the default domain, create the users nova and placement and give them the admin role:

openstack user create --domain default --project service --password servicepassword nova

openstack role add --project service --user nova admin

openstack user create --domain default --project service --password servicepassword placement

openstack role add --project service --user placement admin

openstack service create --name nova --description "OpenStack Compute service" compute

openstack service create --name placement --description "OpenStack Compute Placement service" placement

export ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

export controller=$ip

openstack endpoint create --region RegionOne compute public "http://$controller:8774/v2.1/%(tenant_id)s"

openstack endpoint create --region RegionOne compute internal "http://$controller:8774/v2.1/%(tenant_id)s"

openstack endpoint create --region RegionOne compute admin "http://$controller:8774/v2.1/%(tenant_id)s"

openstack endpoint create --region RegionOne placement public http://$controller:8778

openstack endpoint create --region RegionOne placement internal http://$controller:8778

openstack endpoint create --region RegionOne placement admin http://$controller:8778

echo "

create database nova;

grant all privileges on nova.* to nova@'localhost' identified by 'password';

grant all privileges on nova.* to nova@'%' identified by 'password';

flush privileges;

exit

" > nova.sql

echo "

create database nova_api;

grant all privileges on nova_api.* to nova@'localhost' identified by 'password';

grant all privileges on nova_api.* to nova@'%' identified by 'password';

flush privileges;

exit

" > nova_api.sql

echo "

create database nova_placement;

grant all privileges on nova_placement.* to nova@'localhost' identified by 'password';

grant all privileges on nova_placement.* to nova@'%' identified by 'password';

flush privileges;

exit

" > nova_placement.sql

echo "

create database nova_cell0;

grant all privileges on nova_cell0.* to nova@'localhost' identified by 'password';

grant all privileges on nova_cell0.* to nova@'%' identified by 'password';

flush privileges;

exit

" > nova_cell0.sql

mysql -e "source nova.sql" -p

mysql -e "source nova_api.sql" -p

mysql -e "source nova_placement.sql" -p

mysql -e "source nova_cell0.sql" -p

yum --enablerepo=centos-openstack-rocky,epel -y install openstack-nova

mv /etc/nova/nova.conf /etc/nova/nova.conf.org

# you could use printf instead of echo

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

echo "

# create new

[DEFAULT]

# define own IP

my_ip = $ip

state_path = /var/lib/nova

enabled_apis = osapi_compute,metadata

log_dir = /var/log/nova

# RabbitMQ connection info

transport_url = rabbit://openstack:password@$ip

[api]

auth_strategy = keystone

# Glance connection info

[glance]

api_servers = http://$ip:9292

[oslo_concurrency]

lock_path = \$state_path/tmp

# MariaDB connection info

[api_database]

connection = mysql+pymysql://nova:password@$ip/nova_api

[database]

connection = mysql+pymysql://nova:password@$ip/nova

# Keystone auth info

[keystone_authtoken]

www_authenticate_uri = http://$ip:5000

auth_url = http://$ip:5000

memcached_servers = $ip:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = servicepassword

[placement]

auth_url = http://$ip:5000

os_region_name = RegionOne

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = placement

password = servicepassword

[placement_database]

connection = mysql+pymysql://nova:password@$ip/nova_placement

[wsgi]

api_paste_config = /etc/nova/api-paste.ini

" > /etc/nova/nova.conf

chmod 640 /etc/nova/nova.conf

chgrp nova /etc/nova/nova.conf
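Each `connection = mysql+pymysql://user:password@host/db` line works only if that exact user was granted rights on that database. Suppose nova_api were granted to a user called nova_api while nova.conf connects as nova. Then `nova-manage api_db sync` would fail with an access-denied error. Here is a small Python sketch, using only `urllib.parse`, that cross-checks the connection URLs against the (user, database) pairs from the GRANT statements. The helper names are illustrative.

```python
from urllib.parse import urlsplit


def db_account(url):
    """Split e.g. mysql+pymysql://nova:password@host/nova_api into (user, host, database)."""
    parts = urlsplit(url)
    return parts.username, parts.hostname, parts.path.lstrip("/")


def missing_grants(connections, grants):
    """Return the connection URLs whose (user, database) pair has no matching GRANT."""
    missing = []
    for url in connections:
        user, _host, database = db_account(url)
        if (user, database) not in grants:
            missing.append(url)
    return missing


ip = "192.168.130.173"
nova_connections = [
    f"mysql+pymysql://nova:password@{ip}/nova",
    f"mysql+pymysql://nova:password@{ip}/nova_api",
    f"mysql+pymysql://nova:password@{ip}/nova_placement",
]
grants = {("nova", "nova"), ("nova", "nova_api"), ("nova", "nova_placement"), ("nova", "nova_cell0")}
print(missing_grants(nova_connections, grants))  # []
```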

if ! grep -q '<Directory /usr/bin>' /etc/httpd/conf.d/00-nova-placement-api.conf; then

cat >> /etc/httpd/conf.d/00-nova-placement-api.conf << 'EOL'
<Directory /usr/bin>
Require all granted
</Directory>
EOL

fi

yum --enablerepo=centos-openstack-rocky -y install openstack-selinux

semanage port -a -t http_port_t -p tcp 8778

firewall-cmd --add-port={6080/tcp,6081/tcp,6082/tcp,8774/tcp,8775/tcp,8778/tcp} --permanent

firewall-cmd --reload

su -s /bin/bash nova -c "nova-manage api_db sync"

su -s /bin/bash nova -c "nova-manage cell_v2 map_cell0"

su -s /bin/bash nova -c "nova-manage db sync"

su -s /bin/bash nova -c "nova-manage cell_v2 create_cell --name cell1"

systemctl restart httpd

chown nova. /var/log/nova/nova-placement-api.log

for service in api consoleauth conductor scheduler novncproxy; do

systemctl start openstack-nova-$service

systemctl enable openstack-nova-$service

done

openstack compute service list

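# If compute service list sometimes does not show the three nova services, try restarting the nova services

for service in api consoleauth conductor scheduler novncproxy; do systemctl restart openstack-nova-$service; done;

Next, install nova-compute and configure its VNC console so that VM consoles can be opened in a browser through noVNC.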

yum --enablerepo=centos-openstack-rocky,epel -y install openstack-nova-compute

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

if grep -q '^\[vnc\]$' /etc/nova/nova.conf; then printf 'existed';

else

printf "

[vnc]

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = $ip

novncproxy_base_url = http://$ip:6080/vnc_auto.html

" >> /etc/nova/nova.conf;

fi;

yum --enablerepo=centos-openstack-rocky -y install openstack-selinux

firewall-cmd --add-port=5900-5999/tcp --permanent

firewall-cmd --reload

systemctl restart openstack-nova-compute

systemctl restart libvirtd

systemctl enable openstack-nova-compute

su -s /bin/bash nova -c "nova-manage cell_v2 discover_hosts"

openstack compute service list

At this point I found that I had given the Linux VM only 512 MB of memory, which is no longer enough. It needs more, so I decided to give it 4 GB:

Vagrant.configure("2") do |config|

config.vm.box = "centos/7"

config.vm.hostname = "rocky"

config.vm.network "private_network", ip: "192.168.33.10"

config.vm.network "public_network"

config.vm.provider :virtualbox do |vb|

vb.customize [

"modifyvm", :id,

"--cpuexecutioncap", "50",

"--memory", "4096",

]

end

end

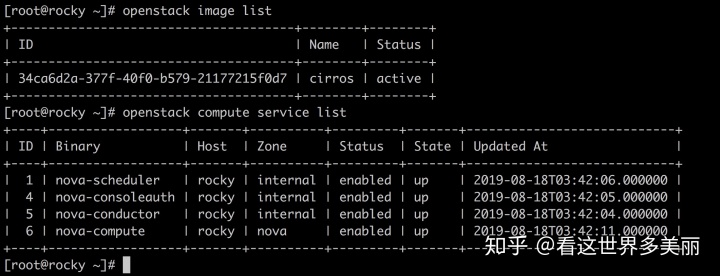

Check that the four compute services have started properly and that the image service is working too.

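# Next we install the hardest part of OpenStack: Neutron.

As before, we start with the routine steps: create the MySQL database Neutron needs, create the Keystone user and role, configure Neutron's database connection, and create the Neutron service endpoints.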

export controller=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

openstack user create --domain default --project service --password servicepassword neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking service" network

openstack endpoint create --region RegionOne network public http://$controller:9696

openstack endpoint create --region RegionOne network internal http://$controller:9696

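openstack endpoint create --region RegionOne network admin http://$controller:9696

echo "

create database neutron_ml2;

grant all privileges on neutron_ml2.* to neutron@'localhost' identified by 'password';

grant all privileges on neutron_ml2.* to neutron@'%' identified by 'password';

flush privileges;

exit

" > neutron.sql

mysql -e "source neutron.sql" -p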

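# Then install the Neutron packages

# We use Neutron's ML2 (Modular Layer 2) plugin, with Open vSwitch providing Layer 2 networks such as VLAN and VXLAN. We need to configure Neutron itself, the ML2 plugin, and the plugin's Open vSwitch agent.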

yum --enablerepo=centos-openstack-rocky,epel -y install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

mv /etc/neutron/neutron.conf /etc/neutron/neutron.conf.org

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

printf "

# create new

[DEFAULT]

core_plugin = ml2

service_plugins = router

auth_strategy = keystone

state_path = /var/lib/neutron

dhcp_agent_notification = True

allow_overlapping_ips = True

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

# RabbitMQ connection info

transport_url = rabbit://openstack:password@$ip

# Keystone auth info

[keystone_authtoken]

www_authenticate_uri = http://$ip:5000

auth_url = http://$ip:5000

memcached_servers = $ip:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = servicepassword

# MariaDB connection info

[database]

connection = mysql+pymysql://neutron:password@$ip/neutron_ml2

# Nova connection info

[nova]

auth_url = http://$ip:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = servicepassword

[oslo_concurrency]

lock_path = \$state_path/tmp

" >> /etc/neutron/neutron.conf;

chmod 640 /etc/neutron/neutron.conf

chgrp neutron /etc/neutron/neutron.conf

mv /etc/neutron/l3_agent.ini /etc/neutron/l3_agent.ini.ori

printf "

[DEFAULT]

interface_driver = openvswitch

[agent]

[ovs]

" > /etc/neutron/l3_agent.ini

mv /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini.ori

printf "

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

[agent]

[ovs]

" > /etc/neutron/dhcp_agent.ini

mv /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.ori

printf "

[DEFAULT]

nova_metadata_host = $ip

metadata_proxy_shared_secret = metadata_secret

[agent]

[cache]

memcache_servers = $ip:11211

" > /etc/neutron/metadata_agent.ini

mv /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.ori

printf "

[DEFAULT]

[l2pop]

[ml2]

type_drivers = flat,vlan,gre,vxlan

tenant_network_types =

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

[securitygroup]

" > /etc/neutron/plugins/ml2/ml2_conf.ini

mv /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.ori

printf "

[DEFAULT]

[agent]

[network_log]

[ovs]

[securitygroup]

firewall_driver = openvswitch

enable_security_group = true

enable_ipset = true

[xenapi]

" > /etc/neutron/plugins/ml2/openvswitch_agent.ini

cat << 'EOL' > _temp

use_neutron = True

linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver

firewall_driver = nova.virt.firewall.NoopFirewallDriver

vif_plugging_is_fatal = True

vif_plugging_timeout = 300

EOL

if grep -q '^\[DEFAULT\]' /etc/nova/nova.conf; then

sed -i '/^\[DEFAULT\]/r _temp' /etc/nova/nova.conf

fi

cat << EOL > _temp

# add follows to the end : Neutron auth info

# the value of metadata_proxy_shared_secret is the same with the one in metadata_agent.ini

[neutron]

auth_url = http://$ip:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = servicepassword

service_metadata_proxy = True

metadata_proxy_shared_secret = metadata_secret

EOL

if grep -q '^\[neutron\]' /etc/nova/nova.conf; then

printf 'exist';

else

cat _temp >> /etc/nova/nova.conf;

fi

setsebool -P neutron_can_network on

setsebool -P haproxy_connect_any on

setsebool -P daemons_enable_cluster_mode on

yum --enablerepo=centos-openstack-rocky -y install openstack-selinux

firewall-cmd --add-port=9696/tcp --permanent

firewall-cmd --reload

systemctl start openvswitch

systemctl enable openvswitch

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/bash neutron -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugin.ini upgrade head"

for service in server dhcp-agent l3-agent metadata-agent openvswitch-agent; do

systemctl start neutron-$service

systemctl enable neutron-$service

done

systemctl restart openstack-nova-api openstack-nova-compute

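# Next, configure the Neutron network

We use the simplest network type, flat (VLAN would also work). This requires a physical network that is mapped to a NIC on the host. Because eth1 is connected to the Mac and we want the VMs on the same segment as the Mac, we map the physical network to eth1. That way the ports Neutron creates can communicate with the Mac.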
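The wiring here is a chain of names. ML2's `flat_networks = physnet1` names the physical network, and the OVS agent's `bridge_mappings = physnet1:br-eth1` ties it to an OVS bridge. Finally, `ovs-vsctl add-port br-eth1 eth1` plugs eth1 into that bridge. Below is a minimal Python sketch of how a `bridge_mappings` value, a list of comma-separated `physnet:bridge` pairs, resolves to a host NIC. The `{bridge: nic}` table is a hypothetical stand-in for what `ovs-vsctl list-ports br-eth1` would show, and the parsing rules are an approximation rather than Neutron's own code.

```python
def parse_bridge_mappings(value):
    """Parse an OVS agent bridge_mappings value like 'physnet1:br-eth1,physnet2:br-eth2'."""
    mappings = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty entries such as a trailing comma
        physnet, sep, bridge = item.partition(":")
        if not sep or not physnet or not bridge:
            raise ValueError(f"malformed mapping: {item!r}")
        if physnet in mappings:
            raise ValueError(f"physical network mapped twice: {physnet!r}")
        mappings[physnet] = bridge
    return mappings


def resolve_nic(physnet, bridge_mappings, bridge_ports):
    """Follow physnet -> OVS bridge -> NIC; bridge_ports is a hypothetical {bridge: nic} table."""
    bridge = parse_bridge_mappings(bridge_mappings)[physnet]
    return bridge, bridge_ports[bridge]


# Values from this article: bridge_mappings = physnet1:br-eth1 and "ovs-vsctl add-port br-eth1 eth1"
print(resolve_nic("physnet1", "physnet1:br-eth1", {"br-eth1": "eth1"}))  # ('br-eth1', 'eth1')
```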

ovs-vsctl add-br br-eth1

ovs-vsctl add-port br-eth1 eth1

sed -i "/^\[ml2_type_flat\]/a flat_networks = physnet1" /etc/neutron/plugins/ml2/ml2_conf.ini

sed -i "/^\[ovs\]/a bridge_mappings = physnet1:br-eth1" /etc/neutron/plugins/ml2/openvswitch_agent.ini

systemctl restart neutron-openvswitch-agent

Then create the virtual network, of type flat:

projectID=$(openstack project list | grep service | awk '{print $2}')

openstack network create --project $projectID \
--share --provider-network-type flat --provider-physical-network physnet1 sharednet1

# create subnet [10.0.0.0/24] in [sharednet1]

openstack subnet create subnet1 --network sharednet1 \
--project $projectID --subnet-range 10.0.0.0/24 \
--allocation-pool start=10.0.0.200,end=10.0.0.254 \
--gateway 10.0.0.1 --dns-nameserver 10.0.0.10

openstack network list

openstack subnet list
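The subnet parameters have to be consistent. The allocation pool and the gateway must lie inside 10.0.0.0/24, and the gateway must not be handed out to a VM. Below is a quick check with the standard `ipaddress` module; it is a sketch, since Neutron performs its own validation. It also shows that 10.0.0.100, the address given to the Mac's vboxnet0 at the end of this article, is inside the subnet but outside the pool, so it cannot collide with a VM. `pool_size` is an illustrative name.

```python
import ipaddress


def pool_size(cidr, pool_start, pool_end, gateway):
    """Validate a subnet layout and return how many addresses the allocation pool holds."""
    net = ipaddress.ip_network(cidr)
    start, end, gw = (ipaddress.ip_address(a) for a in (pool_start, pool_end, gateway))
    reserved = (net.network_address, net.broadcast_address)
    for addr in (start, end, gw):
        if addr not in net or addr in reserved:
            raise ValueError(f"{addr} is not a usable host address in {net}")
    if start > end:
        raise ValueError("allocation pool start is after its end")
    if start <= gw <= end:
        raise ValueError("gateway lies inside the allocation pool")
    return int(end) - int(start) + 1


print(pool_size("10.0.0.0/24", "10.0.0.200", "10.0.0.254", "10.0.0.1"))  # 55

# The Mac's extra address on vboxnet0: inside the subnet, below the pool
mac = ipaddress.ip_address("10.0.0.100")
print(mac in ipaddress.ip_network("10.0.0.0/24"), ipaddress.ip_address("10.0.0.200") <= mac)  # True False
```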

# Create an OpenStack project and a user in it who can create VMs

openstack project create --domain default --description "Demo Project" demo_project

openstack user create --domain default --project demo_project --password userpassword demo_user

openstack role create CloudUser

openstack role add --project demo_project --user demo_user CloudUser

openstack flavor create --id 0 --vcpus 1 --ram 128 --disk 10 m1.small

ip=`ifconfig eth2 | grep 'inet ' | awk '{print $2}'`

cat << 'EOL' > ~/keystonerc_demo_user

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo_project

export OS_USERNAME=demo_user

export OS_PASSWORD=userpassword

export OS_AUTH_URL=http://$ip:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

export PS1='[\u@\h \W(keystone)]\$ '

EOL

chmod 600 ~/keystonerc_demo_user

source ~/keystonerc_demo_user

openstack flavor list

openstack image list

openstack network list

openstack security group create secgroup01

openstack security group list

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

openstack keypair list

netID=$(openstack network list | grep sharednet1 | awk '{ print $2 }')

nova-manage cell_v2 discover_hosts --verbose

openstack server create --flavor m1.small --image cirros --security-group secgroup01 --nic net-id=$netID --key-name mykey Cirros_1

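Next we check whether the server started. Unfortunately it did not. The log indicates that the machine does not support KVM, so we need to change Nova's configuration: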

RescheduledException: Build of instance 22f27839-8587-4b63-80c1-d3aaa3697fd2 was re-scheduled: invalid argument: could not find capabilities for domaintype=kvm n']

vi /etc/nova/nova.conf

[libvirt]

cpu_mode=none

virt_type = qemu

Then restart libvirtd, openstack-nova-compute, openstack-nova-api, and openstack-nova-scheduler. After that, the server starts successfully:

systemctl restart libvirtd

systemctl restart openstack-nova-compute

systemctl restart openstack-nova-api

systemctl restart openstack-nova-scheduler

[vagrant@rocky nova(keystone)]$ openstack server list

+--------------------------------------+----------+--------+-----------------------+--------+----------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+----------+--------+-----------------------+--------+----------+

| 89733846-5e1a-499f-82f5-4f7c01f0dafa | Cirros_1 | ACTIVE | sharednet1=10.0.0.206 | cirros | m1.small |

+--------------------------------------+----------+--------+-----------------------+--------+----------+

[vagrant@rocky nova(keystone)]$ openstack server show Cirros_1

+-----------------------------+----------------------------------------------------------+

| Field | Value |

+-----------------------------+----------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-AZ:availability_zone | nova |

| OS-EXT-STS:power_state | Running |

| OS-EXT-STS:task_state | None |

| OS-EXT-STS:vm_state | active |

| OS-SRV-USG:launched_at | 2019-08-18T06:56:09.000000 |

| OS-SRV-USG:terminated_at | None |

| accessIPv4 | |

| accessIPv6 | |

| addresses | sharednet1=10.0.0.206 |

| config_drive | |

| created | 2019-08-18T06:55:20Z |

| flavor | m1.small (0) |

| hostId | dfab017d98a96610774c471ce07a954fe36b71248ee0434cfb888b17 |

| id | 89733846-5e1a-499f-82f5-4f7c01f0dafa |

| image | cirros (34ca6d2a-377f-40f0-b579-21177215f0d7) |

| key_name | mykey |

| name | Cirros_1 |

| progress | 0 |

| project_id | 97bbb84f481243d8ab4253bddf571f23 |

| properties | |

| security_groups | name='secgroup01' |

| status | ACTIVE |

| updated | 2019-08-18T06:56:09Z |

| user_id | bb946f9bd59544f18830dfcac07211a8 |

| volumes_attached | |

+-----------------------------+----------------------------------------------------------+

Next, configure the security group to allow access to this VM (ICMP and SSH):

openstack security group rule create --protocol icmp --ingress secgroup01

openstack security group rule create --protocol tcp --dst-port 22:22 secgroup01

openstack security group rule list

[vagrant@rocky nova(keystone)]$ openstack security group rule list

+--------------------------------------+-------------+-----------+------------+--------------------------------------+--------------------------------------+

| ID | IP Protocol | IP Range | Port Range | Remote Security Group | Security Group |

+--------------------------------------+-------------+-----------+------------+--------------------------------------+--------------------------------------+

| 190e1e78-c2b5-490c-8ee2-e53ceb0469e6 | None | None | | None | 6592135e-b200-4bc8-b4d6-0610f7e9fee4 |

| 43806334-34df-46f2-8cf0-9b7720bbd661 | None | None | | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 |

| 87788da7-2a55-4177-b5a8-abfb7bda00f3 | icmp | 0.0.0.0/0 | | None | 6592135e-b200-4bc8-b4d6-0610f7e9fee4 |

| 9a33e895-0d70-4f6f-9cc4-56a5e0d20945 | None | None | | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 |

| b887c0e8-c9b1-499f-91c5-5bc908e2178a | None | None | | None | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 |

| c97aea89-d185-4b32-9507-9da327176730 | None | None | | None | 286c90d7-7bb7-4c57-bfbb-c31a460b9c02 |

| cc355618-bb8d-4734-a466-f4e7e8a81306 | None | None | | None | 6592135e-b200-4bc8-b4d6-0610f7e9fee4 |

| dca07158-bf97-455e-91b1-f22bd5bb1571 | tcp | 0.0.0.0/0 | 22:22 | None | 6592135e-b200-4bc8-b4d6-0610f7e9fee4 |

+--------------------------------------+-------------+-----------+------------+--------------------------------------+--------------------------------------+

openstack console url show Cirros_1

This returns the VM's console URL:

[vagrant@rocky nova(keystone)]$ openstack console url show Cirros_1

+-------+--------------------------------------------------------------------------------------+

| Field | Value |

+-------+--------------------------------------------------------------------------------------+

| type | novnc |

| url | http://192.168.130.173:6080/vnc_auto.html?token=45d0d51e-ee73-4d37-a745-b20442757255 |

+-------+--------------------------------------------------------------------------------------+

[vagrant@rocky nova(keystone)]$

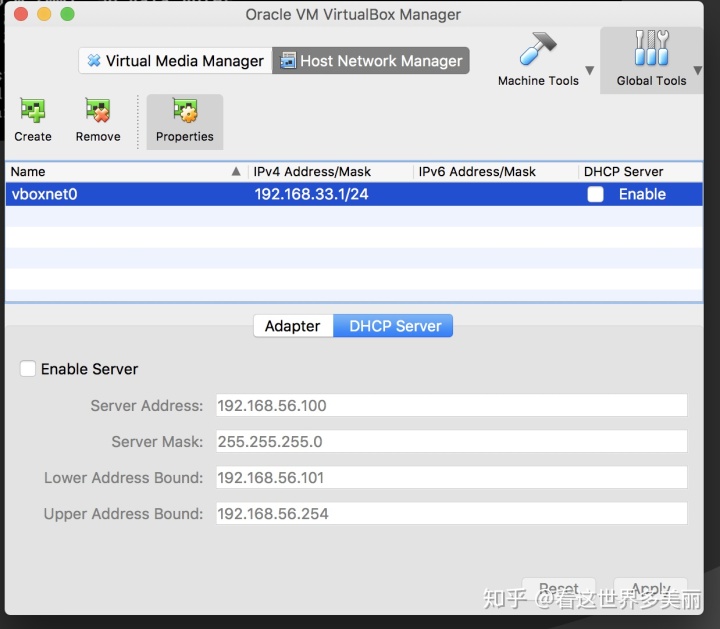

可以看到这台机器拿到了一个不正确的地址。这是因为我的MAC上的vboxnet0这个接口(对应与linux的eth1)的DHCP SERVER启用了。(这个在virtualbox的设置里),所以他拦截了namespace的DHCP server

现在关掉这个DHCP server.

重启后。再看VM已经拿到了正确的地址,并能够ping同 10.0.0.200 (DHCP server)

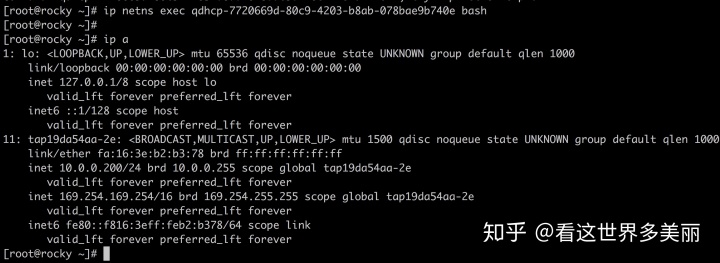

这个DHCP SERVER是neutron创建的。其实是个namespace

最后因为我们的MAC的vboxnet0这个网卡默认地址是192.168网段的,不能ping VM,所以我给接口增加一个地址。这里就可以登陆VM了。

ip addr add 10.0.0.100/24 dev vboxnet0

ssh cirros@10.0.0.206

cirros@10.0.0.206's password:

That completes the installation. In the next article we will follow, step by step, how packets travel from the VM to my Mac.
