Setting Up a Hadoop Cluster (Single NameNode)

- Cluster environment plan

- Prerequisites: a JDK is installed, the virtual machines have network access, and both the firewall and SELinux are disabled

Note: before starting, configure the address mappings on node01 through node03 and set their hostnames to node01, node02, and node03 respectively.

- 1. Change the hostname

vim /etc/sysconfig/network

Edit the file as follows, where node01 is the hostname to set:

NETWORKING=yes

HOSTNAME=node01

- 2. Add hostname mappings

vim /etc/hosts

Add the following entries. Each line has the form: host IP address, then hostname.

192.168.100.101 node01

192.168.100.102 node02

192.168.100.103 node03
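Because the same three mappings have to be added on every node, it can help to append them in a way that is safe to repeat. The sketch below is one possible approach, not part of the original procedure: `add_host_entry` is a hypothetical helper that appends an "IP hostname" line only when the hostname is not already mapped in the given file.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to a hosts-style file unless NAME
# already appears as a hostname on a non-comment line.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # Fields 2..NF of a hosts line are hostnames; skip lines starting with '#'.
    if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

Run as root on each node, it would be called once per mapping, for example `add_host_entry /etc/hosts 192.168.100.101 node01`. Calling it a second time with the same hostname leaves the file unchanged.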

1. Upload the Hadoop package and extract it (the package uploaded here is a recompiled build)

cd /export/softwares

tar -zxvf hadoop-2.7.5.tar.gz -C ../servers/

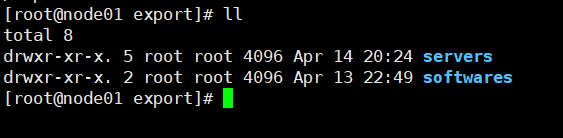

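- My directory layout is as follows:

/export/servers is the installation directory for all related software

/export/softwares is where the downloaded software packages are stored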

2. Edit the configuration files

2.1 core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

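<!-- File system type for the cluster: the distributed file system (HDFS). fs.default.name is the deprecated name of fs.defaultFS. -->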

<property>

<name>fs.default.name</name>

<value>hdfs://node01:8020</value>

</property>

<!-- Directory for temporary files -->

<property>

<name>hadoop.tmp.dir</name>

<value>/export/servers/hadoop-2.7.5/hadoopDatas/tempDatas</value>

</property>

<!-- Buffer size; in production, tune it to the server's performance -->

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

</property>

<!-- Enable the HDFS trash so deleted data can be recovered from it; the value is the retention time in minutes -->

<property>

<name>fs.trash.interval</name>

<value>10080</value>

</property>

</configuration>
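The `fs.trash.interval` value is given in minutes, which makes 10080 hard to read at a glance. A quick conversion, shown here as a small sketch, confirms the retention period it stands for.

```shell
#!/usr/bin/env bash
# Convert the fs.trash.interval value from core-site.xml (minutes) to days.
trash_interval_minutes=10080
trash_interval_days=$(( trash_interval_minutes / 60 / 24 ))
echo "fs.trash.interval = ${trash_interval_minutes} minutes = ${trash_interval_days} days"
```

With this setting, deleted files stay in the trash for 7 days. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.trash.interval` can be used to check the value the cluster actually loaded.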

2.2 hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node01:50090</value>

</property>

<!-- NameNode web UI address and port -->

<property>

<name>dfs.namenode.http-address</name>

<value>node01:50070</value>

</property>

<!-- Where the NameNode stores its metadata -->

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///export/servers/hadoop-2.7.5/hadoopDatas/namenodeDatas,file:///export/servers/hadoop-2.7.5/hadoopDatas/namenodeDatas2</value>

</property>

<!-- Where the DataNode stores its data; in production, decide the disk mount points first, then list multiple directories separated by commas -->

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///export/servers/hadoop-2.7.5/hadoopDatas/datanodeDatas,file:///export/servers/hadoop-2.7.5/hadoopDatas/datanodeDatas2</value>

</property>

<!-- Where the NameNode stores its edit log -->

<property>

<name>dfs.namenode.edits.dir</name>

<value>file:///export/servers/hadoop-2.7.5/hadoopDatas/nn/edits</value>

</property>

<property>

<name>dfs.namenode.checkpoint.dir</name>

<value>file:///export/servers/hadoop-2.7.5/hadoopDatas/snn/name</value>

</property>

<property>

<name>dfs.namenode.checkpoint.edits.dir</name>

<value>file:///export/servers/hadoop-2.7.5/hadoopDatas/dfs/snn/edits</value>

</property>

<!-- Number of replicas kept for each block -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Enable HDFS permission checking -->

<property>

<name>dfs.permissions</name>

<value>true</value>

</property>

<!-- Block size: 128 MB -->

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

</configuration>
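The `dfs.blocksize` value is written in bytes, and together with `dfs.replication` it determines how many block replicas a file occupies. The sketch below checks that 134217728 is 128 MB and works through a hypothetical 1 GiB file; the file size is an assumption chosen only for illustration.

```shell
#!/usr/bin/env bash
# dfs.blocksize and dfs.replication as set in hdfs-site.xml.
block_size_bytes=$(( 128 * 1024 * 1024 ))
replication=3

# Hypothetical example file of 1 GiB.
file_size_bytes=$(( 1024 * 1024 * 1024 ))

# Ceiling division: a partially filled last block still counts as a block.
blocks=$(( (file_size_bytes + block_size_bytes - 1) / block_size_bytes ))
replicas=$(( blocks * replication ))

echo "block size: ${block_size_bytes} bytes"
echo "blocks: ${blocks}, block replicas across the cluster: ${replicas}"
```

With three DataNodes and a replication factor of 3, each node would hold one copy of every block of that file.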

2.3 mapred-site.xml

<configuration>

<!-- Enable uber mode for small MapReduce jobs -->

<property>

<name>mapreduce.job.ubertask.enable</name>

<value>true</value>

</property>

<!-- Host and port of the JobHistory server -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>192.168.100.101:10020</value>

</property>

<!-- Host and port of the JobHistory web UI -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>192.168.100.101:19888</value>

</property>

</configuration>

2.4 yarn-site.xml

<configuration>

<!-- Location of the YARN master node (ResourceManager) -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node01</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- How long aggregated logs are kept on HDFS, in seconds -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

<!-- Memory allocation settings for the YARN cluster -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>20480</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

</configuration>
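The YARN memory settings are easier to judge once the numbers they imply are worked out. The sketch below derives three figures from the values in yarn-site.xml: how many minimum-size containers fit in one NodeManager, the virtual memory ceiling for such a container, and the log retention period in days. The ratio 2.1 is scaled by 10 because shell arithmetic is integer-only. Note that `yarn.nodemanager.resource.memory-mb` is the memory a NodeManager offers to containers, so 20480 presumably needs to be lowered on virtual machines with less RAM than that.

```shell
#!/usr/bin/env bash
# Values copied from yarn-site.xml.
node_memory_mb=20480      # yarn.nodemanager.resource.memory-mb
min_allocation_mb=2048    # yarn.scheduler.minimum-allocation-mb
vmem_pmem_ratio_x10=21    # yarn.nodemanager.vmem-pmem-ratio (2.1), scaled by 10
retain_seconds=604800     # yarn.log-aggregation.retain-seconds

# Upper bound on minimum-size containers per NodeManager.
max_min_containers=$(( node_memory_mb / min_allocation_mb ))
# Virtual memory ceiling for a minimum-size container, rounded down to whole MB.
vmem_limit_mb=$(( min_allocation_mb * vmem_pmem_ratio_x10 / 10 ))
# Aggregated log retention in days.
retain_days=$(( retain_seconds / 86400 ))

echo "containers per node (at minimum size): ${max_min_containers}"
echo "virtual memory limit per 2048 MB container: ${vmem_limit_mb} MB"
echo "aggregated logs kept for: ${retain_days} days"
```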

2.5 slaves: remove localhost and add the hostnames of the slave nodes

node01

node02

node03

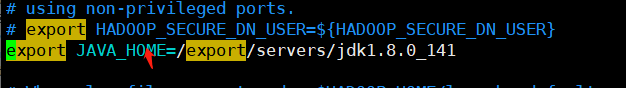

2.6 hadoop-env.sh: set JAVA_HOME to the JDK installation path

2.7 mapred-env.sh: set JAVA_HOME in the same way

3. On node01, run the following commands to create the directories referenced in the configuration files above

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/tempDatas

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/namenodeDatas

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/namenodeDatas2

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/datanodeDatas

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/datanodeDatas2

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/nn/edits

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/snn/name

mkdir -p /export/servers/hadoop-2.7.5/hadoopDatas/dfs/snn/edits
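The eight `mkdir` commands share one base directory, so they can also be written as a loop. The following is a minimal sketch of that idea; `create_hadoop_dirs` is a hypothetical helper name, and the subdirectory list is copied from the commands above.

```shell
#!/usr/bin/env bash
# create_hadoop_dirs BASE
# Hypothetical helper: create every data directory referenced in
# core-site.xml and hdfs-site.xml underneath BASE.
create_hadoop_dirs() {
    local base="$1"
    local sub
    for sub in tempDatas namenodeDatas namenodeDatas2 \
               datanodeDatas datanodeDatas2 \
               nn/edits snn/name dfs/snn/edits; do
        mkdir -p "$base/$sub" || return 1
    done
}
```

On node01 it would be called as `create_hadoop_dirs /export/servers/hadoop-2.7.5/hadoopDatas`. Because `mkdir -p` does not fail on existing directories, running it again is harmless.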

4. Distribute the Hadoop directory configured on node01 to node02 and node03

cd /export/servers/

scp -r hadoop-2.7.5 node02:$PWD

scp -r hadoop-2.7.5 node03:$PWD
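With more worker nodes, repeating the `scp` line by hand becomes error-prone. One way to generalize it is a loop over hostnames, sketched below. `distribute` and the `DRY_RUN` variable are hypothetical names introduced for this example; with `DRY_RUN=1` the function only prints the commands it would run, which is useful for checking the target paths first.

```shell
#!/usr/bin/env bash
# distribute SRC HOST...
# Hypothetical helper: copy the directory SRC to the same parent directory
# on each HOST. Set DRY_RUN=1 to print the scp commands instead of running them.
distribute() {
    local src="$1"
    shift
    local dest_dir host
    dest_dir="$(dirname "$src")"
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "scp -r $src $host:$dest_dir"
        else
            scp -r "$src" "$host:$dest_dir" || return 1
        fi
    done
}
```

For this cluster the call would be `distribute /export/servers/hadoop-2.7.5 node02 node03`, which assumes that /export/servers already exists on the target hosts.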

5. Configure the Hadoop environment variables

- This must be done on node01 through node03

vim /etc/profile

export HADOOP_HOME=/export/servers/hadoop-2.7.5

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

source /etc/profile
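After sourcing the profile, it is worth confirming that both Hadoop directories really ended up on the PATH of the current shell. The sketch below shows one way to check; `path_has_entry` is a hypothetical helper, not a standard command.

```shell
#!/usr/bin/env bash
# path_has_entry DIR
# Hypothetical helper: succeed if DIR is one of the colon-separated PATH entries.
path_has_entry() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}
```

On each node, `path_has_entry "$HADOOP_HOME/bin" && path_has_entry "$HADOOP_HOME/sbin" && echo ok` should print `ok` once the profile has been loaded. Running `hadoop version` is another quick check that the binary resolves.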

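6. Start the cluster

- Note: the first time Hadoop is started, the NameNode must be formatted, because at that point the HDFS file system does not yet physically exist. Run the following commands in order to format it and then start HDFS, YARN, and the JobHistory server.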

cd /export/servers/hadoop-2.7.5/

bin/hdfs namenode -format

sbin/start-dfs.sh

sbin/start-yarn.sh

sbin/mr-jobhistory-daemon.sh start historyserver

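- Check the web UIs in a browser. To reach them by the name node01, the Windows machine needs a local hosts mapping for node01; without that mapping, use node01's IP address instead.

- http://node01:50070/ # view HDFS

- http://192.168.100.101:8088/ # view the YARN cluster

- http://192.168.100.101:19888/ # view completed jobs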

- Start HDFS, then use jps to check the processes that are running after a successful start.

[root@node01 hadoop-2.7.5]# sbin/start-dfs.sh

Starting namenodes on [node01]

node01: starting namenode, logging to /export/servers/hadoop-2.7.5/logs/hadoop-root-namenode-node01.out

node03: starting datanode, logging to /export/servers/hadoop-2.7.5/logs/hadoop-root-datanode-node03.out

node02: starting datanode, logging to /export/servers/hadoop-2.7.5/logs/hadoop-root-datanode-node02.out

node01: starting datanode, logging to /export/servers/hadoop-2.7.5/logs/hadoop-root-datanode-node01.out

Starting secondary namenodes [node01]

node01: starting secondarynamenode, logging to /export/servers/hadoop-2.7.5/logs/hadoop-root-secondarynamenode-node01.out

[root@node01 hadoop-2.7.5]# jps

2529 QuorumPeerMain

12931 Jps

12822 SecondaryNameNode

12647 DataNode

12509 NameNode

[root@node01 hadoop-2.7.5]#

- Start the YARN module.

[root@node01 hadoop-2.7.5]# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /export/servers/hadoop-2.7.5/logs/yarn-root-resourcemanager-node01.out

node03: starting nodemanager, logging to /export/servers/hadoop-2.7.5/logs/yarn-root-nodemanager-node03.out

node02: starting nodemanager, logging to /export/servers/hadoop-2.7.5/logs/yarn-root-nodemanager-node02.out

node01: starting nodemanager, logging to /export/servers/hadoop-2.7.5/logs/yarn-root-nodemanager-node01.out

[root@node01 hadoop-2.7.5]# jps

13105 NodeManager

2529 QuorumPeerMain

12998 ResourceManager

12822 SecondaryNameNode

12647 DataNode

12509 NameNode

13422 Jps

[root@node01 hadoop-2.7.5]#

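- After the leader node has finished starting, switch to a follower host and check whether the follower node has started.

Here we switch to node02 and list the processes with jps. The DataNode process is present, which means the node started successfully and the Hadoop cluster is up.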

[root@node02 ~]# jps

5666 DataNode

2482 QuorumPeerMain

5945 Jps

5787 NodeManager

[root@node02 ~]#
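Reading the jps listing by eye works for three nodes, but the same check can be scripted. The sketch below is one possible approach: `missing_daemons` is a hypothetical helper that takes jps output and a list of expected process names and prints the names that are absent. It compares the second column exactly, so NameNode is not confused with SecondaryNameNode.

```shell
#!/usr/bin/env bash
# missing_daemons JPS_OUTPUT NAME...
# Hypothetical helper: print the expected daemon names that do not appear
# in the second column of the given jps output (empty line if none missing).
missing_daemons() {
    local jps_output="$1"
    shift
    local name missing=""
    for name in "$@"; do
        if ! printf '%s\n' "$jps_output" |
                awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            missing="$missing $name"
        fi
    done
    printf '%s\n' "${missing# }"
}
```

On a follower such as node02 the call would be `missing_daemons "$(jps)" DataNode NodeManager`; on node01 the expected list would be NameNode, SecondaryNameNode, DataNode, ResourceManager, and NodeManager. An empty result means every expected process is running.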
