1 Introduction

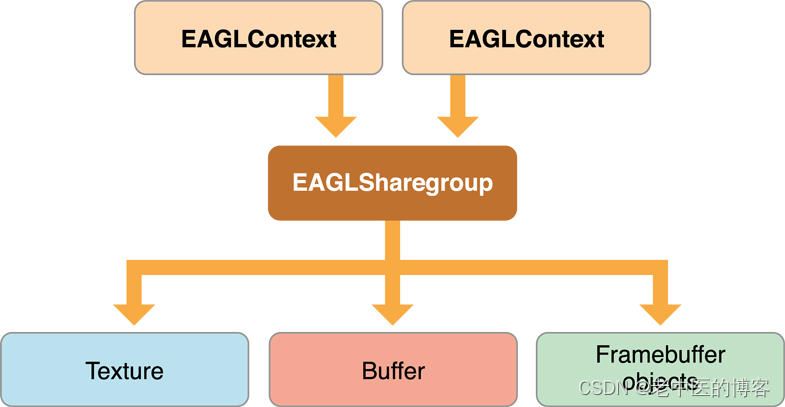

1.1 EAGL (Embedded Apple Graphics Library)

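Much as Android uses EGL to connect OpenGL ES to a native window for surface output, iOS uses EAGL to make a CAEAGLLayer the output target of OpenGL ES.

Unlike Android EGL, iOS EAGL does not let an app draw directly into the back and front framebuffers, nor does it let the app control the double-buffer swap. The system keeps that control for itself so that the Core Animation compositor can decide the final on-screen appearance at any time.

OpenGL ES connects to Core Animation through CAEAGLLayer, a special kind of Core Animation layer whose content comes from an OpenGL ES renderbuffer. Core Animation composites the renderbuffer content with the other layers and shows the resulting image on screen, so any number of layers can exist at the same time. The Core Animation compositor combines these layers, produces the final pixel colors in the back framebuffer, and then swaps the buffers.

In short, OpenGL ES shares a renderbuffer (attached to a framebuffer) with CAEAGLLayer: an EAGLContext binds the CAEAGLLayer to the renderbuffer, so whatever OpenGL ES renders ends up on the CAEAGLLayer and is displayed.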

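2 Display

2.1 Software decoding

- FFmpeg software decoding outputs an AVFrame whose pixel data lives in the memory pointed to by uint8_t* data[AV_NUM_DATA_POINTERS];

- Once a frame decoded on the software decoding thread is ready for playback, the func_fill_frame method of the SDL_VoutOverlay in SDL_Vout is called. It uses an AVFrame that the SDL_VoutOverlay allocates separately to take over the pixel data and parameters of the decoded AVFrame, and finally points overlay->pixels[] at that pixel memory;

- The Frame's properties and its SDL_VoutOverlay are then filled in from the decoded AVFrame;

- The Frame is appended to the tail of the FrameQueue, where it waits for the video_refresh_thread to consume and render it;

- The video_refresh_thread then takes the Frame out and feeds the pixel data pointed to by pixels[] to OpenGL ES for rendering;

- The steps above repeat for every frame.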
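The hand-off between the decoding thread and video_refresh_thread described above can be sketched as a small fixed-size ring buffer. This is only an illustration: DemoFrame, DemoFrameQueue, and the demo_queue_* functions are hypothetical names rather than the player's real types, and the locking that a real two-thread queue needs (a mutex plus a condition variable, or an equivalent) is left out to keep the sketch short.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for the player's Frame and FrameQueue. */
#define DEMO_QUEUE_CAPACITY 3

typedef struct DemoFrame {
    int   serial;   /* illustrative frame id */
    void *overlay;  /* the player would keep its SDL_VoutOverlay here */
} DemoFrame;

typedef struct DemoFrameQueue {
    DemoFrame slots[DEMO_QUEUE_CAPACITY];
    int rindex;     /* next slot to read (head) */
    int windex;     /* next slot to write (tail) */
    int size;       /* number of frames currently queued */
} DemoFrameQueue;

static void demo_queue_init(DemoFrameQueue *q)
{
    assert(q != NULL);
    q->rindex = 0;
    q->windex = 0;
    q->size   = 0;
}

/* Decoder side: append a frame at the tail. Returns 0, or -1 if the queue is full. */
static int demo_queue_push(DemoFrameQueue *q, DemoFrame frame)
{
    assert(q != NULL);
    if (q->size == DEMO_QUEUE_CAPACITY)
        return -1;
    q->slots[q->windex] = frame;
    q->windex = (q->windex + 1) % DEMO_QUEUE_CAPACITY;
    q->size++;
    return 0;
}

/* Refresh side: take the oldest frame from the head. Returns 0, or -1 if empty. */
static int demo_queue_pop(DemoFrameQueue *q, DemoFrame *out)
{
    assert(q != NULL && out != NULL);
    if (q->size == 0)
        return -1;
    *out = q->slots[q->rindex];
    q->rindex = (q->rindex + 1) % DEMO_QUEUE_CAPACITY;
    q->size--;
    return 0;
}
```

A bounded queue like this keeps the decoder from running arbitrarily far ahead of the display: when push reports a full queue, the producer has to wait until the refresh side has consumed a frame.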

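2.1.1 Pixel source

So the source of the pixel data after FFmpeg software decoding is clear: it comes from the AVFrame's uint8_t* data[AV_NUM_DATA_POINTERS] and ends up in the SDL_VoutOverlay member of the Frame.

For example, when no pixel format conversion is needed, the overlay is filled like this: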

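    static void overlay_fill(SDL_VoutOverlay *overlay, AVFrame *frame, int planes)
    {
        overlay->planes = planes;

        // Point each overlay plane at the frame's data; this helper copies no pixels.
        for (int i = 0; i < AV_NUM_DATA_POINTERS; ++i) {
            overlay->pixels[i]  = frame->data[i];
            overlay->pitches[i] = frame->linesize[i];
        }
    }

When the pixel format does need converting, libyuv or sws_scale performs the conversion first, and the Frame is then pushed onto the FrameQueue.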
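The pitches[] values recorded by overlay_fill matter because a row in memory can be wider than the visible image (decoders often pad rows for alignment). The sketch below shows the usual way to walk such a plane: advance by the pitch, but touch only the meaningful bytes of each row. demo_copy_plane is a hypothetical helper written for this article, not part of FFmpeg or ijkplayer, and it assumes both pitches are positive.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/*
 * Copy one image plane row by row.
 * src_pitch / dst_pitch: bytes from the start of one row to the start of the
 *                        next (the role played by linesize[] and pitches[]).
 * row_bytes:             meaningful bytes per row (width * bytes per pixel).
 * Assumption: both pitches are positive and at least row_bytes.
 */
static void demo_copy_plane(uint8_t *dst, int dst_pitch,
                            const uint8_t *src, int src_pitch,
                            int row_bytes, int rows)
{
    assert(row_bytes <= src_pitch && row_bytes <= dst_pitch);
    for (int y = 0; y < rows; ++y) {
        memcpy(dst + (size_t)y * (size_t)dst_pitch,
               src + (size_t)y * (size_t)src_pitch,
               (size_t)row_bytes);
    }
}
```

If the pitch is treated as the width, or the other way round, the image comes out skewed, which is why the overlay keeps pixels[] and pitches[] together for every plane.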

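2.2 Hardware decoding

- videotoolbox outputs a CVPixelBuffer after decoding. To keep the interface uniform with FFmpeg, the AVFrame's opaque field is pointed at the CVPixelBuffer after hardware decoding;

- The hardware decoding thread then appends the frame to the tail of the FrameQueue, where it waits for the video_refresh_thread to consume and render it;

- The rest of the logic is basically the same as for FFmpeg software decoding: the video_refresh_thread takes the Frame out and hands its overlay to OpenGL ES for rendering. On this path the pixels come from the CVPixelBuffer, because func_fill_frame leaves pixels[] set to NULL;

- The concrete difference lies in the yuv420sp_vtb_uploadTexture method;

- The steps above repeat for every frame.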
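The yuv420sp in the name of the upload method refers to a semi-planar 4:2:0 layout: one full-resolution luma (Y) plane followed by one half-resolution plane of interleaved chroma samples, which matches the two planes the hardware overlay reports. The sketch below computes the nominal plane sizes for such a layout. DemoPlaneSize and demo_yuv420sp_plane_sizes are hypothetical names, and the real bytes per row reported by the system may be larger because of row padding.

```c
#include <assert.h>

/* Hypothetical description of one plane in a semi-planar 4:2:0 image. */
typedef struct DemoPlaneSize {
    int width_px;       /* samples per row (chroma: CbCr pairs per row) */
    int height_px;      /* number of rows */
    int min_row_bytes;  /* smallest possible bytes per row, without padding */
} DemoPlaneSize;

/* Fill out[0] with the Y plane and out[1] with the interleaved chroma plane. */
static void demo_yuv420sp_plane_sizes(int width, int height, DemoPlaneSize out[2])
{
    assert(width > 0 && height > 0);

    /* Plane 0: one byte of luma per pixel at full resolution. */
    out[0].width_px      = width;
    out[0].height_px     = height;
    out[0].min_row_bytes = width;

    /* Plane 1: chroma subsampled by 2 in both directions (rounded up),
     * two bytes per sample pair because Cb and Cr are interleaved. */
    out[1].width_px      = (width + 1) / 2;
    out[1].height_px     = (height + 1) / 2;
    out[1].min_row_bytes = 2 * out[1].width_px;
}
```

For a 1920x1080 frame this gives a 1920x1080 luma plane and a 960x540 chroma plane whose rows are again at least 1920 bytes long, so a renderer would typically upload the first plane as a one-channel texture and the second as a two-channel texture.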

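2.2.1 Pixel source

So the source of the pixel data after videotoolbox hardware decoding is clear as well: it comes from the AVFrame's opaque field, which is actually a CVPixelBuffer. The overlay is filled as follows: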

    static int func_fill_frame(SDL_VoutOverlay *overlay, const AVFrame *frame)
    {
        assert(frame->format == IJK_AV_PIX_FMT__VIDEO_TOOLBOX);

        // Retain the decoded buffer and release the one held from the previous frame.
        CVBufferRef pixel_buffer = CVBufferRetain(frame->opaque);
        SDL_VoutOverlay_Opaque *opaque = overlay->opaque;
        if (opaque->pixel_buffer != NULL) {
            CVBufferRelease(opaque->pixel_buffer);
        }
        opaque->pixel_buffer = pixel_buffer;

        overlay->format = SDL_FCC__VTB;
        overlay->planes = 2;

        if (CVPixelBufferLockBaseAddress(pixel_buffer, 0) != kCVReturnSuccess) {
            overlay->pixels[0]  = NULL;
            overlay->pixels[1]  = NULL;
            overlay->pitches[0] = 0;
            overlay->pitches[1] = 0;
            overlay->w = 0;
            overlay->h = 0;
            CVBufferRelease(pixel_buffer);
            opaque->pixel_buffer = NULL;
            return -1;
        }

        // pixels[] stay NULL: the renderer reads from the CVPixelBuffer instead.
        overlay->pixels[0]  = NULL;
        overlay->pixels[1]  = NULL;
        overlay->pitches[0] = CVPixelBufferGetWidthOfPlane(pixel_buffer, 0);
        overlay->pitches[1] = CVPixelBufferGetWidthOfPlane(pixel_buffer, 1);
        CVPixelBufferUnlockBaseAddress(pixel_buffer, 0);

        overlay->is_private = 1;
        overlay->w = (int)frame->width;
        overlay->h = (int)frame->height;

        return 0;
    }

2.3 Render

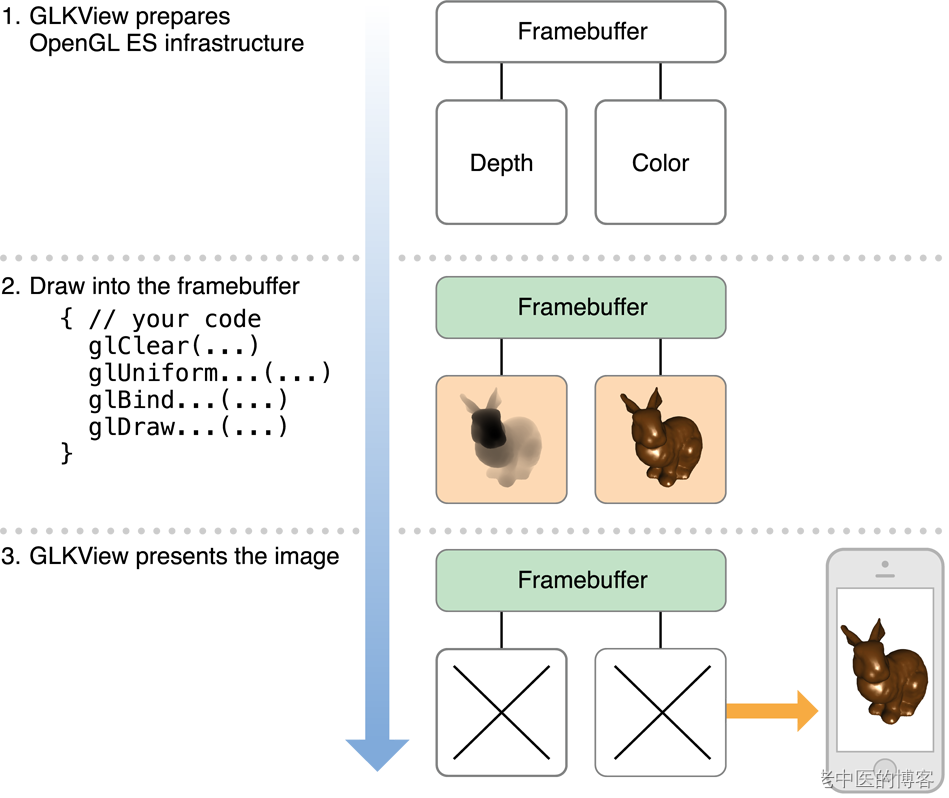

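- First, make the layerClass of the video view, a UIView subclass, return CAEAGLLayer;

- Set the CAEAGLLayer properties, create an EAGLContext, and use it as the rendering context;

- Create and bind the FBO and RBO, then call renderbufferStorage on the created EAGLContext to bind GL_RENDERBUFFER to the CAEAGLLayer;

- Attach the RBO to the FBO as GL_COLOR_ATTACHMENT0;

- Make the created EAGLContext the current context and render one frame with OpenGL ES;

- Call presentRenderbuffer on the EAGLContext to output the renderbuffer to the CAEAGLLayer for display;

- Finally, switch back to the context that was current before rendering;

- Repeat the last three steps (switch context, render and present, restore context) for every following frame.

Make the UIView subclass return the CAEAGLLayer class: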

    + (Class) layerClass
    {
        return [CAEAGLLayer class];
    }

Mark the CAEAGLLayer as opaque, create the EAGLContext, and set up the rendering context:

    - (BOOL)setupGL
    {
        if (_didSetupGL)
            return YES;

        CAEAGLLayer *eaglLayer = (CAEAGLLayer*) self.layer;
        // Mark the layer as opaque
        eaglLayer.opaque = YES;
        eaglLayer.drawableProperties = [NSDictionary dictionaryWithObjectsAndKeys:
                                        [NSNumber numberWithBool:NO], kEAGLDrawablePropertyRetainedBacking,
                                        kEAGLColorFormatRGBA8, kEAGLDrawablePropertyColorFormat,
                                        nil];

        _scaleFactor = [[UIScreen mainScreen] scale];
        if (_scaleFactor < 0.1f)
            _scaleFactor = 1.0f;

        [eaglLayer setContentsScale:_scaleFactor];

        _context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
        if (_context == nil) {
            NSLog(@"failed to setup EAGLContext\n");
            return NO;
        }

        // Switch to our context for setup, then restore the previous one.
        EAGLContext *prevContext = [EAGLContext currentContext];
        [EAGLContext setCurrentContext:_context];

        _didSetupGL = NO;
        if ([self setupEAGLContext:_context]) {
            NSLog(@"OK setup GL\n");
            _didSetupGL = YES;
        }

        [EAGLContext setCurrentContext:prevContext];
        return _didSetupGL;
    }

Create and bind the FBO and RBO, and attach the RBO to the FBO as GL_COLOR_ATTACHMENT0:

    - (BOOL)setupEAGLContext:(EAGLContext *)context
    {
        glGenFramebuffers(1, &_framebuffer);
        glGenRenderbuffers(1, &_renderbuffer);
        glBindFramebuffer(GL_FRAMEBUFFER, _framebuffer);
        glBindRenderbuffer(GL_RENDERBUFFER, _renderbuffer);

        // Back the renderbuffer with the layer's drawable storage.
        [_context renderbufferStorage:GL_RENDERBUFFER fromDrawable:(CAEAGLLayer*)self.layer];
        glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &_backingWidth);
        glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &_backingHeight);
        glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_RENDERBUFFER, _renderbuffer);

        GLenum status = glCheckFramebufferStatus(GL_FRAMEBUFFER);
        if (status != GL_FRAMEBUFFER_COMPLETE) {
            NSLog(@"failed to make complete framebuffer object %x\n", status);
            return NO;
        }

        GLenum glError = glGetError();
        if (GL_NO_ERROR != glError) {
            NSLog(@"failed to setup GL %x\n", glError);
            return NO;
        }

        return YES;
    }

The main display function on iOS is called back from display_overlay of the iOS SDL_Vout interface:

    - (void)display: (SDL_VoutOverlay *) overlay
    {
        if (_didSetupGL == NO)
            return;

        if ([self isApplicationActive] == NO)
            return;

        if (![self tryLockGLActive]) {
            // Log only every 100th failure to avoid flooding the console.
            if (0 == (_tryLockErrorCount % 100)) {
                NSLog(@"IJKSDLGLView:display: unable to tryLock GL active: %d\n", _tryLockErrorCount);
            }
            _tryLockErrorCount++;
            return;
        }

        _tryLockErrorCount = 0;
        if (_context && !_didStopGL) {
            EAGLContext *prevContext = [EAGLContext currentContext];
            [EAGLContext setCurrentContext:_context];
            [self displayInternal:overlay];
            [EAGLContext setCurrentContext:prevContext];
        }

        [self unlockGLActive];
    }

The actual rendering and display work is done here:

    // NOTE: overlay could be NULL
    - (void)displayInternal: (SDL_VoutOverlay *) overlay
    {
        if (![self setupRenderer:overlay]) {
            if (!overlay && !_renderer) {
                NSLog(@"IJKSDLGLView: setupDisplay not ready\n");
            } else {
                NSLog(@"IJKSDLGLView: setupDisplay failed\n");
            }
            return;
        }

        [[self eaglLayer] setContentsScale:_scaleFactor];

        // Re-create the renderbuffer storage if it was invalidated.
        if (_isRenderBufferInvalidated) {
            NSLog(@"IJKSDLGLView: renderbufferStorage fromDrawable\n");
            _isRenderBufferInvalidated = NO;

            glBindRenderbuffer(GL_RENDERBUFFER, _renderbuffer);
            [_context renderbufferStorage:GL_RENDERBUFFER fromDrawable:(CAEAGLLayer*)self.layer];
            glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &_backingWidth);
            glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &_backingHeight);
            IJK_GLES2_Renderer_setGravity(_renderer, _rendererGravity, _backingWidth, _backingHeight);
        }

        glBindFramebuffer(GL_FRAMEBUFFER, _framebuffer);
        glViewport(0, 0, _backingWidth, _backingHeight);

        if (!IJK_GLES2_Renderer_renderOverlay(_renderer, overlay))
            ALOGE("[EGL] IJK_GLES2_render failed\n");

        // Hand the rendered frame to Core Animation for display.
        glBindRenderbuffer(GL_RENDERBUFFER, _renderbuffer);
        [_context presentRenderbuffer:GL_RENDERBUFFER];

        // Update the FPS estimate about once per second.
        int64_t current = (int64_t)SDL_GetTickHR();
        int64_t delta   = (current > _lastFrameTime) ? current - _lastFrameTime : 0;
        if (delta <= 0) {
            _lastFrameTime = current;
        } else if (delta >= 1000) {
            _fps = ((CGFloat)_frameCount) * 1000 / delta;
            _frameCount = 0;
            _lastFrameTime = current;
        } else {
            _frameCount++;
        }
    }

On first use, or whenever the overlay's format changes, a new IJK_GLES2_Renderer instance is created:

    - (BOOL)setupRenderer: (SDL_VoutOverlay *) overlay
    {
        if (overlay == nil)
            return _renderer != nil;

        // Rebuild the renderer if there is none yet or the pixel format changed.
        if (!IJK_GLES2_Renderer_isValid(_renderer) ||
            !IJK_GLES2_Renderer_isFormat(_renderer, overlay->format)) {

            IJK_GLES2_Renderer_reset(_renderer);
            IJK_GLES2_Renderer_freeP(&_renderer);

            _renderer = IJK_GLES2_Renderer_create(overlay);
            if (!IJK_GLES2_Renderer_isValid(_renderer))
                return NO;

            if (!IJK_GLES2_Renderer_use(_renderer))
                return NO;

            IJK_GLES2_Renderer_setGravity(_renderer, _rendererGravity, _backingWidth, _backingHeight);
        }

        return YES;
    }

3 Summary

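To finish, here is a summary of FFmpeg software decoding and videotoolbox hardware decoding on iOS:

| Video display | Similarities | Differences |
| --- | --- | --- |
| FFmpeg (software decoding) and videotoolbox (hardware decoding) | 1. Both create an EAGLContext to bind the renderbuffer to the CAEAGLLayer, share that renderbuffer with OpenGL ES, and then render; 2. The main display logic is the same. | 1. The pixel data source differs: FFmpeg software decoding stores its output in the AVFrame's uint8_t* data[AV_NUM_DATA_POINTERS], which is then turned into an SDL_VoutOverlay and filled into the Frame, whereas videotoolbox stores its output in the AVFrame's opaque field as a CVPixelBuffer; 2. Feeding pixel data to OpenGL ES differs: FFmpeg outputs raw pixel data, so func_uploadTexture uploads the raw data directly, whereas videotoolbox supplies a CVPixelBuffer. |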

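This article described how video is decoded and displayed on iOS with EAGL and OpenGL ES, compared FFmpeg software decoding with videotoolbox hardware decoding in terms of pixel data source and OpenGL ES rendering, and highlighted CAEAGLLayer as the bridge between the two.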
