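This article is based on Kubernetes 1.19 and explains how to configure and deploy, from binary files, a security-enabled, 3-node high-availability Kubernetes cluster. For test environments the setup can be simplified by running some components as single instances.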

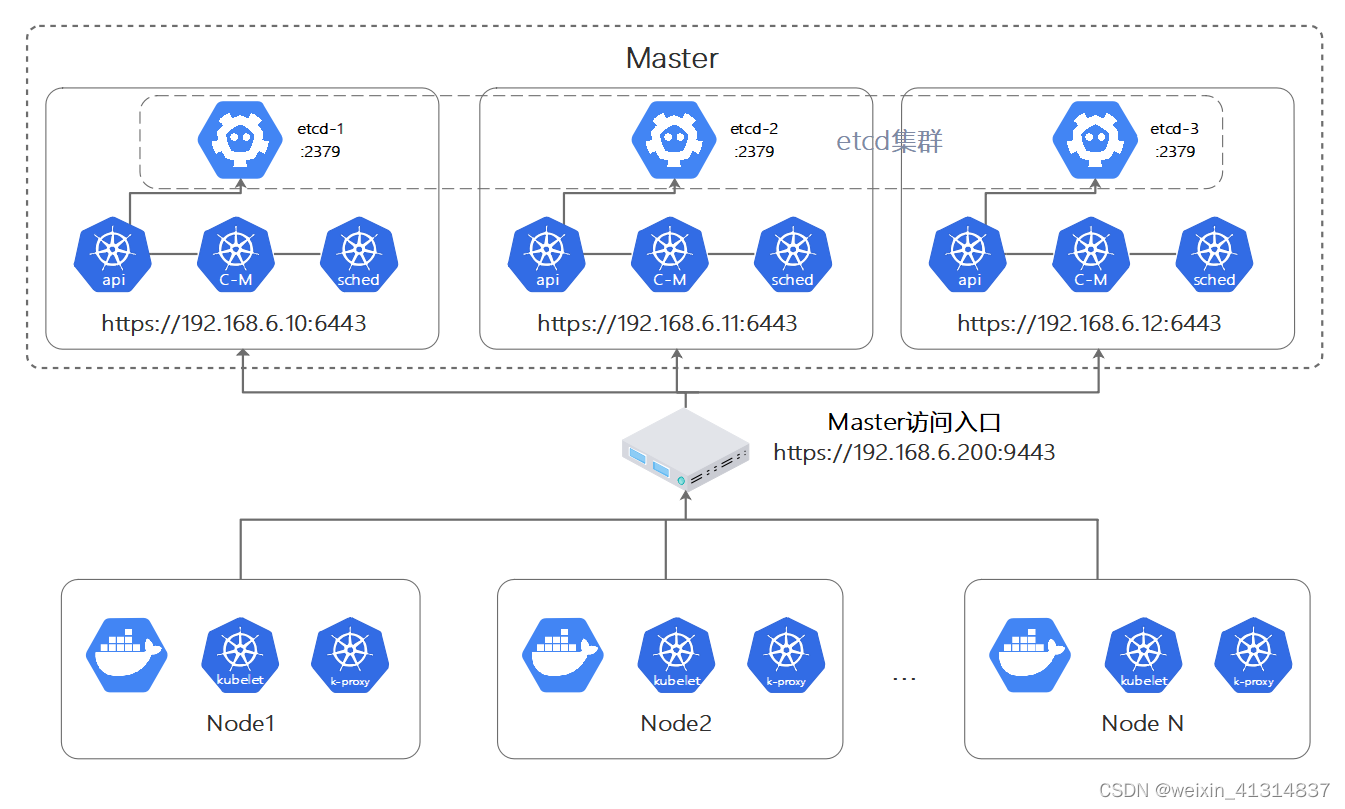

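I. Kubernetes High-Availability Cluster Architecture

In a production environment, the Master should be highly available and secure access should be enabled. At a minimum this means:

- The Master's kube-apiserver, kube-controller-manager, and kube-scheduler services run as multiple instances on at least 3 nodes.
- The Master enables CA-based HTTPS security.
- etcd runs in cluster mode on at least 3 nodes.
- The etcd cluster enables CA-based HTTPS security.
- The Master enables RBAC authorization mode.

Master high-availability deployment architecture:

A load balancer should sit in front of the 3 Master nodes and give clients a single access address. It can be built with hardware or software. There are many software load balancers to choose from; this article uses HAProxy together with Keepalived as the example.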

1. Environment

| Host | OS | Spec | IP | Software |
|---|---|---|---|---|
| k8s-m1 | CentOS Linux release 7.5.1804 (Core) | 2 cores, 2 GB RAM (minimum) | 192.168.6.10 | Docker 19.03, Kubernetes 1.19.0 |
| k8s-m2 | CentOS Linux release 7.5.1804 (Core) | 2 cores, 2 GB RAM (minimum) | 192.168.6.11 | Docker 19.03, Kubernetes 1.19.0 |
| k8s-m3 | CentOS Linux release 7.5.1804 (Core) | 2 cores, 2 GB RAM (minimum) | 192.168.6.12 | Docker 19.03, Kubernetes 1.19.0 |
| Others | Others | Others | Others | node |
| … | … | … | … | … |

2. Server Roles

| Role | IP | Components |
|---|---|---|
| k8s-master | 192.168.6.10-12 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, docker |
| k8s-node | Others | docker, kubelet, kube-proxy |
| Load balancer | 192.168.6.200 | keepalived, HAProxy |

II. Environment Initialization (all hosts)

- Disable the firewall

[root@linuxli ~]# systemctl stop firewalld

[root@linuxli ~]# systemctl disable firewalld

- Disable SELinux

[root@linuxli ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@linuxli ~]# setenforce 0

- Disable swap

[root@linuxli ~]# swapoff -a

[root@linuxli ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab
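Before editing /etc/fstab in place, it can help to preview what the expression does. The sketch below applies the same sed expression to a throwaway file; the two sample fstab entries are made up for illustration and are not taken from the hosts in this article.

```shell
#!/usr/bin/env bash
# Dry run of the fstab edit on a temporary file (sample content is hypothetical).
tmp_fstab="$(mktemp)"
cat > "$tmp_fstab" << 'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Same expression as above, but without -i, so the file itself is not modified.
preview="$(sed -r 's/.*swap.*/#&/' "$tmp_fstab")"
printf '%s\n' "$preview"
rm -f "$tmp_fstab"
```

Every line that contains the string "swap" is commented out, so running the in-place command a second time would prefix those lines with another "#".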

- Set the hostname (different on each host)

[root@linuxli ~]# hostnamectl set-hostname k8s-m1

[root@linuxli ~]# bash

- Add host entries on the masters

[root@k8s-m1 ~]# cat >> /etc/hosts << EOF

192.168.6.10 k8s-m1

192.168.6.11 k8s-m2

192.168.6.12 k8s-m3

EOF

- Pass bridged IPv4 traffic to the iptables chains

[root@k8s-m1 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8s-m1 ~]# sysctl --system

- Synchronize the time

[root@k8s-m1 ~]# yum -y install ntpdate

[root@k8s-m1 ~]# ntpdate time.windows.com

- Configure the yum repositories

[root@k8s-m1 ~]# mkdir /etc/yum.repos.d/bak

[root@k8s-m1 ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/

[root@k8s-m1 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.163.com/.help/CentOS7-Base-163.repo

[root@k8s-m1 ~]# wget -O /etc/yum.repos.d/Docker-ce.repo https://mirrors.163.com/docker-ce/linux/centos/docker-ce.repo

III. Install Docker

Docker serves as the default container runtime for Kubernetes in this setup, so it must be installed.

1. Install Docker with yum (all hosts)

[root@k8s-m1 ~]# yum -y install docker-ce-19.03.0

[root@k8s-m1 ~]# systemctl start docker &&systemctl enable docker

[root@k8s-m1 ~]# docker --version

2. Configure a registry mirror

[root@k8s-m1 ~]# cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

EOF

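IV. Create the CA Root Certificate

Enabling CA-based security for etcd and the Kubernetes services requires a CA certificate. If your organization runs a central certificate authority, use the CA certificate it issues. Otherwise, a self-signed CA certificate is enough to complete the security configuration.

The certificates for both etcd and Kubernetes are issued from a CA root certificate. In this article, Kubernetes and etcd share one CA root certificate.

Certificates can be created with tools such as openssl, easyrsa, or cfssl. This article uses openssl. The commands below create the CA root certificate, consisting of the private key file ca.key and the certificate file ca.crt.

1. Create the CA root certificate (run on k8s-m1)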

[root@k8s-m1 ~]# mkdir -p /etc/{etcd,kubernetes}/pki

[root@k8s-m1 ~]# openssl genrsa -out /etc/kubernetes/pki/ca.key 2048

[root@k8s-m1 ~]# openssl req -x509 -new -nodes -key /etc/kubernetes/pki/ca.key -subj "/CN=192.168.6.10" -days 36500 -out /etc/kubernetes/pki/ca.crt

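Main parameters:

- -subj: the value of "/CN" is the Master host name or IP address
- -days: the validity period of the certificate, in days

The generated files are stored in the /etc/kubernetes/pki directory.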

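V. Deploy a Secure, Highly Available etcd Cluster

etcd is the primary database of a Kubernetes cluster, so it must be installed and started before the Kubernetes services.

1. Download the etcd binaries and configure the systemd service (run on k8s-m1)

Download the etcd binaries from GitHub, for example etcd-v3.4.13-linux-amd64.tar.gz.

Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz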

[root@k8s-m1 ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

[root@k8s-m1 ~]# tar -xvf etcd-v3.4.13-linux-amd64.tar.gz

[root@k8s-m1 ~]# cp etcd-v3.4.13-linux-amd64/etcd* /usr/bin/

Create the systemd unit file for etcd

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/etcd.service<< EOF

[Unit]

Description=etcd key-value store

Documentation=https://github.com/etcd-io/etcd

After=network.target

[Service]

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

Restart=always

[Install]

WantedBy=multi-user.target

EOF

2. Create the etcd certificates

First create an x509 v3 configuration file, etcd_ssl.cnf, whose subjectAltName parameter (alt_names) lists the IP addresses of all etcd hosts

[root@k8s-m1 ~]# cat > /etc/etcd/pki/etcd_ssl.cnf<< EOF

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

IP.1 = 192.168.6.10

IP.2 = 192.168.6.11

IP.3 = 192.168.6.12

EOF

Then use openssl to create the etcd server certificate, signed by the CA: etcd_server.key and etcd_server.crt

[root@k8s-m1 ~]# openssl genrsa -out /etc/etcd/pki/etcd_server.key 2048

[root@k8s-m1 ~]# openssl req -new -key /etc/etcd/pki/etcd_server.key -config /etc/etcd/pki/etcd_ssl.cnf -subj "/CN=etcd-server" -out /etc/etcd/pki/etcd_server.csr

[root@k8s-m1 ~]# openssl x509 -req -in /etc/etcd/pki/etcd_server.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/etcd/pki/etcd_ssl.cnf -out /etc/etcd/pki/etcd_server.crt

Next create the client certificate, etcd_client.key and etcd_client.crt, which kube-apiserver uses later to connect to etcd.

[root@k8s-m1 ~]# openssl genrsa -out /etc/etcd/pki/etcd_client.key 2048

[root@k8s-m1 ~]# openssl req -new -key /etc/etcd/pki/etcd_client.key -config /etc/etcd/pki/etcd_ssl.cnf -subj "/CN=etcd-client" -out /etc/etcd/pki/etcd_client.csr

[root@k8s-m1 ~]# openssl x509 -req -in /etc/etcd/pki/etcd_client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/etcd/pki/etcd_ssl.cnf -out /etc/etcd/pki/etcd_client.crt
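The signing steps above can be rehearsed in a scratch directory before touching /etc. The following is a minimal sketch, assuming openssl is installed. The CA name demo-ca, the 1-day validity, and all file names under the temporary directory are illustrative and not part of the article's setup. It signs a server certificate with the same extension layout as etcd_ssl.cnf, then checks the chain and the SAN entries.

```shell
#!/usr/bin/env bash
# Self-contained rehearsal in a temporary directory; nothing under /etc is touched.
workdir="$(mktemp -d)"

# Throwaway CA (CN and validity are illustrative values).
openssl genrsa -out "$workdir/ca.key" 2048 2>/dev/null
openssl req -x509 -new -nodes -key "$workdir/ca.key" \
  -subj "/CN=demo-ca" -days 1 -out "$workdir/ca.crt"

# Same extension layout as etcd_ssl.cnf above.
cat > "$workdir/ssl.cnf" << 'EOF'
[ req ]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[ req_distinguished_name ]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[ alt_names ]
IP.1 = 192.168.6.10
IP.2 = 192.168.6.11
IP.3 = 192.168.6.12
EOF

# Key, CSR, and CA-signed certificate, mirroring the three commands above.
openssl genrsa -out "$workdir/server.key" 2048 2>/dev/null
openssl req -new -key "$workdir/server.key" -config "$workdir/ssl.cnf" \
  -subj "/CN=etcd-server" -out "$workdir/server.csr"
openssl x509 -req -in "$workdir/server.csr" -CA "$workdir/ca.crt" \
  -CAkey "$workdir/ca.key" -CAcreateserial -days 1 \
  -extensions v3_req -extfile "$workdir/ssl.cnf" \
  -out "$workdir/server.crt" 2>/dev/null

# Check the chain, then confirm the SAN entries made it into the certificate.
verify_out="$(openssl verify -CAfile "$workdir/ca.crt" "$workdir/server.crt")"
san_out="$(openssl x509 -in "$workdir/server.crt" -noout -text | grep 'IP Address')"
printf '%s\n%s\n' "$verify_out" "$san_out"
```

The same two inspection commands can be pointed at /etc/kubernetes/pki/ca.crt and /etc/etcd/pki/etcd_server.crt to check the real files.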

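3. etcd configuration file

Next, configure the 3 etcd nodes. etcd can be configured through startup flags, environment variables, or a configuration file.

The 3 etcd nodes run on the hosts 192.168.6.10, 192.168.6.11, and 192.168.6.12. The contents of the configuration file /etc/etcd/etcd.conf are as follows:

Create the configuration file for node 1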

[root@k8s-m1 ~]# cat > /etc/etcd/etcd.conf<< EOF

ETCD_NAME=etcd1

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.6.10:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.6.10:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.6.10:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.6.10:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.6.10:2380,etcd2=https://192.168.6.11:2380,etcd3=https://192.168.6.12:2380"

ETCD_INITIAL_CLUSTER_STATE=new

EOF

Copy the etcd configuration files and binaries from node 1 to the other nodes

[root@k8s-m1 ~]# scp -r /etc/etcd root@192.168.6.11:/etc/

[root@k8s-m1 ~]# scp -r /etc/etcd root@192.168.6.12:/etc/

[root@k8s-m1 ~]# scp /usr/bin/etcd* root@192.168.6.11:/usr/bin

[root@k8s-m1 ~]# scp /usr/bin/etcd* root@192.168.6.12:/usr/bin

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/etcd.service root@192.168.6.11:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/etcd.service root@192.168.6.12:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp -r /etc/kubernetes/ root@192.168.6.11:/etc/

[root@k8s-m1 ~]# scp -r /etc/kubernetes/ root@192.168.6.12:/etc/
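The copy commands above follow one pattern per peer, so they can be generated in a loop. This is a sketch in dry-run form: the function name plan_copies is hypothetical, and it only prints the scp commands (listing etcd and etcdctl explicitly rather than using the etcd* glob).

```shell
#!/usr/bin/env bash
# Peers that receive the files prepared on k8s-m1 (addresses from this article).
peers=(192.168.6.11 192.168.6.12)

# Print one scp command per peer and path; remove the "echo" to run them for real.
plan_copies() {
  local peer
  for peer in "${peers[@]}"; do
    echo "scp -r /etc/etcd root@${peer}:/etc/"
    echo "scp /usr/bin/etcd /usr/bin/etcdctl root@${peer}:/usr/bin/"
    echo "scp /usr/lib/systemd/system/etcd.service root@${peer}:/usr/lib/systemd/system/"
    echo "scp -r /etc/kubernetes root@${peer}:/etc/"
  done
}

plan_copies
```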

Rewrite the configuration files on node 2 and node 3 (run on k8s-m2 and k8s-m3 respectively)

[root@k8s-m2 ~]# cat > /etc/etcd/etcd.conf<< EOF

ETCD_NAME=etcd2

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.6.11:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.6.11:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.6.11:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.6.11:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.6.10:2380,etcd2=https://192.168.6.11:2380,etcd3=https://192.168.6.12:2380"

ETCD_INITIAL_CLUSTER_STATE=new

EOF

[root@k8s-m3 ~]# cat > /etc/etcd/etcd.conf<< EOF

ETCD_NAME=etcd3

ETCD_DATA_DIR=/etc/etcd/data

ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_CLIENT_CERT_AUTH=true

ETCD_LISTEN_CLIENT_URLS=https://192.168.6.12:2379

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.6.12:2379

ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt

ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key

ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt

ETCD_LISTEN_PEER_URLS=https://192.168.6.12:2380

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.6.12:2380

ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster

ETCD_INITIAL_CLUSTER="etcd1=https://192.168.6.10:2380,etcd2=https://192.168.6.11:2380,etcd3=https://192.168.6.12:2380"

ETCD_INITIAL_CLUSTER_STATE=new

EOF
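The three files above differ only in the member name and the member's own IP address. As a sketch, a small function can render the file from those two values; render_etcd_conf is a hypothetical helper, not part of etcd, and the fixed paths are the ones used in this article.

```shell
#!/usr/bin/env bash
# Render the content of /etc/etcd/etcd.conf for one member.
# Usage: render_etcd_conf <member-name> <member-ip>
render_etcd_conf() {
  local name="$1" ip="$2"
  cat << EOF
ETCD_NAME=${name}
ETCD_DATA_DIR=/etc/etcd/data
ETCD_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://${ip}:2379
ETCD_ADVERTISE_CLIENT_URLS=https://${ip}:2379
ETCD_PEER_CERT_FILE=/etc/etcd/pki/etcd_server.crt
ETCD_PEER_KEY_FILE=/etc/etcd/pki/etcd_server.key
ETCD_PEER_TRUSTED_CA_FILE=/etc/kubernetes/pki/ca.crt
ETCD_LISTEN_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.6.10:2380,etcd2=https://192.168.6.11:2380,etcd3=https://192.168.6.12:2380"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
}

# Example: print the file for the second member.
render_etcd_conf etcd2 192.168.6.11
```

On a real node the output would be redirected to /etc/etcd/etcd.conf. Only ETCD_NAME and the four URL settings change between members; the initial cluster list stays identical everywhere.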

4. Start the etcd cluster (run on each of the three hosts)

# systemctl start etcd &&systemctl enable etcd

Then run etcdctl endpoint health with the client certificate to check that the etcd cluster is healthy

etcdctl --cacert=/etc/kubernetes/pki/ca.crt --cert=/etc/etcd/pki/etcd_client.crt --key=/etc/etcd/pki/etcd_client.key --endpoints=https://192.168.6.10:2379,https://192.168.6.11:2379,https://192.168.6.12:2379 endpoint health

![image_1f8rcvst61kv97afr1v1h917j39.png](https://img-blog.csdnimg.cn/c8249f08c1474bda898f9b540e92a838.png)

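VI. Deploy a Secure, Highly Available Kubernetes Master Cluster

1. Download the Kubernetes binaries (run on k8s-m1)

Download the binary packages from GitHub.

v1.19.0 download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.19.md#v1190

Find Server Binaries and download the matching package.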

![image_1f8re05741djgmup1vred7mivq9.png](https://img-blog.csdnimg.cn/f04d5102e37644478335217e43f27638.png)

The services deployed on a Kubernetes Master node are etcd, kube-apiserver, kube-controller-manager, and kube-scheduler.

The services deployed on a worker node are docker, kubelet, and kube-proxy. After extracting the Kubernetes package, copy the executables to the /usr/bin/ directory.

[root@k8s-m1 ~]# wget https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz

[root@k8s-m1 ~]# tar -xvf kubernetes-server-linux-amd64.tar.gz

[root@k8s-m1 ~]# cd kubernetes/server/bin/

[root@k8s-m1 bin]# cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/bin/

# Create the Kubernetes log directory for later use

[root@k8s-m1 ~]# mkdir -p /var/log/kubernetes

2. Deploy the kube-apiserver service (run on k8s-m1)

(1) Create the certificates required by kube-apiserver.

[root@k8s-m1 ~]# cat >/etc/kubernetes/pki/master_ssl.cnf<< EOF

[ req ]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[ req_distinguished_name ]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[ alt_names ]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

DNS.5 = k8s-m1

DNS.6 = k8s-m2

DNS.7 = k8s-m3

IP.1 = 169.169.0.1

IP.2 = 192.168.6.10

IP.3 = 192.168.6.11

IP.4 = 192.168.6.12

IP.5 = 192.168.6.200

EOF

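The subjectAltName field ([alt_names]) of this file must list every domain name and IP address of the Master service, including:

- DNS host names, for example k8s-m1, k8s-m2, k8s-m3
- Virtual service names of the Master Service, for example kubernetes.default
- IP addresses of each kube-apiserver host and of the load balancer, for example 192.168.6.10, 192.168.6.11, 192.168.6.12, and 192.168.6.200
- The ClusterIP address of the Master Service virtual service, for example 169.169.0.1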
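Because every name and address must appear in [alt_names] with a sequential index, the section can be generated from two lists. The sketch below uses a hypothetical helper, render_alt_names, and a shortened list of names for illustration.

```shell
#!/usr/bin/env bash
# Emit an [ alt_names ] section from DNS names and IP addresses.
# Usage: render_alt_names "<space-separated DNS names>" "<space-separated IPs>"
render_alt_names() {
  local dns_names="$1" ips="$2" i name ip
  echo "[ alt_names ]"
  i=1
  for name in $dns_names; do
    echo "DNS.${i} = ${name}"
    i=$((i + 1))
  done
  i=1
  for ip in $ips; do
    echo "IP.${i} = ${ip}"
    i=$((i + 1))
  done
}

# Shortened example; the real file also lists the remaining names and addresses.
render_alt_names "kubernetes kubernetes.default k8s-m1" "169.169.0.1 192.168.6.10 192.168.6.200"
```

Adding a fourth master or a second VIP then means appending one value to a list instead of renumbering entries by hand.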

Then use openssl to create the kube-apiserver server certificate, signed by the CA: apiserver.key and apiserver.crt.

[root@k8s-m1 ~]# openssl genrsa -out /etc/kubernetes/pki/apiserver.key 2048

[root@k8s-m1 ~]# openssl req -new -key /etc/kubernetes/pki/apiserver.key -config /etc/kubernetes/pki/master_ssl.cnf -subj "/CN=192.168.6.10" -out /etc/kubernetes/pki/apiserver.csr

[root@k8s-m1 ~]# openssl x509 -req -in /etc/kubernetes/pki/apiserver.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -extensions v3_req -extfile /etc/kubernetes/pki/master_ssl.cnf -out /etc/kubernetes/pki/apiserver.crt

(2) Create the systemd unit file for kube-apiserver

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/kube-apiserver.service<< EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/apiserver

ExecStart=/usr/bin/kube-apiserver \$KUBE_API_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

EOF

(3) The file /etc/kubernetes/apiserver sets all kube-apiserver startup flags, including the CA security flags, through the environment variable KUBE_API_ARGS

[root@k8s-m1 ~]# cat > /etc/kubernetes/apiserver << EOF

KUBE_API_ARGS="--insecure-port=0 \\

--secure-port=6443 \\

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt \\

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key \\

--client-ca-file=/etc/kubernetes/pki/ca.crt \\

--apiserver-count=3 --endpoint-reconciler-type=master-count \\

--etcd-servers=https://192.168.6.10:2379,https://192.168.6.11:2379,https://192.168.6.12:2379 \\

--etcd-cafile=/etc/kubernetes/pki/ca.crt \\

--etcd-certfile=/etc/etcd/pki/etcd_client.crt \\

--etcd-keyfile=/etc/etcd/pki/etcd_client.key \\

--service-cluster-ip-range=169.169.0.0/16 \\

--service-node-port-range=30000-32767 \\

--allow-privileged=true \\

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

EOF
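The value of --service-cluster-ip-range and the entry IP.1 = 169.169.0.1 in master_ssl.cnf are linked: the built-in kubernetes Service receives the first address of the Service range, so that address must be in the certificate. A minimal sketch of the arithmetic, assuming an IPv4 CIDR whose base is the network address (first_service_ip is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Print the first usable address of an IPv4 CIDR (network address + 1).
# Assumes the part before "/" is already the network address.
first_service_ip() {
  local cidr="$1" base a b c d n
  base="${cidr%/*}"
  IFS=. read -r a b c d <<< "$base"
  n=$(( (a << 24) + (b << 16) + (c << 8) + d + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip 169.169.0.0/16
```

If the Service range is changed, the SAN entry in master_ssl.cnf needs to change with it and the certificate has to be reissued.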

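Main parameters:

- --secure-port: HTTPS port; default 6443.
- --insecure-port: HTTP port; default 8080. Setting it to 0 disables HTTP access.
- --tls-cert-file: full path of the server certificate file.
- --tls-private-key-file: full path of the server private key file.
- --client-ca-file: full path of the CA root certificate.
- --apiserver-count: number of API Server instances.
- --etcd-servers: list of URLs for connecting to etcd.
- --etcd-cafile: full path of the CA root certificate used by etcd.
- --etcd-certfile: full path of the etcd client certificate file.
- --etcd-keyfile: full path of the etcd client private key file.
- --service-cluster-ip-range: virtual IP range for Services; it must not overlap the IP addresses of the physical hosts.
- --service-node-port-range: range of host ports that Services may use.
- --allow-privileged: whether containers may run in privileged mode; default false.
- --logtostderr: whether to write logs to stderr; default true.
- --log-dir: log output directory.
- --v: log verbosity level.

(4) Start kube-apiserver and enable it at boot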

[root@k8s-m1 ~]# systemctl start kube-apiserver.service &&systemctl enable kube-apiserver.service

[root@k8s-m1 ~]# systemctl status kube-apiserver.service

![image_1f8rt7a6elr1dgg1cna1qra16ph9.png](https://img-blog.csdnimg.cn/16a360707749453b89995b11a553a69a.png)

3. Create the client certificate (run on k8s-m1)

kube-controller-manager, kube-scheduler, kubelet, and kube-proxy all connect to kube-apiserver as clients, so they need a client certificate.

(1) Create the private key and the CA-signed certificate with openssl

[root@k8s-m1 ~]# openssl genrsa -out /etc/kubernetes/pki/client.key 2048

[root@k8s-m1 ~]# openssl req -new -key /etc/kubernetes/pki/client.key -subj "/CN=admin" -out /etc/kubernetes/pki/client.csr

[root@k8s-m1 ~]# openssl x509 -req -in /etc/kubernetes/pki/client.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 36500 -out /etc/kubernetes/pki/client.crt

4. Create the kubeconfig file that clients use to connect to kube-apiserver (run on k8s-m1)

Create a single kubeconfig file shared by kube-controller-manager, kube-scheduler, kubelet, and kube-proxy for connecting to kube-apiserver. The kubectl command-line tool uses the same file later.

[root@k8s-m1 ~]# cat > /etc/kubernetes/kubeconfig << EOF

apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://192.168.6.200:9443
    certificate-authority: /etc/kubernetes/pki/ca.crt
users:
- name: admin
  user:
    client-certificate: /etc/kubernetes/pki/client.crt
    client-key: /etc/kubernetes/pki/client.key
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

EOF

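Key settings:

- server: the VIP address used by the load balancer (HAProxy) and the port HAProxy listens on.
- client-certificate: full path of the client certificate file.
- client-key: full path of the client private key file.
- certificate-authority: full path of the CA root certificate.
- The user name under users and the user under contexts: the user name for connecting to the API Server; keep it identical to the "/CN" name in the client certificate.

5. Deploy the kube-controller-manager service (run on k8s-m1)

(1) Create the systemd unit file for kube-controller-manager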
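Since the kubeconfig refers to certificate files by path, a missing or misnamed file only surfaces when a service fails to start. The sketch below lists referenced files that do not exist. missing_kubeconfig_files is a hypothetical helper, and the demo kubeconfig lives in a temporary directory so the example runs anywhere.

```shell
#!/usr/bin/env bash
# Print certificate/key paths referenced by a kubeconfig that do not exist on disk.
# Usage: missing_kubeconfig_files <kubeconfig-path>
missing_kubeconfig_files() {
  local kubeconfig="$1" path
  awk '$1 ~ /^(certificate-authority|client-certificate|client-key):$/ { print $2 }' "$kubeconfig" |
  while read -r path; do
    [ -e "$path" ] || echo "$path"
  done
}

# Demo: the CA and client certificate exist, the client key is deliberately absent.
demo_dir="$(mktemp -d)"
touch "$demo_dir/ca.crt" "$demo_dir/client.crt"
cat > "$demo_dir/kubeconfig" << EOF
clusters:
- name: default
  cluster:
    server: https://192.168.6.200:9443
    certificate-authority: $demo_dir/ca.crt
users:
- name: admin
  user:
    client-certificate: $demo_dir/client.crt
    client-key: $demo_dir/client.key
EOF

missing_kubeconfig_files "$demo_dir/kubeconfig"
```

Run against /etc/kubernetes/kubeconfig on each host after copying the files, an empty output means all three referenced files are present.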

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/kube-controller-manager.service<< EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/controller-manager

ExecStart=/usr/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

EOF

(2) The file /etc/kubernetes/controller-manager sets all kube-controller-manager startup flags through the environment variable KUBE_CONTROLLER_MANAGER_ARGS

[root@k8s-m1 ~]# cat > /etc/kubernetes/controller-manager<< EOF

KUBE_CONTROLLER_MANAGER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \\

--leader-elect=true \\

--service-cluster-ip-range=169.169.0.0/16 \\

--service-account-private-key-file=/etc/kubernetes/pki/apiserver.key \\

--root-ca-file=/etc/kubernetes/pki/ca.crt \\

--log-dir=/var/log/kubernetes --logtostderr=false --v=0"

EOF

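Main parameters:

- --kubeconfig: connection settings for the API Server.
- --leader-elect: enables leader election; set it to true in a 3-node environment.
- --service-account-private-key-file: full path of the private key used to issue ServiceAccount tokens automatically.
- --root-ca-file: full path of the CA root certificate.
- --service-cluster-ip-range: virtual IP range for Services; keep it identical to the kube-apiserver setting.

(3) Start kube-controller-manager and enable it at boot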

[root@k8s-m1 ~]# systemctl start kube-controller-manager.service &&systemctl enable kube-controller-manager.service

[root@k8s-m1 ~]# systemctl status kube-controller-manager.service

![image_1f8s02bvaf7t1kir172e1vego6dm.png](https://img-blog.csdnimg.cn/b80fe2a4314d4410ac02fe9b82a90d07.png)

6. Deploy the kube-scheduler service (run on k8s-m1)

(1) Create the systemd unit file for kube-scheduler

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/kube-scheduler.service<< EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/scheduler

ExecStart=/usr/bin/kube-scheduler \$KUBE_SCHEDULER_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

EOF

(2) The file /etc/kubernetes/scheduler sets all kube-scheduler startup flags through the environment variable KUBE_SCHEDULER_ARGS

[root@k8s-m1 ~]# cat > /etc/kubernetes/scheduler<< EOF

KUBE_SCHEDULER_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \\

--leader-elect=true \\

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

EOF

(3) Start kube-scheduler and enable it at boot

[root@k8s-m1 ~]# systemctl start kube-scheduler.service &&systemctl enable kube-scheduler.service

[root@k8s-m1 ~]# systemctl status kube-scheduler.service

![image_1f8s11fsc1ip11glcb1o11cu1s3r1j.png](https://img-blog.csdnimg.cn/2ca7c9f955074fc99989d114b7841f26.png)

7. Copy the kube-apiserver, kube-controller-manager, and kube-scheduler files to the other two nodes and start the services (run the commands on the host named in each comment)

#k8s-m1

[root@k8s-m1 ~]# scp /usr/bin/kube* root@k8s-m2:/usr/bin/

[root@k8s-m1 ~]# scp /usr/bin/kube* root@k8s-m3:/usr/bin/

[root@k8s-m1 ~]# scp -r /etc/kubernetes root@k8s-m2:/etc/

[root@k8s-m1 ~]# scp -r /etc/kubernetes root@k8s-m3:/etc/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kube-* root@k8s-m2:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kube-* root@k8s-m3:/usr/lib/systemd/system/

#k8s-m2

[root@k8s-m2 ~]# mkdir -p /var/log/kubernetes

[root@k8s-m2 ~]# systemctl start kube-apiserver.service &&systemctl start kube-controller-manager.service &&systemctl start kube-scheduler.service

[root@k8s-m2 ~]# systemctl enable kube-apiserver.service &&systemctl enable kube-controller-manager.service &&systemctl enable kube-scheduler.service

[root@k8s-m2 ~]# systemctl status kube-apiserver.service &&systemctl status kube-controller-manager.service &&systemctl status kube-scheduler.service

#k8s-m3

[root@k8s-m3 ~]# mkdir -p /var/log/kubernetes

[root@k8s-m3 ~]# systemctl start kube-apiserver.service &&systemctl start kube-controller-manager.service &&systemctl start kube-scheduler.service

[root@k8s-m3 ~]# systemctl enable kube-apiserver.service &&systemctl enable kube-controller-manager.service &&systemctl enable kube-scheduler.service

[root@k8s-m3 ~]# systemctl status kube-apiserver.service &&systemctl status kube-controller-manager.service &&systemctl status kube-scheduler.service

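8. Deploy a highly available load balancer with HAProxy and keepalived

Deploy HAProxy and keepalived with at least two instances each to avoid a single point of failure. The example below uses the two servers 192.168.6.10 and 192.168.6.11. HAProxy forwards client requests to the 3 backend kube-apiserver instances, and keepalived keeps the VIP 192.168.6.200 highly available.

Deployment architecture of HAProxy and keepalived:

![image_1f8s30cn519o0j88tr1bad19fd20.png](https://img-blog.csdnimg.cn/e3f83f6bd13e4822b0c5f62ebf9a001c.png)

(1) Deploy two HAProxy instances (run on k8s-m1 and k8s-m2)

Prepare the HAProxy configuration file haproxy.cfg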

# mkdir -p /opt/k8s-ha-keep

# cat > /opt/k8s-ha-keep/haproxy.cfg<< EOF

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4096

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend kube-apiserver

mode tcp

bind *:9443

option tcplog

default_backend kube-apiserver

listen stats

mode http

bind *:8888

stats auth admin:password

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /stats

log 127.0.0.1 local3 err

backend kube-apiserver

mode tcp

balance roundrobin

server k8s-master1 192.168.6.10:6443 check

server k8s-master2 192.168.6.11:6443 check

server k8s-master3 192.168.6.12:6443 check

EOF

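Main parameters:

- frontend: the protocol and port HAProxy listens on; here TCP on port 9443.
- backend: the addresses of the 3 backend kube-apiserver instances.
- listen stats: the status monitoring service.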
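The server lines of the backend section follow a fixed pattern, so they can be generated from the list of master addresses. A sketch, with render_backend_servers as a hypothetical helper that reuses the k8s-masterN naming from the configuration above:

```shell
#!/usr/bin/env bash
# Emit one HAProxy "server" line per kube-apiserver address.
# Usage: render_backend_servers <port> <ip>...
render_backend_servers() {
  local port="$1" i=1 ip
  shift
  for ip in "$@"; do
    echo "server k8s-master${i} ${ip}:${port} check"
    i=$((i + 1))
  done
}

render_backend_servers 6443 192.168.6.10 192.168.6.11 192.168.6.12
```

The port passed here has to match the --secure-port of kube-apiserver, not the 9443 port that the HAProxy frontend binds.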

Start HAProxy as a Docker container on both servers, 192.168.6.10 and 192.168.6.11

# docker run -d --name k8s-haproxy --net=host --restart=always -v /opt/k8s-ha-keep/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro haproxytech/haproxy-debian:2.3

Open http://192.168.6.10:8888/stats in a browser to reach the HAProxy management page

![image_1f8s4tmr8i7p1dap175u1hflvmi2d.png](https://img-blog.csdnimg.cn/2ad1fcbb629e4c308ad892c56df2cd6e.png)

(2) Deploy two keepalived instances (run on k8s-m1 and k8s-m2)

keepalived keeps the VIP address highly available and is also deployed on the two servers 192.168.6.10 and 192.168.6.11. Its main job here is to monitor HAProxy: when one HAProxy instance becomes unavailable, the VIP moves automatically to the other host.

Create the configuration file keepalived.conf on the first server, 192.168.6.10

[root@k8s-m1 ~]# cat >/opt/k8s-ha-keep/keepalived.conf<< EOF

! Configuration File for keepalived

global_defs {

router_id LVS_1

}

vrrp_script checkhaproxy

{

script "/usr/bin/check-haproxy.sh"

interval 2

weight -30

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 51

priority 100

advert_int 1

virtual_ipaddress {

192.168.6.200/24 dev ens32

}

authentication {

auth_type PASS

auth_pass password

}

track_script {

checkhaproxy

}

}

EOF

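Main parameters:

- vrrp_instance VI_1: the name of the keepalived VRRP virtual router instance.
- state: set to "MASTER" here; set every other keepalived instance to "BACKUP".
- interface: the name of the network interface that carries the VIP.
- virtual_router_id: for example 51.
- priority: the priority.
- virtual_ipaddress: the VIP address.
- authentication: credentials for accessing the keepalived service.
- track_script: the HAProxy health check script.

Create the health check script (identical on both hosts)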
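The interaction between priority and the vrrp_script weight decides when the VIP moves. With a negative weight, keepalived lowers a node's priority by that amount while its check script fails. The sketch below only reproduces that arithmetic for the negative-weight case used in this article; priority_after_check is a hypothetical helper, not a keepalived command.

```shell
#!/usr/bin/env bash
# Effective VRRP priority for a negative script weight:
# the weight is applied only while the health check fails.
# Usage: priority_after_check <priority> <weight> <ok|fail>
priority_after_check() {
  local priority="$1" weight="$2" check_result="$3"
  if [ "$check_result" = "fail" ] && [ "$weight" -lt 0 ]; then
    echo $(( priority + weight ))
  else
    echo "$priority"
  fi
}

echo "healthy node: $(priority_after_check 100 -30 ok)"
echo "failed node:  $(priority_after_check 100 -30 fail)"
```

With both instances configured at priority 100, a node whose HAProxy check fails drops to 70, below its healthy peer, so the peer takes over the VIP.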

# vim /opt/k8s-ha-keep/check-haproxy.sh

#!/bin/bash

count=`netstat -apn|grep 9443|wc -l`

if [ $count -gt 0 ];then

exit 0

else

exit 1

fi
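The check above counts every netstat line that contains the string 9443, which also matches other ports such as 19443 and connections that are not in the listening state. A stricter variant is sketched below. It assumes the ss utility is available where the script runs (an assumption about the keepalived container image), and has_listener is a hypothetical helper that reads `ss -ltn` style output on stdin so it can be tried without a live socket.

```shell
#!/usr/bin/env bash
# Succeed if the input (in `ss -ltn` format) shows a socket listening on exactly <port>.
# Usage: ss -ltn | has_listener <port>
has_listener() {
  local port="$1"
  awk -v port="$port" '
    $1 == "LISTEN" {
      n = split($4, parts, ":")   # column 4 is local address:port; the port follows the last colon
      if (parts[n] == port) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}

# Demo with canned input instead of a live socket table.
sample='State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      128    *:9443             *:*
LISTEN 0      128    *:8888             *:*'

if printf '%s\n' "$sample" | has_listener 9443; then
  echo "haproxy frontend is listening"
fi
```

In a health check script, the function's exit status can be passed through directly, for example `ss -ltn | has_listener 9443; exit $?`.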

Create the configuration file keepalived.conf on the second server, 192.168.6.11

[root@k8s-m2 ~]# cat >/opt/k8s-ha-keep/keepalived.conf<< EOF

! Configuration File for keepalived

global_defs {

router_id LVS_1

}

vrrp_script checkhaproxy

{

script "/usr/bin/check-haproxy.sh"

interval 2

weight -30

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51

priority 100

advert_int 1

virtual_ipaddress {

192.168.6.200/24 dev ens32

}

authentication {

auth_type PASS

auth_pass password

}

track_script {

checkhaproxy

}

}

EOF

Start keepalived as a Docker container on both servers, 192.168.6.10 and 192.168.6.11

# docker run -d --name k8s-keepalived --restart=always --net=host --cap-add=NET_ADMIN --cap-add=NET_BROADCAST --cap-add=NET_RAW -v /opt/k8s-ha-keep/keepalived.conf:/container/service/keepalived/assets/keepalived.conf -v /opt/k8s-ha-keep/check-haproxy.sh:/usr/bin/check-haproxy.sh osixia/keepalived:2.0.20 --copy-service

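![image_1f8tt27th3951hic25chj1ija9.png](https://img-blog.csdnimg.cn/77f5050c0e464110b309d8a705cb49f7.png)

Use curl to check that kube-apiserver is reachable through HAProxy at 192.168.6.200:9443. An HTTP 401 Unauthorized response, as shown below, means the request reached kube-apiserver but carried no client credentials.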

curl -v -k https://192.168.6.200:9443

* About to connect() to 192.168.6.200 port 9443 (#0)

* Trying 192.168.6.200...

* Connected to 192.168.6.200 (192.168.6.200) port 9443 (#0)

* Initializing NSS with certpath: sql:/etc/pki/nssdb

* skipping SSL peer certificate verification

* NSS: client certificate not found (nickname not specified)

* SSL connection using TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

* Server certificate:

* subject: CN=192.168.6.10

* start date: 6月 23 07:06:50 2021 GMT

* expire date: 5月 30 07:06:50 2121 GMT

* common name: 192.168.6.10

* issuer: CN=192.168.6.10

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: 192.168.6.200:9443

> Accept: */*

>

< HTTP/1.1 401 Unauthorized

< Cache-Control: no-cache, private

< Content-Type: application/json

< Date: Thu, 24 Jun 2021 02:22:39 GMT

< Content-Length: 165

<

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

* Connection #0 to host 192.168.6.200 left intact

}

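VII. Deploy the Node Services

Each Node needs Docker, kubelet, and kube-proxy. After the Node has joined the Kubernetes cluster, management components such as the CNI network plugin and the DNS add-on also have to be deployed.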

[root@k8s-m1 ~]# cd /root/kubernetes/server/bin/

[root@k8s-m1 bin]# cp kubelet kube-proxy /usr/bin/

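This article deploys the three hosts 192.168.6.10, 192.168.6.11, and 192.168.6.12 as Nodes. Because these three hosts are also the Master nodes, the result is a Kubernetes cluster with three Nodes.

1. Deploy the kubelet service

(1) Create the systemd unit file for kubelet (run on k8s-m1)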

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/kubelet.service<< EOF

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet \$KUBELET_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

EOF

(2) The file /etc/kubernetes/kubelet sets all kubelet startup flags through the environment variable KUBELET_ARGS

[root@k8s-m1 ~]# cat > /etc/kubernetes/kubelet<< EOF

KUBELET_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig --config=/etc/kubernetes/kubelet.config \\

--hostname-override=192.168.6.10 \\

--network-plugin=cni \\

--pod-infra-container-image=docker.io/juestnow/pause-amd64:3.2 \\

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

EOF

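Main parameters:

- --kubeconfig: connection settings for the API Server; it can be the same kubeconfig file used by kube-controller-manager. The client certificates must be copied from the Master host to /etc/kubernetes/pki on the Node host.
- --config: the kubelet configuration file.
- --hostname-override: the name of this Node in the cluster.
- --network-plugin: the network plugin type; the CNI network plugin is recommended.
- --pod-infra-container-image: the pause image.

Create the kubelet.config configuration file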

[root@k8s-m1 ~]# cat > /etc/kubernetes/kubelet.config<< EOF

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
cgroupDriver: cgroupfs
clusterDNS: ["169.169.0.100"]
clusterDomain: cluster.local
authentication:
  anonymous:
    enabled: true

EOF

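kubelet configuration parameters:

- address: the IP address the service listens on.
- port: the port the service listens on.
- cgroupDriver: the cgroup driver; default cgroupfs.
- clusterDNS: the IP address of the cluster DNS service.
- clusterDomain: the DNS domain suffix for Services.
- authentication: whether anonymous access is allowed or webhook authentication is used.

(3) Once the configuration files are ready, copy them to the other hosts (run on k8s-m1)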
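The clusterDNS address has to fall inside the Service range configured on kube-apiserver (169.169.0.0/16 in this article), and that range must not contain the hosts' own addresses. A small sketch of that membership check in shell arithmetic; ip_to_int and ip_in_cidr are hypothetical helpers for IPv4 only.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeed if <ip> lies inside <cidr>.
# Usage: ip_in_cidr <ip> <cidr>
ip_in_cidr() {
  local ip="$1" cidr="$2" bits mask
  bits="${cidr#*/}"
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "${cidr%/*}") & mask )) ]
}

ip_in_cidr 169.169.0.100 169.169.0.0/16 && echo "clusterDNS is inside the Service range"
ip_in_cidr 192.168.6.10 169.169.0.0/16 || echo "host address is outside the Service range"
```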

[root@k8s-m1 ~]# scp -r /usr/bin/kubelet /usr/bin/kube-proxy root@192.168.6.11:/usr/bin/

[root@k8s-m1 ~]# scp -r /usr/bin/kubelet /usr/bin/kube-proxy root@192.168.6.12:/usr/bin/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kubelet.service root@192.168.6.11:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kubelet.service root@192.168.6.12:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /etc/kubernetes/kubelet /etc/kubernetes/kubelet.config root@192.168.6.11:/etc/kubernetes/

[root@k8s-m1 ~]# scp /etc/kubernetes/kubelet /etc/kubernetes/kubelet.config root@192.168.6.12:/etc/kubernetes/

(4) Start kubelet on every node and enable it at boot (run on all 3 hosts)

#k8s-m1

[root@k8s-m1 ~]# systemctl start kubelet &&systemctl enable kubelet

#k8s-m2

[root@k8s-m2 ~]# sed -i 's/192.168.6.10/192.168.6.11/g' /etc/kubernetes/kubelet

[root@k8s-m2 ~]# systemctl start kubelet &&systemctl enable kubelet

#k8s-m3

[root@k8s-m3 ~]# sed -i 's/192.168.6.10/192.168.6.12/g' /etc/kubernetes/kubelet

[root@k8s-m3 ~]# systemctl start kubelet &&systemctl enable kubelet

2. Deploy the kube-proxy service

(1) Create the systemd unit file for kube-proxy (run on k8s-m1)

[root@k8s-m1 ~]# cat > /usr/lib/systemd/system/kube-proxy.service<< EOF

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/proxy

ExecStart=/usr/bin/kube-proxy \$KUBE_PROXY_ARGS

Restart=always

[Install]

WantedBy=multi-user.target

EOF

(2) The file /etc/kubernetes/proxy sets all kube-proxy startup flags through the environment variable KUBE_PROXY_ARGS

[root@k8s-m1 ~]# cat > /etc/kubernetes/proxy<< EOF

KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \\

--hostname-override=192.168.6.10 \\

--proxy-mode=iptables \\

--logtostderr=false --log-dir=/var/log/kubernetes --v=0"

EOF

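Main parameters:

- --kubeconfig: connection settings for the API Server; it can be the same kubeconfig file used by kube-controller-manager. The client certificates must be copied from the Master host to /etc/kubernetes/pki on the Node host.
- --hostname-override: the name of this Node in the cluster.
- --proxy-mode: the proxy mode, such as iptables, ipvs, or kernelspace.

(3) Once the configuration files are ready, copy them to the other hosts (run on k8s-m1)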

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kube-proxy.service root@192.168.6.11:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /usr/lib/systemd/system/kube-proxy.service root@192.168.6.12:/usr/lib/systemd/system/

[root@k8s-m1 ~]# scp /etc/kubernetes/proxy root@192.168.6.11:/etc/kubernetes/

[root@k8s-m1 ~]# scp /etc/kubernetes/proxy root@192.168.6.12:/etc/kubernetes/

(4) Start kube-proxy on every node and enable it at boot (run on all 3 hosts)

#k8s-m1

[root@k8s-m1 ~]# systemctl start kube-proxy.service &&systemctl enable kube-proxy.service

#k8s-m2

[root@k8s-m2 ~]# sed -i 's/192.168.6.10/192.168.6.11/g' /etc/kubernetes/proxy

[root@k8s-m2 ~]# systemctl start kube-proxy.service &&systemctl enable kube-proxy.service

#k8s-m3

[root@k8s-m3 ~]# sed -i 's/192.168.6.10/192.168.6.12/g' /etc/kubernetes/proxy

[root@k8s-m3 ~]# systemctl start kube-proxy.service &&systemctl enable kube-proxy.service
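The sed commands used for the kubelet and kube-proxy files replace every occurrence of 192.168.6.10, and the unescaped dots match any character. A narrower sketch that rewrites only the value of --hostname-override is shown below. set_hostname_override is a hypothetical helper, it relies on GNU sed (-i and -E), and the demo operates on a temporary copy rather than on /etc/kubernetes/proxy.

```shell
#!/usr/bin/env bash
# Rewrite only the --hostname-override value in an environment file.
# Usage: set_hostname_override <file> <node-ip>
set_hostname_override() {
  local file="$1" node_ip="$2"
  sed -i -E "s/(--hostname-override=)[^ ]+/\1${node_ip}/" "$file"
}

# Demo on a temporary file with the same layout as /etc/kubernetes/proxy.
demo_file="$(mktemp)"
cat > "$demo_file" << 'EOF'
KUBE_PROXY_ARGS="--kubeconfig=/etc/kubernetes/kubeconfig \
--hostname-override=192.168.6.10 \
--proxy-mode=iptables"
EOF

set_hostname_override "$demo_file" 192.168.6.12
cat "$demo_file"
```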

3. Verify the Nodes from the Master with kubectl

Once kubelet and kube-proxy are running on a Node, the Node registers itself with the Master automatically. The registered Nodes can then be listed with kubectl on a Master host.

[root@k8s-m1 ~]# kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes

NAME STATUS ROLES AGE VERSION

192.168.6.10 NotReady <none> 34m v1.19.0

192.168.6.11 NotReady <none> 24m v1.19.0

192.168.6.12 NotReady <none> 23m v1.19.0

The Nodes stay NotReady until a CNI network is deployed for the cluster. This article uses the calico CNI plugin.

[root@k8s-m1 ~]# alias kubectl="kubectl --kubeconfig=/etc/kubernetes/kubeconfig"

[root@k8s-m1 ~]# kubectl apply -f "https://docs.projectcalico.org/manifests/calico.yaml"

After the CNI network plugin is running, the Node status changes to "Ready"

[root@k8s-m1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.6.10 Ready <none> 44m v1.19.0

192.168.6.11 Ready <none> 35m v1.19.0

192.168.6.12 Ready <none> 34m v1.19.0
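When scripting the wait for the CNI plugin, the node listing can be reduced to a single number. The sketch below counts nodes whose STATUS column is anything other than Ready; count_not_ready is a hypothetical helper that reads `kubectl get nodes` output on stdin, and the sample is the NotReady listing shown earlier.

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS is not exactly "Ready" in `kubectl get nodes` output.
# Usage: kubectl get nodes | count_not_ready
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

sample_nodes='NAME STATUS ROLES AGE VERSION
192.168.6.10 NotReady <none> 34m v1.19.0
192.168.6.11 NotReady <none> 24m v1.19.0
192.168.6.12 NotReady <none> 23m v1.19.0'

printf '%s\n' "$sample_nodes" | count_not_ready
```

Compound states such as "Ready,SchedulingDisabled" are counted as not ready by this strict comparison, which may or may not be what a given script wants.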
