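Details:

This post installs the Harbor image registry inside k8s using helm. Overall it is much the same as a docker-compose deployment.

Official helm repository address:

Without further ado, let's begin.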

1. Prepare a StorageClass in advance, backed by NFS

If NFS is not installed on your servers yet, see my article "k8s部署nginx+NFS+SSL" (deploying nginx + NFS + SSL on k8s) on the CSDN blog.

#Provisioner manifest (mounts the NFS share)

cat provisioner.yaml

```
apiVersion: apps/v1
kind: Deployment # the provisioner runs as a Deployment
metadata:
  name: harbor-nfs-client-provisioner
  labels:
    app: harbor-nfs-client-provisioner
  namespace: harbor # namespace the provisioner lives in; change it to your own
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: harbor-nfs-client-provisioner
  template:
    metadata:
      labels:
        app: harbor-nfs-client-provisioner
    spec:
      serviceAccountName: harbor-nfs-client-provisioner # ServiceAccount used by the provisioner
      containers:
        - name: harbor-nfs-client-provisioner
          image: vbouchaud/nfs-client-provisioner:latest # provisioner image
          volumeMounts:
            - name: nfs-client-harbor
              mountPath: /persistentvolumes # fixed path, do not change
          env:
            - name: PROVISIONER_NAME
              value: harbor-storage-class # provisioner name, referenced by the StorageClass
            - name: NFS_SERVER
              value: x.x.x.x # address of the NFS server
            - name: NFS_PATH
              value: /nfs/harbor # exported NFS directory to use
      volumes:
        - name: nfs-client-harbor
          nfs:
            server: x.x.x.x # must match NFS_SERVER above
            path: /nfs/harbor # must match NFS_PATH above
```

#RBAC for the provisioner's service account

cat rbac.yaml

```
apiVersion: v1
kind: ServiceAccount
metadata:
  name: harbor-nfs-client-provisioner
  namespace: harbor
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: harbor-nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-harbor-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: harbor-nfs-client-provisioner
    namespace: harbor
roleRef:
  kind: ClusterRole
  name: harbor-nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-harbor-nfs-client-provisioner
  namespace: harbor
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-harbor-nfs-client-provisioner
  namespace: harbor
subjects:
  - kind: ServiceAccount
    name: harbor-nfs-client-provisioner
    namespace: harbor
roleRef:
  kind: Role
  name: leader-locking-harbor-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

#StorageClass manifest

cat sc.yaml

```
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: harbor-sc
  namespace: harbor # StorageClass is cluster-scoped, so this field can be omitted
provisioner: harbor-storage-class # must match PROVISIONER_NAME in provisioner.yaml
parameters:
  archiveOnDelete: "true" # whether to archive the PV's contents when the PV is deleted
allowVolumeExpansion: true
```

#Create the namespace

kubectl create ns harbor

#Create the provisioner, RBAC, and StorageClass

kubectl create -f provisioner.yaml -f rbac.yaml -f sc.yaml

#Check the StorageClass

[root@master01 harbor]# kubectl get sc | grep harbor

harbor-sc harbor-storage-class Delete Immediate true 1h
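Seeing the StorageClass in the list does not prove that dynamic provisioning works end to end. Here is a minimal sketch of a smoke test, assuming the `harbor-sc` class and `harbor` namespace from above. The claim name `harbor-sc-test` and the output file name are hypothetical, and the script only writes the manifest and prints the kubectl commands to run by hand.

```shell
#!/bin/sh
# Sketch: generate a throwaway PVC that requests storage from harbor-sc.
set -eu

PVC_FILE="${TMPDIR:-/tmp}/harbor-sc-test-pvc.yaml"  # hypothetical file name

cat > "$PVC_FILE" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: harbor-sc-test          # hypothetical name, delete it after the check
  namespace: harbor
spec:
  storageClassName: harbor-sc   # the StorageClass defined in sc.yaml
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF

echo "wrote $PVC_FILE"
# Commands to run by hand against the cluster:
echo "kubectl apply -f $PVC_FILE"
echo "kubectl get pvc -n harbor harbor-sc-test"
echo "kubectl delete -f $PVC_FILE"
```

If the provisioner is healthy, the claim should move to Bound shortly after it is applied, and a matching directory should appear under the NFS export.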

[root@master01 harbor]# kubectl get pod -n harbor

NAME READY STATUS RESTARTS AGE

harbor-nfs-client-provisioner-59cc7977c6-62vjm 1/1 Running 0 1h

2. Download, modify, and install Harbor

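Of course, if you would rather not use a StorageClass, you can create your own PV + PVC to handle persistence.

In harbor/values.yaml, change existingClaim: "" to the name of the PVC you created.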
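For the PV + PVC route, here is a minimal sketch of a statically bound pair for the registry data. Everything specific in it is an assumption for illustration: the names `harbor-registry-pv` and `harbor-registry-pvc`, the 5Gi size, and the `/nfs/harbor/registry` sub-directory (which would have to exist on the NFS server). The script only writes the manifest and prints the apply command.

```shell
#!/bin/sh
# Sketch: write a static NFS PersistentVolume and a PVC pinned to it.
set -eu

PV_FILE="${TMPDIR:-/tmp}/harbor-registry-pv.yaml"  # hypothetical file name

cat > "$PV_FILE" <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: harbor-registry-pv          # hypothetical name
spec:
  capacity:
    storage: 5Gi                    # assumed size, adjust to your needs
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""              # empty: no dynamic provisioning
  nfs:
    server: x.x.x.x                 # your NFS server address
    path: /nfs/harbor/registry      # hypothetical sub-directory of the export
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: harbor-registry-pvc         # put this name into existingClaim
  namespace: harbor
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""              # must match the PV above
  volumeName: harbor-registry-pv    # bind to exactly this PV
  resources:
    requests:
      storage: 5Gi
EOF

echo "wrote $PV_FILE"
echo "kubectl apply -f $PV_FILE"
```

Setting `storageClassName: ""` on both objects together with `volumeName` keeps the claim from being picked up by any dynamic provisioner. Each Harbor component that persists data would need its own pair like this.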

#Add the repo

helm repo add harbor https://helm.goharbor.io

#Download and unpack the chart

helm pull harbor/harbor --version 1.14.2

tar -xzf harbor-1.14.2.tgz

#Edit the defaults (the leading numbers below are line numbers in values.yaml)

vim harbor/values.yaml

```
29 ingress:
30 hosts:
31 core: webharbor.xxx.com # external access
106 externalURL: http://harbor.xxx.com # internal access
110 internalTLS:
112 enabled: false
178 persistence:
193 storageClass: "harbor-sc"
201 storageClass: "harbor-sc"
210 storageClass: "harbor-sc"
219 storageClass: "harbor-sc"
226 storageClass: "harbor-sc"
346 existingSecretAdminPasswordKey: HARBOR_ADMIN_PASSWORD
347 harborAdminPassword: "123123"
752 database:
765 password: "456456"
```
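If you prefer not to edit the chart's values.yaml in place, the same changes can live in a small override file passed with `helm install -f`. Here is a rough sketch. The nested key paths are my reading of the chart layout for version 1.14.2 and are an assumption, so check them against `helm show values harbor/harbor --version 1.14.2` before relying on them. The file name is hypothetical, and the script only writes the file and prints the install command.

```shell
#!/bin/sh
# Sketch: keep the Harbor overrides in a separate values file.
set -eu

OVERRIDES="${TMPDIR:-/tmp}/harbor-overrides.yaml"  # hypothetical file name

# Key paths below are assumed from the 1.14.2 chart layout; verify them first.
cat > "$OVERRIDES" <<'EOF'
expose:
  ingress:
    hosts:
      core: webharbor.xxx.com          # external access
externalURL: http://harbor.xxx.com     # internal access
internalTLS:
  enabled: false
persistence:
  persistentVolumeClaim:
    registry:
      storageClass: harbor-sc
    jobservice:
      jobLog:
        storageClass: harbor-sc
    database:
      storageClass: harbor-sc
    redis:
      storageClass: harbor-sc
    trivy:
      storageClass: harbor-sc
harborAdminPassword: "123123"
database:
  internal:
    password: "456456"
EOF

echo "wrote $OVERRIDES"
echo "helm install -n harbor harbor harbor/harbor --version 1.14.2 -f $OVERRIDES"
```

The upside of this layout is that a later chart upgrade only needs the override file, not a re-edit of the unpacked values.yaml.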

#Install and run

helm install -n harbor harbor harbor/

#Check the pods, services, and ingress

[root@master01 harbor]# kubectl get pod,svc,ingress -n harbor

NAME READY STATUS RESTARTS AGE

pod/harbor-core-54576d4c5b-6jxbk 1/1 Running 0 1h

pod/harbor-database-0 1/1 Running 0 1h

pod/harbor-jobservice-5b6687c4d6-tn7k7 1/1 Running 0 1h

pod/harbor-nfs-client-provisioner-59cc7977c6-62vjm 1/1 Running 0 1h

pod/harbor-portal-66b88c5b74-62ptd 1/1 Running 0 1h

pod/harbor-redis-0 1/1 Running 0 1h

pod/harbor-registry-77dc686f45-8slpq 2/2 Running 0 1h

pod/harbor-trivy-0 1/1 Running 0 1h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/harbor-core ClusterIP 10.101.189.9 <none> 80/TCP 1h

service/harbor-database ClusterIP 10.102.15.48 <none> 5432/TCP 1h

service/harbor-jobservice ClusterIP 10.106.137.127 <none> 80/TCP 1h

service/harbor-portal NodePort 10.98.119.164 <none> 80:31001/TCP 1h

service/harbor-redis ClusterIP 10.101.222.243 <none> 6379/TCP 1h

service/harbor-registry ClusterIP 10.96.79.152 <none> 5000/TCP,8080/TCP 1h

service/harbor-trivy ClusterIP 10.98.2.75 <none> 8080/TCP 1h

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/harbor-ingress <none> webharbor.xxx.com,harbor.xxx.com 172.28.42.150 80 1h

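webharbor.xxx.com and harbor.xxx.com are the external and internal domain names respectively (which is which depends on your own DNS mapping). Pulling through the internal name is faster and keeps traffic off the public network. The ADDRESS is the IP of the internal LB, with an nginx in front of it doing the forwarding.

If you find that your ingress has only one host, run kubectl edit ingress -n harbor harbor-ingress, copy the webharbor rule, and change the domain name in the copy.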

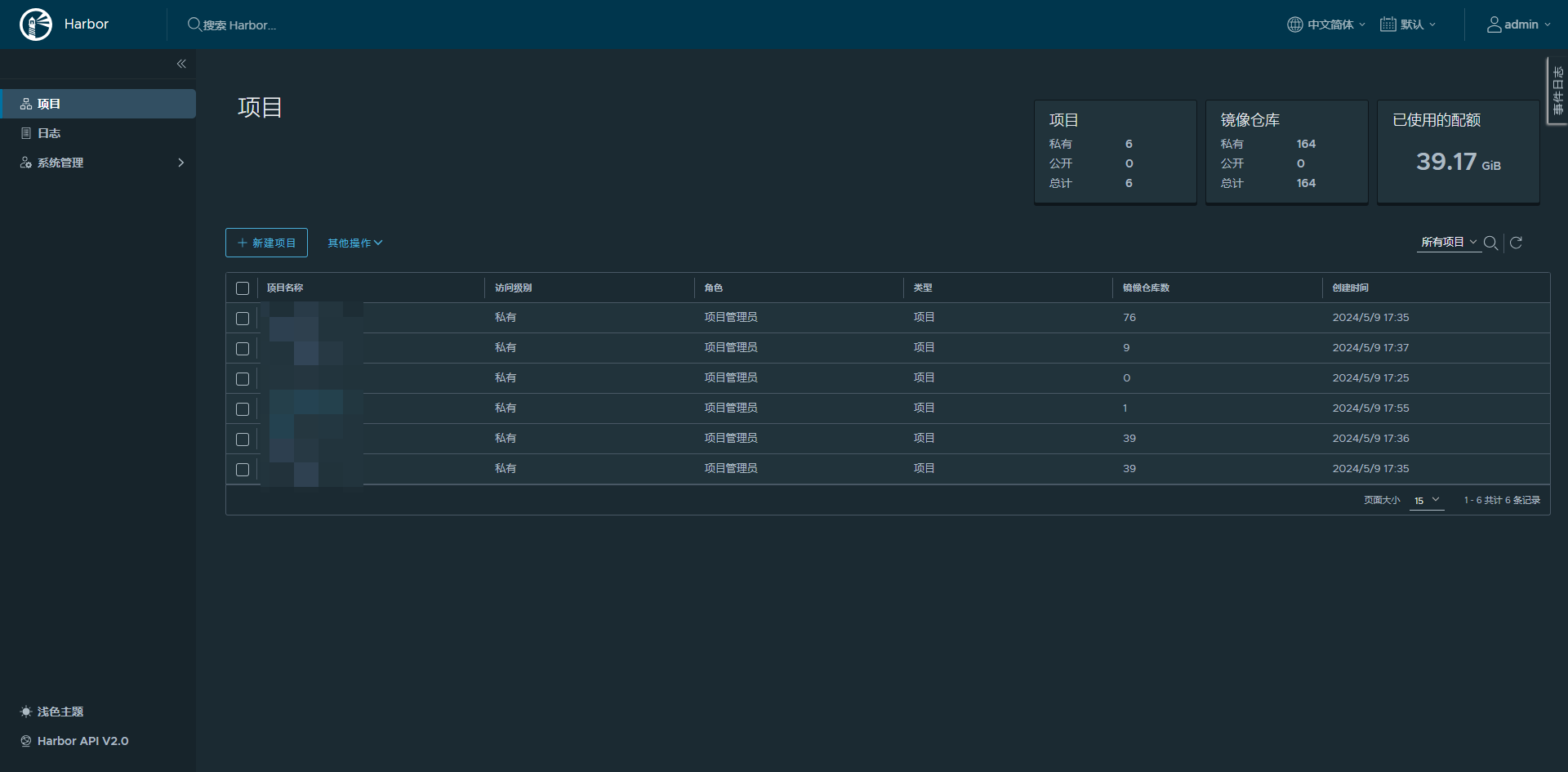

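3. Open the Harbor dashboard at http://webharbor.xxx.com

Log in with the account admin and the password you set in values.yaml (123123).

I also recommend tightening security by adding an IP whitelist to the ingress for the address you access it from.

#Add the whitelist

kubectl edit ingress -n harbor harbor-ingress

```
metadata:
  annotations:
    nginx.ingress.kubernetes.io/whitelist-source-range: 103.220.11.120/32
```

4. Connect docker and containerd to Harbor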

docker

#Create the configuration file

[root@master01 harbor]# cat /etc/docker/daemon.json

```
{
  "insecure-registries": ["harbor.xxx.com"]
}
```

#Apply the change

systemctl daemon-reload

systemctl restart docker

#Log in

docker login harbor.xxx.com

#Then enter your Harbor username and password

#"Login Succeeded" means it worked

containerd

#If /etc/containerd/config.toml does not exist yet, generate it

containerd config default | tee /etc/containerd/config.toml

#Edit config.toml

```
# Original registry section, commented out:
# [plugins."io.containerd.grpc.v1.cri".registry]
#   config_path = ""
#
#   [plugins."io.containerd.grpc.v1.cri".registry.auths]
#
#   [plugins."io.containerd.grpc.v1.cri".registry.configs]
#
#   [plugins."io.containerd.grpc.v1.cri".registry.headers]
#
#   [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
#
# [plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]
#   tls_cert_file = ""
#   tls_key_file = ""

# Replacement registry section pointing at Harbor:
[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.xxx.com"]
      endpoint = ["http://harbor.xxx.com"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."harbor.xxx.com".auth]
      username = "admin"
      password = "123123"
```

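#Restart containerd

systemctl restart containerd.service

#Pull an image stored in Harbor; if it succeeds, everything is working

crictl pull harbor.xxx.com/images/nginx:latest

If you need to migrate Harbor, see my other article "Harbor迁移/同步镜像replications复制管理" (Harbor migration and image sync with replication management) on the CSDN blog.

That's it, all done. Deploying Harbor on K8S has clear advantages: it is easier to manage, and containerizing every workload on k8s is the key to freeing operations from redundant management work.

It is also the key to slacking off with peace of mind.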
