Assuming the Ceph cluster has already been created:

1. Create the CephFS data pool

ceph osd pool create fs_kube_data 16 16

2. Create the CephFS metadata pool

ceph osd pool create fs_kube_metadata 16 16

3. Create the CephFS file system

ceph fs new cephfs01 fs_kube_metadata fs_kube_data

4. Set the maximum number of active MDS daemons

ceph fs set cephfs01 max_mds 2

5. Create a subvolume group (very important: with the Reef release, CephFS needs this extra step)

ceph fs subvolumegroup create cephfs01 myfsg
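Steps 1 to 5 can be collected into one reusable shell function. This is a minimal sketch, not the author's script: `run`, `setup_cephfs` and the environment variables `FS_NAME`, `DATA_POOL`, `META_POOL`, `PG_NUM`, `SVG` and `DRY_RUN` are hypothetical names introduced here, and the defaults are the values used in this walkthrough. With `DRY_RUN=1` it only prints the commands, so they can be reviewed before touching a cluster.

```shell
# run: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# setup_cephfs: steps 1-5 above. Defaults are this walkthrough's names;
# override them through the (hypothetical) environment variables.
setup_cephfs() {
  local fs=${FS_NAME:-cephfs01}
  local data=${DATA_POOL:-fs_kube_data}
  local meta=${META_POOL:-fs_kube_metadata}
  local pg=${PG_NUM:-16}
  local svg=${SVG:-myfsg}

  run ceph osd pool create "$data" "$pg" "$pg" || return
  run ceph osd pool create "$meta" "$pg" "$pg" || return
  # `ceph fs new` takes the metadata pool first, then the data pool.
  run ceph fs new "$fs" "$meta" "$data" || return
  run ceph fs set "$fs" max_mds 2 || return
  # The subvolume group that the CSI config refers to later.
  run ceph fs subvolumegroup create "$fs" "$svg" || return
  # Quick checks: list file systems and the subvolume groups of this one.
  run ceph fs ls || return
  run ceph fs subvolumegroup ls "$fs"
}
```

Run `DRY_RUN=1 setup_cephfs` to preview the commands, then `setup_cephfs` on a node that holds the admin keyring. Two active MDS ranks (`max_mds 2`) also require at least two running MDS daemons.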

Create the user that Kubernetes uses to authenticate to CephFS

# ceph auth get-or-create client.cephfs01 mon 'allow r' mds 'allow rw' osd 'allow rw pool=fs_kube_data, allow rw pool=fs_kube_metadata'

# ceph auth get client.cephfs01
[client.cephfs01]
	key = AQAHRD9mmCOLCBAAb+gJ3WBM/KU/FbZEofGOJg==
	caps mds = "allow rw"
	caps mon = "allow r"
	caps osd = "allow rw pool=fs_kube_data, allow rw pool=fs_kube_metadata"

# In this version the keyring file has to be created manually

# ceph auth get client.cephfs01 > /etc/ceph/ceph.client.cephfs01.keyring
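The pool names in the osd caps must match the pools the file system actually uses. One way to avoid typos is to derive them from `ceph fs ls`, whose output lines look like `name: cephfs01, metadata pool: fs_kube_metadata, data pools: [fs_kube_data ]`. The sketch below assumes exactly that line format; `osd_caps_for_fs` is a hypothetical helper. It only keeps the pool names consistent: the ceph-csi documentation lists the capabilities it expects for CephFS users, so check those for your ceph-csi version.

```shell
# osd_caps_for_fs NAME: read `ceph fs ls` output on stdin and print an osd
# caps string granting rw on the data pool(s) and the metadata pool of NAME.
osd_caps_for_fs() {
  awk -v want="$1" '
    index($0, "name: " want ",") == 1 {
      # metadata pool: text between "metadata pool: " and the next comma
      meta = $0
      sub(/.*metadata pool: /, "", meta)
      sub(/,.*/, "", meta)
      # data pools: space-separated list inside the square brackets
      data = $0
      sub(/.*data pools: \[/, "", data)
      sub(/].*/, "", data)
      n = split(data, pools, " ")
      caps = ""
      for (i = 1; i <= n; i++) caps = caps "allow rw pool=" pools[i] ", "
      print caps "allow rw pool=" meta
    }'
}

# Usage on an admin node (ceph auth caps replaces all caps of the user):
#   caps=$(ceph fs ls | osd_caps_for_fs cephfs01)
#   ceph auth caps client.cephfs01 mon 'allow r' mds 'allow rw' osd "$caps"
```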

Test mounting CephFS locally and create a directory

# mount.ceph ceph-163:6789:/ /mnt -o name=cephfs01,secret=AQAHRD9mmCOLCBAAb+gJ3WBM/KU/FbZEofGOJg==

# Mounted successfully

# df -h | grep mnt

127.0.1.1:6789,192.168.0.163:6789:/ 222G 0 222G 0% /mnt
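Passing `secret=` on the command line leaves the key in the shell history and in the process list. mount.ceph also accepts `secretfile=`, pointing at a file that holds only the key. A minimal sketch, assuming the keyring written above contains the usual `key = ...` line; `keyring_key` and `/etc/ceph/cephfs01.secret` are hypothetical names. With admin access, `ceph auth get-key client.cephfs01` prints the same key directly.

```shell
# keyring_key FILE: print the value of the first "key = ..." line in a
# Ceph keyring file (leading spaces or tabs before "key" are allowed).
keyring_key() {
  awk -F' = ' '$1 ~ /^[ \t]*key$/ { print $2; exit }' "$1"
}

# Usage (paths are assumptions):
#   keyring_key /etc/ceph/ceph.client.cephfs01.keyring > /etc/ceph/cephfs01.secret
#   chmod 600 /etc/ceph/cephfs01.secret
#   mount -t ceph ceph-163:6789:/ /mnt -o name=cephfs01,secretfile=/etc/ceph/cephfs01.secret
```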

Next, write your external config (a ConfigMap). If you don't want to use an external config, skip this step.

cat <<EOF > config.yaml
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "588abbf6-0f74-11ef-ba10-bc2411f077b2",
        "monitors": [
          "192.168.0.163:6789",
          "192.168.0.164:6789",
          "192.168.0.165:6789"
        ],
        "cephFS": {
          "subvolumeGroup": "myfsg"
        }
      }
    ]
metadata:
  name: ceph-csi-config
EOF
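Here the clusterID appears to be the Ceph cluster fsid (a common convention; `ceph fsid` prints it), and the monitors are the addresses listed by `ceph mon dump`. Instead of hand-editing the JSON, the same structure can be generated. A sketch with a hypothetical helper `csi_config_json`; the namespace in the usage comment is assumed to be the Helm release namespace and must already exist.

```shell
# csi_config_json CLUSTER_ID SUBVOLUME_GROUP MON...
# Print a one-cluster ceph-csi config.json array (hypothetical helper).
csi_config_json() {
  local cluster_id=$1 svg=$2
  shift 2
  local mons="" m
  # Join the monitor addresses as a comma-separated list of JSON strings.
  for m in "$@"; do
    mons="${mons:+$mons, }\"$m\""
  done
  printf '[{"clusterID": "%s", "monitors": [%s], "cephFS": {"subvolumeGroup": "%s"}}]\n' \
    "$cluster_id" "$mons" "$svg"
}

# Usage:
#   csi_config_json "$(ceph fsid)" myfsg 192.168.0.163:6789 192.168.0.164:6789 192.168.0.165:6789 > config.json
#   kubectl -n ceph-csi-cephfs create configmap ceph-csi-config --from-file=config.json
```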

This time we install with Helm.

Read through the whole values.yaml carefully before installing.

# egrep -v "^#|^$" values.yaml
---
rbac:
  # Specifies whether RBAC resources should be created
  create: true
serviceAccounts:
  nodeplugin:
    # Specifies whether a ServiceAccount should be created
    create: true
    # The name of the ServiceAccount to use.
    # If not set and create is true, a name is generated using the fullname
    name:
  provisioner:
    # Specifies whether a ServiceAccount should be created
    create: true
    # The name of the ServiceAccount to use.
    # If not set and create is true, a name is generated using the fullname
    name:
csiConfig:
  - clusterID: "588abbf6-0f74-11ef-ba10-bc2411f077b2"
    monitors:
      - "192.168.0.163:6789"
      - "192.168.0.164:6789"
      - "192.168.0.165:6789"
    cephFS:
      subvolumeGroup: "myfsg"
      #netNamespaceFilePath: "{{ .kubeletDir }}/plugins/{{ .driverName }}/net"
commonLabels: {}
logLevel: 5
sidecarLogLevel: 1
CSIDriver:
  fsGroupPolicy: "File"
  seLinuxMount: false
nodeplugin:
  name: nodeplugin
  # if you are using ceph-fuse client set this value to OnDelete
  updateStrategy: RollingUpdate
  # set user created priorityclassName for csi plugin pods. default is
  # system-node-critical which is highest priority
  priorityClassName: system-node-critical
  httpMetrics:
    # Metrics only available for cephcsi/cephcsi => 1.2.0
    # Specifies whether http metrics should be exposed
    enabled: true
    # The port of the container to expose the metrics
    containerPort: 8081
    service:
      # Specifies whether a service should be created for the metrics
      enabled: true
      # The port to use for the service
      servicePort: 8080
      type: ClusterIP
      # Annotations for the service
      # Example:
      # annotations:
      #   prometheus.io/scrape: "true"
      #   prometheus.io/port: "9080"
      annotations: {}
      clusterIP: ""
      ## List of IP addresses at which the stats-exporter service is available
      ## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips
      ##
      externalIPs: []
      loadBalancerIP: ""
      loadBalancerSourceRanges: []
  ## Reference to one or more secrets to be used when pulling images
  ##
  imagePullSecrets: []
  # - name: "image-pull-secret"
  profiling:
    enabled: false
  registrar:
    image:
      repository: registry.cn-shenzhen.aliyuncs.com/neway-sz/uat
      tag: registrar-v2.10.1
      pullPolicy: IfNotPresent
    resources: {}
  plugin:
    image:
      repository: quay.io/cephcsi/cephcsi
      #tag: v3.11-canary
      tag: canary
      pullPolicy: IfNotPresent
    resources: {}
  nodeSelector: {}
  tolerations: []
  affinity: {}
  # Set to true to enable Ceph Kernel clients
  # on kernel < 4.17 which support quotas
  # forcecephkernelclient: true
  # common mount options to apply all mounting
  # example: kernelmountoptions: "recover_session=clean"
  kernelmountoptions: ""
  fusemountoptions: ""
provisioner:
  name: provisioner
  replicaCount: 1
  strategy:
    # RollingUpdate strategy replaces old pods with new ones gradually,
    # without incurring downtime.
    type: RollingUpdate
    rollingUpdate:
      # maxUnavailable is the maximum number of pods that can be
      # unavailable during the update process.
      maxUnavailable: 50%
  # Timeout for waiting for creation or deletion of a volume
  timeout: 60s
  # cluster name to set on the subvolume
  # clustername: "k8s-cluster-1"
  # set user created priorityclassName for csi provisioner pods. default is
  # system-cluster-critical which is less priority than system-node-critical
  priorityClassName: system-cluster-critical
  # enable hostnetwork for provisioner pod. default is false
  # useful for deployments where the podNetwork has no access to ceph
  enableHostNetwork: false
  httpMetrics:
    # Metrics only available for cephcsi/cephcsi => 1.2.0
    # Specifies whether http metrics should be exposed
    enabled: true
    # The port of the container to expose the metrics
    containerPort: 8081
    service:
      # Specifies whether a service should be created for the metrics
      enabled: true
      # The port to use for the service
      servicePort: 8080
      type: ClusterIP
      # Annotations for the service
      # Example:
      # annotations:
      #   prometheus.io/scrape: "true"
      #   prometheus.io/port: "9080"
      annotations: {}
      clusterIP: ""
      ## List of IP addresses at which the stats-exporter service is available
      ## Ref: https://kubernetes.io/docs/user-guide/services/#external-ips
      ##
      externalIPs: []
      loadBalancerIP: ""
      loadBalancerSourceRanges: []
  ## Reference to one or more secrets to be used when pulling images
  ##
  imagePullSecrets: []
  # - name: "image-pull-secret"
  profiling:
    enabled: false
  provisioner:
    image:
      repository: registry.cn-shenzhen.aliyuncs.com/neway-sz/uat
      tag: provisioner-v4.0.1
      pullPolicy: IfNotPresent
    resources: {}
    ## For further options, check
    ## https://github.com/kubernetes-csi/external-provisioner#command-line-options
    extraArgs: []
  # set metadata on volume
  setmetadata: true
  resizer:
    name: resizer
    enabled: true
    image:
      repository: registry.cn-shenzhen.aliyuncs.com/neway-sz/uat
      tag: resizer-v1.10.1
      pullPolicy: IfNotPresent
    resources: {}
    ## For further options, check
    ## https://github.com/kubernetes-csi/external-resizer#recommended-optional-arguments
    extraArgs: []
  snapshotter:
    image:
      repository: registry.cn-shenzhen.aliyuncs.com/neway-sz/uat
      tag: snapshotter-v7.0.2
      pullPolicy: IfNotPresent
    resources: {}
    ## For further options, check
    ## https://github.com/kubernetes-csi/external-snapshotter#csi-external-snapshotter-sidecar-command-line-options
    extraArgs: []
    args:
      # enableVolumeGroupSnapshots enables support for volume group snapshots
      enableVolumeGroupSnapshots: false
  nodeSelector: {}
  tolerations: []
  affinity: {}
selinuxMount: false
storageClass:
  # Specifies whether the Storage class should be created
  create: true
  name: csi-cephfs-sc
  # Annotations for the storage class
  # Example:
  # annotations:
  #   storageclass.kubernetes.io/is-default-class: "true"
  annotations: {}
  # String representing a Ceph cluster to provision storage from.
  # Should be unique across all Ceph clusters in use for provisioning,
  # cannot be greater than 36 bytes in length, and should remain immutable for
  # the lifetime of the StorageClass in use.
  clusterID: 588abbf6-0f74-11ef-ba10-bc2411f077b2
  # (required) CephFS filesystem name into which the volume shall be created
  # eg: fsName: myfs
  fsName: cephfs01
  # (optional) Ceph pool into which volume data shall be stored
  # pool: <cephfs-data-pool>
  # For eg:
  # pool: "replicapool"
  #pool: "fs_kube_data"
  # (optional) Comma separated string of Ceph-fuse mount options.
  # For eg:
  # fuseMountOptions: debug
  fuseMountOptions: ""
  # (optional) Comma separated string of Cephfs kernel mount options.
  # Check man mount.ceph for mount options. For eg:
  # kernelMountOptions: readdir_max_bytes=1048576,norbytes
  kernelMountOptions: ""
  # (optional) The driver can use either ceph-fuse (fuse) or
  # ceph kernelclient (kernel).
  # If omitted, default volume mounter will be used - this is
  # determined by probing for ceph-fuse and mount.ceph
  # mounter: kernel
  mounter: ""
  # (optional) Prefix to use for naming subvolumes.
  # If omitted, defaults to "csi-vol-".
  # volumeNamePrefix: "foo-bar-"
  volumeNamePrefix: ""
  # The secrets have to contain user and/or Ceph admin credentials.
  provisionerSecret: csi-cephfs-secret
  # If the Namespaces are not specified, the secrets are assumed to
  # be in the Release namespace.
  provisionerSecretNamespace: ""
  controllerExpandSecret: csi-cephfs-secret
  controllerExpandSecretNamespace: ""
  nodeStageSecret: csi-cephfs-secret
  nodeStageSecretNamespace: ""
  reclaimPolicy: Delete
  allowVolumeExpansion: true
  mountOptions:
    # Mount Options
    # Example:
    #mountOptions:
    - discard
secret:
  # Specifies whether the secret should be created
  create: true
  name: csi-cephfs-secret
  annotations: {}
  # Key values correspond to a user name and its key, as defined in the
  # ceph cluster. User ID should have required access to the 'pool'
  # specified in the storage class
  userID: cephfs01
  userKey: AQAHRD9mmCOLCBAAb+gJ3WBM/KU/FbZEofGOJg==
  adminID: admin
  adminKey: AQASMz9mgVCqNxAABEAu/WYy0gaEcTC5zC60Ug==
cephconf: |
  [global]
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
    # ceph-fuse which uses libfuse2 by default has write buffer size of 2KiB
    # adding 'fuse_big_writes = true' option by default to override this limit
    # see https://github.com/ceph/ceph-csi/issues/1928
    #fuse_big_writes = true
extraDeploy: []
provisionerSocketFile: csi-provisioner.sock
pluginSocketFile: csi.sock
kubeletDir: /var/lib/kubelet
driverName: cephfs.csi.ceph.com
configMapName: ceph-csi-config
externallyManagedConfigmap: false  # change this to true if you use the external config (config.yaml) above
cephConfConfigMapName: ceph-config

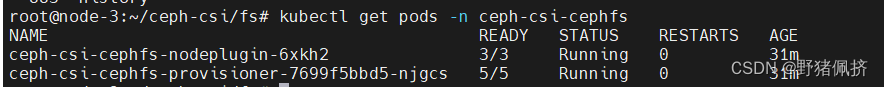
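Helm merges a `-f` file over the chart's default values, so an override file only needs the keys you change. A sketch of a smaller file holding a subset of the settings above; `values-min.yaml` is a hypothetical name and the `<...>` entries are placeholders. Keys left out fall back to the chart defaults, which may not match the listing above (compare with `helm show values ceph-csi-cephfs-3.11.0.tgz`); in particular the mirrored image repositories are omitted here, so add them back if your nodes cannot reach the upstream registries.

```shell
# Write a reduced override file (hypothetical name; placeholders in <...>).
cat <<'EOF' > values-min.yaml
csiConfig:
  - clusterID: "588abbf6-0f74-11ef-ba10-bc2411f077b2"
    monitors:
      - "192.168.0.163:6789"
      - "192.168.0.164:6789"
      - "192.168.0.165:6789"
    cephFS:
      subvolumeGroup: "myfsg"
provisioner:
  replicaCount: 1
storageClass:
  create: true
  name: csi-cephfs-sc
  clusterID: "588abbf6-0f74-11ef-ba10-bc2411f077b2"
  fsName: cephfs01
secret:
  create: true
  name: csi-cephfs-secret
  userID: cephfs01
  userKey: "<cephfs01 key>"
  adminID: admin
  adminKey: "<admin key>"
EOF
# Then: helm install ... -f values-min.yaml
```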

Finally, deploy your CSI driver.

Download the Helm chart package:

Link (shared file): ceph-csi-cephfs-3.11.0.tgz

helm install -n ceph-csi-cephfs --create-namespace ceph-csi-cephfs ceph-csi-cephfs-3.11.0.tgz -f values.yaml
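After the install, check that the provisioner and nodeplugin pods are up. `kubectl -n ceph-csi-cephfs wait --for=condition=Ready pod --all --timeout=120s` does this directly; the hypothetical `all_pods_ready` below shows the same check on plain `kubectl get pods --no-headers` output, assuming the default column order NAME READY STATUS RESTARTS AGE.

```shell
# all_pods_ready: read `kubectl get pods --no-headers` on stdin; succeed only
# if at least one pod is listed and every pod is Running with READY n/n.
all_pods_ready() {
  awk '
    {
      seen++
      split($2, r, "/")              # READY column, e.g. "3/3"
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { exit (seen == 0 || bad > 0) }'
}

# Usage:
#   kubectl -n ceph-csi-cephfs get pods --no-headers | all_pods_ready && echo "ceph-csi pods ready"
#   kubectl get csidriver cephfs.csi.ceph.com
#   kubectl get storageclass csi-cephfs-sc
```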

Write a demo PVC

cat <<EOF > pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-cephfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-cephfs-sc
EOF

No further explanation needed.

# kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS    AGE
csi-cephfs-pvc   Bound    pvc-e29b9393-9473-4c59-b981-0e24d5835018   1Gi        RWX            csi-cephfs-sc   31m
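A Bound PVC only shows that provisioning worked; mounting it in a Pod also exercises the node plugin and the user's caps. A minimal sketch; the Pod name `csi-cephfs-demo-pod`, the nginx image and the mount path `/var/lib/www` are assumptions, not taken from this article.

```shell
# Write a demo Pod that mounts csi-cephfs-pvc (names and image are assumptions).
cat <<'EOF' > pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: csi-cephfs-demo-pod
spec:
  containers:
    - name: web-server
      image: docker.io/library/nginx:latest
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: csi-cephfs-pvc
        readOnly: false
EOF
# kubectl apply -f pod.yaml
# kubectl exec csi-cephfs-demo-pod -- sh -c 'echo hello > /var/lib/www/test && cat /var/lib/www/test'
# kubectl exec csi-cephfs-demo-pod -- df -h /var/lib/www
```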
